过年没空去开会?这一份77页AAAI2019会议笔记干货了解一下

【导读】人工智能领域的国际顶级会议 AAAI 2019 于 1 月 27 日至 2 月 1 日在美国夏威夷举行。 AAAI会议日程之紧凑,会议内容之丰富,令人目不暇接。如果你回家过年,或者因为奔波在各个会场而漏听了感兴趣的报告,这里有一份来自布朗大学David Abel博士的 77页的AAAI 2019的会议笔记,了解一下。

作者 | David Abel

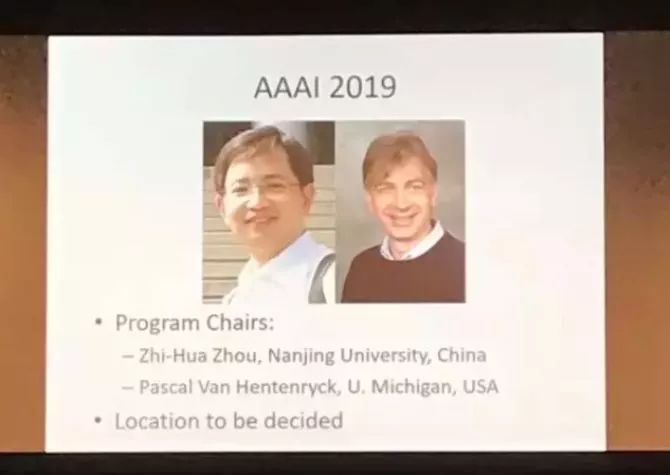

今年AAAI 2019的两位程序主席之一是南京大学的周志华教授(下图左),周志华教授也是AAAI自1980年创办以来,首位担任大会程序主席的非欧美学者。

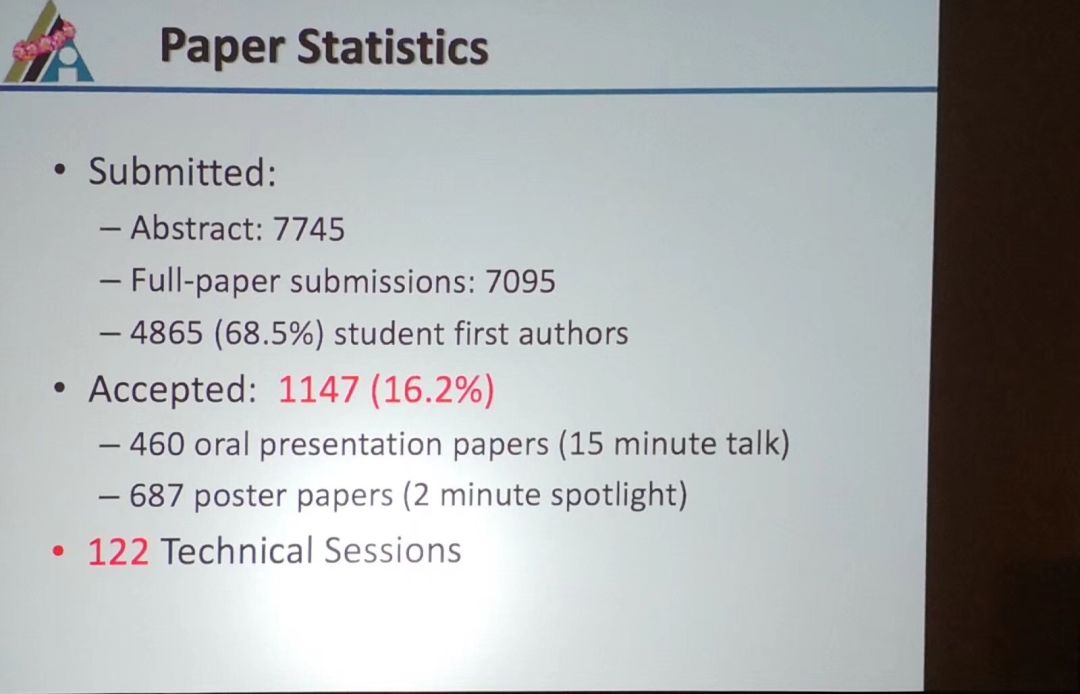

作为AI领域最负盛名的会议之一,本次会议共收到7095篇论文。其中,1147篇被接收,接收率为16.2%。

虽然大会持续6天,但是会议日程之紧凑,会议内容之丰富,令人目不暇接。 特别的,并行开始的许多会议,让不少参会者奔波于各个会场之间,很容易漏听掉某些精彩内容。另外,除了Tutorial, Keynote, Oral Paper, Best Paper,还有不少workshop在探讨目前机器学习领域的热门话题和及其在工业界的应用,真是一场学术盛会。

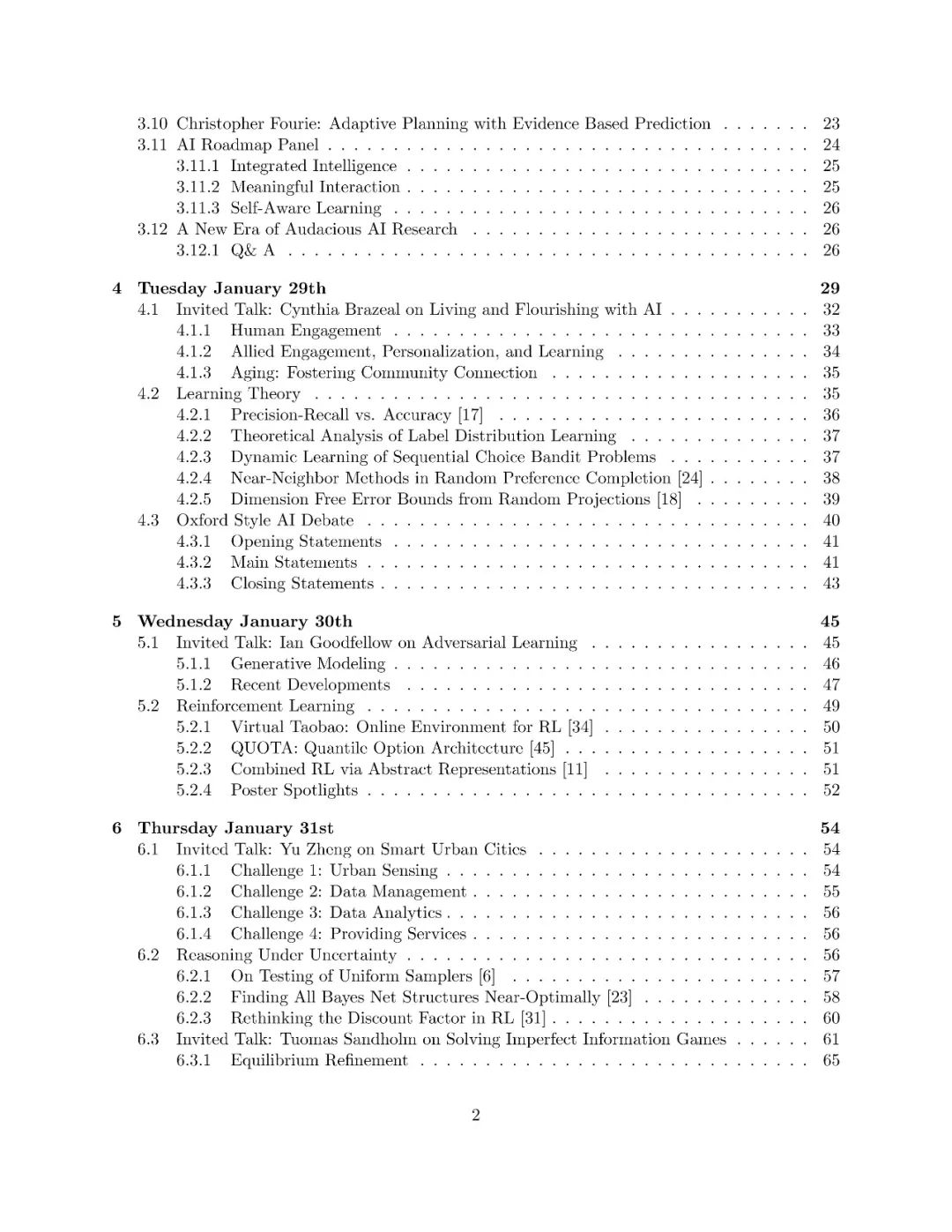

今天,美国布朗大学的一位同学 David Abel,公开分享了他在这次大会上整理的长达77页的会议笔记。这份笔记内容详实,包含了不少来自现场拍摄的PPT图片,还根据参会日期和会场做了详细的目录。 今年重点关注的内容包括机器学习、视觉和NLP、博弈论、启发式搜索和认知系统。

David Abel 是美国布朗大学计算机科学专业的在读博士生,师从Michael Littman,研究重点是抽象概念及其在智能中的应用。同时还是牛津大学Future of Humanity Institute 的一名实习生。

个人主页 https://david-abel.github.io/

【AAAI 2019会议笔记下载】

请关注专知公众号(扫一扫最下面专知二维码,或者点击上方蓝色专知),

后台回复“AAAI2019” 就可以获取 AAAI 2019 Notes by David Abel 下载链接

专知2019年《深度学习:算法到实战》精品课程,欢迎扫码报名学习!

附笔记原文

-END-

专 · 知

专知《深度学习:算法到实战》课程全部完成!470+位同学在学习,现在报名,限时优惠!网易云课堂人工智能畅销榜首位!

请加专知小助手微信(扫一扫如下二维码添加),咨询《深度学习:算法到实战》参团限时优惠报名~

欢迎微信扫一扫加入专知人工智能知识星球群,获取专业知识教程视频资料和与专家交流咨询!

请PC登录www.zhuanzhi.ai或者点击阅读原文,注册登录专知,获取更多AI知识资料!

点击“阅读原文”,了解报名专知《深度学习:算法到实战》课程