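[Paper Recommendations] Eight Recent Papers on Object Tracking: Adaptive Correlation Filters, Causal And-Or Graph Models, TrackingNet, ClickBAIT, Image Moment Models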

[Overview] The Zhuanzhi content team has compiled eight recent papers on object tracking and introduces them below.

1. Adaptive Correlation Filters with Long-Term and Short-Term Memory for Object Tracking

Authors: Chao Ma, Jia-Bin Huang, Xiaokang Yang, Ming-Hsuan Yang

Affiliations: National Tsing Hua University, National Chiao Tung University

Abstract: Object tracking is challenging as target objects often undergo drastic appearance changes over time. Recently, adaptive correlation filters have been successfully applied to object tracking. However, tracking algorithms relying on highly adaptive correlation filters are prone to drift due to noisy updates. Moreover, as these algorithms do not maintain long-term memory of target appearance, they cannot recover from tracking failures caused by heavy occlusion or target disappearance in the camera view. In this paper, we propose to learn multiple adaptive correlation filters with both long-term and short-term memory of target appearance for robust object tracking. First, we learn a kernelized correlation filter with an aggressive learning rate for locating target objects precisely. We take into account the appropriate size of surrounding context and the feature representations. Second, we learn a correlation filter over a feature pyramid centered at the estimated target position for predicting scale changes. Third, we learn a complementary correlation filter with a conservative learning rate to maintain long-term memory of target appearance. We use the output responses of this long-term filter to determine if tracking failure occurs. In the case of tracking failures, we apply an incrementally learned detector to recover the target position in a sliding window fashion. Extensive experimental results on large-scale benchmark datasets demonstrate that the proposed algorithm performs favorably against the state-of-the-art methods in terms of efficiency, accuracy, and robustness.

Source: arXiv, March 23, 2018

URL:

http://www.zhuanzhi.ai/document/ea8ebd45256ea020aefbb7cf7fbd7740

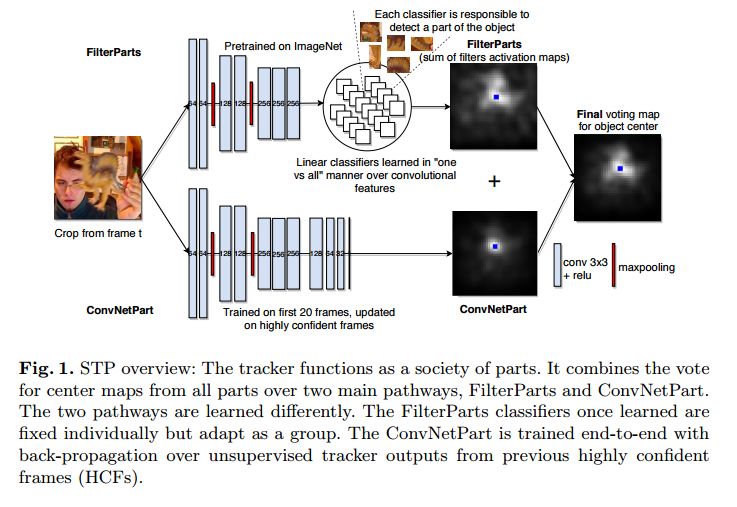
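
To make the learning-rate idea in the abstract of paper 1 concrete, here is a minimal sketch in Python with NumPy. It is not the authors' implementation: it uses a plain MOSSE-style linear correlation filter instead of a kernelized one, and the class name `LinearCorrelationFilter`, the regularizer `reg`, the random test patches, and the learning rates 0.5 and 0.01 are all illustrative assumptions. The only point it tries to show is that a filter updated with a large learning rate forgets the original appearance quickly, while one updated with a small rate retains it.

```python
import numpy as np


def gaussian_response(shape, sigma=2.0):
    """Desired filter output: a Gaussian peak at the patch centre."""
    h, w = shape
    ys, xs = np.mgrid[0:h, 0:w]
    cy, cx = h // 2, w // 2
    return np.exp(-((ys - cy) ** 2 + (xs - cx) ** 2) / (2.0 * sigma ** 2))


class LinearCorrelationFilter:
    """MOSSE-style linear filter (an assumed stand-in for a kernelized filter)."""

    def __init__(self, learning_rate, reg=1e-2):
        self.lr = learning_rate
        self.reg = reg
        self.num = None  # running numerator:   G * conj(F)
        self.den = None  # running denominator: F * conj(F)

    def update(self, patch):
        """Blend the statistics of a new patch into the filter."""
        F = np.fft.fft2(patch)
        G = np.fft.fft2(gaussian_response(patch.shape))
        num, den = G * np.conj(F), F * np.conj(F)
        if self.num is None:
            self.num, self.den = num, den
        else:
            self.num = (1 - self.lr) * self.num + self.lr * num
            self.den = (1 - self.lr) * self.den + self.lr * den

    def respond(self, patch):
        """Return the spatial response map of the filter on a patch."""
        H = self.num / (self.den + self.reg)
        return np.real(np.fft.ifft2(H * np.fft.fft2(patch)))


rng = np.random.default_rng(0)
target = rng.standard_normal((32, 32))    # original target appearance
occluder = rng.standard_normal((32, 32))  # unrelated appearance, e.g. an occlusion

short_term = LinearCorrelationFilter(learning_rate=0.5)   # aggressive update
long_term = LinearCorrelationFilter(learning_rate=0.01)   # conservative update
for flt in (short_term, long_term):
    flt.update(target)
    flt.update(occluder)

print("short-term peak on original target:", short_term.respond(target).max())
print("long-term peak on original target: ", long_term.respond(target).max())
```

In this toy setting, a low peak from the conservative filter could serve as a failure signal, which is the role the abstract assigns to the long-term filter. The thresholds and the re-detection step are left out.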

2. Learning a Robust Society of Tracking Parts using Co-occurrence Constraints

Authors: Elena Burceanu, Marius Leordeanu

Affiliations: University of Bucharest, University Politehnica of Bucharest

Abstract: Object tracking is an essential problem in computer vision that has been researched for several decades. One of the main challenges in tracking is to adapt to object appearance changes over time, in order to avoid drifting to background clutter. We address this challenge by proposing a deep neural network architecture composed of different parts, which functions as a society of tracking parts. The parts work in conjunction according to a certain policy and learn from each other in a robust manner, using co-occurrence constraints that ensure robust inference and learning. From a structural point of view, our network is composed of two main pathways. One pathway is more conservative. It carefully monitors a large set of simple tracker parts learned as linear filters over deep feature activation maps. It assigns the parts different roles. It promotes the reliable ones and removes the inconsistent ones. We learn these filters simultaneously in an efficient way, with a single closed-form formulation for which we propose novel theoretical properties. The second pathway is more progressive. It is learned completely online and thus it is able to better model object appearance changes. In order to adapt in a robust manner, it is learned only on highly confident frames, which are decided using co-occurrences with the first pathway. Thus, our system has the full benefit of two main approaches in tracking. The larger set of simpler filter parts offers robustness, while the full deep network learned online provides adaptability to change. As shown in the experimental section, our approach achieves state of the art performance on the challenging VOT17 benchmark, outperforming the existing published methods both on the general EAO metric as well as in the number of fails by a significant margin.

Source: arXiv, April 5, 2018

URL:

http://www.zhuanzhi.ai/document/3ea6c6887b527460c45ff5f2fa4e2c3a

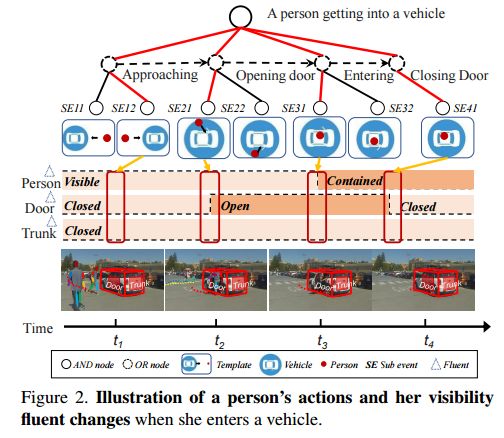

3. A Causal And-Or Graph Model for Visibility Fluent Reasoning in Tracking Interacting Objects

Authors: Yuanlu Xu, Lei Qin, Xiaobai Liu, Jianwen Xie, Song-Chun Zhu

Affiliation: San Diego State University

Abstract: Tracking humans that are interacting with the other subjects or environment remains unsolved in visual tracking, because the visibility of the human of interests in videos is unknown and might vary over time. In particular, it is still difficult for state-of-the-art human trackers to recover complete human trajectories in crowded scenes with frequent human interactions. In this work, we consider the visibility status of a subject as a fluent variable, whose change is mostly attributed to the subject's interaction with the surrounding, e.g., crossing behind another object, entering a building, or getting into a vehicle, etc. We introduce a Causal And-Or Graph (C-AOG) to represent the causal-effect relations between an object's visibility fluent and its activities, and develop a probabilistic graph model to jointly reason the visibility fluent change (e.g., from visible to invisible) and track humans in videos. We formulate this joint task as an iterative search of a feasible causal graph structure that enables fast search algorithm, e.g., dynamic programming method. We apply the proposed method on challenging video sequences to evaluate its capabilities of estimating visibility fluent changes of subjects and tracking subjects of interests over time. Results with comparisons demonstrate that our method outperforms the alternative trackers and can recover complete trajectories of humans in complicated scenarios with frequent human interactions.

Source: arXiv, March 29, 2018

URL:

http://www.zhuanzhi.ai/document/c221882a888285b35037f10ba9eb609c
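
The abstract of paper 3 mentions that the search can rely on dynamic programming. As a loose illustration only, the Python sketch below runs Viterbi decoding over a two-state chain (visible, invisible). This is a far simpler model than the C-AOG described in the paper: the function name `decode_visibility`, the sticky transition probabilities, and the per-frame detector confidences are all made up for the example.

```python
import numpy as np


def decode_visibility(log_emission, log_transition, log_prior):
    """Viterbi decoding: most likely state sequence of a chain model.

    log_emission:   (T, S) per-frame log-likelihood of each state
    log_transition: (S, S) log-probability of moving from state i to state j
    log_prior:      (S,)   log-probability of the initial state
    """
    T, S = log_emission.shape
    score = log_prior + log_emission[0]
    back = np.zeros((T, S), dtype=int)
    for t in range(1, T):
        # cand[i, j]: score of the best path that is in state i at t-1 and j at t
        cand = score[:, None] + log_transition
        back[t] = cand.argmax(axis=0)
        score = cand.max(axis=0) + log_emission[t]
    # Trace the best path backwards from the best final state.
    path = [int(score.argmax())]
    for t in range(T - 1, 0, -1):
        path.append(int(back[t, path[-1]]))
    return path[::-1]


# Hypothetical detector confidences per frame (state 0 = visible, 1 = invisible).
conf = np.array([0.9, 0.9, 0.45, 0.9, 0.1, 0.1, 0.1, 0.9, 0.9])
log_emission = np.log(np.stack([conf, 1.0 - conf], axis=1))
log_transition = np.log([[0.9, 0.1], [0.1, 0.9]])  # states tend to persist
log_prior = np.log([0.5, 0.5])

states = decode_visibility(log_emission, log_transition, log_prior)
print(states)
```

With these numbers, the single uncertain frame (confidence 0.45) is still decoded as visible because switching state twice is costly, while the run of three low-confidence frames is decoded as invisible.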

4. TrackingNet: A Large-Scale Dataset and Benchmark for Object Tracking in the Wild

Authors: Matthias Müller, Adel Bibi, Silvio Giancola, Salman Al-Subaihi, Bernard Ghanem

Affiliations: National Tsing Hua University, National Chiao Tung University

Abstract: Despite the numerous developments in object tracking, further development of current tracking algorithms is limited by small and mostly saturated datasets. As a matter of fact, data-hungry trackers based on deep-learning currently rely on object detection datasets due to the scarcity of dedicated large-scale tracking datasets. In this work, we present TrackingNet, the first large-scale dataset and benchmark for object tracking in the wild. We provide more than 30K videos with more than 14 million dense bounding box annotations. Our dataset covers a wide selection of object classes in broad and diverse context. By releasing such a large-scale dataset, we expect deep trackers to further improve and generalize. In addition, we introduce a new benchmark composed of 500 novel videos, modeled with a distribution similar to our training dataset. By sequestering the annotation of the test set and providing an online evaluation server, we provide a fair benchmark for future development of object trackers. Deep trackers fine-tuned on a fraction of our dataset improve their performance by up to 1.6% on OTB100 and up to 1.7% on TrackingNet Test. We provide an extensive benchmark on TrackingNet by evaluating more than 20 trackers. Our results suggest that object tracking in the wild is far from being solved.

Source: arXiv, March 29, 2018

URL:

http://www.zhuanzhi.ai/document/3a9fb31478bb3df6d19eaa7863daf4e3

5. A Framework for Evaluating 6-DOF Object Trackers

Authors: Mathieu Garon, Denis Laurendeau, Jean-François Lalonde

Affiliations: National Tsing Hua University, National Chiao Tung University

Abstract: We present a challenging and realistic novel dataset for evaluating 6-DOF object tracking algorithms. Existing datasets show serious limitations---notably, unrealistic synthetic data, or real data with large fiducial markers---preventing the community from obtaining an accurate picture of the state-of-the-art. Our key contribution is a novel pipeline for acquiring accurate ground truth poses of real objects w.r.t a Kinect V2 sensor by using a commercial motion capture system. A total of 100 calibrated sequences of real objects are acquired in three different scenarios to evaluate the performance of trackers in various scenarios: stability, robustness to occlusion and accuracy during challenging interactions between a person and the object. We conduct an extensive study of a deep 6-DOF tracking architecture and determine a set of optimal parameters. We enhance the architecture and the training methodology to train a 6-DOF tracker that can robustly generalize to objects never seen during training, and demonstrate favorable performance compared to previous approaches trained specifically on the objects to track.

Source: arXiv, March 28, 2018

URL:

http://www.zhuanzhi.ai/document/23973751afcd9a7026bbac7bbea62dcd

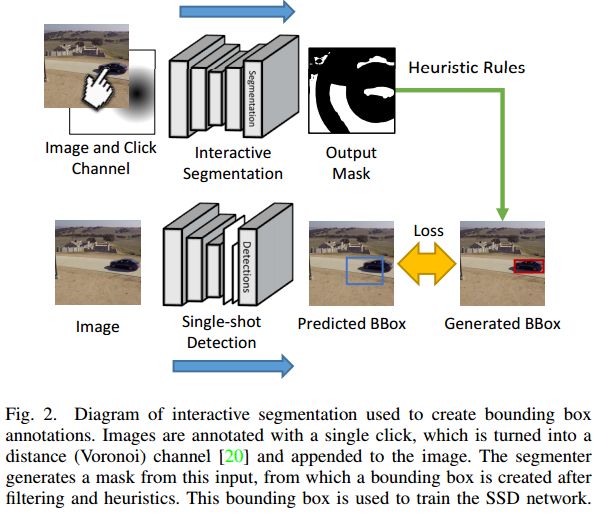

6. ClickBAIT-v2: Training an Object Detector in Real-Time

Authors: Ervin Teng, Rui Huang, Bob Iannucci

Affiliation: Carnegie Mellon University

Abstract: Modern deep convolutional neural networks (CNNs) for image classification and object detection are often trained offline on large static datasets. Some applications, however, will require training in real-time on live video streams with a human-in-the-loop. We refer to this class of problem as time-ordered online training (ToOT). These problems will require a consideration of not only the quantity of incoming training data, but the human effort required to annotate and use it. We demonstrate and evaluate a system tailored to training an object detector on a live video stream with minimal input from a human operator. We show that we can obtain bounding box annotation from weakly-supervised single-point clicks through interactive segmentation. Furthermore, by exploiting the time-ordered nature of the video stream through object tracking, we can increase the average training benefit of human interactions by 3-4 times.

Source: arXiv, March 28, 2018

URL:

http://www.zhuanzhi.ai/document/089a26fd4d3f65fff95cbb8ac500cfe3

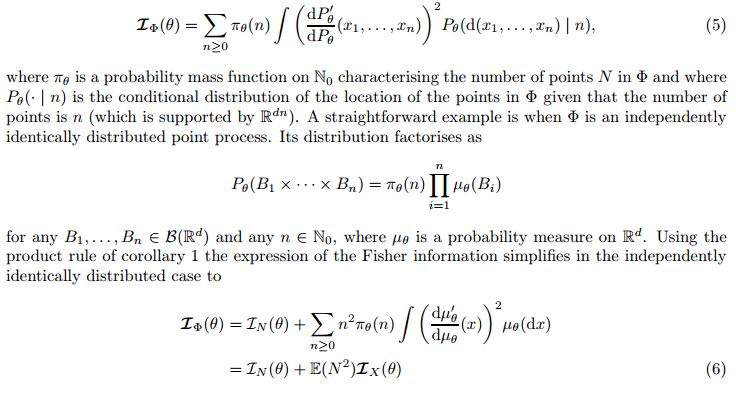

7. On the loss of Fisher information in some multi-object tracking observation models

Authors: Jeremie Houssineau, Ajay Jasra, Sumeetpal S. Singh

Abstract: The concept of Fisher information can be useful even in cases where the probability distributions of interest are not absolutely continuous with respect to the natural reference measure on the underlying space. Practical examples where this extension is useful are provided in the context of multi-object tracking statistical models. Upon defining the Fisher information without introducing a reference measure, we provide remarkably concise proofs of the loss of Fisher information in some widely used multi-object tracking observation models.

Source: arXiv, March 26, 2018

URL:

http://www.zhuanzhi.ai/document/8d6ab121214f35ffdbc428c1f27fc74d

8. Image Moment Models for Extended Object Tracking

Authors: Gang Yao, Ashwin Dani

Affiliations: National Tsing Hua University, National Chiao Tung University

Abstract: In this paper, a novel image moments based model for shape estimation and tracking of an object moving with a complex trajectory is presented. The camera is assumed to be stationary looking at a moving object. Point features inside the object are sampled as measurements. An ellipsoidal approximation of the shape is assumed as a primitive shape. The shape of an ellipse is estimated using a combination of image moments. Dynamic model of image moments when the object moves under the constant velocity or coordinated turn motion model is derived as a function for the shape estimation of the object. An Unscented Kalman Filter-Interacting Multiple Model (UKF-IMM) filter algorithm is applied to estimate the shape of the object (approximated as an ellipse) and track its position and velocity. A likelihood function based on average log-likelihood is derived for the IMM filter. Simulation results of the proposed UKF-IMM algorithm with the image moments based models are presented that show the estimations of the shape of the object moving in complex trajectories. Comparison results, using intersection over union (IOU), and position and velocity root mean square errors (RMSE) as metrics, with a benchmark algorithm from literature are presented. Results on real image data captured from the quadcopter are also presented.

Source: arXiv, April 9, 2018

URL:

http://www.zhuanzhi.ai/document/d509f443418d07f5dbb12af0780c0da4
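
The abstract of paper 8 estimates an elliptical shape from image moments of point features sampled inside the object. The Python sketch below shows one common way to do this under a stated assumption: the points are spread uniformly over the ellipse interior, so the variance along each principal axis equals the squared semi-axis divided by four. The function `ellipse_from_points` is a hypothetical helper, not code from the paper, and it covers only a static shape fit, not the UKF-IMM dynamics.

```python
import numpy as np


def ellipse_from_points(points):
    """Fit an ellipse to 2-D points from first- and second-order moments.

    Returns (centroid, semi_axes, angle): semi_axes lists the major axis first,
    and angle is the major-axis orientation in radians, in [0, pi).
    Assumes the points sample the ellipse interior uniformly.
    """
    pts = np.asarray(points, dtype=float)
    centroid = pts.mean(axis=0)                 # first-order moments
    cov = np.cov(pts, rowvar=False, bias=True)  # central second-order moments
    eigvals, eigvecs = np.linalg.eigh(cov)      # eigenvalues in ascending order
    semi_axes = 2.0 * np.sqrt(eigvals[::-1])    # variance = (semi-axis)^2 / 4
    major = eigvecs[:, 1]                       # direction of largest variance
    angle = np.arctan2(major[1], major[0]) % np.pi
    return centroid, semi_axes, angle


# Synthetic test data: uniform samples inside a rotated, shifted ellipse.
rng = np.random.default_rng(0)
n = 20000
radius = np.sqrt(rng.uniform(size=n))           # sqrt gives uniform area density
theta = rng.uniform(0.0, 2.0 * np.pi, size=n)
unit_disc = np.stack([radius * np.cos(theta), radius * np.sin(theta)], axis=1)

a, b, phi = 4.0, 2.0, 0.5                       # true semi-axes and orientation
rotation = np.array([[np.cos(phi), -np.sin(phi)],
                     [np.sin(phi), np.cos(phi)]])
points = (unit_disc * [a, b]) @ rotation.T + [10.0, -3.0]

centroid, semi_axes, angle = ellipse_from_points(points)
print("centroid:", centroid)
print("semi-axes:", semi_axes)
print("angle (rad):", angle)
```

If the sampled features are not uniform over the object, the factor of four no longer holds exactly, so the recovered axes should be read as a moment-equivalent ellipse rather than the true outline.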
