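【Paper Recommendations】Six New Machine Translation Papers: Japanese Predicate Conjugation, Recommendation Memory, Hyperbolic Attention Networks, and MT Evaluation Metrics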

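【Introduction】Two days ago the Zhuanzhi content team released nineteen papers on machine translation (Machine Translation). Today we follow up with six more recent machine translation papers. Take a look!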

20. Japanese Predicate Conjugation for Neural Machine Translation

Authors: Michiki Kurosawa, Yukio Matsumura, Hayahide Yamagishi, Mamoru Komachi

NAACL 2018 Student Research Workshop

Institution: Tokyo Metropolitan University

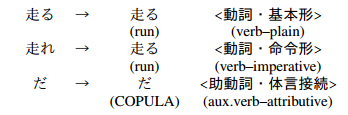

Abstract: Neural machine translation (NMT) has a drawback in that it can generate only high-frequency words, owing to the computational cost of the softmax function in the output layer. In Japanese-English NMT, Japanese predicate conjugation causes an increase in vocabulary size. For example, one verb can have as many as 19 surface varieties. In this research, we focus on predicate conjugation for compressing the vocabulary size in Japanese. The vocabulary list is filled with the various forms of verbs. We propose methods using predicate conjugation information without discarding linguistic information. The proposed methods can generate low-frequency words and deal with unknown words. Two methods were considered to introduce conjugation information: the first treats it as a token (conjugation token) and the second treats it as an embedded vector (conjugation feature). The results using these methods demonstrate that the vocabulary size can be compressed by approximately 86.1% (Tanaka corpus) and that the NMT models can output words not in the training data set. Furthermore, BLEU scores improved by 0.91 points in Japanese-to-English translation and 0.32 points in English-to-Japanese translation with ASPEC.
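
To make the conjugation-token idea concrete, here is a toy Python sketch (not the authors' code). It assumes each verb occurrence has already been analyzed into a (surface form, lemma, conjugation form) triple, as a morphological analyzer would provide; the mini corpus and the romanized tag names such as `<mizen>` are illustrative assumptions. Rewriting each verb as its lemma plus a separate tag token makes the vocabulary grow with lemmas plus a small fixed tag set instead of with every surface form.

```python
# Toy corpus of pre-analyzed verb occurrences: (surface form, lemma, conjugation form).
# The triples and the romanized tag names are illustrative assumptions.
CORPUS = [
    ("行か", "行く", "<mizen>"), ("行き", "行く", "<renyou>"),
    ("行く", "行く", "<shuushi>"), ("行け", "行く", "<katei>"),
    ("書か", "書く", "<mizen>"), ("書き", "書く", "<renyou>"),
    ("書く", "書く", "<shuushi>"), ("書け", "書く", "<katei>"),
    ("読ま", "読む", "<mizen>"), ("読み", "読む", "<renyou>"),
    ("読む", "読む", "<shuushi>"), ("読め", "読む", "<katei>"),
]


def to_conjugation_tokens(analyses):
    """Conjugation-token scheme: each verb becomes its lemma plus a separate tag token."""
    tokens = []
    for _surface, lemma, tag in analyses:
        tokens.extend([lemma, tag])
    return tokens


def to_conjugation_features(analyses):
    """Conjugation-feature scheme: one token per verb, the tag kept as a side feature."""
    return [(lemma, tag) for _surface, lemma, tag in analyses]


def vocab_sizes(analyses):
    """Return (surface-form vocabulary size, conjugation-token vocabulary size)."""
    surface_vocab = {surface for surface, _lemma, _tag in analyses}
    token_vocab = set(to_conjugation_tokens(analyses))
    return len(surface_vocab), len(token_vocab)


print(vocab_sizes(CORPUS))  # (12, 7): 12 surface forms vs 3 lemmas + 4 tags
```

In the feature variant, the `(lemma, tag)` pairs would feed a word embedding and a separate conjugation embedding rather than two tokens in the sequence.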

Source: arXiv, May 25, 2018

URL:

http://www.zhuanzhi.ai/document/64d4f5f6ff0f2db7eed08f134e189e67

21. Phrase Table as Recommendation Memory for Neural Machine Translation

Authors: Yang Zhao, Yining Wang, Jiajun Zhang, Chengqing Zong

Accepted by IJCAI 2018

Institution: University of Chinese Academy of Sciences

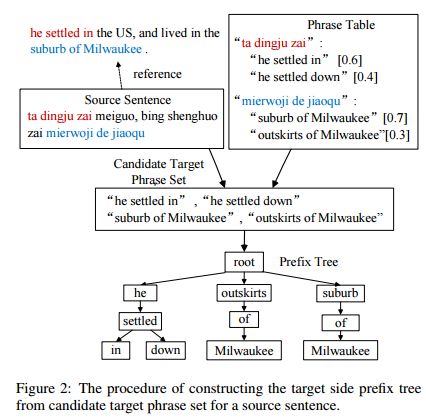

Abstract: Neural Machine Translation (NMT) has recently drawn much attention due to its promising translation performance. However, several studies indicate that NMT often generates fluent but unfaithful translations. In this paper, we propose a method to alleviate this problem by using a phrase table as recommendation memory. The main idea is to add a bonus to words worthy of recommendation, so that NMT can make correct predictions. Specifically, we first derive a prefix tree to accommodate all the candidate target phrases by searching the phrase translation table according to the source sentence. Then, we construct a recommendation word set by matching candidate target phrases against the target words previously translated by NMT. After that, we determine the specific bonus value for each recommendable word by using the attention vector and the phrase translation probability. Finally, we integrate this bonus value into NMT to improve the translation results. Extensive experiments demonstrate that the proposed methods obtain remarkable improvements over a strong attention-based NMT baseline.
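
The four steps in the abstract (prefix tree, recommendation set, bonus, integration) can be sketched in Python as follows. This is a simplified illustration, not the paper's formulation: the candidate phrases, their source positions and probabilities are made up, and the bonus for a word is assumed to be `lam * phrase_prob * (attention mass on the phrase's source words)`, added to the NMT log-probability. The names `build_prefix_tree`, `recommendation_bonus` and `rescore` are hypothetical.

```python
import math


def build_prefix_tree(candidates):
    """Build a trie over candidate target phrases.

    candidates: list of (target_words, source_positions, phrase_prob).
    Each node stores its children and the ids of the phrases passing through it.
    """
    root = {"children": {}, "ids": []}
    for idx, (target, _src, _prob) in enumerate(candidates):
        node = root
        for word in target:
            node = node["children"].setdefault(word, {"children": {}, "ids": []})
            node["ids"].append(idx)
    return root


def recommendation_bonus(trie, candidates, translated, attention, lam=1.0):
    """Bonus for each word that would continue a phrase matching a suffix of the output.

    The empty suffix is included, so the first word of any candidate phrase is
    recommendable too (an assumption of this sketch).
    """
    bonus = {}
    for start in range(len(translated) + 1):
        node = trie
        for tok in translated[start:]:
            node = node["children"].get(tok)
            if node is None:
                break
        else:  # the whole suffix was found in the trie
            for word, child in node["children"].items():
                for idx in child["ids"]:
                    _target, src, prob = candidates[idx]
                    score = lam * prob * sum(attention[j] for j in src)
                    bonus[word] = max(bonus.get(word, 0.0), score)
    return bonus


def rescore(log_probs, bonus):
    """Add each word's bonus to its NMT log-probability."""
    return {w: lp + bonus.get(w, 0.0) for w, lp in log_probs.items()}


candidates = [
    (("the", "central", "bank"), [0, 1], 0.6),
    (("central", "bank"), [0, 1], 0.3),
    (("interest", "rate"), [2], 0.5),
]
trie = build_prefix_tree(candidates)
bonus = recommendation_bonus(trie, candidates, ["the", "central"], [0.1, 0.7, 0.2])
print(rescore({"bank": math.log(0.2), "bench": math.log(0.5)}, bonus))
```

Because the bonus is a probability-scale quantity added to log-probabilities, `lam` acts as a tunable trade-off weight in this sketch.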

Source: arXiv, May 25, 2018

URL:

http://www.zhuanzhi.ai/document/3a7855d956fdd0cc27e90fab2d0dae86

22. Hyperbolic Attention Networks

Authors: Caglar Gulcehre, Misha Denil, Mateusz Malinowski, Ali Razavi, Razvan Pascanu, Karl Moritz Hermann, Peter Battaglia, Victor Bapst, David Raposo, Adam Santoro, Nando de Freitas

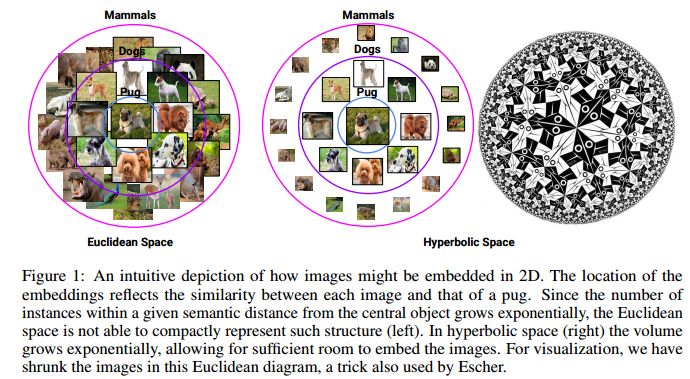

Abstract: We introduce hyperbolic attention networks to endow neural networks with enough capacity to match the complexity of data with hierarchical and power-law structure. A few recent approaches have successfully demonstrated the benefits of imposing hyperbolic geometry on the parameters of shallow networks. We extend this line of work by imposing hyperbolic geometry on the activations of neural networks. This allows us to exploit hyperbolic geometry to reason about embeddings produced by deep networks. We achieve this by re-expressing the ubiquitous mechanism of soft attention in terms of operations defined for the hyperboloid and Klein models. Our method shows improvements in generalization on neural machine translation, learning on graphs, and visual question answering tasks while keeping the neural representations compact.
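
A minimal NumPy sketch of one hyperbolic attention step, assuming (i) queries, keys and values are Euclidean vectors lifted onto the hyperboloid, (ii) matching scores are negative hyperboloid distances scaled by a hypothetical inverse temperature `beta`, and (iii) values are aggregated with the Einstein midpoint in the Klein model. It illustrates the geometric operations named in the abstract and is not the authors' implementation.

```python
import numpy as np


def to_hyperboloid(v):
    """Lift Euclidean vectors (..., n) onto the hyperboloid x0^2 - |x|^2 = 1, x0 > 0."""
    x0 = np.sqrt(1.0 + np.sum(v * v, axis=-1, keepdims=True))
    return np.concatenate([x0, v], axis=-1)


def minkowski_dot(x, y):
    """Lorentzian inner product -x0*y0 + <x_rest, y_rest>."""
    return -x[..., 0] * y[..., 0] + np.sum(x[..., 1:] * y[..., 1:], axis=-1)


def hyperboloid_distance(x, y):
    # Clip guards against arguments slightly below 1 caused by rounding.
    return np.arccosh(np.clip(-minkowski_dot(x, y), 1.0, None))


def klein(x):
    """Project hyperboloid points to the Klein model: k = x_rest / x0."""
    return x[..., 1:] / x[..., :1]


def hyperbolic_attention(query, keys, values, beta=1.0):
    q = to_hyperboloid(query)                       # (n+1,)
    k = to_hyperboloid(keys)                        # (m, n+1)
    scores = -beta * hyperboloid_distance(q[None, :], k)  # closer keys score higher
    weights = np.exp(scores - scores.max())
    weights /= weights.sum()
    hv = to_hyperboloid(values)
    vk = klein(hv)                                  # (m, n), inside the unit ball
    gamma = hv[:, 0]  # Lorentz factor 1/sqrt(1 - |k|^2) equals x0 on the hyperboloid
    coeff = weights * gamma
    midpoint = (coeff[:, None] * vk).sum(axis=0) / coeff.sum()  # Einstein midpoint
    return weights, midpoint


rng = np.random.default_rng(0)
w, out = hyperbolic_attention(rng.normal(size=3), rng.normal(size=(4, 3)), rng.normal(size=(4, 3)))
print(w.round(3), out.round(3))
```

Since the Einstein midpoint is a positively weighted average of Klein points, the output stays inside the unit ball; the Lorentz factor gives values near the boundary more pull than a plain weighted mean would.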

Source: arXiv, May 25, 2018

URL:

http://www.zhuanzhi.ai/document/969cbeaaf672845e6f2e4ad21f35200f

23. Sparse and Constrained Attention for Neural Machine Translation

Authors: Chaitanya Malaviya, Pedro Ferreira, André F. T. Martins

Proceedings of ACL 2018

Institutions: Universidade de Lisboa, Carnegie Mellon University

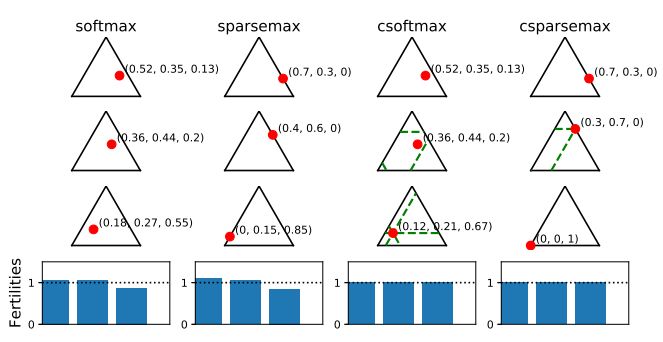

Abstract: In NMT, words are sometimes dropped from the source or generated repeatedly in the translation. We explore novel strategies to address this coverage problem that change only the attention transformation. Our approach allocates fertilities to source words, which are used to bound the attention each word can receive. We experiment with various sparse and constrained attention transformations and propose a new one, constrained sparsemax, shown to be differentiable and sparse. Empirical evaluation is provided on three language pairs.
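
Constrained sparsemax can be read as a Euclidean projection of the attention scores onto the probability simplex with an upper bound per source word, where the bound is the word's fertility minus the attention it has already received. The Python sketch below solves that projection by simple bisection on the threshold; bisection is a swapped-in solver, not the algorithm from the paper, and the fertilities are hand-picked for illustration.

```python
import numpy as np


def constrained_sparsemax(z, upper, iters=60, tol=1e-9):
    """Project scores z onto {p : sum(p) = 1, 0 <= p <= upper}.

    The solution has the form p_i = clip(z_i - tau, 0, upper_i); tau is found by
    bisection. Requires sum(upper) >= 1 so the feasible set is non-empty.
    """
    z = np.asarray(z, dtype=float)
    upper = np.maximum(np.asarray(upper, dtype=float), 0.0)  # guard tiny negative bounds
    if upper.sum() < 1.0 - tol:
        raise ValueError("upper bounds must sum to at least 1")
    lo, hi = z.min() - 1.0, z.max()  # mass >= min(1, sum(upper)) at lo, mass = 0 at hi
    for _ in range(iters):
        tau = 0.5 * (lo + hi)
        if np.clip(z - tau, 0.0, upper).sum() > 1.0:
            lo = tau
        else:
            hi = tau
    return np.clip(z - 0.5 * (lo + hi), 0.0, upper)


# Three decoding steps whose raw scores always favour source word 0.
fertility = np.array([1.0, 1.0, 1.0])  # hand-picked: each source word used once
cumulative = np.zeros(3)
for step_scores in ([2.0, 1.0, 0.0], [2.0, 1.0, 0.0], [2.0, 1.0, 0.0]):
    p = constrained_sparsemax(step_scores, fertility - cumulative)
    cumulative += p
print(cumulative.round(6))
```

Despite the identical scores, the bounds push attention to words 0, 1 and 2 in turn, so no word is dropped or repeated. Passing `np.inf` for every bound recovers ordinary sparsemax.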

Source: arXiv, May 22, 2018

URL:

http://www.zhuanzhi.ai/document/5a4b85891e7b8d16a0e92e4cbe26262d

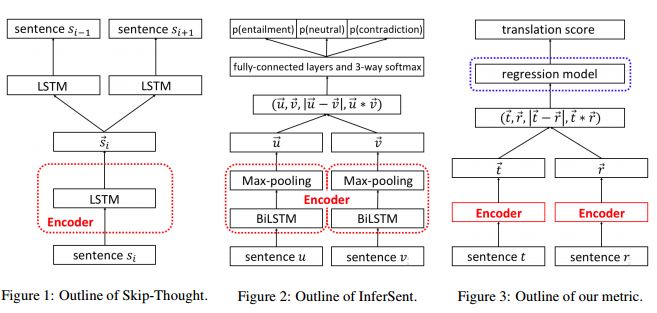

24. Metric for Automatic Machine Translation Evaluation based on Universal Sentence Representations

Authors: Hiroki Shimanaka, Tomoyuki Kajiwara, Mamoru Komachi

NAACL 2018 Student Research Workshop

Institutions: Tokyo Metropolitan University, Osaka University

Abstract: Sentence representations can capture a wide range of information that cannot be captured by local features based on character or word N-grams. This paper examines the usefulness of universal sentence representations for evaluating the quality of machine translation. Although it is difficult to train sentence representations using small-scale translation datasets with manual evaluation, sentence representations trained on large-scale data from other tasks can improve the automatic evaluation of machine translation. Experimental results on the WMT-2016 dataset show that the proposed method achieves state-of-the-art performance with sentence representation features only.
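
A rough Python sketch of the pipeline: encode the hypothesis and the reference with a sentence encoder, combine the two vectors into features, and regress to human scores. Everything concrete here is an assumption: `toy_encode` (a hashed bag of words) stands in for a pretrained universal sentence encoder, the `[h; r; h*r; |h-r|]` combination is a common choice for sentence-pair features, and ridge regression replaces whatever regressor the paper trains. The scores in the usage lines are synthetic.

```python
import zlib

import numpy as np

DIM = 16


def toy_encode(sentence, dim=DIM):
    """Stand-in for a pretrained sentence encoder: averaged hashed word counts."""
    vec = np.zeros(dim)
    words = sentence.lower().split()
    for w in words:
        vec[zlib.crc32(w.encode("utf-8")) % dim] += 1.0
    return vec / max(len(words), 1)


def pair_features(hyp, ref):
    """Concatenate hypothesis, reference, their product and absolute difference."""
    h, r = toy_encode(hyp), toy_encode(ref)
    return np.concatenate([h, r, h * r, np.abs(h - r)])


def fit_ridge(X, y, alpha=1.0):
    """Closed-form ridge regression (the bias is regularized too, for brevity)."""
    Xb = np.hstack([X, np.ones((len(X), 1))])
    A = Xb.T @ Xb + alpha * np.eye(Xb.shape[1])
    return np.linalg.solve(A, Xb.T @ y)


def predict(w, X):
    return np.hstack([X, np.ones((len(X), 1))]) @ w


hyps = ["the cat sat on the mat", "a cat on a mat", "dogs run fast"]
refs = ["the cat sat on the mat"] * 3
scores = np.array([1.0, 0.6, 0.1])  # synthetic human scores, for illustration only
X = np.stack([pair_features(h, r) for h, r in zip(hyps, refs)])
w = fit_ridge(X, scores)
print(predict(w, X).round(3))
```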

Source: arXiv, May 19, 2018

URL:

http://www.zhuanzhi.ai/document/9f7947bdd7b48ff5cba0edc07c24c1fc

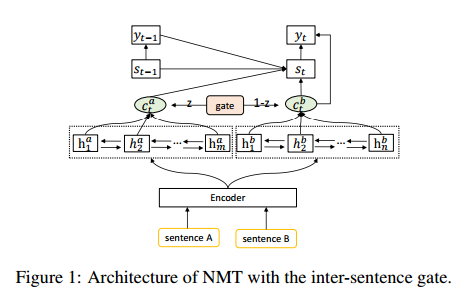

25. Fusing Recency into Neural Machine Translation with an Inter-Sentence Gate Model

Authors: Shaohui Kuang, Deyi Xiong

Accepted by COLING 2018

Institution: Soochow University

Abstract: Neural machine translation (NMT) systems are usually trained on a large amount of bilingual sentence pairs and translate one sentence at a time, ignoring inter-sentence information. This may make the translation of a sentence ambiguous or even inconsistent with the translations of neighboring sentences. To handle this issue, we propose an inter-sentence gate model that uses the same encoder to encode two adjacent sentences and controls the amount of information flowing from the preceding sentence to the translation of the current sentence with an inter-sentence gate. In this way, our proposed model can capture the connection between sentences and fuse recency from neighboring sentences into neural machine translation. On several NIST Chinese-English translation tasks, our experiments demonstrate that the proposed inter-sentence gate model achieves substantial improvements over the baseline.
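
A minimal NumPy sketch of an inter-sentence gate, under assumptions not taken from the paper: a mean-of-embeddings function stands in for the shared encoder, and the gate is an elementwise sigmoid over the two sentence vectors whose output scales how much of the preceding sentence's vector is added to the current one. The paper's gate may use different inputs (for example decoder states) and a different fusion rule; `EMB`, `W` and `b` are hypothetical parameters.

```python
import numpy as np

rng = np.random.default_rng(0)
VOCAB, DIM = 50, 8
EMB = rng.normal(scale=0.1, size=(VOCAB, DIM))  # hypothetical shared word embeddings


def encode(token_ids):
    """Toy shared encoder (mean of embeddings); the same function encodes both sentences."""
    return EMB[np.asarray(token_ids)].mean(axis=0)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def gated_context(cur_ids, prev_ids, W, b):
    """Fuse the preceding sentence into the current one through an elementwise gate."""
    c_cur, c_prev = encode(cur_ids), encode(prev_ids)
    g = sigmoid(W @ np.concatenate([c_cur, c_prev]) + b)  # gate values in (0, 1)
    return c_cur + g * c_prev, g


W = rng.normal(scale=0.5, size=(DIM, 2 * DIM))  # hypothetical gate parameters
b = np.zeros(DIM)
fused, gate = gated_context([3, 17, 42], [5, 8], W, b)
print(gate.round(3))
```

A very negative bias closes the gate and reduces the model to sentence-level translation, while a large positive bias adds the full preceding-sentence vector; training lets the gate settle between these extremes.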

Source: arXiv, June 12, 2018

URL:

http://www.zhuanzhi.ai/document/128864139b0151ca784592411e24d51c

-END-
