超强干货!TensorFlow易用代码大集合...

摘自机器之心

实际上,在我们搭建自己的模型或系统时,复制并粘贴这些代码就行了。它们以规范的形式定义不同的功能模块,因此只要修改少量参数与代码,它们就能完美地融入到我们项目中。

项目链接:https://github.com/taki0112/Tensorflow-Cookbook

在这个项目中,作者重点突出这是一份易于使用的 TensorFlow 代码集,它包括常见的正则化、卷积运算和架构模块等代码。该项目包含一般深度学习架构所需要的代码,例如初始化和正则化、各种卷积运算、基本网络架构与模块、损失函数和其它数据预处理过程。此外,还特别增加了对 GAN 的支持,这主要体现在损失函数上,其中生成器损失和判别器损失可以使用推土机距离、最小二乘距离和 KL 散度等。

使用方法其实有两种,首先我们可以复制粘贴代码,这样对于模块的定制化非常有利。其次我们可以直接像使用 API 那样调用操作与模块,这种方法会使模型显得非常简洁,而且导入的源码也通俗易懂。首先对于第二种直接导入的方法,我们可以从 ops.py 和 utils.py 文件分别导入模型运算部分与图像预处理过程。

from ops import *

from utils import *

from ops import conv

x = conv(x, channels=64, kernel=3, stride=2, pad=1, pad_type= reflect , use_bias=True, sn=True, scope= conv )

而对于第一种复制粘贴,我们可能会根据实际修改一些参数与结构,但这要比从头写简单多了。如下所示,对于一般的神经网络,它会采用如下结构模板:

def network(x, is_training=True, reuse=False, scope="network"):

with tf.variable_scope(scope, reuse=reuse):

x = conv(...)

...return logit

其实深度神经网络就像一块块积木,我们按照上面的模板把 ops.py 中不同的模块堆叠起来,最终就能得到完整的前向传播过程。

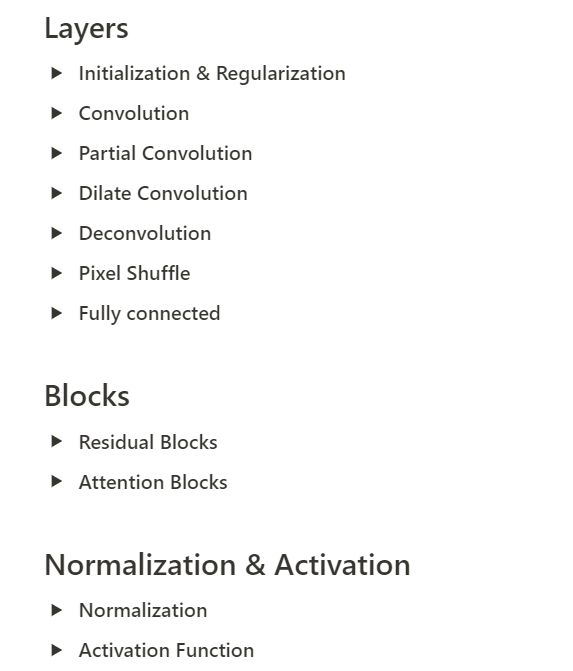

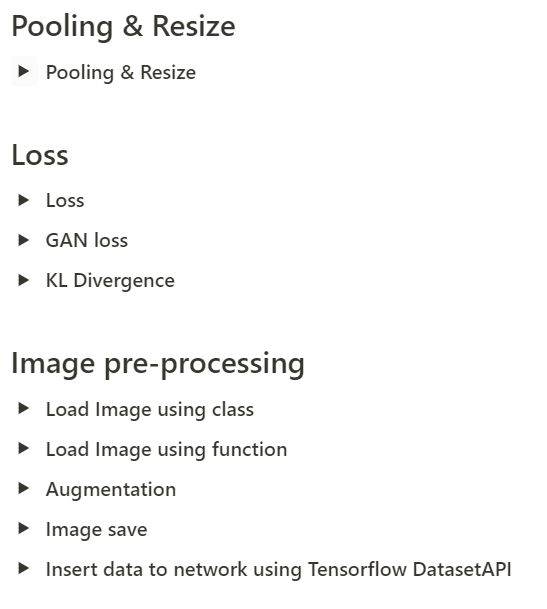

代码集目录

项目页面:https://www.notion.so/Simple-Tensorflow-Cookbook-6f4563d0cd7343cb9d1e60cd1698b54d

目前整个项目包含 20 种代码块,它们可用于快速搭建深度学习模型:

推荐阅读

15亿参数!史上最强通用NLP模型诞生:狂揽7大数据集最佳纪录