迁移自适应学习最新综述,附21页论文下载

【导读】迁移学习是目前人工智能领域中非常火爆的一个方向,受到研究人员的广泛关注,本文为大家带来了本领域最新的综述文章,详情如下。

介绍:

人类眼中的世界是时刻变化的,特别是不同“域”(domain)之间的状态、事物、人员等。这里的“域”指的是某一刻的世界状态。由于对两个时刻不同知识的协作处理需求,因此产生了域迁移自适应(domain transfer adaptation)这一领域。传统的机器学习方法,主要目标是通过对训练数据进行经验风险最小化学习,找出令测试数据期望风险最小化的模型,并在这一过程中假设,训练数据与测试数据共享了相似的联合概率分布。迁移自适应学习目标是构建一个可以处理来自不同概率空间的“源域”与“目标域”的模型,这是一个非常有前景的领域,并逐步吸引了更多研究者。

这篇文章综述了最近迁移自适应学习最新方法、可能的基准以及广泛的挑战。为研究者提供了一个不错的框架,以便更好的理解研究状态、挑战以及未来的领域方向。

迁移学习中的五个挑战:

Instance Re-weighting Adaptation。由于跨域数据间的概率分布差异,通过基于源和目标数据之间的特征分布匹配,以非参方式直接推断实例的重采样权重来自然考虑差异,参数分布假设下的权重参数估计仍然是一大挑战。

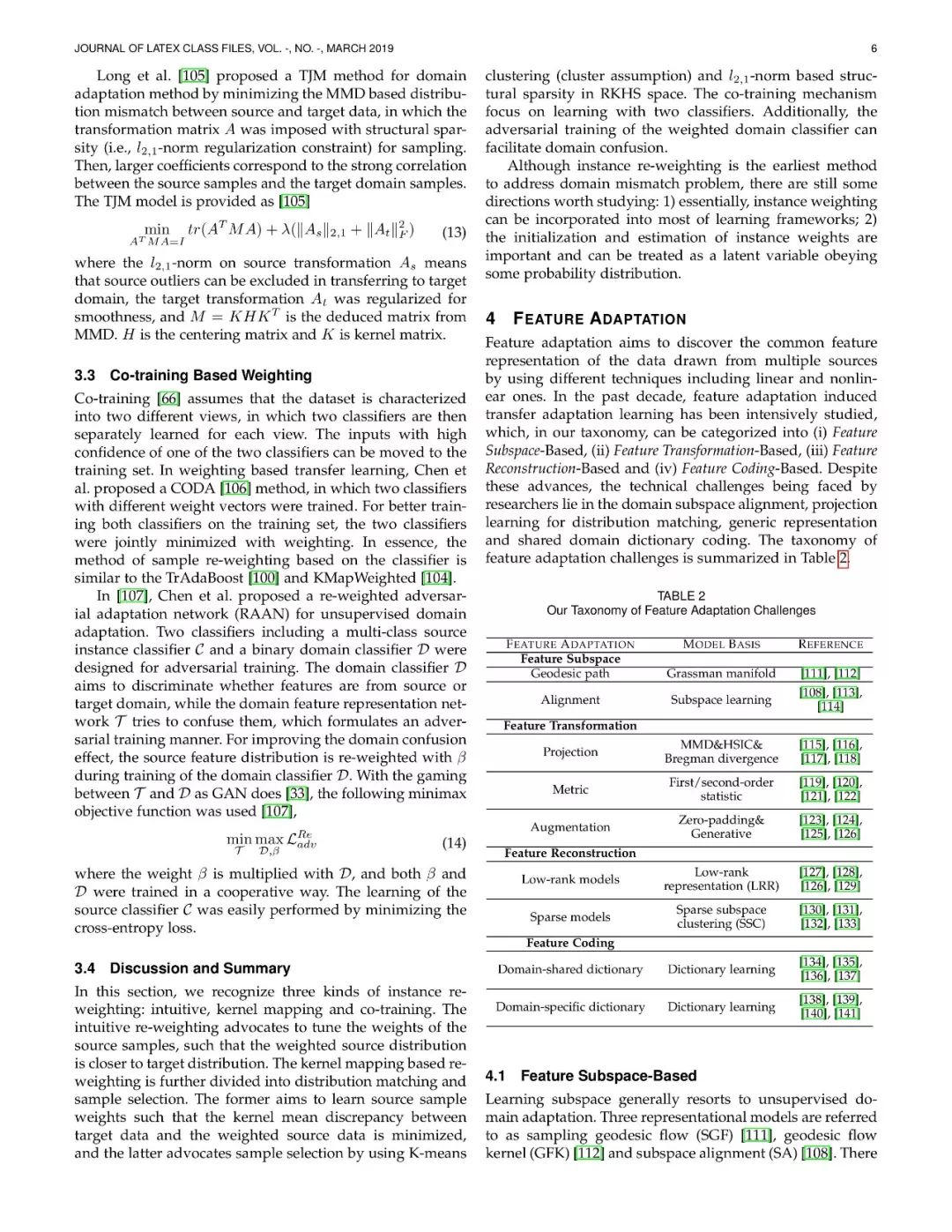

特征适应。为了适应多源数据,需要学习一个通用的特征空间或者表示,其中投影的源和目标域具有相似的分布,数据分布的异质性使得获得这样的通用特征表示相当的具有挑战性。

分类器适应。由于目标域的移位问题,对源域样本进行训练的分类器通常都是有偏差的。从多个域中学习可以用于其他不同域的通用分类器是一个非常具有挑战性的主题。

深度网络适应。目前已经认识到深度神经网络具有非常强大的特征表示能力,并且可以在单一域中构建通用的深度模型。域的大尺度变动使得深度神经网络难以构建出可迁移的深度表示。

对抗式适应。对抗性学习起源于生成式对抗网络,TL/DA目标是使得源域和目标域在特征空间内更加的接近。通常来说,两个域一定会发生混淆,难以区分。因此,使用对抗性训练和游戏策略的域混淆问题中,存在着不小的技术挑战。

本文分别针对以上5个挑战,从多个维度进行了梳理,包括:弱监督学习观点、Instance Re-weighting Adaptation、特征适应、分类器适应、深度网络适应、对抗适应、基准数据集等内容。

请关注专知公众号(点击上方蓝色专知关注)

后台回复“TALADS” 就可以获取最新论文的下载链接~

专 · 知

专知《深度学习:算法到实战》课程全部完成!500+位同学在学习,现在报名,限时优惠!网易云课堂人工智能畅销榜首位!

欢迎微信扫一扫加入专知人工智能知识星球群,获取最新AI专业干货知识教程视频资料和与专家交流咨询!

请加专知小助手微信(扫一扫如下二维码添加),加入专知人工智能主题群,咨询《深度学习:算法到实战》课程,咨询技术商务合作~

请PC登录www.zhuanzhi.ai或者点击阅读原文,注册登录专知,获取更多AI知识资料!

点击“阅读原文”,了解报名专知《深度学习:算法到实战》课程