DeepMind researcher Tor's 2019 book "Bandit Algorithms": 555 pages on the technique that cures choice paralysis

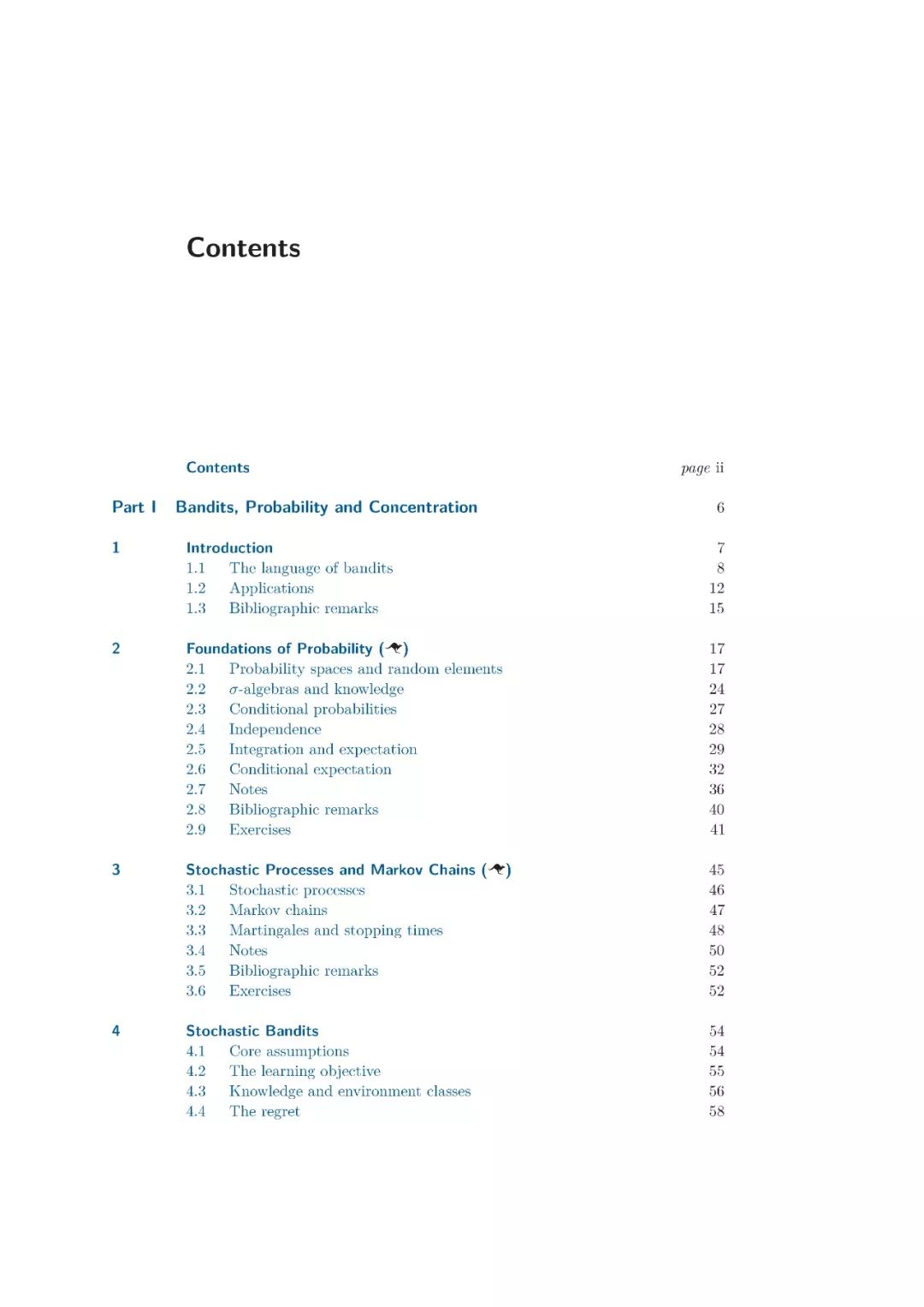

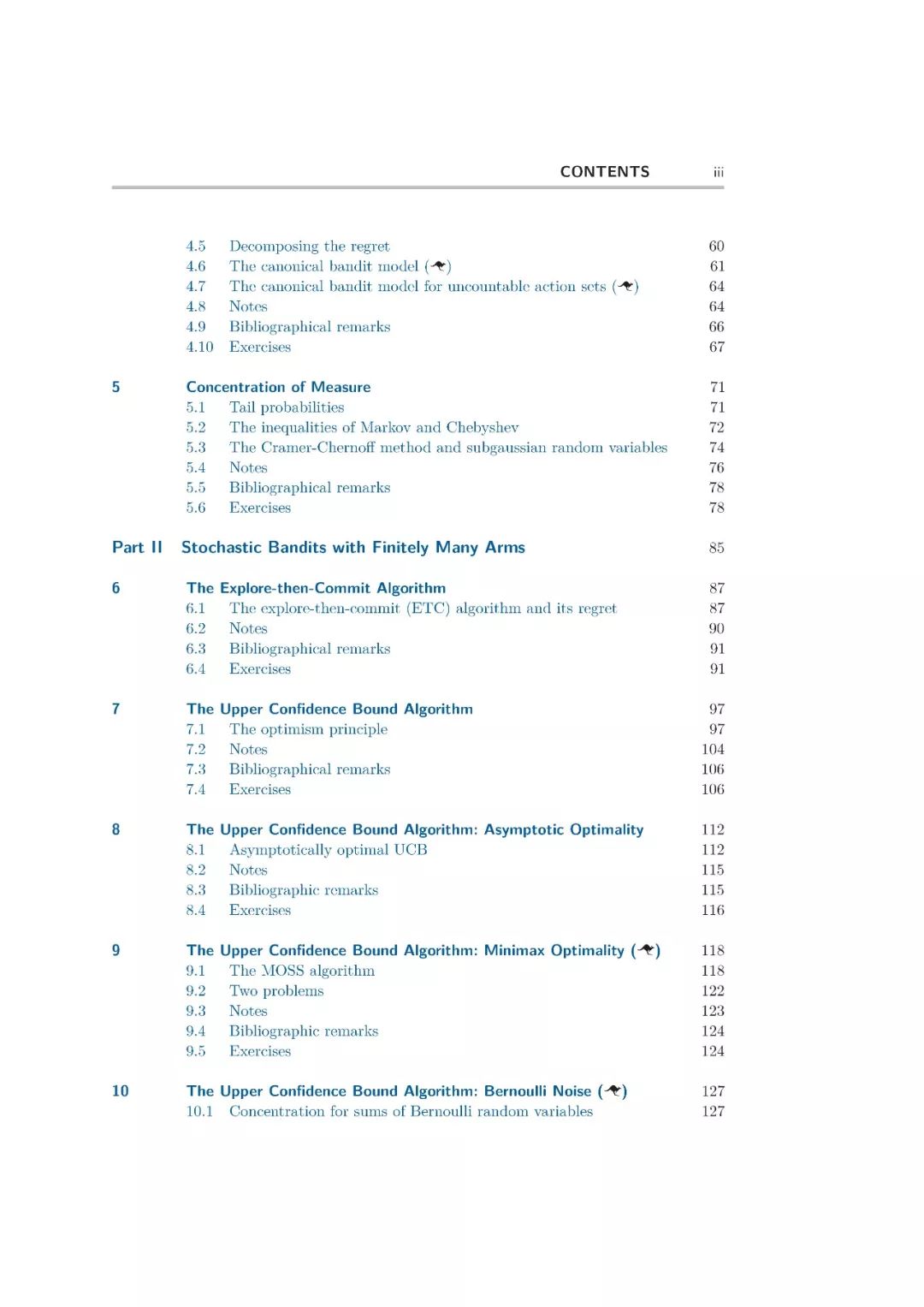

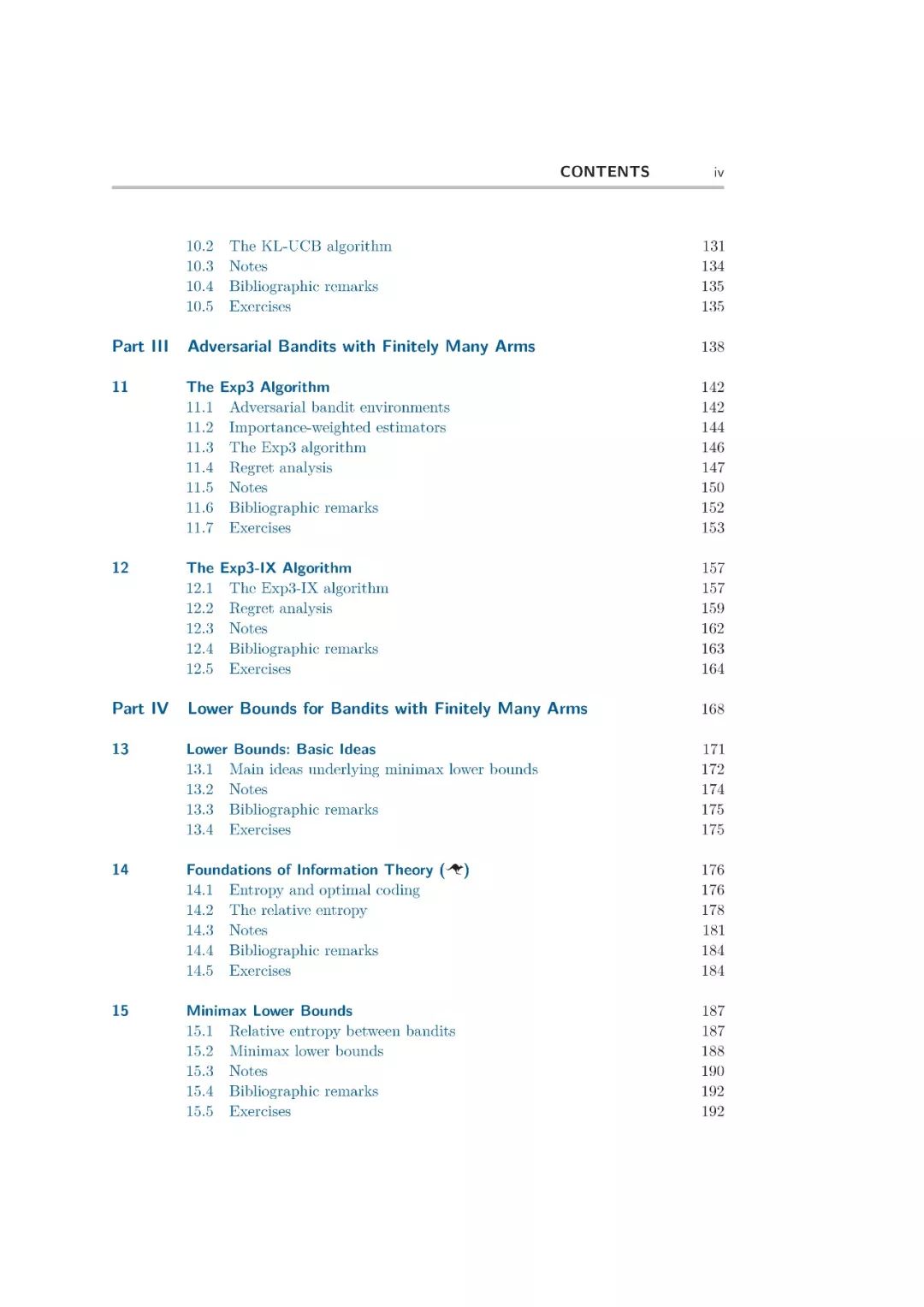

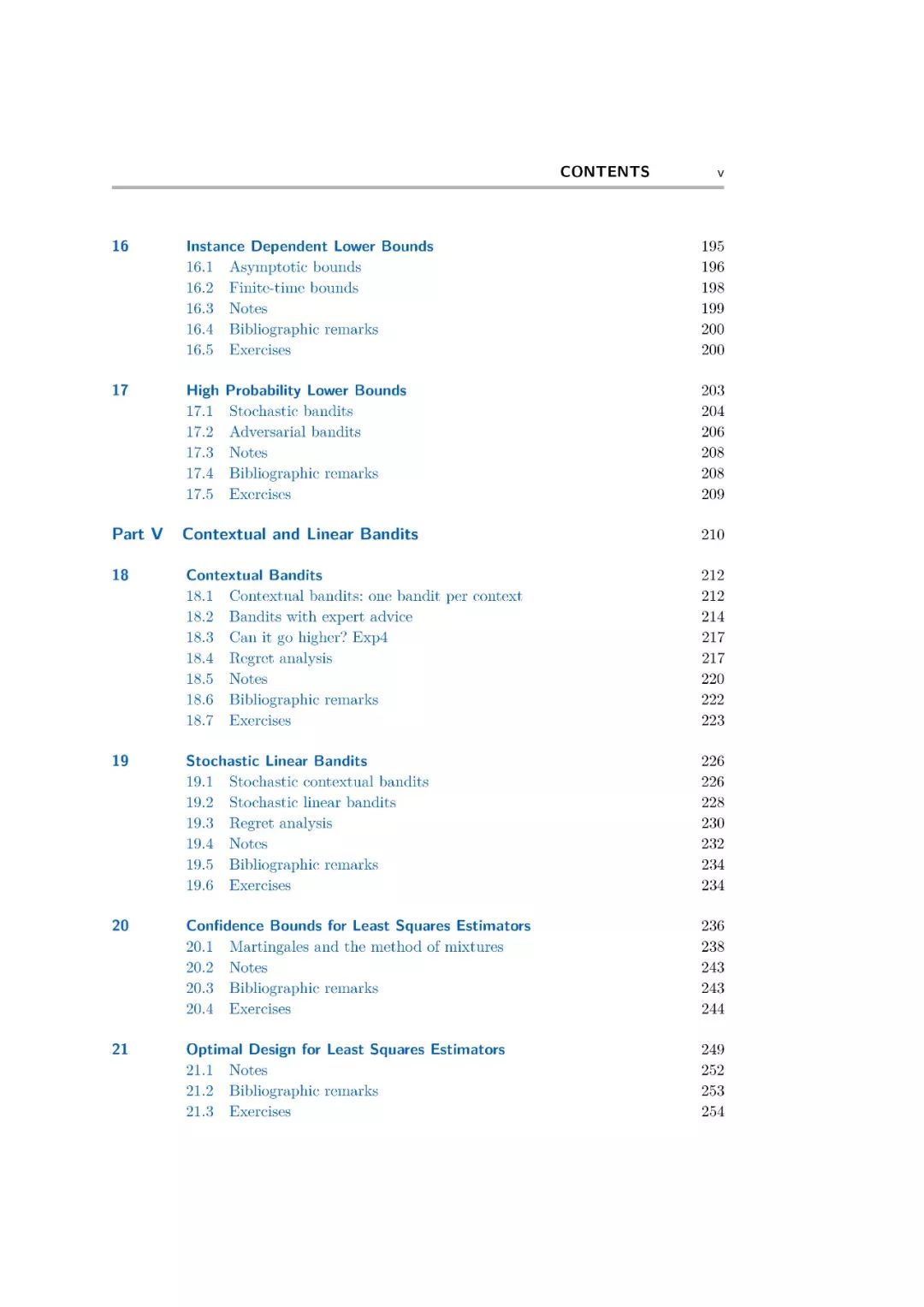

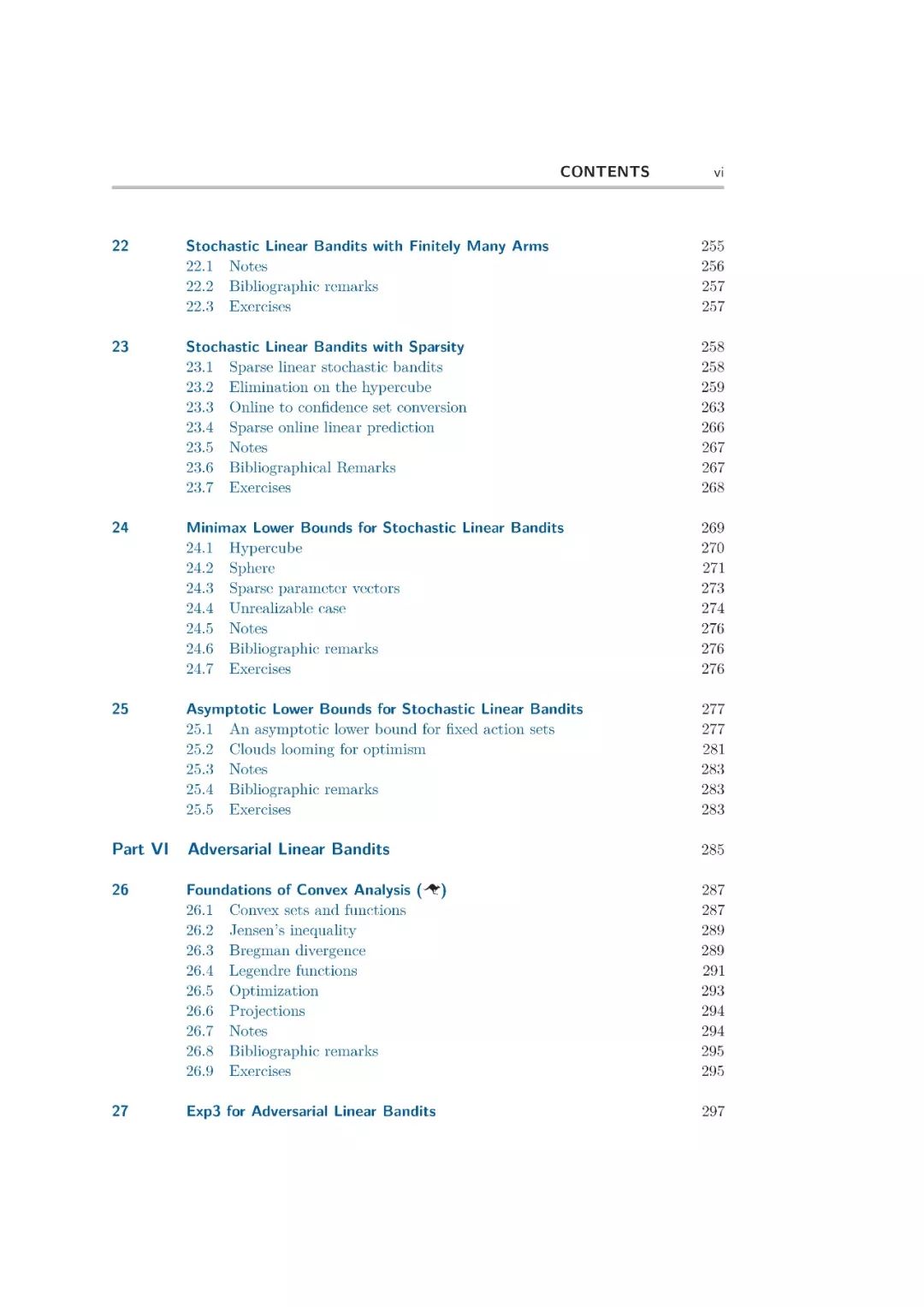

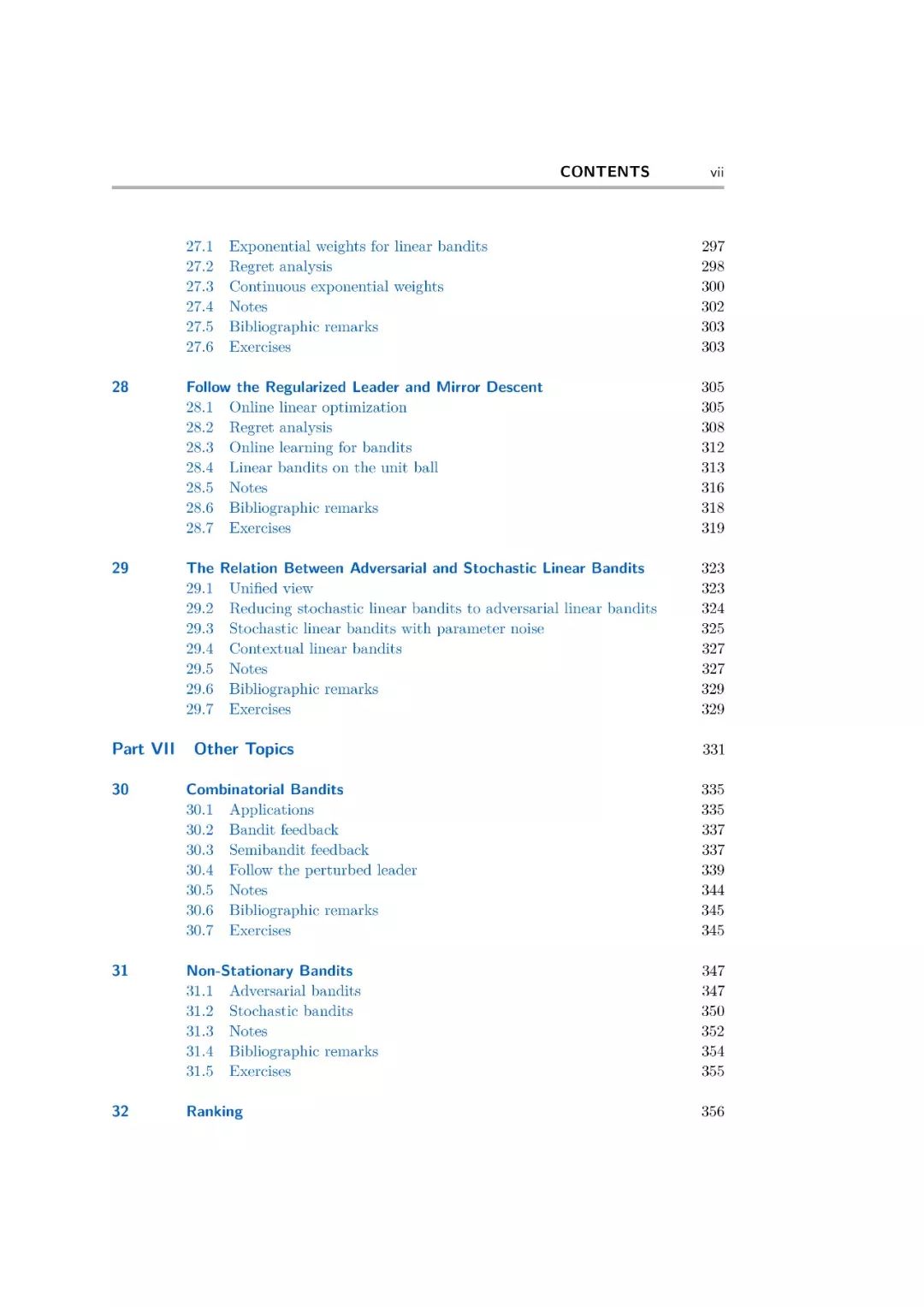

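[Overview] Bandit algorithms address the problem of allocating limited resources among competing choices so as to maximize reward. Everyone from nations to individuals faces choices, and choices shape destiny. How to choose is a matter of skill. "Bandit Algorithms", written by DeepMind scientist Tor Lattimore and Csaba Szepesvári and published by Cambridge University Press, gives a comprehensive account of the foundations, algorithms, and applications of bandit algorithms, and is the latest systematic resource for learning the subject.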

Tor Lattimore

http://tor-lattimore.com/

The Bandit Problem: Choosing Is a Skill

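Choices shape destiny. Primary and middle school entrance exams, the college entrance exam, graduate entrance exams, job hunting: each one is a checkpoint, and each one gives those of us with choice paralysis a headache. Is there any way to deal with these problems?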

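The answer is yes! And it is a genuinely scientific method, not the sensationalist pseudo-scientific kind. That method is the bandit algorithm!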

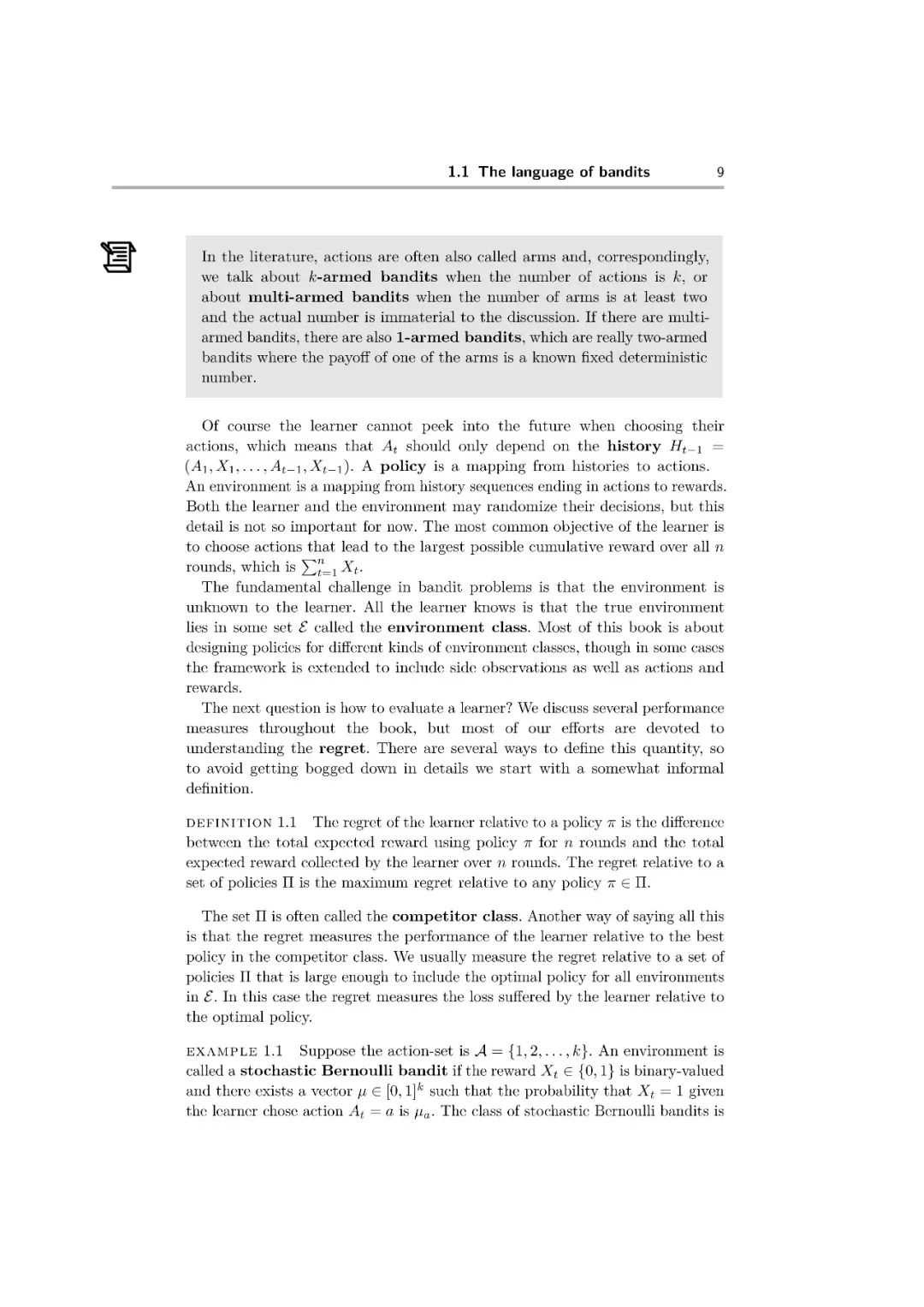

Bandit algorithms originate from everyone's favorite pastime, gambling. The problem they address is the following [1]:

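A gambler walks into a casino to play the slot machines and finds a row of machines that look identical, but each pays out with a different probability. He does not know the payout distribution of any machine. How should he play to maximize his winnings? This is the multi-armed bandit problem (also called the K-armed bandit problem, MAB).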
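One standard way to make "maximize his winnings" precise is regret, the gap between what the gambler earns and what he would have earned by always playing the best machine. The notation below is chosen for this sketch: there are K machines (arms), arm i pays rewards with unknown mean \mu_i, X_t is the reward received in round t, and T_i(n) is the number of times arm i is played in the first n rounds.

$$
R_n = n\mu^* - \mathbb{E}\left[\sum_{t=1}^{n} X_t\right], \qquad \mu^* = \max_{1 \le i \le K} \mu_i
$$

$$
R_n = \sum_{i=1}^{K} \Delta_i \, \mathbb{E}\left[T_i(n)\right], \qquad \Delta_i = \mu^* - \mu_i
$$

Maximizing expected total winnings is the same as minimizing R_n, and the second identity (valid for stochastic bandits) shows that a good strategy must play clearly inferior machines, those with a large gap \Delta_i, only rarely.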

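How do we solve this problem? Pray to the Buddha? Worship the god of gambling? Neither works. The best approach is to try the machines, not blindly but strategically, and to identify the good machines as quickly as possible. These strategies are bandit algorithms.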
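To make "trying strategically" concrete, here is a minimal Python sketch of one classic strategy, the UCB1 rule: play each machine once, then always play the machine whose empirical mean plus a confidence bonus is largest. The function name `ucb1` and the payout probabilities are hypothetical and exist only to simulate the casino; this is an illustration, not the book's code.

```python
import math
import random


def ucb1(true_means, n_rounds, seed=0):
    """Play a simulated Bernoulli K-armed bandit with UCB1.

    true_means: hypothetical payout probabilities, hidden from the algorithm
    and used only to simulate each slot machine.
    Returns (pulls per arm, total reward).
    """
    rng = random.Random(seed)
    k = len(true_means)
    counts = [0] * k      # how many times each arm has been pulled
    sums = [0.0] * k      # total reward collected from each arm
    total_reward = 0.0

    for t in range(1, n_rounds + 1):
        if t <= k:
            arm = t - 1   # pull every arm once so each estimate is defined
        else:
            # Optimism under uncertainty: empirical mean plus a bonus that
            # shrinks as an arm is pulled more often.
            arm = max(
                range(k),
                key=lambda i: sums[i] / counts[i]
                + math.sqrt(2 * math.log(t) / counts[i]),
            )
        reward = 1.0 if rng.random() < true_means[arm] else 0.0  # simulated payout
        counts[arm] += 1
        sums[arm] += reward
        total_reward += reward

    return counts, total_reward


if __name__ == "__main__":
    machines = [0.1, 0.5, 0.8]  # hypothetical payout probabilities
    counts, reward = ucb1(machines, n_rounds=10_000)
    print("pulls per machine:", counts)
    print("total reward:", reward)
```

The bonus term keeps uncertain machines in play early on, and because it decays with each pull, exploration tapers off automatically and most pulls go to the best machine.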

The multi-armed bandit is a general-purpose framework that can accommodate many problems:

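1. Suppose a user is interested in different categories of content to different degrees. When our recommender system meets this user for the first time, how can it quickly learn how interested they are in each category? This is the cold-start problem in recommender systems.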

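2. Suppose we have a pool of ads. How do we decide which ad to show each user to maximize click revenue? Should we always pick the best-performing ad? Then how would a new ad ever get its chance?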

3. Our algorithm engineers have come up with a new model. Is there a faster way than an A/B test to find out whether it is better than the old one?

4. ...
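Scenario 2 above, showing ads without starving new ones, can be sketched with a different bandit strategy swapped in for illustration: Beta-Bernoulli Thompson sampling. For each impression it draws a plausible click-through rate for every ad from that ad's Beta posterior and shows the ad with the highest draw, so an ad with little data still wins some draws. The function name `thompson_ads` and the click-through rates are hypothetical, used only to simulate user clicks.

```python
import random


def thompson_ads(true_ctrs, n_impressions, seed=0):
    """Choose which ad to show using Beta-Bernoulli Thompson sampling.

    true_ctrs: hypothetical click-through rates, hidden from the algorithm
    and used only to simulate whether a user clicks.
    Returns (impressions per ad, total clicks).
    """
    rng = random.Random(seed)
    k = len(true_ctrs)
    clicks = [0] * k   # impressions that led to a click, per ad
    skips = [0] * k    # impressions without a click, per ad
    total_clicks = 0

    for _ in range(n_impressions):
        # Draw a plausible CTR for each ad from its Beta(1 + clicks, 1 + skips)
        # posterior; an ad with little data has a wide posterior.
        samples = [rng.betavariate(1 + clicks[i], 1 + skips[i]) for i in range(k)]
        ad = max(range(k), key=lambda i: samples[i])
        if rng.random() < true_ctrs[ad]:  # simulated user response
            clicks[ad] += 1
            total_clicks += 1
        else:
            skips[ad] += 1

    impressions = [clicks[i] + skips[i] for i in range(k)]
    return impressions, total_clicks


if __name__ == "__main__":
    ctrs = [0.05, 0.10, 0.20]  # hypothetical click-through rates
    impressions, clicks = thompson_ads(ctrs, n_impressions=10_000)
    print("impressions per ad:", impressions)
    print("total clicks:", clicks)
```

Unlike always showing the current best ad, this rule keeps giving weaker or newer ads occasional impressions until the data shows they are worse, which is the exploration the question above asks for.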

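All of these are problems of choice, and almost any problem of choice can be framed as a multi-armed bandit problem. After all, a little gambling is good for the soul, and where in life is there no gamble?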

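Bandit problems are widely applied in computational advertising, recommender systems, medical trials, smart grids, crowdsourcing, and other fields.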

References:

[1] https://zhuanlan.zhihu.com/p/21388070

Bandit Algorithms

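The multi-armed bandit problem has been studied for nearly a century. Although early research progressed fitfully, today a large community publishes hundreds of papers every year. Bandit algorithms have also found their way into practical industrial applications, especially on online platforms, where data is easy to obtain and automation is the only way to scale.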

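We wanted to write a comprehensive book, but the literature has grown so large that many topics had to be left out. In the end we settled on the more practical goal of giving readers enough expertise to explore the specialized literature on their own and to adapt existing algorithms to their applications. The latter point is important. As Tolstoy might have written, "Theoretical problems are all alike; every application is different in its own way." Practitioners who want to apply bandit algorithms must understand which assumptions in the theory matter and how to modify the algorithms when those assumptions change. We hope this book provides that understanding.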

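The book covers its material in some depth. The focus is the mathematical analysis of algorithms for bandit problems, but this is not a traditional mathematics book in which lemmas are followed by proofs, theorems, and more lemmas. We have tried to include the guiding principles behind designing algorithms and the intuition behind analyzing them. Many algorithms are accompanied by empirical demonstrations that further aid intuition.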
