[AutoML Essentials] Automated Machine Learning: State of the Art and Open Challenges

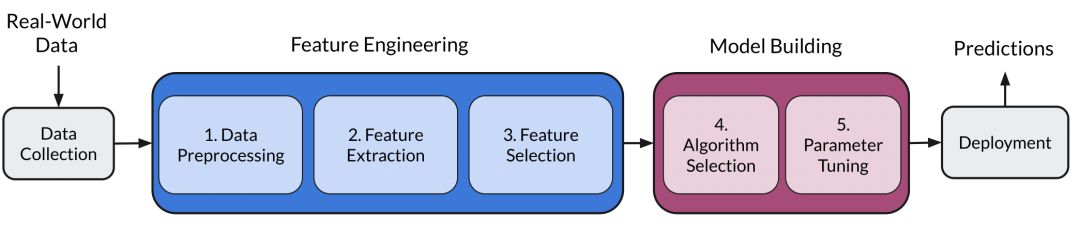

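[Editor's note] Automated machine learning (AutoML) is a hot topic in both academia and industry. Radwa Elshawi and colleagues at the University of Tartu recently wrote a survey of progress in AutoML. It covers the concept of AutoML, meta-learning, neural architecture search, and hyperparameter optimization, and cites 137 references. It is well worth reading.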

https://github.com/DataSystemsGroupUT/AutoML_Survey

Advances in Automated Machine Learning: Papers and References

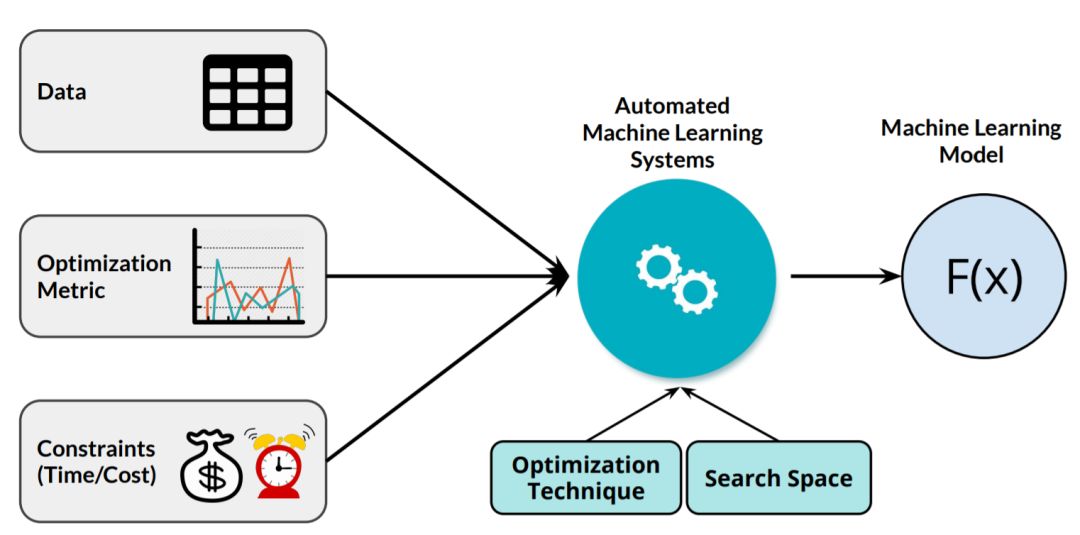

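As the amount of data in our digital world keeps growing, it has become clear that the number of knowledgeable data scientists cannot scale to meet the demand. Automating the process of building good machine learning models is therefore crucial. Over the past few years, several techniques and frameworks have been introduced to automate Combined Algorithm Selection and Hyperparameter tuning (CASH) in machine learning. Their main aim is to reduce the role of the human in the loop and to fill the gap for non-expert machine learning users by playing the role of the domain expert.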
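For orientation, the CASH problem is often written as a single joint minimization over algorithms and their hyperparameters. The formulation below follows the common Auto-WEKA-style statement; the post itself does not give a formula, so the notation is an assumption: $\mathcal{A}$ is the set of candidate algorithms, $\Lambda^{(j)}$ is the hyperparameter space of algorithm $A^{(j)}$, $\mathcal{L}$ is the validation loss, and the data are split into $k$ cross-validation folds.

```latex
A^{*}_{\lambda^{*}} \in \operatorname*{arg\,min}_{A^{(j)} \in \mathcal{A},\; \lambda \in \Lambda^{(j)}}
\frac{1}{k} \sum_{i=1}^{k}
\mathcal{L}\!\left(A^{(j)}_{\lambda},\; D^{(i)}_{\text{train}},\; D^{(i)}_{\text{valid}}\right)
```

Read this way, choosing the algorithm and tuning its hyperparameters are one search problem rather than two separate steps.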

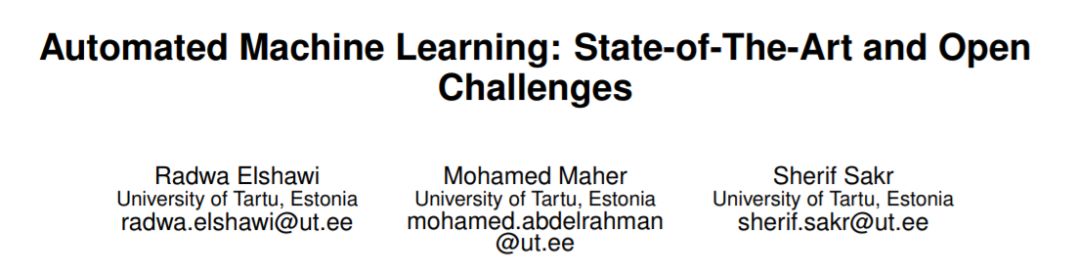

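In this paper, we present a comprehensive survey of state-of-the-art efforts to tackle the CASH problem. We also highlight work on automating the other steps of the full machine learning pipeline (AutoML), from data understanding to model deployment, and we give comprehensive coverage of the tools and frameworks introduced in this area. Finally, we discuss research directions and open challenges that must be addressed to achieve the vision and goals of the AutoML process.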

Traditional machine learning pipeline

1. Meta-Learning

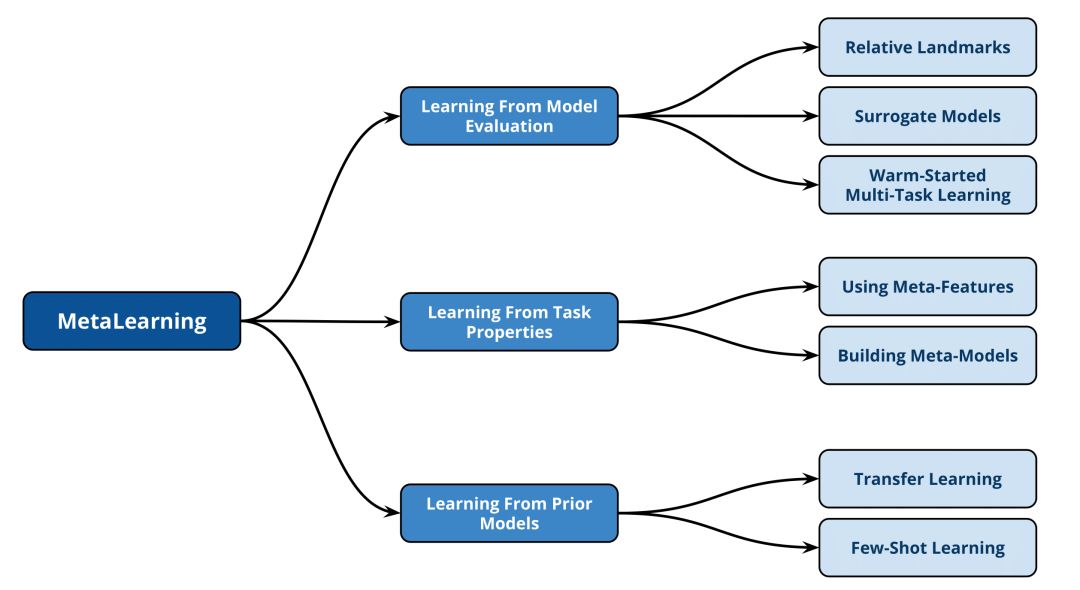

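Meta-learning can be described as learning from the experience gained by applying different learning algorithms to different kinds of data, which reduces the time needed to learn new tasks.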
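One common way to reuse such experience is to describe each dataset by a few meta-features and to warm-start a new task with the configurations that worked best on the most similar earlier datasets. The following is a minimal sketch under stated assumptions: the meta-knowledge base, the three meta-features, and the stored configurations are all hypothetical illustration values, not taken from the survey.

```python
import math

# Hypothetical meta-knowledge base. Each entry stores the meta-features of a
# previously seen dataset (log10 #rows, log10 #features, class entropy) and
# the configuration that performed best on it. All values are made up.
META_BASE = [
    {"meta": (3.0, 1.0, 0.9), "best": {"algo": "random_forest", "n_estimators": 200}},
    {"meta": (5.0, 2.5, 0.3), "best": {"algo": "linear_svm", "C": 0.1}},
    {"meta": (2.0, 0.5, 1.0), "best": {"algo": "knn", "k": 5}},
]


def distance(a, b):
    """Euclidean distance between two meta-feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def warm_start(new_meta, base=META_BASE, top_k=2):
    """Return the best-known configurations of the top_k most similar datasets."""
    ranked = sorted(base, key=lambda entry: distance(entry["meta"], new_meta))
    return [entry["best"] for entry in ranked[:top_k]]


# A new task whose meta-features resemble the first stored dataset.
suggestions = warm_start((2.8, 1.1, 0.8))
```

The returned configurations would typically serve as the first candidates an optimizer evaluates, instead of starting from random points.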

Meta-Learning Taxonomy

2018 | Meta-Learning: A Survey. | Vanschoren | CoRR |

2008 | Metalearning: Applications to data mining. | Brazdil et al. | Springer Science & Business Media |

Learning from Model Evaluations

Surrogate Models

2018 | Scalable Gaussian process-based transfer surrogates for hyperparameter optimization. | Wistuba et al. | Journal of ML |

Warm-Started Multi-task Learning

2017 | Multiple adaptive Bayesian linear regression for scalable Bayesian optimization with warm start. | Perrone et al. |

Relative Landmarks

2001 | An evaluation of landmarking variants. | Fürnkranz and Petrak | ECML/PKDD |

Learning from Task Properties

Using Meta-Features

2019 | SmartML: A Meta Learning-Based Framework for Automated Selection and Hyperparameter Tuning for Machine Learning Algorithms. | Maher and Sakr | EDBT |

2017 | On the predictive power of meta-features in OpenML. | Bilalli et al. | IJAMC |

2013 | Collaborative hyperparameter tuning. | Bardenet et al. | ICML |

Using Meta-Models

2018 | Predicting hyperparameters from meta-features in binary classification problems. | Nisioti et al. | ICML |

2014 | Automatic classifier selection for non-experts. | Reif et al. | Pattern Analysis and Applications |

2012 | Imagenet classification with deep convolutional neural networks. | Krizhevsky et al. | NIPS |

2008 | Predicting the performance of learning algorithms using support vector machines as meta-regressors. | Guerra et al. | ICANN |

2008 | Metalearning-a tutorial. | Giraud-Carrier | ICMLA |

2004 | Metalearning: Applications to data mining. | Soares et al. | Springer Science & Business Media |

2004 | Selection of time series forecasting models based on performance information. | dos Santos et al. | HIS |

2003 | Ranking learning algorithms: Using IBL and meta-learning on accuracy and time results. | Brazdil et al. | Journal of ML |

2002 | Combination of task description strategies and case base properties for meta-learning. | Kopf and Iglezakis |

Learning from Prior Models

Transfer Learning

2014 | How transferable are features in deep neural networks? | Yosinski et al. | NIPS |

2014 | CNN features off-the-shelf: an astounding baseline for recognition. | Sharif Razavian et al. | IEEE CVPR |

2014 | Decaf: A deep convolutional activation feature for generic visual recognition. | Donahue et al. | ICML |

2012 | Imagenet classification with deep convolutional neural networks. | Krizhevsky et al. | NIPS |

2012 | Deep learning of representations for unsupervised and transfer learning. | Bengio | ICML |

2010 | A survey on transfer learning. | Pan and Yang | IEEE TKDE |

1995 | Learning many related tasks at the same time with backpropagation. | Caruana | NIPS |

1995 | Learning internal representations. | Baxter |

Few-Shot Learning

2017 | Prototypical networks for few-shot learning. | Snell et al. | NIPS |

2018 | Meta-Learning: A Survey. | Vanschoren | CoRR |

2016 | Optimization as a model for few-shot learning. | Ravi and Larochelle |

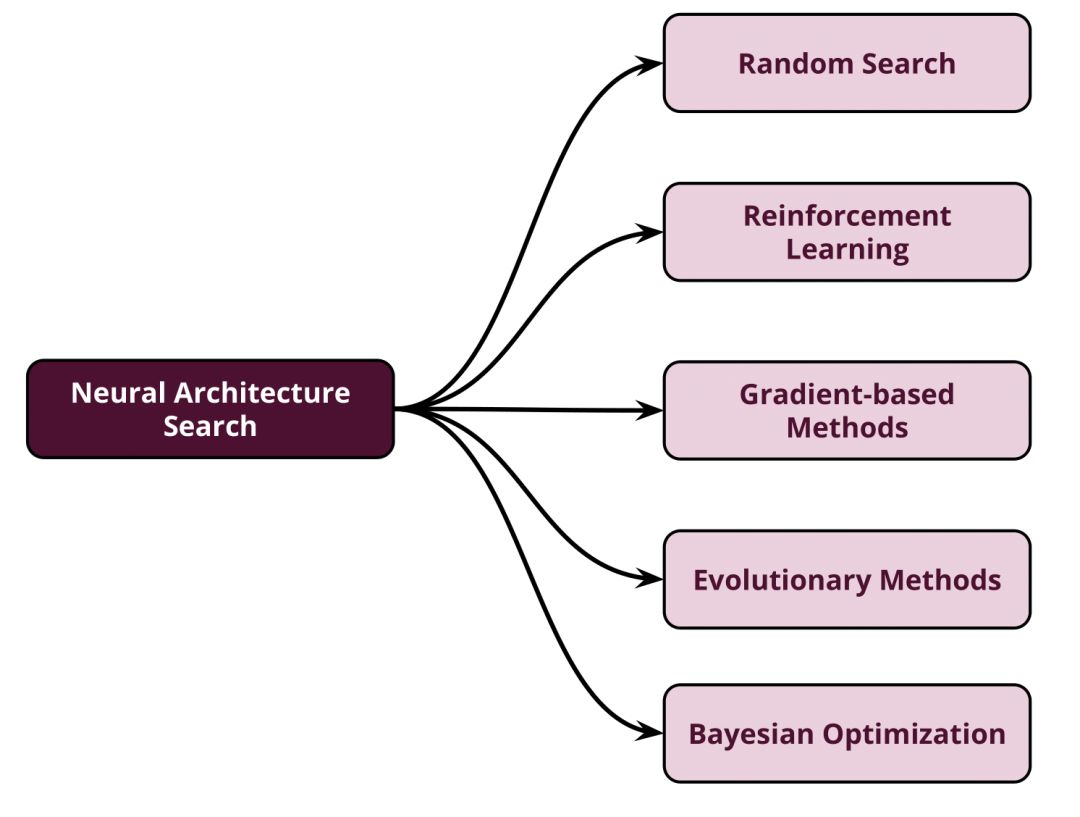

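2. Neural Architecture Search

Neural architecture search (NAS) is a fundamental step in automating the machine learning process. It has been used successfully to design model architectures for image and language tasks.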
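The search strategies listed in this section (random search, reinforcement learning, evolution, gradient-based methods, Bayesian optimization) all explore a space of candidate architectures. As a rough illustration of the evolutionary family only, here is a minimal mutation-based search over a toy encoding. Everything in it is an assumption for illustration: an architecture is just a list of layer widths, and `fitness` is a synthetic stand-in for the validation accuracy that a real system would obtain by training each candidate. It is not the method of any paper listed here.

```python
import random

# Hypothetical toy search space: an architecture is a list of layer widths.
WIDTHS = [16, 32, 64, 128]
MAX_LAYERS = 6


def random_arch(rng):
    """Sample an architecture with 1..MAX_LAYERS layers of random width."""
    return [rng.choice(WIDTHS) for _ in range(rng.randint(1, MAX_LAYERS))]


def mutate(arch, rng):
    """Return a copy of arch with one layer added, removed, or resized."""
    child = list(arch)
    op = rng.choice(["add", "remove", "resize"])
    if op == "add" and len(child) < MAX_LAYERS:
        child.insert(rng.randrange(len(child) + 1), rng.choice(WIDTHS))
    elif op == "remove" and len(child) > 1:
        child.pop(rng.randrange(len(child)))
    else:
        # Fallback when the chosen operation is not applicable: resize a layer.
        child[rng.randrange(len(child))] = rng.choice(WIDTHS)
    return child


def fitness(arch):
    """Synthetic score (assumption): prefers about 3 layers of width 64."""
    return -abs(len(arch) - 3) - sum(abs(w - 64) for w in arch) / 64.0


def evolve(generations=200, population_size=10, seed=0):
    rng = random.Random(seed)
    population = [random_arch(rng) for _ in range(population_size)]
    for _ in range(generations):
        # Tournament selection: the best of 3 random individuals is the parent.
        parent = max(rng.sample(population, 3), key=fitness)
        child = mutate(parent, rng)
        # The child replaces the current worst individual only if it is better.
        worst = min(range(population_size), key=lambda i: fitness(population[i]))
        if fitness(child) > fitness(population[worst]):
            population[worst] = child
    return max(population, key=fitness)


best_arch = evolve()
```

In a real NAS system the cost is dominated by `fitness`, since every call means training a network, which is why much of the literature below focuses on cheaper evaluation.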

2018 | Progressive neural architecture search. | Liu et al. | ECCV |

2018 | Efficient architecture search by network transformation. | Cai et al. | AAAI |

2018 | Learning transferable architectures for scalable image recognition. | Zoph et al. | IEEE CVPR |

2017 | Hierarchical representations for efficient architecture search. | Liu et al. |

2016 | Neural architecture search with reinforcement learning. | Zoph and Le |

2009 | Learning deep architectures for AI. | Bengio et al. |

Random Search

2019 | Random Search and Reproducibility for Neural Architecture Search. | Li and Talwalkar |

2017 | Train Longer, Generalize Better: Closing the Generalization Gap in Large Batch Training of Neural Networks. | Hoffer et al. | NIPS |

Reinforcement Learning

2016 | Neural architecture search with reinforcement learning. | Zoph and Le |

2016 | Designing neural network architectures using reinforcement learning. | Baker et al. |

Evolutionary Methods

2019 | Evolutionary Neural AutoML for Deep Learning. | Liang et al. |

2019 | Evolving deep neural networks. | Miikkulainen et al. |

2018 | NSGA-Net: A multi-objective genetic algorithm for neural architecture search. | Lu et al. |

2018 | Efficient multi-objective neural architecture search via Lamarckian evolution. | Elsken et al. |

2018 | Regularized evolution for image classifier architecture search. | Real et al. |

2017 | Large-scale evolution of image classifiers. | Real et al. | ICML |

2017 | Hierarchical representations for efficient architecture search. | Liu et al. |

2009 | A hypercube-based encoding for evolving large-scale neural networks. | Stanley et al. | Artificial Life |

2002 | Evolving neural networks through augmenting topologies. | Stanley and Miikkulainen | Evolutionary Computation |

Gradient-Based Methods

2018 | Differentiable neural network architecture search. | Shin et al. |

2018 | DARTS: Differentiable architecture search. | Liu et al. |

2018 | MaskConnect: Connectivity Learning by Gradient Descent. | Ahmed and Torresani |

Bayesian Optimization

2018 | Towards reproducible neural architecture and hyperparameter search. | Klein et al. |

2018 | Neural Architecture Search with Bayesian Optimisation and Optimal Transport. | Kandasamy et al. | NIPS |

2016 | Towards automatically-tuned neural networks. | Mendoza et al. | PMLR |

2015 | Speeding up automatic hyperparameter optimization of deep neural networks by extrapolation of learning curves. | Domhan et al. | IJCAI |

2014 | Raiders of the lost architecture: Kernels for Bayesian optimization in conditional parameter spaces. | Swersky et al. |

2013 | Making a science of model search: Hyperparameter optimization in hundreds of dimensions for vision architectures. | Bergstra et al. |

2011 | Algorithms for hyper-parameter optimization. | Bergstra et al. | NIPS |

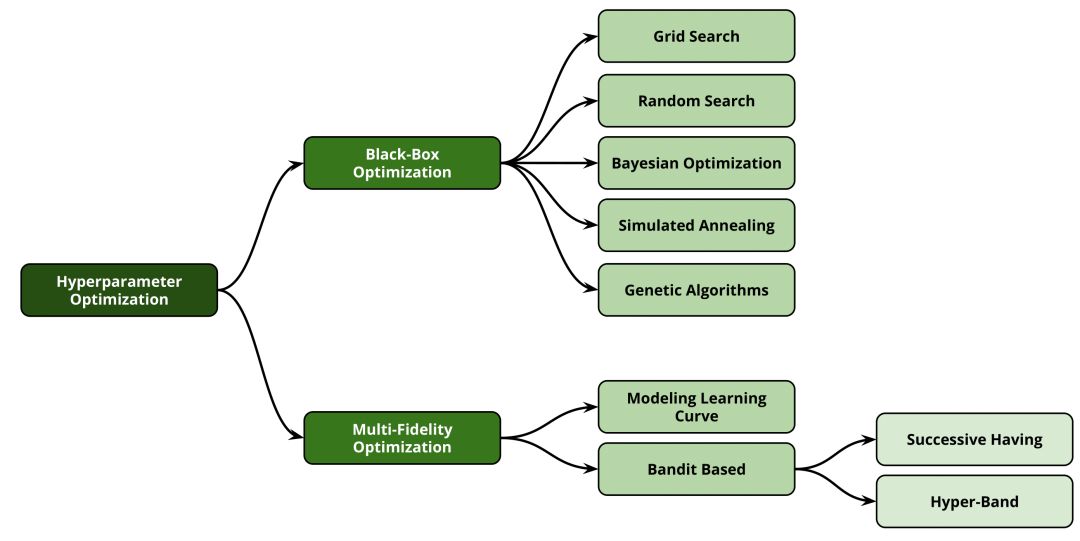

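3. Hyperparameter Optimization

Once the pipeline algorithm with the highest potential to perform well on the input dataset has been selected, the next step is to tune that model's hyperparameters to improve its performance further. It is worth noting that some tools treat the space of different learning algorithms as a discrete set of model pipelines. Model selection itself can then be viewed as a categorical parameter that must be tuned first, before the hyperparameters of the chosen model are adjusted.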
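To make the idea of model selection as a categorical parameter concrete, here is a minimal random-search sketch over a joint space in which the algorithm is sampled first and its own hyperparameters are sampled conditionally. The search space, the algorithm names, and especially `validation_loss` are assumptions for illustration: the loss is a synthetic formula standing in for actually training and validating a model.

```python
import random

# Hypothetical joint search space: the algorithm is a categorical choice, and
# each algorithm brings its own conditional hyperparameters with (low, high) bounds.
SPACE = {
    "svm": {"C": (0.01, 100.0)},
    "random_forest": {"max_depth": (2, 20)},
}


def sample_config(rng):
    """Sample the categorical algorithm first, then its hyperparameters."""
    algo = rng.choice(sorted(SPACE))
    params = {}
    for name, (low, high) in SPACE[algo].items():
        if isinstance(low, int):
            params[name] = rng.randint(low, high)   # integer-valued parameter
        else:
            params[name] = rng.uniform(low, high)   # real-valued parameter
    return algo, params


def validation_loss(algo, params):
    """Synthetic stand-in (assumption) for training and validating a model."""
    if algo == "svm":
        return 0.20 + abs(params["C"] - 1.0) / 1000.0
    return 0.15 + abs(params["max_depth"] - 8) / 100.0


def random_search(n_trials=50, seed=0):
    """Return (loss, algorithm, hyperparameters) of the best sampled configuration."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        algo, params = sample_config(rng)
        loss = validation_loss(algo, params)
        if best is None or loss < best[0]:
            best = (loss, algo, params)
    return best


best_loss, best_algo, best_params = random_search()
```

The same conditional structure carries over to the Bayesian optimization and bandit-based methods listed below; only the rule for choosing the next configuration changes.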

Black-Box Optimization

Grid and Random Search

2017 | Design and analysis of experiments. | Montgomery |

2015 | Adaptive control processes: a guided tour. | Bellman |

2012 | Random search for hyper-parameter optimization. | Bergstra and Bengio | JMLR |

Bayesian Optimization

2018 | BOHB: Robust and efficient hyperparameter optimization at scale. | Falkner et al. | JMLR |

2017 | On the state of the art of evaluation in neural language models. | Melis et al. |

2015 | Automating model search for large scale machine learning. | Sparks et al. | ACM-SCC |

2015 | Scalable Bayesian optimization using deep neural networks. | Snoek et al. | ICML |

2014 | BayesOpt: A Bayesian optimization library for nonlinear optimization, experimental design and bandits. | Martinez-Cantin | JMLR |

2013 | Making a science of model search: Hyperparameter optimization in hundreds of dimensions for vision architectures. | Bergstra et al. |

2013 | Towards an empirical foundation for assessing Bayesian optimization of hyperparameters. | Eggensperger et al. | NIPS |

2013 | Improving deep neural networks for LVCSR using rectified linear units and dropout. | Dahl et al. | IEEE-ICASSP |

2012 | Practical Bayesian optimization of machine learning algorithms. | Snoek et al. | NIPS |

2011 | Sequential model-based optimization for general algorithm configuration. | Hutter et al. | LION |

2011 | Algorithms for hyper-parameter optimization. | Bergstra et al. | NIPS |

1998 | Efficient global optimization of expensive black-box functions. | Jones et al. |

1978 | The application of Bayesian methods for seeking the extremum. | Mockus et al. |

1975 | Single-step Bayesian search method for an extremum of functions of a single variable. | Zhilinskas |

1964 | A new method of locating the maximum point of an arbitrary multipeak curve in the presence of noise. | Kushner |

Simulated Annealing

1983 | Optimization by simulated annealing. | Kirkpatrick et al. | Science |

Genetic Algorithms

1992 | Adaptation in natural and artificial systems: an introductory analysis with applications to biology, control, and artificial intelligence. | Holland et al. |

Multi-Fidelity Optimization

2019 | Multi-Fidelity Automatic Hyper-Parameter Tuning via Transfer Series Expansion. | Hu et al. |

2016 | Review of multi-fidelity models. | Fernandez-Godino |

2012 | Provably convergent multifidelity optimization algorithm not requiring high-fidelity derivatives. | March and Willcox | AIAA |

Modeling Learning Curves

2015 | Speeding up automatic hyperparameter optimization of deep neural networks by extrapolation of learning curves. | Domhan et al. | IJCAI |

1998 | Efficient global optimization of expensive black-box functions. | Jones et al. | JGO |

Bandit-Based Methods

2016 | Non-stochastic Best Arm Identification and Hyperparameter Optimization. | Jamieson and Talwalkar | AISTATS |

2016 | Hyperband: A novel bandit-based approach to hyperparameter optimization. | Li et al. | JMLR |

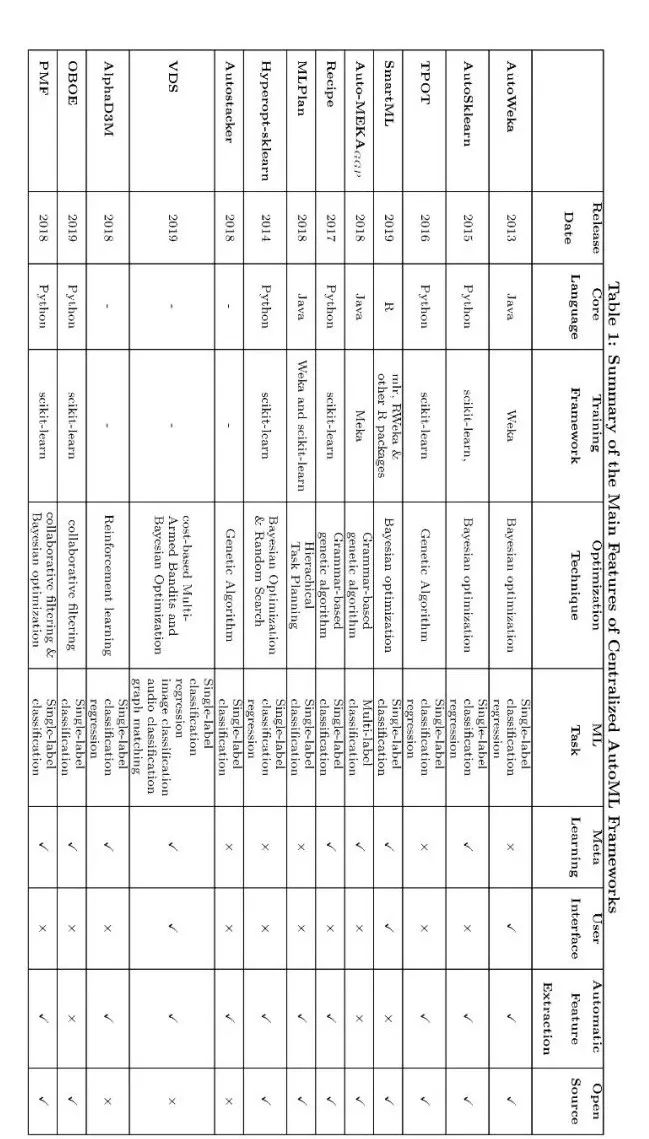

4. AutoML Tools and Frameworks

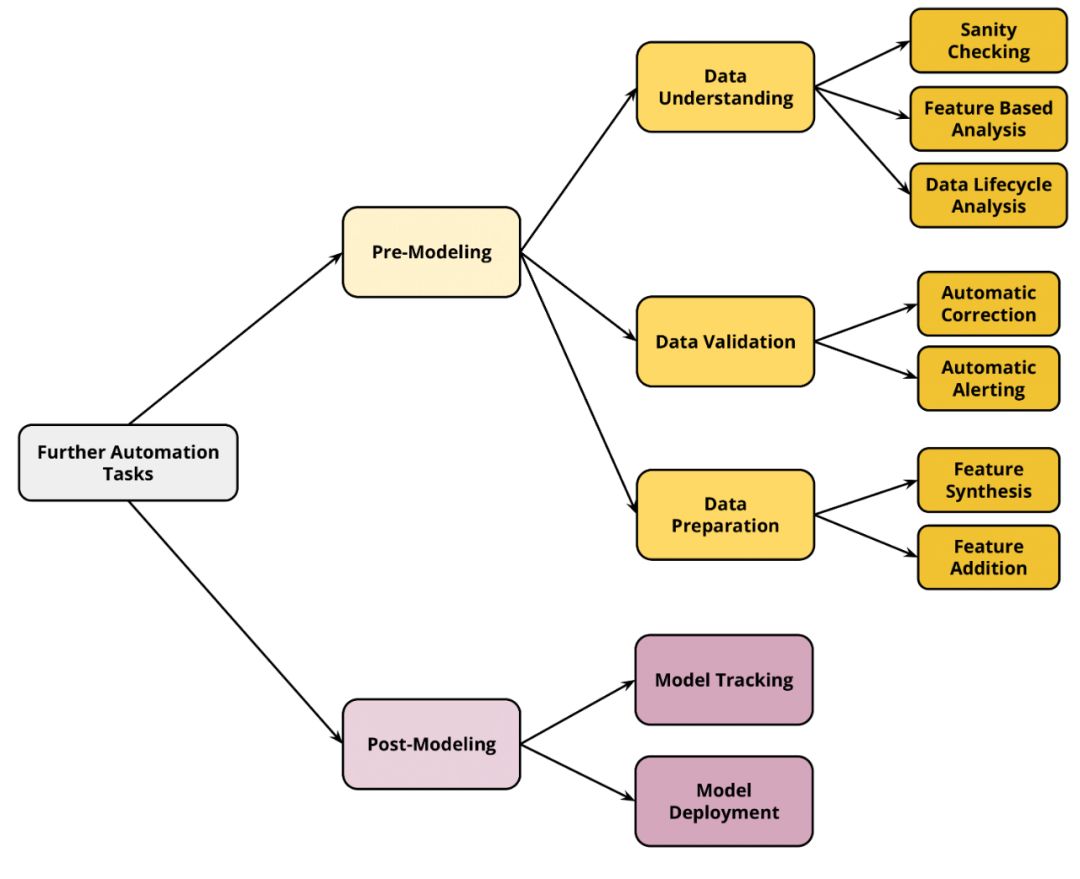

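5. Pre-Modeling and Post-Modeling Aiding Tools

Although current automation tools and frameworks have minimized the data scientist's role in the modeling part and saved considerable effort, several aspects still require human intervention and interpretability in order to make the right decisions that enhance and influence the modeling steps. These aspects belong to two main building blocks of the machine learning production pipeline: pre-modeling and post-modeling.

Aspects of these two building blocks can help cover what current automation tools miss, and help data scientists do their work in an easier, better organized, and more informed way.

6. Pre-Modeling Stage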

Data Understanding

2017 | Ground: A Data Context Service. | Hellerstein et al. | CIDR |

2016 | ProvDB: A System for Lifecycle Management of Collaborative Analysis Workflows. | Miao et al. | CoRR |

2016 | Goods: Organizing Google's Datasets. | Halevy et al. | SIGMOD |

2016 | Visual Exploration of Machine Learning Results Using Data Cube Analysis. | Kahng et al. | HILDA |

2015 | Smart Drill-down: A New Data Exploration Operator. | Joglekar et al. | VLDB |

2017 | Controlling False Discoveries During Interactive Data Exploration. | Zhao et al. | SIGMOD |

2016 | Data Exploration with Zenvisage: An Expressive and Interactive Visual Analytics System. | Siddiqui et al. | VLDB |

2015 | SEEDB: Efficient Data-Driven Visualization Recommendations to Support Visual Analytics. | Vartak et al. | PVLDB |

Data Validation

2009 | On Approximating Optimum Repairs for Functional Dependency Violations. | Kolahi and Lakshmanan | ICDT |

2005 | A Cost-based Model and Effective Heuristic for Repairing Constraints by Value Modification. | Bohannon et al. | SIGMOD |

2017 | MacroBase: Prioritizing Attention in Fast Data. | Bailis et al. | SIGMOD |

2015 | Data X-Ray: A Diagnostic Tool for Data Errors. | Wang et al. | SIGMOD |

Automatic Correction

Automatic Alerting

Data Preparation

2015 | Deep feature synthesis: Towards automating data science endeavors. | Kanter and Veeramachaneni | DSAA |

2018 | Google Search Engine for Datasets |

2014 | DataHub: Collaborative Data Science & Dataset Version Management at Scale. | Bhardwaj et al. | CoRR |

2013 | OpenML: Networked Science in Machine Learning. | Vanschoren et al. | SIGKDD |

2007 | UCI: Machine Learning Repository. | Dua, D. and Graff, C. |

2019 | Model Chimp |

2018 | ML-Flow |

2017 | Datmo |
