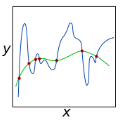

Invariant prediction uses the stability of causal relationships across different environments to identify causal variables. Conversely, predicting with causal variables yields guarantees even in out-of-sample settings. In this paper, we investigate how to identify causal-like models from in-sample data that ensure out-of-sample risk guarantees when predicting a target variable from an arbitrary set of covariates. Ordinary least squares minimizes in-sample risk but offers limited out-of-sample guarantees, while causal models optimize out-of-sample guarantees at the expense of in-sample performance. We introduce a form of *causal regularization* to balance these properties. In the population setting, higher regularization yields estimators with greater risk stability, albeit with increased in-sample risk. Empirically, however, there is a further trade-off to consider, as finite in-sample data reduces the ability to correctly identify models with strong out-of-sample risk guarantees. We show how, in such empirical settings, the optimal causal regularizer can be found via cross-validation.
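
To make the trade-off concrete, here is a minimal sketch that uses anchor regression (Rothenhäusler et al.) as a stand-in for causal regularization; it is not necessarily the estimator proposed in this paper. Assumptions: a single covariate with no intercept, discrete environments used as anchors, a toy data-generating process with a hidden confounder, and a leave-one-environment-out cross-validation rule that picks the γ with the smallest worst-case held-out risk. The helpers `anchor_coef`, `simulate`, `risk`, and `loeo_cv` are hypothetical. In this parameterization γ = 1 recovers OLS, γ = 0 uses only within-environment variation, and γ → ∞ approaches the instrumental-variable estimate.

```python
import random


def anchor_coef(x, y, env, gamma):
    """Anchor-regression slope for one covariate, no intercept (sketch).

    The anchor is a one-hot environment indicator, so projecting onto it
    replaces each value with its environment mean. The slope minimizes
        sum((wy - b*wx)^2) + gamma * sum((my - b*mx)^2),
    where w* are within-environment deviations and m* environment means.
    """
    sum_x, sum_y, counts = {}, {}, {}
    for xi, yi, e in zip(x, y, env):
        sum_x[e] = sum_x.get(e, 0.0) + xi
        sum_y[e] = sum_y.get(e, 0.0) + yi
        counts[e] = counts.get(e, 0) + 1
    mean_x = {e: sum_x[e] / counts[e] for e in counts}
    mean_y = {e: sum_y[e] / counts[e] for e in counts}
    num = den = 0.0
    for xi, yi, e in zip(x, y, env):
        mx, my = mean_x[e], mean_y[e]
        wx, wy = xi - mx, yi - my
        num += wx * wy + gamma * mx * my
        den += wx * wx + gamma * mx * mx
    return num / den


def simulate(n_per_env=1000, shifts=(-2.0, 0.0, 2.0), beta=1.0, seed=0):
    """Toy data (assumption): hidden confounder h; environments shift x only."""
    rng = random.Random(seed)
    x, y, env = [], [], []
    for e, s in enumerate(shifts):
        for _ in range(n_per_env):
            h = rng.gauss(0.0, 1.0)
            xi = s + h + rng.gauss(0.0, 1.0)
            yi = beta * xi + 2.0 * h + rng.gauss(0.0, 1.0)
            x.append(xi)
            y.append(yi)
            env.append(e)
    return x, y, env


def risk(x, y, b):
    """Mean squared prediction error of the slope b."""
    return sum((yi - b * xi) ** 2 for xi, yi in zip(x, y)) / len(x)


def loeo_cv(x, y, env, gammas):
    """Leave-one-environment-out CV (assumed rule): return the gamma whose
    worst held-out-environment risk is smallest."""
    best_gamma, best_score = None, float("inf")
    for g in gammas:
        worst = 0.0
        for held in sorted(set(env)):
            tr = [i for i, e in enumerate(env) if e != held]
            te = [i for i, e in enumerate(env) if e == held]
            b = anchor_coef([x[i] for i in tr], [y[i] for i in tr],
                            [env[i] for i in tr], g)
            worst = max(worst, risk([x[i] for i in te], [y[i] for i in te], b))
        if worst < best_score:
            best_gamma, best_score = g, worst
    return best_gamma


if __name__ == "__main__":
    x, y, env = simulate()
    for g in (0.0, 1.0, 10.0, 1e9):
        b = anchor_coef(x, y, env, g)
        print(f"gamma={g:g}: slope={b:.3f}, in-sample risk={risk(x, y, b):.3f}")
    grid = [0.0, 0.5, 1.0, 2.0, 5.0, 10.0, 100.0]
    print("LOEO-CV gamma:", loeo_cv(x, y, env, grid))
```

In this toy setup the large-γ slope should sit near the causal coefficient 1 while OLS is pulled upward by the confounder, and OLS always has the lower in-sample risk, mirroring the trade-off described above.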

翻译:不变性预测利用因果关系在不同环境下的预测稳定性来识别因果变量。反之,使用因果变量即使在样本外数据设定下也能提供预测保证。本文研究了如何从样本内数据中识别类因果模型,以确保在从任意协变量集合预测目标变量时获得样本外风险保证。普通最小二乘法最小化样本内风险,但提供的样本外保证有限;而因果模型以牺牲样本内性能为代价优化样本外保证。我们引入一种\\textit{因果正则化}形式来平衡这些特性。在总体设定中,较高的正则化会产生具有更大风险稳定性的估计量,尽管样本内风险会增加。然而,在实证中还需考虑进一步的权衡,因为有限的样本内数据降低了正确识别具有高样本外风险保证模型的能力。我们展示了在此类实证设定中,如何通过交叉验证找到最优因果正则化器。