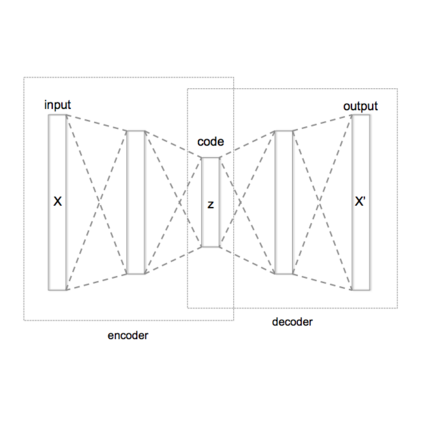

Machine unlearning is essential for large generative models (VAEs, DDPMs) to comply with the right to be forgotten and to prevent undesired content generation without costly retraining. Existing approaches, such as Static-λ SISS for diffusion models, rely on a fixed mixing weight λ, which is suboptimal because the required unlearning strength varies across samples and training stages. We propose Adaptive-λ SISS, a principled extension that treats λ as a latent variable inferred dynamically at each training step. A lightweight inference network parameterizes an adaptive posterior over λ, conditioned on contextual features derived from the instantaneous SISS loss terms (the retain and forget losses and their gradients). This enables joint optimization of the diffusion model and the λ-inference mechanism via a variational objective, yielding better trade-offs between forgetting and retained generation quality. We further extend the adaptive-λ principle to score-based unlearning and introduce a multi-class variant of Score Forgetting Distillation. In addition, we present two new directions: (i) a hybrid objective that combines the data-free efficiency of Score Forgetting Distillation with the direct gradient control of SISS, and (ii) a reinforcement learning formulation that treats unlearning as a sequential decision process, learning an optimal policy over a state space defined by the model's current memory of the forget set. Experiments on an augmented MNIST benchmark show that Adaptive-λ SISS substantially outperforms the original Static-λ SISS, achieving stronger removal of forgotten classes while better preserving generation quality on the retain set.
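
The idea of inferring the mixing weight lambda at each step can be illustrated with a minimal sketch. Everything here is an assumption made for illustration, not the authors' implementation: the class `LambdaInferenceNet`, the logit-normal posterior, the standard-normal prior, the KL weight `beta`, and above all the convex mix of a retain term and a forget term, which is only a placeholder for the actual SISS objective.

```python
import math
import random


def sigmoid(x):
    """Numerically stable logistic function mapping a real number to (0, 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


class LambdaInferenceNet:
    """Hypothetical one-layer inference network.

    Maps context features (e.g. retain/forget losses and gradient norms)
    to the mean and standard deviation of a Gaussian in logit space,
    i.e. a logit-normal posterior over lambda in (0, 1).
    """

    def __init__(self, n_features, seed=0):
        rng = random.Random(seed)
        self.w_mu = [rng.gauss(0.0, 0.1) for _ in range(n_features)]
        self.w_log_sigma = [rng.gauss(0.0, 0.1) for _ in range(n_features)]
        self.b_mu = 0.0
        self.b_log_sigma = -1.0

    def posterior(self, features):
        mu = sum(w * f for w, f in zip(self.w_mu, features)) + self.b_mu
        log_sigma = (
            sum(w * f for w, f in zip(self.w_log_sigma, features))
            + self.b_log_sigma
        )
        return mu, math.exp(log_sigma)


def sample_lambda(mu, sigma, rng):
    """Reparameterized sample: lambda = sigmoid(mu + sigma * eps), eps ~ N(0, 1)."""
    eps = rng.gauss(0.0, 1.0)
    return sigmoid(mu + sigma * eps)


def kl_to_standard_normal(mu, sigma):
    """KL( N(mu, sigma^2) || N(0, 1) ), computed in logit space."""
    return 0.5 * (sigma ** 2 + mu ** 2 - 1.0) - math.log(sigma)


def adaptive_objective(retain_term, forget_term, features, net, rng, beta=0.01):
    """Placeholder variational objective (assumed form, not the SISS estimator).

    Mixes two scalar terms with a sampled lambda and adds a KL penalty
    that keeps the posterior close to the assumed prior.
    """
    mu, sigma = net.posterior(features)
    lam = sample_lambda(mu, sigma, rng)
    mixed = (1.0 - lam) * retain_term + lam * forget_term
    return mixed + beta * kl_to_standard_normal(mu, sigma), lam


# Example step with made-up scalar values for the loss terms and gradient norms.
rng = random.Random(0)
net = LambdaInferenceNet(n_features=4)
features = [0.5, 1.2, 0.3, 0.8]  # retain loss, forget loss, two gradient norms
total, lam = adaptive_objective(0.5, 1.2, features, net, rng)
```

In a real training loop the inference network and the diffusion model would be updated jointly by backpropagating through the sampled lambda; the sketch shows only the forward computation of one step.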
