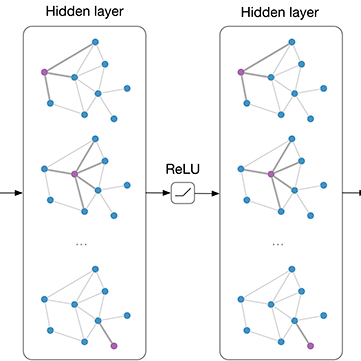

Despite the notable success of graph convolutional networks (GCNs) in skeleton-based action recognition, their performance often depends on large volumes of labeled data, which are frequently scarce in practice. To address this limitation, we propose a novel label-efficient GCN model with two main contributions. First, we develop an acquisition function that uses an adversarial strategy to select a compact set of informative exemplars for labeling; this selection balances representativeness, diversity, and uncertainty. Second, we introduce bidirectional and stable GCN architectures. These networks provide a more effective mapping between the ambient and latent data spaces, which allows the distribution of the learned exemplars to be captured more accurately. Extensive evaluations on two challenging skeleton-based action recognition benchmarks show that our label-efficient GCNs achieve significant improvements over prior work.
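To make the three selection criteria concrete, below is a minimal sketch of a greedy acquisition heuristic. It is not the adversarial acquisition function described above. It substitutes a simple weighted score, and every name in it (`select_exemplars`, `normalized_entropy`, the weights `w_rep`, `w_div`, `w_unc`, and the kernel width `sigma`) is hypothetical. Representativeness is the mean Gaussian-kernel similarity of a sample to the rest of the unlabeled pool. Diversity measures how far a sample lies from the exemplars already chosen. Uncertainty is the normalized entropy of the current model's class probabilities.

```python
import math
from typing import List, Sequence


def normalized_entropy(probs: Sequence[float]) -> float:
    """Shannon entropy scaled to [0, 1]; higher means the model is less certain."""
    c = len(probs)
    if c < 2:
        return 0.0
    h = -sum(p * math.log(p) for p in probs if p > 0)
    return h / math.log(c)


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def select_exemplars(
    feats: List[Sequence[float]],
    probs: List[Sequence[float]],
    k: int,
    w_rep: float = 1.0,
    w_div: float = 1.0,
    w_unc: float = 1.0,
    sigma: float = 1.0,
) -> List[int]:
    """Greedily pick up to k pool indices to label (hypothetical heuristic,
    not the paper's adversarial acquisition function)."""
    n = len(feats)
    s2 = sigma ** 2
    # Representativeness: mean Gaussian-kernel similarity to the rest of the pool.
    rep = [
        sum(math.exp(-sq_dist(feats[i], feats[j]) / s2) for j in range(n) if j != i)
        / max(n - 1, 1)
        for i in range(n)
    ]
    # Uncertainty: normalized entropy of the current model's predictions.
    unc = [normalized_entropy(p) for p in probs]
    # Squared distance from each sample to its nearest selected exemplar.
    nearest = [math.inf] * n
    selected: List[int] = []
    chosen = set()
    for _ in range(min(k, n)):
        best, best_score = -1, -math.inf
        for i in range(n):
            if i in chosen:
                continue
            # Diversity: 1 when nothing is selected yet, close to 0 for samples
            # that sit on top of an existing exemplar.
            div = 1.0 if not selected else 1.0 - math.exp(-nearest[i] / s2)
            score = w_rep * rep[i] + w_div * div + w_unc * unc[i]
            if score > best_score:
                best, best_score = i, score
        selected.append(best)
        chosen.add(best)
        # Only the diversity term depends on the selection, so update it here.
        for i in range(n):
            nearest[i] = min(nearest[i], sq_dist(feats[i], feats[best]))
    return selected


# Two near-duplicate samples and one distant sample, all equally uncertain.
feats = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0]]
probs = [[0.5, 0.5]] * 3
print(select_exemplars(feats, probs, k=2, w_rep=0.0))  # [0, 2]
```

In the usage example the diversity term steers the second pick away from the near-duplicate at index 1. Setting a weight to zero disables the corresponding criterion, which makes it easy to see how each one affects the selection.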
