【Awesome】The Most Complete Collection of Machine Learning Interpretability Resources (machine-learning-interpretability)

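【Introduction】 Machine learning and deep learning have advanced enormously in recent years. They are applied very widely and have had a deep impact on many industries. The focus of researchers in these fields, however, is increasingly shifting toward interpretability. What is interpretability? It means knowing not only how to do something, but also why doing it that way produces the result it does, whether or not that result is the desired one. Today we share a collection of resources on machine learning interpretability.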

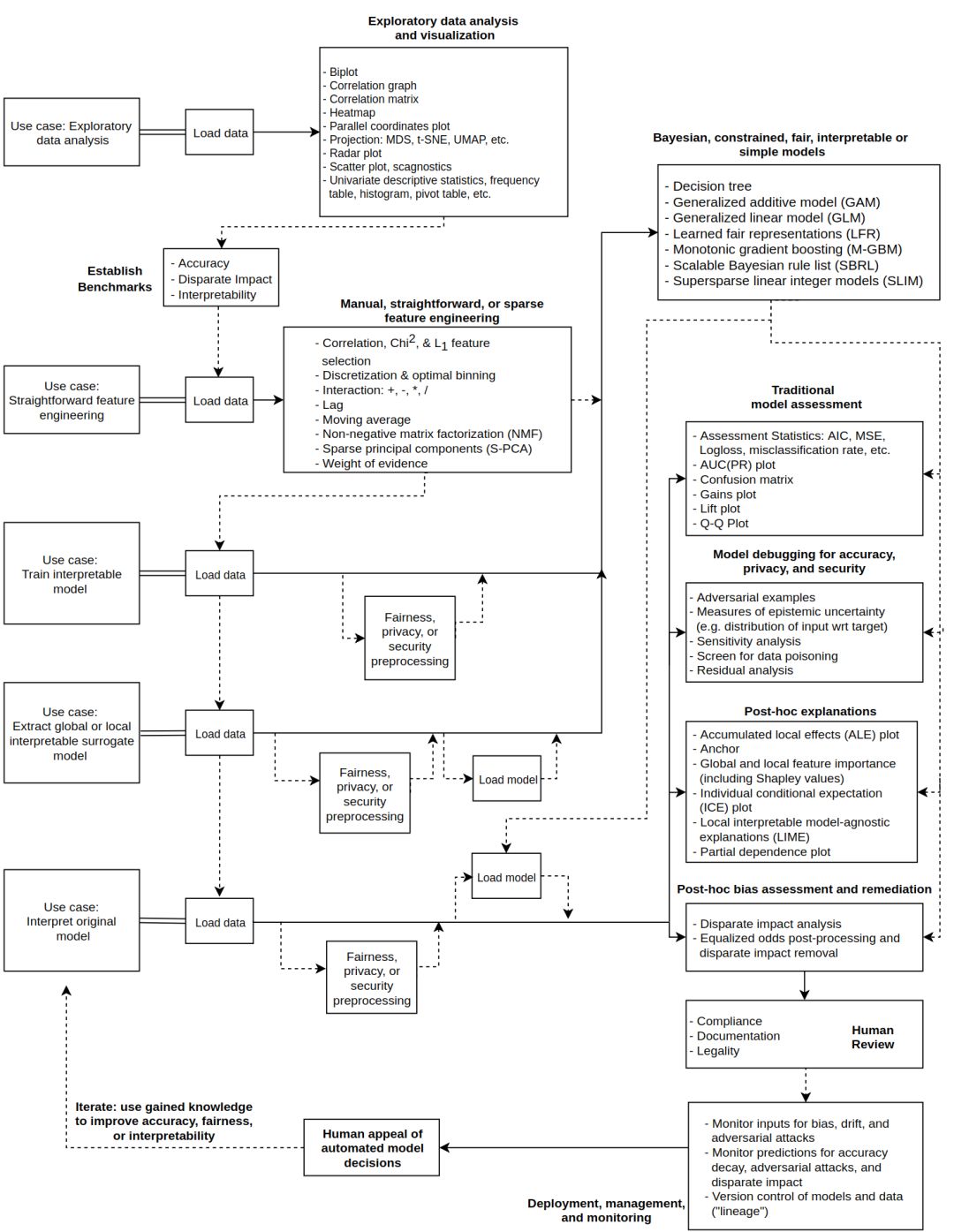

Shown here is a partial machine learning blueprint that can help reduce the overall risk of machine learning work.

▌Table of Contents

Comprehensive Software Examples and Tutorials

Explainability- or Fairness-Enhancing Software Packages

Browser

Python

R

Free Books

Other Interpretability and Fairness Resources and Lists

Review and General Papers

Teaching Resources

Interpretable ("Whitebox") or Fair Modeling Packages

C/C++

Python

R

▌Comprehensive Software Examples and Tutorials

Getting a Window into your Black Box Model

IML

Interpretable Machine Learning with Python

Interpreting Machine Learning Models with the iml Package

Machine Learning Explainability by Kaggle Learn

Model Interpretability with DALEX

Model Interpretation series by Dipanjan (DJ) Sarkar:

The Importance of Human Interpretable Machine Learning

Model Interpretation Strategies

Hands-on Machine Learning Model Interpretation

Partial Dependence Plots in R

Visualizing ML Models with LIME

▌Explainability- or Fairness-Enhancing Software Packages

Browser

What-if Tool

Python

aequitas

AI Fairness 360

anchor

casme

cleverhans

ContrastiveExplanation (Foil Trees)

deeplift

deepvis

eli5

fairml

fairness

Integrated-Gradients

lofo-importance

L2X

lime

PDPbox

pyBreakDown

PyCEbox

shap

Skater

rationale

tensorflow/lucid

tensorflow/model-analysis

Themis

themis-ml

treeinterpreter

woe

xai

R

ALEPlot

breakDown

DALEX

ExplainPrediction

featureImportance

forestmodel

fscaret

ICEbox

iml

lightgbmExplainer

lime

live

mcr

pdp

shapleyR

smbinning

vip

xgboostExplainer

▌Free Books

Beyond Explainability: A Practical Guide to Managing Risk in Machine Learning Models

Fairness and Machine Learning

Interpretable Machine Learning

▌Other Interpretability and Fairness Resources and Lists

8 Principles of Responsible ML

An Introduction to Machine Learning Interpretability

Awesome interpretable machine learning ;)

Awesome machine learning operations

algoaware

criticalML

Fairness, Accountability, and Transparency in Machine Learning (FAT/ML) Scholarship

Machine Learning Ethics References

Machine Learning Interpretability Resources

MIT AI Ethics Reading Group

XAI Resources

▌Review and General Papers

A Comparative Study of Fairness-Enhancing Interventions in Machine Learning

A Survey Of Methods For Explaining Black Box Models

Challenges for Transparency

Explaining Explanations: An Approach to Evaluating Interpretability of Machine Learning

On the Art and Science of Machine Learning Explanations

On the Responsibility of Technologists: A Prologue and Primer

Please Stop Explaining Black Box Models for High-Stakes Decisions

The Mythos of Model Interpretability

The Promise and Peril of Human Evaluation for Model Interpretability

Towards A Rigorous Science of Interpretable Machine Learning

Trends and Trajectories for Explainable, Accountable and Intelligible Systems: An HCI Research Agenda

▌Teaching Resources

An Introduction to Data Ethics

Fairness in Machine Learning

Human-Centered Machine Learning

Practical Model Interpretability

▌Interpretable ("Whitebox") or Fair Modeling Packages

C/C++

Certifiably Optimal RulE ListS

Python

Bayesian Case Model

Bayesian Ors-Of-Ands

Bayesian Rule List (BRL)

fair-classification

Falling Rule List (FRL)

H2O-3

Penalized Generalized Linear Models

Sparse Principal Components (GLRM)

Monotonic XGBoost

pyGAM

Risk-SLIM

Scikit-learn

Decision Trees

Generalized Linear Models

Sparse Principal Components

sklearn-expertsys

skope-rules

Super-sparse Linear Integer models (SLIMs)

R

arules

Causal SVM

elasticnet

gam

glmnet

H2O-3

Penalized Generalized Linear Models

Sparse Principal Components (GLRM)

Monotonic XGBoost

quantreg

rpart

RuleFit

Scalable Bayesian Rule Lists (SBRL)

Reference links: https://github.com/jphall663/awesome-machine-learning-interpretability#comprehensive-software-examples-and-tutorials

https://github.com/h2oai/mli-resources
