Essentials | 【Watermelon Book】 Zhou Zhihua's "Machine Learning": Study Notes and Exercise Discussion (Part 1, Continued)

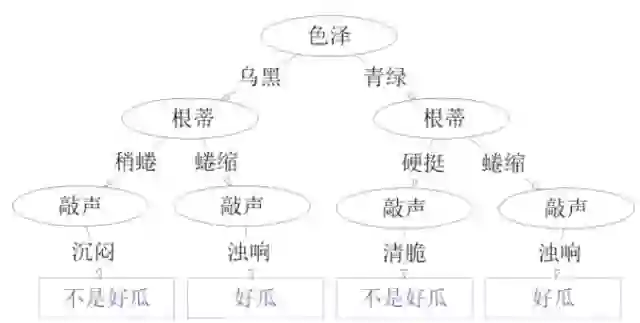

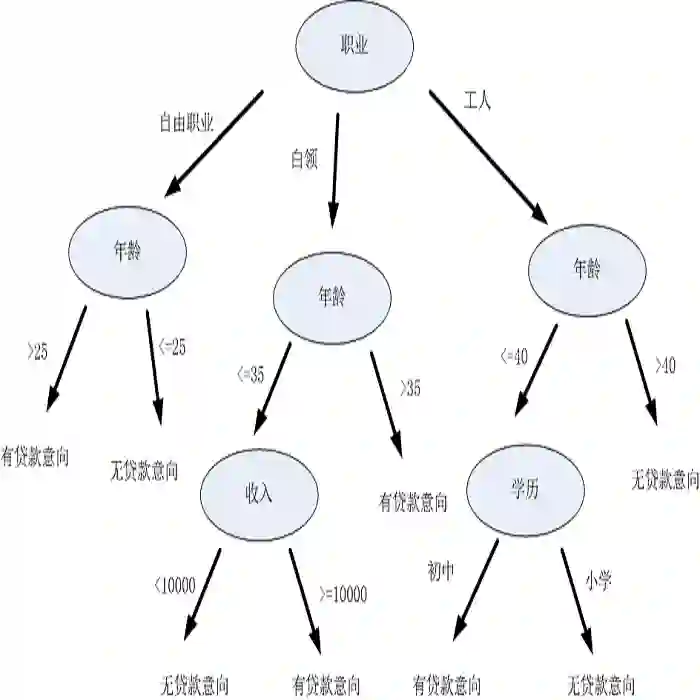

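【Model】: the result learned from data, such as a decision tree that decides what counts as a good watermelon.

e.g.: (this is one part of such a decision tree)

【Pattern】: a local result, such as a single rule for identifying a good watermelon.

e.g.: a watermelon with green color, a curly root, and a muffled knocking sound is a good melon.

【Learning algorithm】: an algorithm that produces a "model" from data on a computer.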

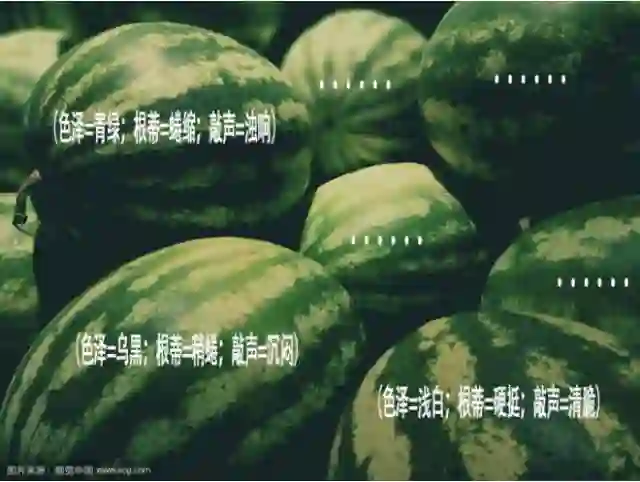

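As the figure shows, suppose we have collected a batch of data about watermelons.

【Record】: the description of any one watermelon in the data; each item below is one record.

e.g.: x1=(color=green; root=curly; sound=muffled), x2=(color=dark; root=slightly curly; sound=dull), x3=(color=light; root=straight; sound=crisp), ...

【Instance, sample】: another name for a record, that is, the description of one watermelon.

e.g.: x1=(color=green; root=curly; sound=muffled)

【Data set】: D={x1,x2,x3,...,xm}, a collection of records, that is, a collection of "watermelon descriptions". (Here the data set holds m instances, i.e. m watermelon descriptions.)

e.g.: D={x1=(color=green; root=curly; sound=muffled), x2=(color=dark; root=slightly curly; sound=dull), x3=(color=light; root=straight; sound=crisp), ..., xm}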
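The definitions above can be sketched in code. This is only one possible representation, assuming each sample is stored as a Python dict; the English keys and values are naming choices made here, not notation from the book.

```python
# Each sample (instance) maps an attribute name to its attribute value.
x1 = {"color": "green", "root": "curly", "sound": "muffled"}
x2 = {"color": "dark", "root": "slightly curly", "sound": "dull"}
x3 = {"color": "light", "root": "straight", "sound": "crisp"}

# The data set D is simply the collection of all samples.
D = [x1, x2, x3]

m = len(D)     # number of samples in the data set
d = len(D[0])  # number of attributes per sample (the dimensionality)
print(m, d)    # prints "3 3"
```

A list of dicts keeps the attribute names visible, which matches how the notes write each sample as a list of attribute=value pairs.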

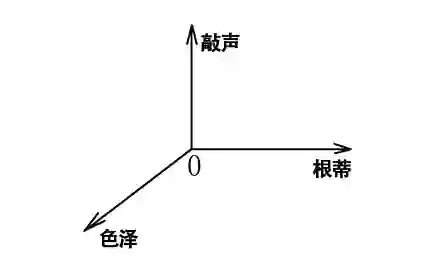

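【Attribute, feature】: $x_i=(x_{i1};x_{i2};\dots;x_{id})$ (i denotes the i-th sample; d is the number of attributes)

e.g.: $x_{i1}$: color, $x_{i2}$: root, $x_{i3}$: sound

【Attribute value】: $x_{11}$, $x_{12}$, $x_{13}$ (the values of the first sample's three attributes)

e.g.: $x_{11}$=green, $x_{12}$=curly, $x_{13}$=muffled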

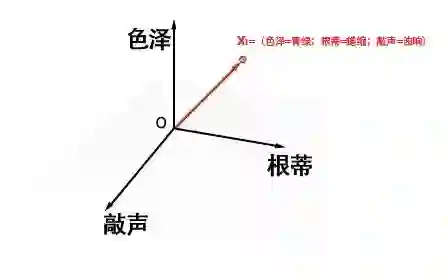

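【Attribute space, sample space, input space】: the space obtained by taking the watermelon's three attributes as three coordinate axes. (The figure is drawn under the assumption that attribute values are continuous rather than discrete.)

【Feature vector】: each of a watermelon's three attribute values corresponds to a coordinate on the matching axis, and together they locate one point in the attribute space. Since every point in the space corresponds to a coordinate vector, an instance can be represented as a vector in the d-dimensional attribute space spanned by its d attributes.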
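As a rough illustration of turning a sample into a point in attribute space, the sketch below assigns a number to every attribute value. The numeric codes in `ENCODING` and the helper `to_feature_vector` are hypothetical, chosen only for this example; the notes do not prescribe any particular encoding.

```python
# Hypothetical numeric codes for each attribute value (an assumption made
# for illustration; any consistent assignment would serve the same purpose).
ENCODING = {
    "color": {"light": 0.0, "green": 1.0, "dark": 2.0},
    "root": {"straight": 0.0, "slightly curly": 1.0, "curly": 2.0},
    "sound": {"crisp": 0.0, "dull": 1.0, "muffled": 2.0},
}

# Fixed axis order: one coordinate axis per attribute.
ATTRIBUTES = ["color", "root", "sound"]


def to_feature_vector(sample):
    """Map a sample (dict of attribute values) to a point in attribute space."""
    return [ENCODING[name][sample[name]] for name in ATTRIBUTES]


x1 = {"color": "green", "root": "curly", "sound": "muffled"}
v1 = to_feature_vector(x1)
print(v1)  # prints "[1.0, 2.0, 2.0]"
```

With d = 3 attributes the vector has three coordinates; adding a fourth attribute would add a fourth axis without changing the code's structure.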

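【Learning, training】: the process of learning a model from data.

e.g.: the process of obtaining a decision tree for identifying good melons from a data set of watermelon samples.

【Training data】: the data used during training.

e.g.: to obtain a decision tree for identifying good melons, we use records of the three attribute values of 100000 watermelons. These records together form the training set, and the 3×100000 attribute values are the data used during training.

【Training sample】: each individual sample in the training data, i.e. one watermelon description used for training.

e.g.: x1=(color=green; root=curly; sound=muffled)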

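【Hypothesis】: the learned decision tree for identifying good melons corresponds to some underlying regularity in the data (so the learned model, i.e. that decision tree, is only a hypothesis about the regularity).

【Ground truth】: the underlying regularity itself, i.e. the "objective rule for identifying good melons" that the decision tree tries to capture (it may differ from the learned decision tree).

【Learning process】: finding or approximating the ground truth, i.e. bringing the learned "decision tree for identifying good melons" (the hypothesis) closer to the objective rule that holds in the real world. (This is why a model is sometimes called a learner, which can be viewed as the instantiation of a learning algorithm on given data and a given parameter space.)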

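However, with only the attribute values of the melons and no final outcome about whether each melon is good or bad, supervised learning is not possible.

This means that after checking a melon's color, root, and knocking sound, we still have to cut it open and taste it to reach a final verdict on whether it is good or bad. Only then can we accumulate experience in judging melons. Observing without verifying accumulates no experience.

(Does this mean that supervised learning is, in some sense, a machine accumulating experience?)

【Prediction】: using a model obtained by machine learning (such as a decision tree) to determine the outcome for a new instance.

e.g.: using the good-melon decision tree to judge whether the melon my wife just bought is a good one.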
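To make prediction concrete, here is a minimal sketch in which the "model" is just the single rule quoted earlier in these notes (green color, curly root, muffled sound means a good melon). The function name `predict` and the choice to label every non-matching melon as "bad" are assumptions made for illustration; a learned decision tree would contain more branches.

```python
def predict(sample):
    """Hypothetical hand-written model built from one rule in the notes.

    Any melon that does not match the rule is labeled "bad", which is a
    simplifying assumption rather than something the rule itself states.
    """
    if (
        sample["color"] == "green"
        and sample["root"] == "curly"
        and sample["sound"] == "muffled"
    ):
        return "good"
    return "bad"


# A new, previously unseen instance.
new_melon = {"color": "dark", "root": "slightly curly", "sound": "dull"}
print(predict(new_melon))  # prints "bad"
```

The point is only the shape of the interaction: the model takes an unlabeled instance and returns a label.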

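【Label】: the information about the outcome of an instance (one watermelon description).

e.g.: good melon, bad melon

【Example】: an instance (one watermelon description) that carries label information, written (xi,yi), where i denotes the i-th example.

e.g.: (x1,y1)=((color=green; root=curly; sound=muffled), good melon)

【Label space, output space】: the set of all labels.

When the label takes discrete values, Y={y1,y2,y3,...,yj,...,yn} (here the index j of y does not denote the j-th example but the j-th possible value of the label, and n is the number of values the label can take). For the good-melon versus bad-melon problem, n=2, i.e. the label can take two values.

e.g.: Y={good melon, bad melon}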
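The relationship between labeled examples and the label space can be sketched as follows. The tuple layout `(x, y)` mirrors the (xi,yi) notation; the second example's label is assumed here purely for illustration.

```python
# Each example pairs an instance x with its label y, mirroring (xi, yi).
training_examples = [
    ({"color": "green", "root": "curly", "sound": "muffled"}, "good"),
    # The label below is an illustrative assumption, not data from the notes.
    ({"color": "light", "root": "straight", "sound": "crisp"}, "bad"),
]

# The discrete label space Y collects every distinct label value.
label_space = sorted({y for _, y in training_examples})
n = len(label_space)  # number of values the label can take

print(label_space, n)  # prints "['bad', 'good'] 2"
```

Note that `n` counts distinct label values, not examples: adding a thousand more labeled melons would leave `n` at 2.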

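When the label takes continuous values, yi=f(xi) is a real number and the label space Y is a continuous range (for regression the book takes Y=ℝ, the set of real numbers). Here yi is the label value for the i-th instance xi, obtained as a function f with xi as its argument. For example, if the color, root, and knocking sound of watermelons are quantified and analyzed, one could fit some function f(xi) that expresses a ripeness value for the melon, and then judge whether the melon is good from that value.

From the above:

【Classification】: the predicted value is discrete.

e.g.: judging whether a melon is good or bad.

【Regression】: the predicted value is continuous.

e.g.: predicting the ripeness of a melon.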
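The contrast between the two tasks shows up in the output type. The sketch below assumes the three attributes have already been encoded as numbers; the weights, the 0.5 threshold, and the function names `ripeness` and `classify` are all hypothetical and stand in for whatever function f would actually be learned.

```python
# Hypothetical weights for three numerically encoded attributes
# (color, root, sound); a real f would be learned from labeled data.
WEIGHTS = [0.25, 0.125, 0.125]


def ripeness(v):
    """Regression: map a feature vector to a continuous ripeness score."""
    return sum(w * a for w, a in zip(WEIGHTS, v))


def classify(v, threshold=0.5):
    """Classification: map the same vector to one of two discrete labels."""
    return "good" if ripeness(v) >= threshold else "bad"


v1 = [1.0, 2.0, 2.0]  # assumed encoding of (green, curly, muffled)
v3 = [0.0, 0.0, 0.0]  # assumed encoding of (light, straight, crisp)

print(ripeness(v1), classify(v1))  # prints "0.75 good"
print(ripeness(v3), classify(v3))  # prints "0.0 bad"
```

Thresholding a continuous score is only one way to obtain a discrete label; it is used here because the notes describe judging good versus bad from a ripeness value.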

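【Positive class】 e.g.: good melon. 【Negative class】 e.g.: bad melon. (These are the two classes of a binary classification task.)

【Multi-class classification】 e.g.: sandy-fleshed melon, juicy-fleshed melon, half-sandy half-juicy melon, ...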

Reposted from: 机器学习算法与自然语言处理