【谷歌推出TFGAN】开源的轻量级生成对抗网络库

原作 Joel Shor 机器感知高级软件工程师

编译自 谷歌开源博客

量子位 出品

一般情况下,训练一个神经网络要先定义一下损失函数,告诉神经网络输出的值离目标值偏差大概多少。举个例子来说,对于图像分类网络所定义的损失函数来说,一旦网络出现错误的分类结果,比如说把狗标记成了猫,就会得到一个高损失值。

不过,不是所有任务都有那么容易定义的损失函数,尤其是那些涉及到人类感知的,比如说图像压缩或者文本转语音系统。

GAN(Generative Adversarial Networks,生成对抗网络),在图像生成文本,超分辨率,帮助机器人学会抓握,提供解决方案这些应用上都取得了巨大的进步。

不过,理论上和软件工程上的更新不够快,跟不上GAN的更新的节奏。

△ 一段生成模型不断进化的视频

上面的视频可以看出,这个生成模型刚开始只能产生杂乱的噪音,但是最后生成了比较清晰的MNIST数字。

为了让大家更容易地训练和评价GAN,我们提供TFGAN(轻量级GAN库)的源代码。其中包含容易上手的案例,可以充分地展现出TFGAN的表现张力和灵活性。我们还附上了一个示范教程,里面提到了高级的API端口怎么样能快速地用你的数据来训练模型。

△ 对抗损失对于图像压缩的效果。

顶层是ImageNet数据集里的图,中间那层是传统损失训练出来的图像压缩神经网络压缩和解压后的效果,底层是GAN损失和传统损失一起训练的神经网络效果。可以看得出来,底层的图边缘更锐利,细节更丰富,虽然和原图还是有一定的差距。

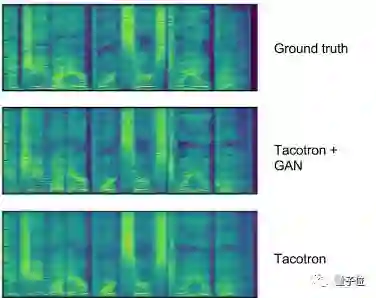

当使用端对端的语音合成TacotronTTS网络时,GAN可以增加部分真实的声音特性。如下图所示。

△ 大多文本转语音(TTS)网络产生的过平滑的声谱图

TacotronTTS可以有效减少生成音频的人工痕迹,出来的语音更真实自然(具体参考,https://arxiv.org/abs/1703.10135)。

TFGAN支持多种主流的实验方法。既有简单的可涵盖大部分GAN案例的函数(只要几行代码,开发者就可以拿自己的数据直接建模了),也有设计独立模块化的特殊GAN函数,你可以随意地组合自己需要的函数,损失、评估、特征、训练函数。

同时,TFGAN也支持搭配其他架构,或者原始的TensorFlow代码。使用了TFGAN搭建的GAN模型,以后底层架构的优化会更加方便。另外,也有大量的已经预置的损失函数或特征函数供开发者选择,不用再花大量时间自己去写。最最最重要的是代码已经被反复测试过了,开发者不用再担心GAN库数据上的错误。

最后,附TFGAN链接:

https://github.com/tensorflow/tensorflow/tree/master/tensorflow/contrib/gan

原文链接:

https://opensource.googleblog.com/2017/12/tfgan-lightweight-library-for-generative-adversarial-networks.html

☞ 【最详尽的GAN介绍】王飞跃等:生成式对抗网络 GAN 的研究进展与展望

☞ 【智能自动化学科前沿讲习班第1期】王飞跃教授:生成式对抗网络GAN的研究进展与展望

☞ 【智能自动化学科前沿讲习班第1期】王坤峰副研究员:GAN与平行视觉

☞ 【重磅】平行将成为一种常态:从SimGAN获得CVPR 2017最佳论文奖说起

☞ 【“强化学习之父”萨顿】预测学习马上要火,AI将帮我们理解人类意识

☞ 【TFGAN】谷歌开源 TFGAN,让训练和评估 GAN 变得更加简单

☞ 【NIPS 2017】清华大学人工智能创新团队在AI对抗性攻防竞赛中获得冠军

☞ 【英伟达NIPS论文AI脑洞大开】用GAN让晴天下大雨,小猫变狮子,黑夜转白天

☞ 【BicycleGAN】NIPS 2017论文图像转换多样化,大幅提升pix2pix生成图像效果