动态 | 斯坦福大学发布 StanfordNLP,支持多种语言

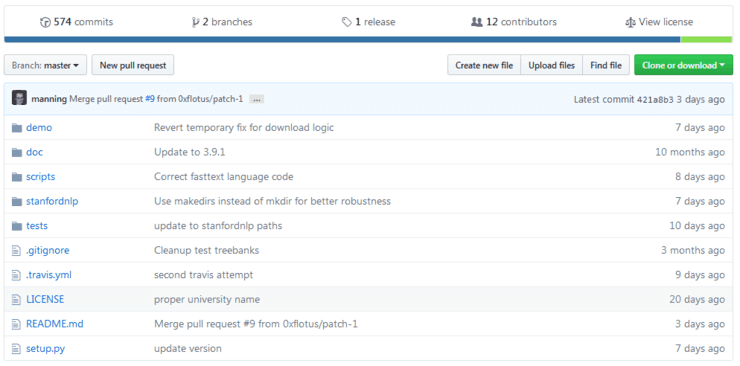

AI 科技评论按,近日,斯坦福大学发布了一款用于 NLP 的 Python 官方库,这个库可以适用于多种语言,其地址是:

https://stanfordnlp.github.io/stanfordnlp/,github

资源如下:

这是 Stanford 官方发布的 NLP 库,详细信息请访问:

https://stanfordnlp.github.io/stanfordnlp/

说明

如果在研究中使用了他们的神经管道,可以参考他们的 CoNLL 2018 共享任务系统描述文件:

@inproceedings{qi2018universal,

address = {Brussels, Belgium},

author = {Qi, Peng and Dozat, Timothy and Zhang, Yuhao and Manning, Christopher D.},

booktitle = {Proceedings of the {CoNLL} 2018 Shared Task: Multilingual Parsing from Raw Text to Universal Dependencies},

month = {October},

pages = {160--170},

publisher = {Association for Computational Linguistics},

title = {Universal Dependency Parsing from Scratch},

url = {https://nlp.stanford.edu/pubs/qi2018universal.pdf},

year = {2018}

}

但是,这个版本和 Stanford 大学的 CoNLL 2018 共享任务系统不一样。在这里,标记解析器、词性还原器、形态学特性和多词术语系统是共享任务代码系统的一个简洁版本,但是作为对比,还使用了 Tim Dozat 的 Tensorflow 版本的标记器和解析器。PyTorch 中大体上对这个版本的代码进行了复制,尽管与原始版本有一些不同。

启动

StanfordNLP 支持 Python3.6 及其以上版本。最好的办法是从 PyPI 安装 StanfordNLP,如果已经安装了 pip,那么只需要运行:

pip install stanfordnlp

这也有助于解决 StanfordNLP 的所有依赖,例如对 PyTorch 1.0.0 或者更高版本的依赖。

还有一个办法,是从 github 存储库的源代码安装,这可以使基于 StanfordNLP 的开发和模型训练具有更大的灵活性。

git clone git@github.com:stanfordnlp/stanfordnlp.git

cd stanfordnlp

pip install -e .

运行 StanfordNLP

从神经管道开始

要运行第一个 StanfordNLP 管道,只需在 python 交互式解释器中执行以下步骤:

>>> import stanfordnlp

>>> stanfordnlp.download('en') # This downloads the English models for the neural pipeline

>>> nlp = stanfordnlp.Pipeline() # This sets up a default neural pipeline in English

>>> doc = nlp("Barack Obama was born in Hawaii. He was elected president in 2008.")

>>> doc.sentences[0].print_dependencies()

最后一个命令将打印输入字符串(或文档,如 StanfordNLP 所示)中第一个句子中的单词,以及该句子中单词的索引,以及单词之间的依赖关系。输出应如下所示:

('Barack', '4', 'nsubj:pass')

('Obama', '1', 'flat')

('was', '4', 'aux:pass')

('born', '0', 'root')

('in', '6', 'case')

('Hawaii', '4', 'obl')

('.', '4', 'punct')

访问 Java Stanford CoreNLP 服务器

除了神经管道之外,这个项目还包括一个用 Python 代码访问 Java Stanford CaleNLP 服务器的官方类。

有几个初始设置步骤:

下载 Stanford CoreNLP 和需要使用的语言的模型;

将模型原型放在分发文件夹中;

告诉 python 代码 Stanford CoreNLP 的位置:

export corenlp_home=/path/to/stanford-corenlp-full-2018-10-05

我们提供了另一个演示脚本,演示如何使用 corenlp 客户机并从中提取各种注释。

神经管道训练模型

目前,CoNLL 2018 共享任务中的所有 treebanks 模型都是公开的,下载和使用这些模型的说明:

https://stanfordnlp.github.io/stanfordnlp/installation_download.html#models-for-human-languages

训练你自己的神经管道

这个库中的所有神经模块都可以使用自己的 CoNLL-U 格式数据进行训练。目前,并不支持通过管道接口进行模型训练。因此,如果要训练你自己的模型,你需要克隆这个 git 存储库并从源代码进行设置。

via:https://github.com/stanfordnlp/stanfordnlp