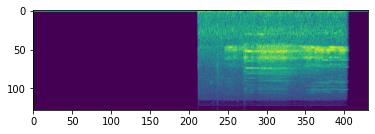

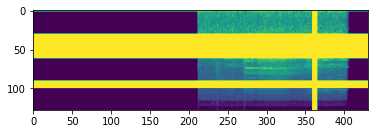

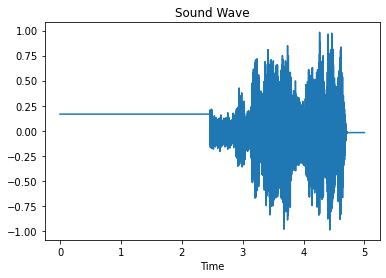

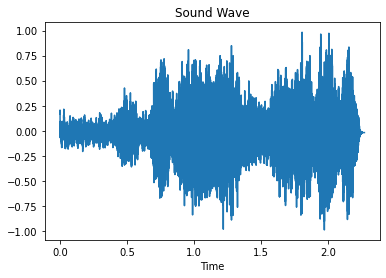

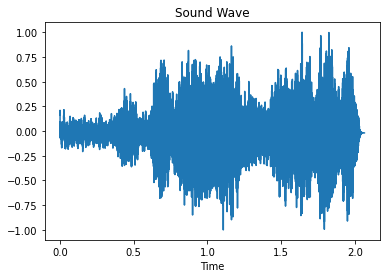

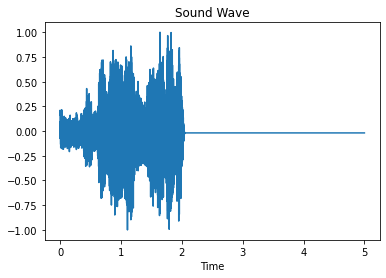

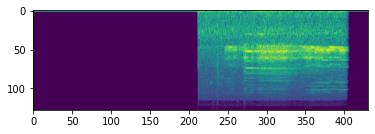

Environmental Sound Classification (ESC) is a challenging field of research in non-speech audio processing. Most of current research in ESC focuses on designing deep models with special architectures tailored for specific audio datasets, which usually cannot exploit the intrinsic patterns in the data. However recent studies have surprisingly shown that transfer learning from models trained on ImageNet is a very effective technique in ESC. Herein, we propose SoundCLR, a supervised contrastive learning method for effective environment sound classification with state-of-the-art performance, which works by learning representations that disentangle the samples of each class from those of other classes. Our deep network models are trained by combining a contrastive loss that contributes to a better probability output by the classification layer with a cross-entropy loss on the output of the classifier layer to map the samples to their respective 1-hot encoded labels. Due to the comparatively small sizes of the available environmental sound datasets, we propose and exploit a transfer learning and strong data augmentation pipeline and apply the augmentations on both the sound signals and their log-mel spectrograms before inputting them to the model. Our experiments show that our masking based augmentation technique on the log-mel spectrograms can significantly improve the recognition performance. Our extensive benchmark experiments show that our hybrid deep network models trained with combined contrastive and cross-entropy loss achieved the state-of-the-art performance on three benchmark datasets ESC-10, ESC-50, and US8K with validation accuracies of 99.75\%, 93.4\%, and 86.49\% respectively. The ensemble version of our models also outperforms other top ensemble methods. The code is available at https://github.com/alireza-nasiri/SoundCLR.

翻译:环境声音分类(ESC)是一个挑战性的研究领域。目前ESC的研究大多侧重于设计深型模型,为特定的音频数据集设计专门设计的特别结构,通常无法利用数据内在模式。但最近的研究令人惊讶地显示,从在图像网络上培训的模型中转移学习是ESC的一个非常有效的技术。在这里,我们提议了SoundCLR,这是与最新性能相结合的有效环境声音分类的受监督的对比性学习方法,通过学习将每个类的样本与其他类的样本脱钩。我们深层次的网络模型通过将对比性损失结合起来来培训,这种差异性能模型有助于分类层产生更好的概率输出,而分类层的输出则具有交叉性能损失。由于现有环境声音数据集的规模相对较小,我们建议并利用传输学习和强度数据增强管道,并在将美国的正价谱分谱分解分解模型输入模型之前,我们深层网络的对比性能输出。我们经过培训的轨迹测试显示,我们的轨迹模型可以大大改进。