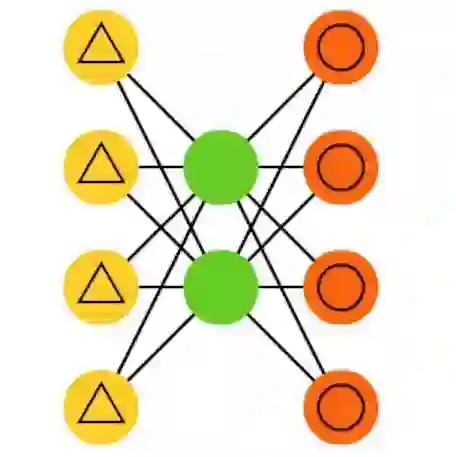

Diffusion models excel at generating high-quality, diverse samples, yet they risk memorizing training data when overfit to the training objective. We analyze the distinctions between memorization and generalization in diffusion models through the lens of representation learning. By investigating a two-layer ReLU denoising autoencoder (DAE), we prove that (i) memorization corresponds to the model storing raw training samples in the learned weights for encoding and decoding, yielding localized "spiky" representations, whereas (ii) generalization arises when the model captures local data statistics, producing "balanced" representations. Furthermore, we validate these theoretical findings on real-world unconditional and text-to-image diffusion models, demonstrating that the same representation structures emerge in deep generative models with significant practical implications. Building on these insights, we propose a representation-based method for detecting memorization and a training-free editing technique that allows precise control via representation steering. Together, our results highlight that learning good representations is central to novel and meaningful generative modeling.
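
The contrast between "spiky" and "balanced" representations can be illustrated with a toy two-layer ReLU DAE. The following is a minimal sketch under stated assumptions, not the paper's construction: the weights are set by hand rather than trained, the data are synthetic unit-norm points in a random low-dimensional subspace, and the helper names (`dae_forward`, `spikiness`, `build_toy_example`, `mean_spikiness`) and the max-over-sum score are hypothetical choices made for illustration only. The "memorizing" weights store the raw training samples as encoder rows and decoder columns; the "generalizing" weights store signed subspace directions, a stand-in for local data statistics.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def dae_forward(x, W_enc, W_dec):
    """Two-layer ReLU DAE without biases: returns (reconstruction, hidden representation)."""
    h = relu(W_enc @ x)
    return W_dec @ h, h

def spikiness(h, eps=1e-12):
    """Hypothetical score: share of total activation carried by the largest hidden unit."""
    return float(h.max() / (h.sum() + eps))

def build_toy_example(d=128, k=32, n=16, sigma=0.05, seed=0):
    """Synthetic setup (assumption): n unit-norm samples in a random k-dim subspace of R^d."""
    rng = np.random.default_rng(seed)
    U, _ = np.linalg.qr(rng.standard_normal((d, k)))   # d x k orthonormal basis
    X = rng.standard_normal((n, k)) @ U.T              # n x d training samples
    X /= np.linalg.norm(X, axis=1, keepdims=True)
    noisy = X + sigma * rng.standard_normal((n, d))    # noisy inputs to the DAE

    # Memorizing weights: raw training samples used for both encoding and decoding.
    mem = (X, X.T)
    # Generalizing weights: signed subspace directions, so that
    # relu(u.x) - relu(-u.x) = u.x and the output is the projection U U^T x.
    gen = (np.vstack([U.T, -U.T]), np.hstack([U, -U]))
    return X, noisy, mem, gen

def mean_spikiness(noisy, weights):
    W_enc, W_dec = weights
    return float(np.mean([spikiness(dae_forward(x, W_enc, W_dec)[1]) for x in noisy]))

X, noisy, mem, gen = build_toy_example()
mem_score = mean_spikiness(noisy, mem)
gen_score = mean_spikiness(noisy, gen)
print(f"memorizing: {mem_score:.3f}, generalizing: {gen_score:.3f}")
```

In this toy setting, the memorizing weights concentrate activation on the hidden unit tied to the matching training sample, while the generalizing weights spread it across many subspace directions. A score of this kind is only a rough proxy for the representation-based detection idea described above.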
