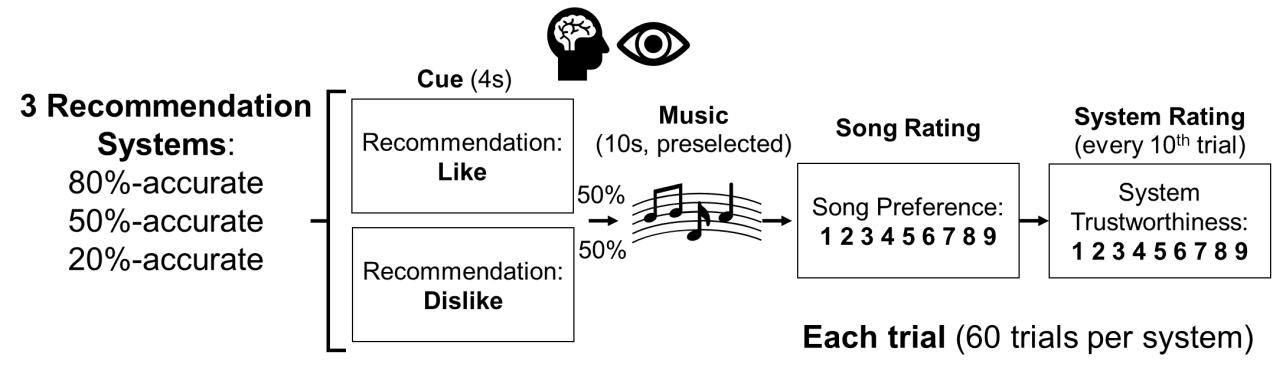

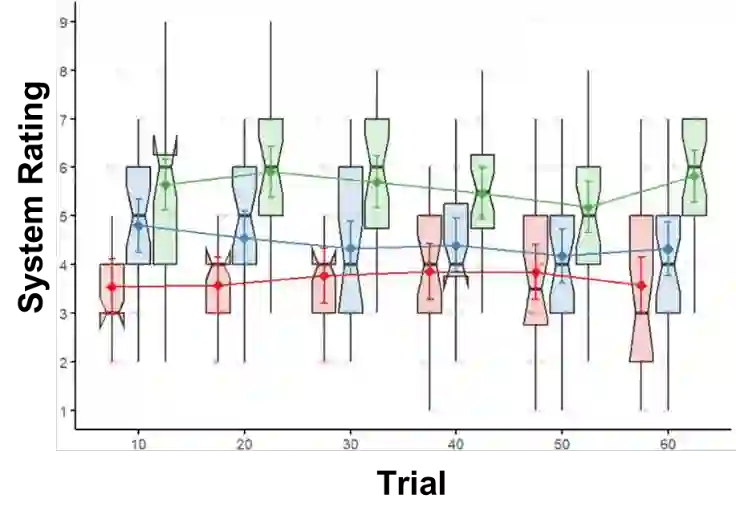

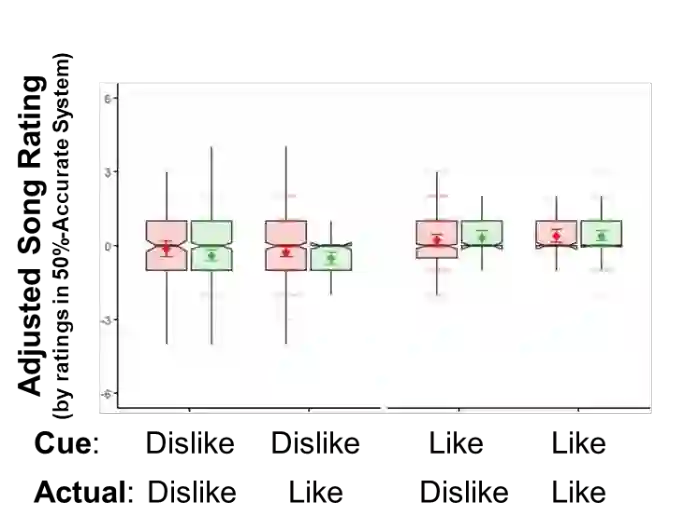

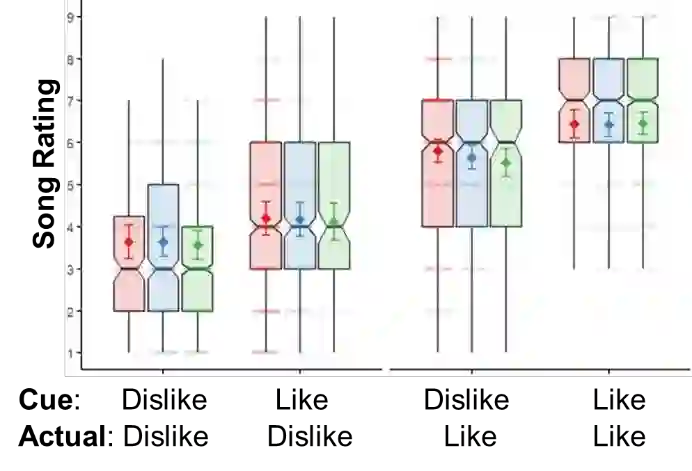

As people increasingly rely on artificial intelligence (AI) to curate information and make decisions, placing an appropriate level of trust in automated intelligent systems has become ever more important. However, current measurements of trust in automation still rely largely on self-reports, which are subjective and disruptive to the user. Here, we use music recommendation as a model system to investigate the neural and cognitive processes underlying trust in automation. We observed that system accuracy was directly related to users' trust and modulated the influence of recommendation cues on music preference. Modelling users' reward encoding with a reinforcement learning model further revealed that system accuracy, expected reward, and prediction error were related to oscillatory neural activity recorded via EEG and to changes in pupil diameter. Our results provide a neurally grounded account of how trust in automation is calibrated and highlight the promise of a multimodal approach to developing trustworthy AI systems.
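The abstract does not specify which reinforcement learning model was used. To illustrate how "expected reward" and "prediction error" relate, the sketch below assumes a simple Rescorla-Wagner (delta-rule) learner: on each trial the prediction error is δ_t = r_t − V_t and the expectation is updated as V_{t+1} = V_t + α·δ_t. The reward coding (1 if the user liked the recommended track, 0 otherwise), the learning rate `alpha`, the starting value `v0`, and the names `DeltaRuleLearner` and `simulate` are all hypothetical choices for illustration, not the authors' method.

```python
from dataclasses import dataclass


@dataclass
class DeltaRuleLearner:
    """Hypothetical Rescorla-Wagner-style learner tracking the expected reward
    of following a recommendation cue (assumed model, not the paper's)."""
    alpha: float = 0.1            # learning rate (assumed value)
    expected_reward: float = 0.5  # initial expectation V_0 (assumed value)

    def update(self, reward: float) -> float:
        """Observe a reward, update the expectation, and return the prediction error."""
        prediction_error = reward - self.expected_reward       # delta_t = r_t - V_t
        self.expected_reward += self.alpha * prediction_error  # V_{t+1} = V_t + alpha * delta_t
        return prediction_error


def simulate(rewards, alpha=0.1, v0=0.5):
    """Return per-trial (expected reward before the outcome, prediction error) pairs."""
    learner = DeltaRuleLearner(alpha=alpha, expected_reward=v0)
    trace = []
    for r in rewards:
        v = learner.expected_reward   # expectation held before seeing the outcome
        delta = learner.update(r)     # signed surprise on this trial
        trace.append((v, delta))
    return trace


if __name__ == "__main__":
    # Hypothetical outcomes: 1 = liked the recommended track, 0 = did not.
    for v, delta in simulate([1, 1, 0], alpha=0.5, v0=0.5):
        print(f"expected={v:.3f}  prediction_error={delta:+.3f}")
```

Under this assumed model, a more accurate recommender delivers rewards of 1 more often, so the expected reward drifts toward the system's hit rate and negative prediction errors become rarer. Trial-wise values of this kind are what a study could then relate to EEG or pupil measures.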