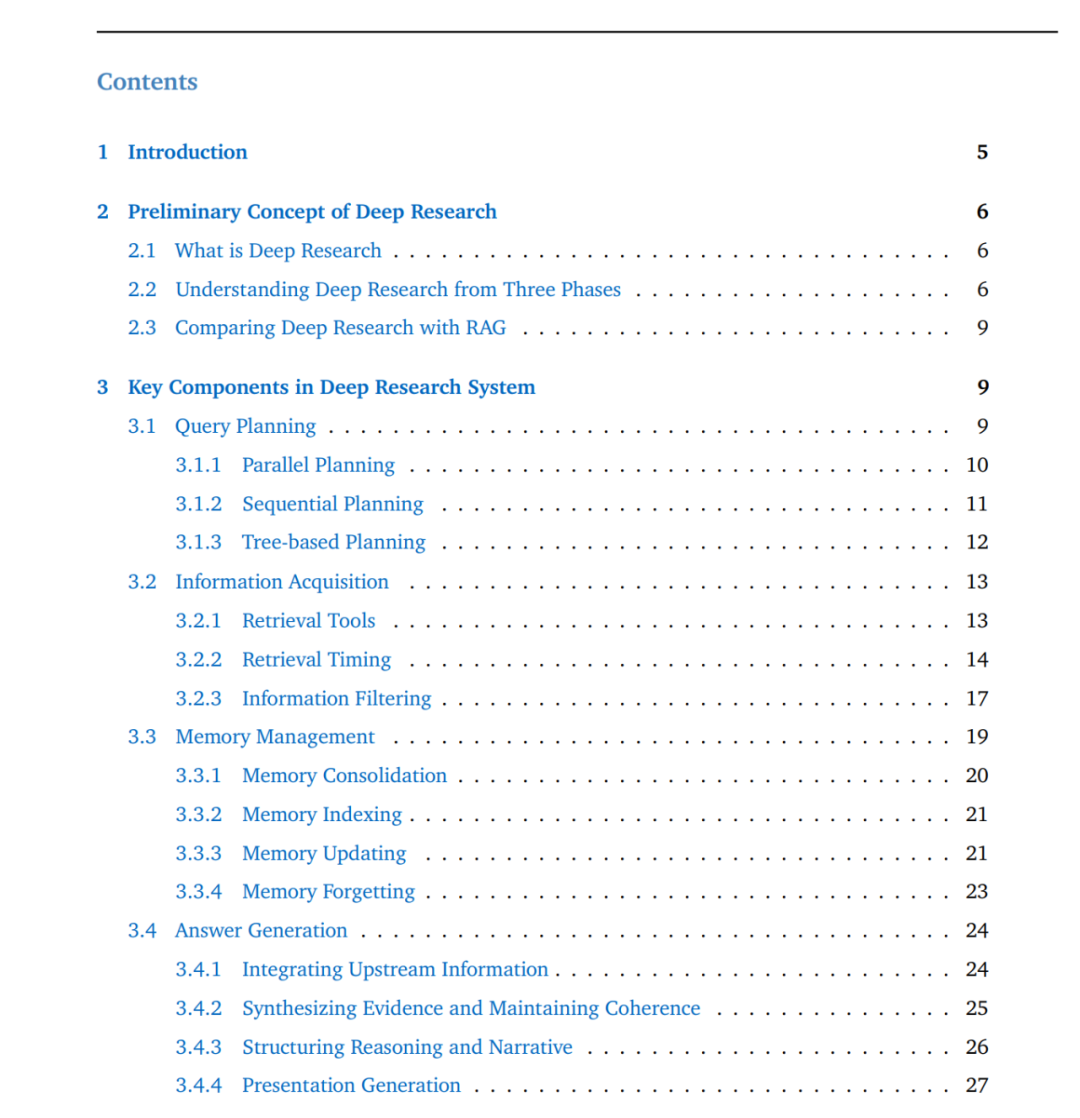

摘要: 大型语言模型(LLMs)正迅速从文本生成器演化为强大的问题求解器。然而,许多开放任务要求具备批判性思维、多来源信息整合以及可验证的输出,这些超出了单轮提示或标准的检索增强生成(RAG)所能实现的能力。近期,大量研究开始探索 Deep Research(深度研究,DR),其目标是将 LLM 的推理能力与外部工具(如搜索引擎)相结合,从而使 LLM 具备作为研究型智能体执行复杂、开放式任务的能力。 本综述系统而全面地审视了深度研究系统,包括清晰的发展路线图、基础组成模块、实践层面的实现技术、关键挑战以及未来方向。具体而言,我们的主要贡献如下: (i) 我们形式化提出了一个三阶段的发展路线图,并将深度研究与相关范式区分开来; (ii) 我们介绍了四个关键组成部分:查询规划、信息获取、记忆管理与答案生成,并为每一部分提供了细粒度的子类目体系; (iii) 我们总结了优化技术,包括提示工程、监督微调以及智能体强化学习; (iv) 我们统一整理了评测标准与开放挑战,旨在为未来发展提供指导与推动。 随着深度研究领域的快速演进,我们将持续更新本综述,以反映该领域的最新进展。

1. 引言

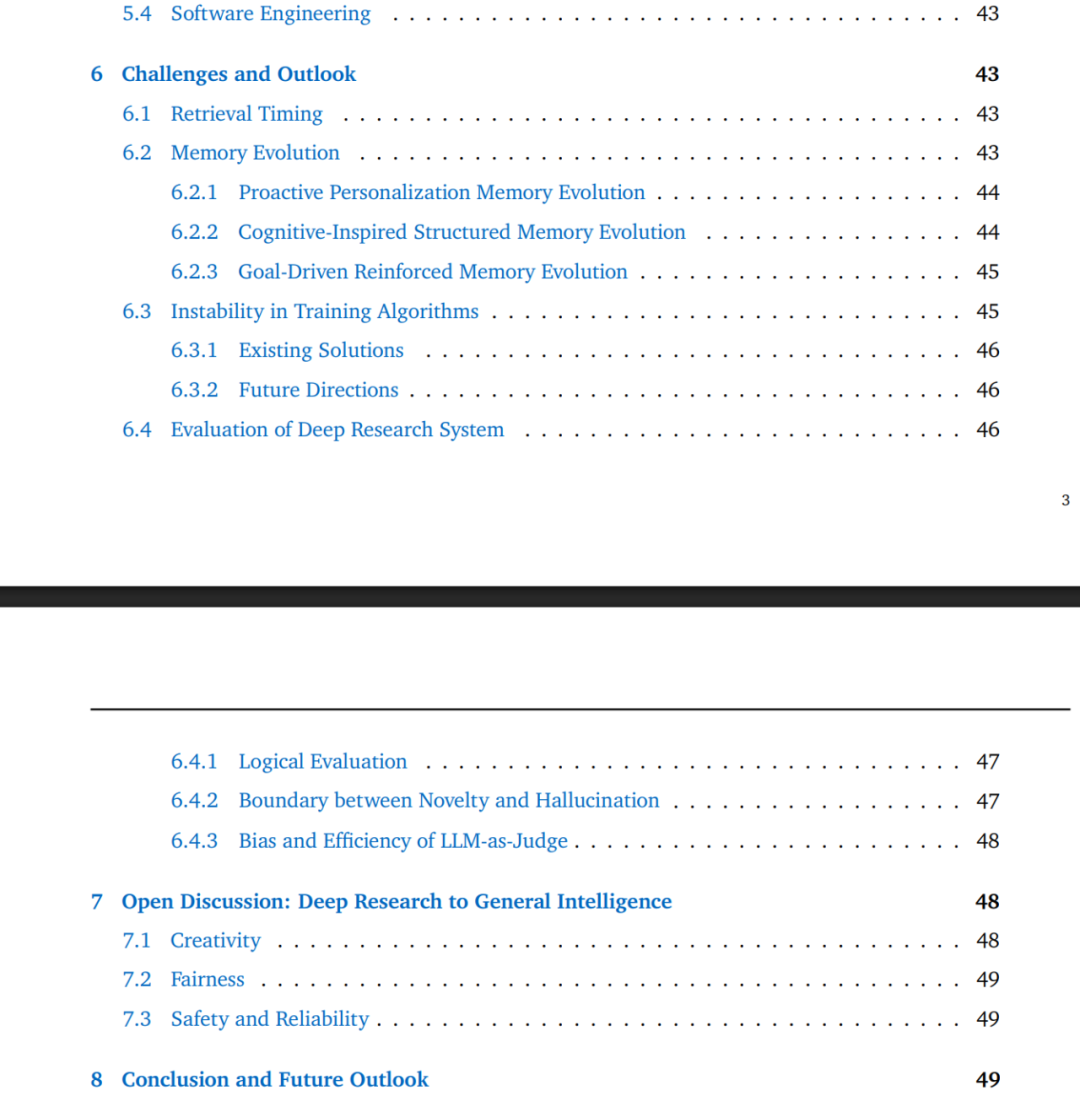

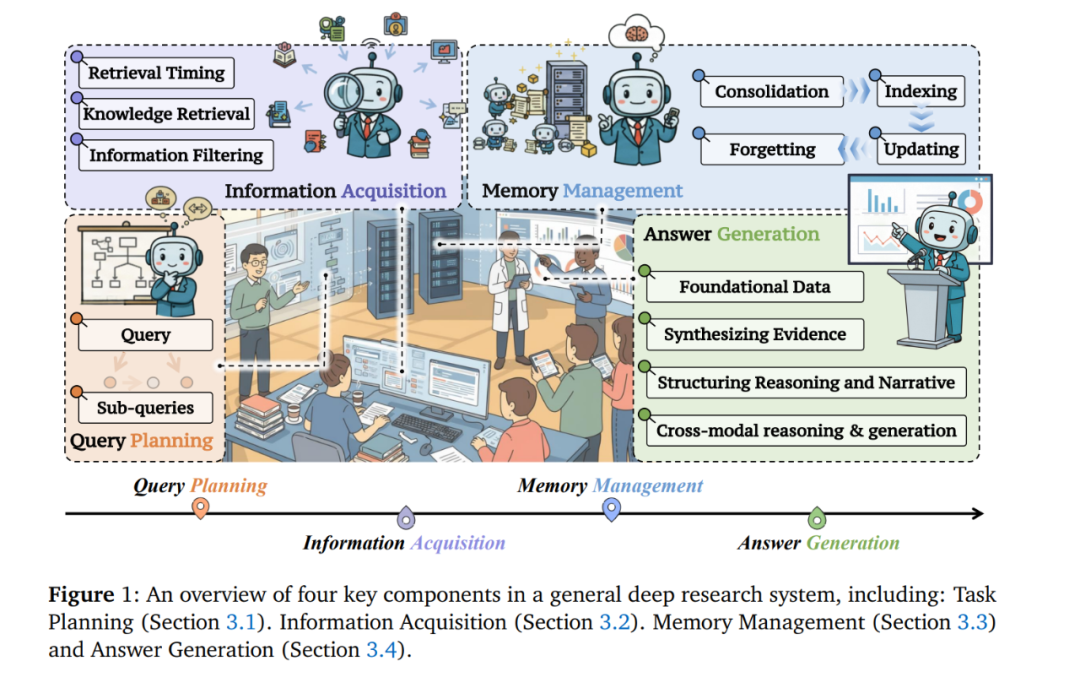

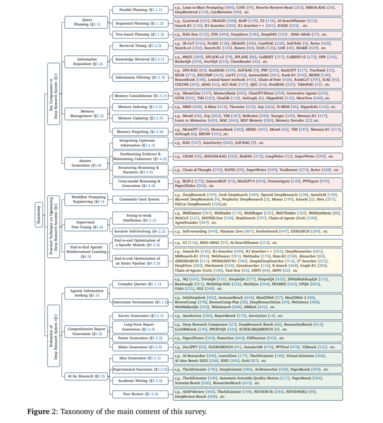

经过大规模网页语料训练的大型语言模型(LLMs)正迅速从流畅的文本生成器演化为能够在实际复杂应用中执行长程推理的自主智能体 [224, 83, 465, 288]。它们在多个领域展现出强泛化能力,包括数学推理 [112, 466]、创造性写作 [95] 以及实用的软件工程 [118, 140, 166]。许多现实世界任务本质上是开放式的,要求批判性思维、基于事实的信息,以及能够独立成文的回应。这远远超出了单轮提示或静态参数化知识所能提供的能力范围 [122, 183, 289]。为弥补这一能力缺口,**Deep Research(深度研究,DR)**范式 [237, 97, 66, 481, 125, 202] 应运而生。DR 将 LLM 纳入一个端到端的研究工作流中,该工作流迭代式地分解复杂问题、通过工具使用获取证据,并将经过验证的见解综合为连贯的长篇回答。 尽管该领域发展迅速,但仍缺乏对 DR 的关键组成、技术细节与开放挑战进行系统性分析的全面综述。现有工作 [458, 31] 多集中于相关领域的发展,如检索增强生成(RAG)与基于 Web 的智能体系统 [401, 200, 285, 456, 316]。然而,与 RAG [89, 72] 相比,DR 采用更灵活、更自主的工作流,不依赖手工构建的流水线,并旨在生成连贯且基于证据的报告。因此,对其技术图景进行清晰梳理已成为紧迫但仍具挑战性的任务。本综述通过提供对 DR 的全面综合来填补这一空白:将其核心组件映射到代表性的系统实现上,整合关键技术与评测方法,并为建立一致的基准测试和推动 AI 驱动的研究持续发展奠定基础。 在本综述中,我们提出了一个面向 DR 系统的三阶段发展路线图,展示其从智能体式信息寻求到自主科学发现等广泛应用。基于该路线图,我们总结了常见 DR 系统的任务求解工作流中的关键组成部分。具体而言,我们介绍 DR 的四个基础组件: (i) 查询规划:将初始输入查询分解成一系列更简单的子查询 [250, 426]; (ii) 信息获取:按需调用外部检索、网页浏览或多种工具 [167, 221]; (iii) 记忆管理:通过受控更新或折叠机制保证与任务求解相关的上下文 [243]; (iv) 答案生成:输出具有明确来源标注的综合性结果,例如科学报告。 这一范围区别于标准 RAG [89, 72] 技术,后者通常将检索视为启发式增强步骤,而不具备灵活的研究工作流或更广泛的行动空间。我们同时介绍如何优化 DR 系统以有效协调这些组件,并将现有方法划分为三类: (i) 工作流提示(workflow prompting); (ii) 监督微调(SFT); (iii) 端到端强化学习(RL)。 本文的结构安排如下:第 2 节给出 DR 的明确定义及其边界;第 3 节介绍 DR 的四个关键组成部分;第 4 节介绍构建 DR 系统的技术细节;第 5 节总结重要的评测数据集与资源;第 6 节讨论未来方向中的挑战。 综上,本综述的主要贡献如下: (i) 我们形式化了 DR 的三阶段路线图,并清晰地区分其与标准检索增强生成等相关技术的差异; (ii) 我们介绍了 DR 系统的四个关键组件,并为每一组件提供细粒度的子类目体系,以全面呈现研究循环; (iii) 我们总结了构建 DR 系统的详细优化方法,为工作流提示、监督微调与强化学习提供实践性洞见; (iv) 我们整合评测标准与开放挑战,旨在支持可比性报告并引导未来研究。

Deep Research 是什么?

Deep Research(DR)旨在赋予大型语言模型(LLMs)一个端到端的研究工作流,使其能够作为智能体,以最少的人类监督生成连贯且基于来源证据的报告。此类系统自动化整个研究循环,涵盖规划、证据获取、分析与报告撰写。 在 DR 框架下,LLM 智能体负责规划查询、从异构来源(如网页、工具、本地文件)获取并过滤证据、维护和更新工作记忆,并综合生成具有可验证性且带有明确引用的回答。下面,我们正式介绍一个三阶段的发展路线图,用以刻画快速演进、以能力为导向的 DR 研究图景,并将其与传统 RAG 范式进行系统对比。

2.2 从三个阶段理解 Deep Research

我们将 DR 视为一种能力演进轨迹,而非价值层级。以下三个阶段描绘了系统可可靠执行的能力从“精确证据获取”到“可读分析整合”,再到“形成可辩护洞见”的逐步扩展。

Phase I:智能体式检索(Agentic Search)

第一阶段的系统主要擅长寻找正确的来源并提取答案,几乎不进行综合。这类系统通常会对用户查询进行重写或分解以提升召回率,检索并重排序候选文档,应用轻量过滤或压缩,并生成带有明确引用、简洁而准确的答案。核心强调点是:忠实于检索内容与可预测的运行效率。 典型应用包括开放域问答 [227, 165]、多跳问答 [425, 344, 265] 以及其他信息寻求任务 [271, 444, 333, 70, 215],这些任务的“真值”通常局限于少量可检索来源。 评测重点包括: * 检索 recall@k * 答案精确匹配 * 引文正确性 * 端到端延迟

体现了该阶段对每 token 的准确性与操作效率的关注。

Phase II:综合式研究(Integrated Research)

第二阶段的系统跳脱单点事实提取,能够生成连贯、结构化的报告,整合来自多个异构来源的证据,并处理冲突与不确定性。研究循环在此阶段变得显式迭代:系统规划子问题、从多种原始内容(如 HTML [323]、表格 [44, 226]、图表 [208, 208])检索与抽取关键证据,最终综合为叙事性报告。 典型应用包括市场与竞争分析 [469, 347]、政策简报 [356]、满足复杂约束的行程规划 [331],以及其他长程问答任务 [66, 434, 378, 49]。 评测重点从短文本的表层匹配转向长文本质量,包括: * 细粒度事实性 [43, 216] * 引文可验证性 [310, 86] * 结构连贯性 [21] * 关键点覆盖度 [379]

Phase II 以适度增加的计算与复杂度换取显著提升的清晰度、覆盖度与决策支持能力。

Phase III:全栈式 AI 科学家(Full-stack AI Scientist)

第三阶段代表着 DR 的更广阔、更具野心的发展方向,旨在让智能体推进科学理解与创造,而不仅仅是信息整合。在此阶段,DR 智能体不仅要汇聚证据,还需能够: * 生成假设 [490] * 执行实验验证或消融研究 [223] * 批判已有论点 [498] * 提出新的观点 [386]

典型应用包括论文审稿 [506, 248, 498]、科学发现 [460, 292, 291] 与实验自动化 [362, 472]。 评测重点包括: * 发现的创新性与洞见性 * 论证结构的连贯性 * 结论的可复现性(包括是否能够从引用来源或代码重新推导结果) * 不确定性校准与透明性

2.3 Deep Research 与 RAG 的对比

许多现实任务本质上是开放式的,需要批判性思维、基于事实的信息,以及可独立成文的回答。这些需求暴露出现有方法(包括传统 RAG 或简单扩大 LLM 参数规模)难以解决的核心局限。以下总结了三类关键挑战:

• 与数字世界的灵活交互

传统 RAG 工作流基于静态检索,依赖预先索引的语料库 [232, 225]。然而现实任务通常要求主动与动态环境交互,如搜索引擎、Web API、代码执行器等 [487, 223, 362]。 DR 系统扩展了这一范式,使 LLM 能够执行多步、工具增强的交互,从而获取最新信息、执行操作并在数字生态中验证假设。

• 自主工作流的长程规划

研究型任务通常包含多子任务协作 [378]、任务上下文管理 [411],以及中间过程的迭代优化 [290]。 DR 通过闭环控制与多轮推理支持智能体实现自主规划、修正与优化,以达成长程目标。

• 面向开放任务的可靠语言接口

LLM 在开放式任务中容易产生幻觉与不一致性 [109, 471, 123, 13, 52]。 DR 系统通过可验证机制,将自然语言输出与真实证据对齐,从而构建更可靠的人类—智能体交互接口。

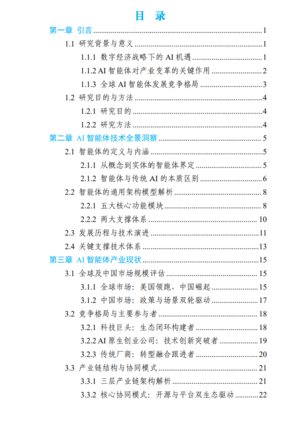

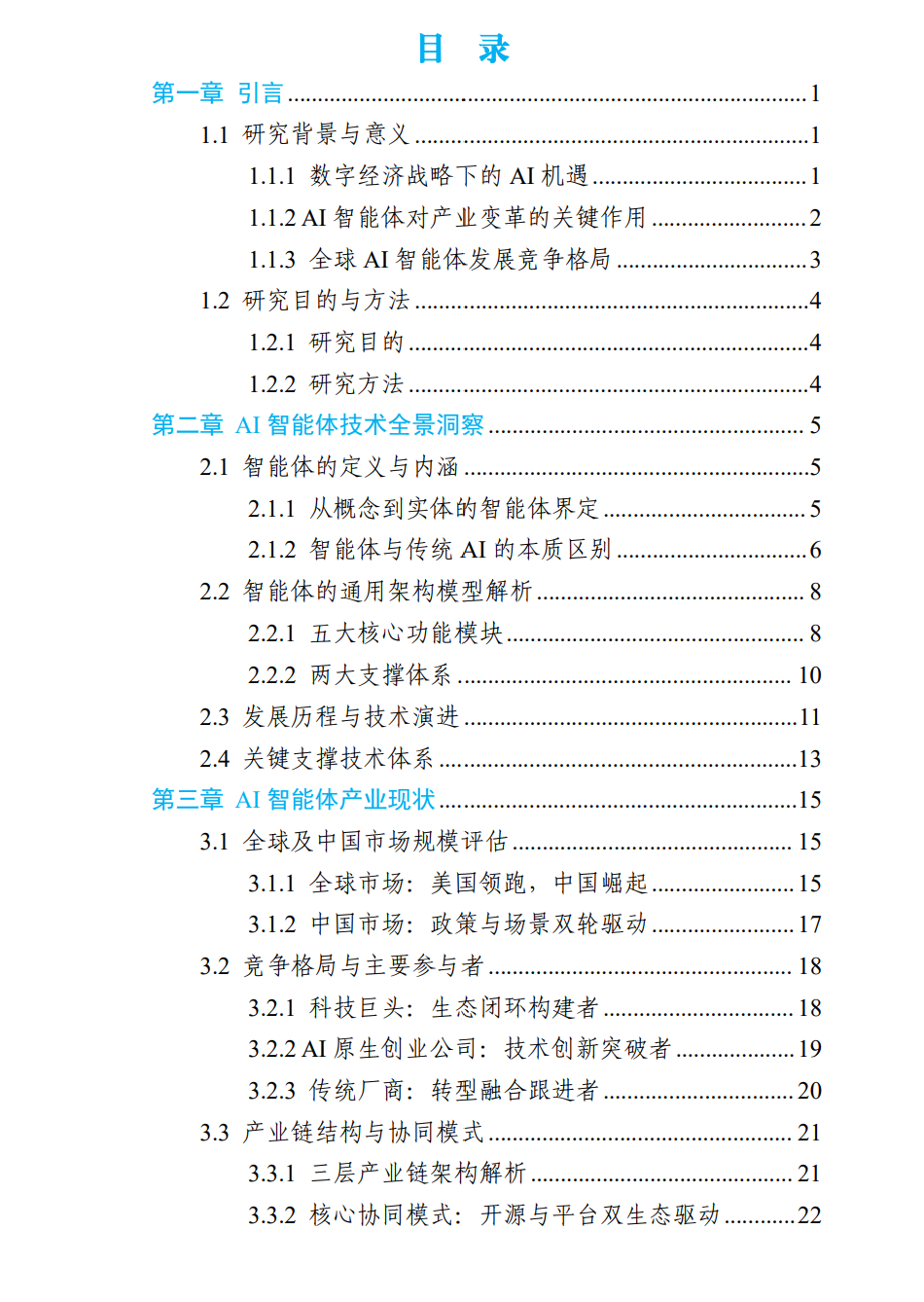

近年来,以大模型为代表的新一代人工智能技术实现爆发式突破,其在自然语言处理、多模态交互等领域的能力跃迁,正深刻重构产业发展逻辑,成为推动经济高质量发展的核心驱动力。在此技术浪潮中,AI智能体(AIAgent)作为大模型的原生应用形态,凭借自主感知、规划决策、工具调用与持续学习的核心能力,完成了从技术概念到产业实践的关键跨越。与传统AI工具相比,AI智能体打破了人机交互依赖明确指令的局限,构建起数字世界与物理世界的智能连接桥梁,有效破解了大模型“有脑无手”的落地困境,成为释放人工智能全产业链价值的关键载体。本报告立足“人工智能+”行动深入实施的战略背景,系统梳理AI智能体的技术体系、产业应用现状与生态格局,深入剖析其驱动产业变革的核心机制,全面研判发展面临的瓶颈与突破方向,最终形成兼具理论深度与实践价值的研究结论,为政产学研用各界协同推进AI智能体创新发展、加速新质生产力培育提供决策参考。

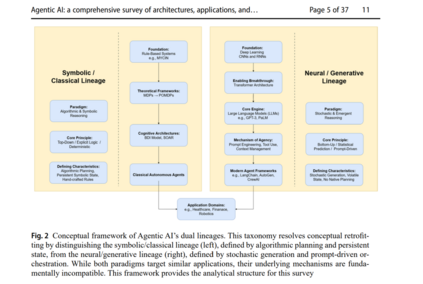

Agentic AI(智能体化人工智能)代表了人工智能领域的一场变革性转向。然而,由于其发展速度迅猛,当前学界对其概念的理解仍相对碎片化,常常将现代神经系统与过时的符号模型混为一谈——这一现象被称为“概念性回溯(conceptual retrofitting)”。本综述旨在打破这一混乱,通过提出一个全新的“双范式框架”,将智能体系统划分为两条截然不同的谱系:符号/经典范式(依赖算法规划与持久状态)与神经/生成式范式(依赖随机生成与提示驱动的编排)。

基于对 2018–2025 年间 90 篇研究的 PRISMA 系统综述方法,我们围绕该框架从三个维度展开全面分析: (1) 各范式的理论基础与架构原则; (2) 在医疗、金融与机器人等领域的具体实现,展示应用约束如何决定范式选择; (3) 不同范式特有的伦理与治理挑战,揭示风险模式与缓解策略的差异性。

我们的分析表明,范式选择具有战略性:符号系统在安全关键领域(如医疗)中占据主导,而神经系统则更适用于数据丰富、需要高度适应性的场景(如金融)。此外,我们识别出关键研究缺口,包括:符号系统在治理模型上的显著不足,以及构建混合神经–符号架构的迫切需求。

最终,本研究提出了一条战略路线图,指出智能体化 AI 的未来不在于某一范式的单独取胜,而在于两者的有机融合,以构建既具适应性又具可靠性的系统。此项工作为未来在智能体系统的研究、开发与政策制定方面提供了必备的概念工具包,以推动稳健且可信赖的混合智能系统的发展。

**关键词:**智能体化 AI · 人工智能 · 系统性综述 · 神经架构 · 符号 AI · 多智能体系统 · AI 治理 · 神经–符号 AI

Introduction(引言)

人工智能(AI)领域正经历一场范式转移:从构建被动的、任务特定的工具,转向工程化能够展现真正“能动性(agency)”的自主系统。现代智能体化 AI 系统(Wissuchek and Zschech 2025;Viswanathan et al. 2025)具备主动规划、上下文记忆、复杂工具使用,以及基于环境反馈自适应行为等能力。这类系统不再只是问题求解器,而是协作伙伴,能够动态感知复杂环境、推理抽象目标,并自主编排一系列行动——无论是独立运行还是作为复杂多智能体生态系统的一部分(Xie et al. 2024;Du et al. 2025)。 为了建立清晰且精确的概念基础,我们首先区分该领域的核心概念。AI 智能体(或单智能体系统)指为完成某项目标而设计的自包含自治系统。它主要以独立方式运行,虽然可能与工具或 API 交互,但其能动性体现为自治性、主动性,以及能够独立完成任务的能力。 例如,一个基于大型语言模型(LLM)的单智能体若被赋予任务“为一个新的移动应用撰写完整的项目提案”,它将会自主拆解任务、开展研究、撰写各部分内容,并完成最终文档的格式化。 相比之下,智能体化 AI(Agentic AI)是一个更广泛的领域与架构范式,旨在构建能够展现能动性的系统。关键在于,它通常涉及多智能体系统(MAS)的编排,其中多个专门化智能体协同工作,通过协调与通信来解决单一智能体无法胜任的复杂问题。 例如,一个用于执行相同任务的智能体化 AI 系统将部署一组专业智能体:由项目管理智能体负责将任务拆分为子目标,研究智能体收集市场数据,写作智能体撰写内容,而质量保障智能体对结果进行审查。他们之间的协作流程正是智能体化 AI 的典型体现。 总结而言,可以将 AI 智能体视为一个功能强大的“单个工作者”,而智能体化 AI则代表一种利用能动性的原则,通常通过设计并管理整支智能体团队来实现。 然而,这一快速演进也带来了概念上的碎片化与时代错置。先前研究指出的关键问题是概念性回溯(conceptual retrofitting)——即错误地使用经典符号框架(如 BDI 模型(Archibald et al. 2024)或 PPAR 感知–规划–行动–反思循环(Zeng et al. 2024;Erdogan et al. 2025))来描述基于大型语言模型(LLM)的现代系统(Plaat et al. 2025),而这些系统在根本上依赖随机生成与提示驱动的编排。这类做法模糊了 LLM 智能体的真实操作机制(Gabison and Xian 2025;Wang et al. 2024;Zhao et al. 2023;Chen et al. 2024),并人为制造了不同架构范式之间的虚假连续性。

已有多篇综述对智能体化 AI 的部分方面进行了探讨,但大多数研究要么范围有限,要么聚焦于单一技术层面、应用领域或高层概念,未能呈现该领域的全貌,也未有效回应概念性回溯的核心挑战。表 1 对这些综述的关注点、贡献与局限性进行了总结。 为解决这些问题,本文首先建立清晰的历史语境(如图 1 所示),展示 AI 的演化历程可分为五个彼此重叠但相对独立的时代:

1. 符号 AI 时代(1950s–1980s)(Liang 2025)

该时代奠定了 AI 的最初愿景,以逻辑与显式知识为基础。MYCIN、DENDRAL 等专家系统(Swartout 1985)依赖手工构建的符号规则,体现了一种自上而下、演绎式的“纯符号范式”。

2. 机器学习(ML)时代(1980s–2010s)(Thomas and Gupta 2020;Nithya et al. 2023;Trigka and Dritsas 2025)

这一转变阶段摆脱了完全硬编码的逻辑,转向从数据中学习。尽管仍高度依赖人工设计特征,但统计学习模型(如 SVM、决策树)推动了分类、推荐等应用发展,为后续深度学习奠定基础。

3. 深度学习时代(2010s–至今)(Hatcher and Yu 2018;Alom et al. 2019;Dong et al. 2021;Khoei et al. 2023;Chhabra and Goyal 2023)

深度神经网络的普及使得系统能够自动学习层级表征,这一时代革新了视觉、语音与文本的感知能力。然而,这些模型仍主要作为强大的模式识别器,而非自治智能体。

4. 生成式 AI 时代(2014–至今)

GAN 的突破与 Transformer 架构(2017)推动了 LLM(如 GPT、BERT)的快速发展,使 AI 从感知迈向生成,能够合成连贯的文本、代码与媒体,为现代智能体化 AI 提供了核心底座——通用、强大的统计推理引擎。

5. 智能体化 AI 时代(2022–至今)

这一前沿阶段聚焦于利用 LLM 的生成能力实现行动与自治。此时代的典型系统包括 AutoGPT 等能够通过规划与工具使用来追求目标的智能体(Durante et al. 2024;Masterman et al. 2024;Piccialli et al. 2025),以及向多智能体系统演化的高级框架,如 CrewAI 与 AutoGen(Acharya et al. 2025;Viswanathan 2025;Plaat et al. 2025;Schneider 2025;Hosseini and Seilani 2025)。与符号范式中的算法推理不同,这一阶段的能动性源自生成式模型的随机编排机制。

这一历史脉络揭示了一个关键事实:智能体化 AI 并非符号 AI 的线性延伸,而是建立在完全不同的神经架构基础之上。为此,我们提出一个全新的概念框架(图 2),以明确区分智能体化 AI 的符号谱系与神经谱系,从而避免概念性错置,并提供统一的理论视角。

本文的四项核心贡献如下:

提出全新的双范式分类法

引入并应用一个新的分析框架(图 2),明确区分符号与神经谱系,避免概念性回溯并实现精准分类。 1. 架构澄清

阐明现代神经框架的运行原理,如提示链式推理与对话编排机制,而非符号式规划。 1. 实证映射

基于 PRISMA 方法系统性调研 90 篇文献,并使用双范式框架对其进行分类,分析研究趋势并基于正确标准评估其架构。 1. 治理锚定

将伦理、责任与对齐挑战嵌入到各范式的技术背景中,确保在正确的技术语境下讨论安全问题。

本文的结构如下:第 2 节提出理论框架与双范式分类法;第 3 节详述系统性方法;第 4 节基于范式分析呈现文献研究结果;第 5 节讨论启示、局限与未来方向;第 6 节总结主要贡献。

自主武器系统(AWS)的发展——有时也带有“致命性”标签,缩写为LAWS——多年来一直处于激烈讨论之中。众多政治、学术或法律机构及行为体都在辩论这些技术带来的后果和风险,特别是其伦理、社会和政治影响,许多声音呼吁严格监管甚至全球禁止。尽管这些武器备受公众关注且被认为影响重大,但“AWS”这一术语具体指代哪些技术以及它们具备何种能力,却往往出人意料地不明确。AWS可以指无人机、航空母舰、无人空中/地面/海上载具、机器人及机器人士兵,或计算机病毒等网络武器。

这种不确定性之所以存在,尽管(或许正是因为)已有大量定义试图从功能上(例如“一旦激活,自主武器‘无需操作员进一步干预即可选择和攻击目标’”:美国国防部,2023年:第21页)或概念上(源自对自主系统、人工智能或机器学习的理论化)来明确该术语。定义仍为不同类型的技术留下了广阔空间,并且结合关于人工智能的更广泛讨论,也为未来发展的潜力和预测提供了可能。除了术语的模糊性,这些系统在何种意义上以及在多大程度上可被称为“自主”的本质也依然含糊不清。尽管自动化能力的发展无疑在推进(Scharre, 2018; Schwarz, 2018; Packer and Reeves, 2020),人类能动性和干预方式的程度不断降低,但完全超越人类控制、因此被许多人担忧的完全自主武器,在很大程度上仍是一种概念上的可能性,而非实际的军事现实。

这些模糊性导致了巨大的意义空白,而这些空白又往往被想象所填充——这是新技术,特别是人工智能的常见做法(Suchman, 2023)。潜在的现实可以扮演重要角色,因为它们是将专业知识传递到社会其他领域(包括新闻、政策制定、研究、教育和民主决策过程)的工具。因此,关于AWS功能及其后果的看法,受到军事、国家和技术未来想象的启发和塑造。这些想象包括地缘政治情景、伦理问题、国家政策或科幻小说。在安全与军事政策中,这些不同现实之间的相互联系甚至被用作一种方法论——例如,“红队演练”——这意味着应用对潜在未来的创造性虚构描述来为实际决策提供信息(The Red Team, 2021)。另一种应用是兵棋推演,这是一种预见未来军事场景的方法,其起源至少可追溯至19世纪,但已适应当代技术和媒体环境,包括虚拟现实和使用大语言模型的基于人工智能的模拟(Goecks and Waytowich, 2024)。

自主武器的前提,被视为占据着一个自身特有的混合空间,这促使我们探索随之而来的无数现实。本书的基本原理认为,只有承认实际技术发展与其相关的愿景和虚拟场景之间持续而复杂的动态互动,才能理解所讨论的这些现实。正是在这种不确定性——想象、可能性和虚构在此交织——的背景下,自主武器变得极具影响力。它们激发出情感、话语、鼓动、(反)行动、投资、竞争、政策或技术与军事蓝图。

关于自主武器主题的出版物通常侧重于其法律、政治或伦理影响(例如,Bhuta等人,2016;Krishnan,2016),这是评估这些技术的第一层级。也有一些著作讨论了其独特的表征(Graae and Maurer, 2021),以及我们见证和体验它们的方式(Bousquet, 2018; Richardson, 2024)。这些著作的基础也基于前面概述的不同现实。本书引入另一种分析自主武器现实的方法,提出一种第二层级的方法:例如,一个伦理问题不仅仅被框定为伦理问题本身,即沿着提出以下规范性问题的思路:“自动化杀人机器会引发哪些道德问题?” 在本书建议的方法中,伦理问题反而被理解为一个促成因素,它有助于在大众文化、政治、新闻或研究中构建、传播和维持对致命性AWS的特定理解。简言之,伦理话语共同创造了其对象的现实。因此,本书所采取的视角将AWS的不同现实置于前台,进而旨在为现有的辩论揭示其(常常是隐含的)基本假设。

本书这篇引言性章节首先勾勒了军事装备日益自动化的技术和政治发展进程。这些发展在理论上被阐述为既具构成性又具述行性,以涵盖全球范围内在理论和实践中对AWS的动态变化和不同理解。随后,本章就这些现实提出了六点思考,有助于界定和巩固AWS的动态含义,这些含义往往在公众、军事和监管领域受到极大关注。章节最后概述了全书的结构并简要总结了各章的贡献。

全书各部分及章节内容

全书结构分为三个独立部分,分别探讨自主武器的当前现实。每个部分都从特定的视角范式分析自主武器:1. 叙事与理论,2. 技术与物质性,以及 3. 政治与伦理。每个部分的开篇由一位艺术家及其对自主武器的构想引入。这种划分基于对跨越这些领域所阐发的不同意义的分析,这些意义构成了AWS的现实,并强有力地影响着如何感知和对待这项技术。

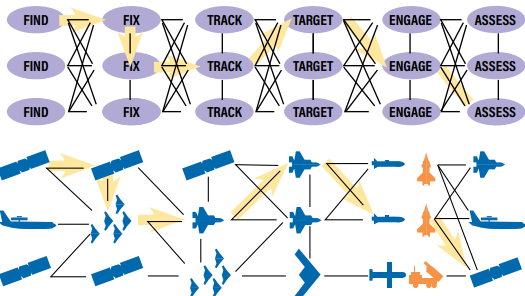

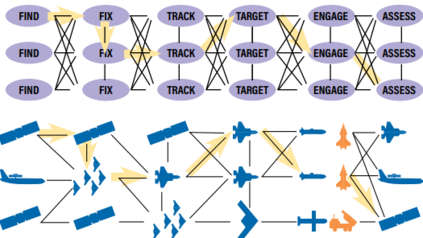

俄罗斯乌克兰战争重塑了当代对战争如何进行与维持的理解。本文认为,现代战争的决定性特征不仅是杀伤链(即连接探测、决策与摧毁的序列)的压缩,更是其在持续干扰下的多样性与韧性。基于乌克兰在整合无人系统、数字化战场管理工具和人工智能方面的经验证据,本分析展示了技术加速如何能在带来短期战术优势的同时,暴露出长期的结构性脆弱。俄罗斯广泛使用的电子战、混合攻击和适应性对抗措施表明,杀伤链优势既充满争夺又十分脆弱。来自以色列、叙利亚和伊朗的对比案例表明,当耐力、后勤和工业产能仍具决定性时,自主性与速度并不能保证战略成功。对北约而言,研究结果强调威慑可信度将取决于由韧性指挥网络、可持续供应体系和政治凝聚力所支撑的分布式、持久性杀伤链架构。文章的结论是,决定未来战争节奏的将是耐力,而非速度。

在乌克兰,从探测目标到实施打击的时间已从数小时缩短至数秒。这种由无人机、商业航天系统和日益普及的人工智能所驱动的压缩,揭示了现代冲突的真正重心:对杀伤链优势的争夺。杀伤链被定义为从探测、决策到摧毁的端到端过程,它是所有现代作战的基础。在此框架下,胜利更少取决于火力,而更多取决于连接传感器与射手的链路的速度、韧性与多样性。

乌克兰利用无人系统和数字化战场管理工具来加速其远程杀伤链。俄罗斯则试图通过电子战、网络行动和对基础设施的混合攻击来破坏它们。双方都在不断调整以重获节奏并剥夺对手的优势。其结果是,这场冲突不仅展示了杀伤链压缩的战略重要性,也揭示了其局限性。包括能源、物流和通信网络在内的民用基础设施,已作为维持战争努力的平行杀伤链而出现。这种压缩与干扰的二元性已成为21世纪战争的一个决定性特征。

本文认为,乌克兰战争带来的决定性启示是,战争的未来将更少取决于杀伤链的压缩,而更多取决于维持这些系统的韧性与多样性。杀伤链优势将属于那些能够在军事和民事领域重建、适应并承受持续干扰的行为体。战术速度必须与结构韧性相辅相成。讨论将通过五个部分展开:(1)界定杀伤链理论的演变并阐释其背景;(2)分析乌克兰战场上的压缩、干扰与多样化;(3)评估人工智能赋能作战与自主性的局限;(4)比较乌克兰经验与其他冲突;(5)概述对北约及其伙伴的战略启示。

利害关系十分严峻。假设未能内化乌克兰的教训,那么,将在未来的冲突中处于结构性劣势——杀伤链更慢、更易受混合干扰的打击、更难以维持长期战斗。对手已经在试验人工智能赋能的目标识别、自主集群和对关键基础设施的破坏。如果不做出调整,就可能将主动权让给那些优先考虑节奏而非合法性、优先考虑胁迫而非正当性的行为体。乌克兰的启示并非简单地认为无人机至关重要或网络战表现不佳;而是认识到现代战争是跨越军事和民事领域的速度、韧性与适应能力的竞赛。秩序的稳定将取决于是否能在其对手定义交战规则之前,获得杀伤链优势。

杀伤链的演变与定义

“杀伤链”这一概念根植于冷战后期,当时美国试图通过技术和信息优势来抵消苏联的数量优势。20世纪70年代末,美国国防部制定了后来被称为“抵消战略”的计划,其核心是“突击破坏者”概念:即使用远程精确制导弹药和实时目标指示,在敌方装甲部队抵达前线之前将其摧毁。这是一项将信息优势转化为杀伤力的战略尝试,为后来成为网络中心战的理论奠定了基础。

到1991年海湾战争时,这些理念已发展为“震慑”学说,强调快速、精确打击对敌方战斗意志产生的心理和系统性效果。在后9/11时代,同样的原则在“发现、锁定、终结”的反恐行动框架下,以更小的规模得到应用,其杀伤链从探测到交战被压缩到几分钟之内,以摧毁恐怖主义网络。每一次演变都反映了相同的逻辑:技术加速将取代数量规模,而信息速度将带来决策优势。

美国空军在21世纪初正式将这一过程编码为“发现、锁定、跟踪、定位、交战、评估”循环,该循环至今仍是联合目标锁定理论的核心。随着时间的推移,这一概念已从战术领域扩展到战役和战略层面的关联。在战术层面,杀伤链的运作以秒或分钟计,例如在反恐突袭或无人机打击中。在战役层面,它们跨越数小时或数天,在整个战区协调多种火力与情报、监视和侦察资产。在战略层面,杀伤链的展开可能需要数周或数月,将国家情报、后勤和工业动员整合到战役规划中。

贯穿这些层面的一个统一见解是,杀伤链的有效性不仅取决于速度,还取决于连接性和韧性。乌克兰的经验代表了这一演变的最先进体现:一个实时、多领域的生态系统,其中商业、军事和民用资产持续互动以产生作战节奏。然而,这也暴露了该范式的局限性。当快速的决策周期未能产生战略成果时,冲突就会演变为消耗战,其中耐力、生产能力和适应性比速度更为重要。

乌克兰战场的压缩、干扰与多样化

乌克兰战争已成为21世纪数据最丰富、技术最活跃的冲突。西方精确制导系统、商业情报监视侦察资产和国内创新的整合,使基辅得以将其远程杀伤链压缩到前所未有的水平。兰德公司报告称,炮击的平均“传感器到射手”周期从2022年的30分钟缩短到2024年的不到1分钟,而对于第一人称视角无人机辅助的接战,甚至短至30秒。

这种压缩基于三项创新:(1)广泛使用第一人称视角无人机进行实时侦察和打击协调;(2)Delta、“克里帕瓦”、GIS Arta等数字化指挥控制工具的普及,整合了战场情报;(3)依赖商业卫星通信和影像,特别是通过“星链”和卡佩拉太空公司的卫星。乌克兰的“无人机军团”计划已培训超过1万名操作员,并计划到2025年中部署约5万架无人机,这标志着民用技术与军事实践前所未有的融合。

消耗仍然严重。皇家联合军种研究所估计,乌克兰每月损失8000至1万架无人机,主要归因于俄罗斯的电子战。然而,这种损失率被快速的本地制造、开源设计和众包维修中心所抵消。乌克兰模式表明,杀伤链优势既依赖于技术先进程度,也同样依赖于工业适应能力。

俄罗斯试图通过系统性干扰来抵消乌克兰的速度优势。其电子战部队(估计沿前线部署了60套主要系统)对GPS和无人机控制频率实施了干扰,降低了情报监视侦察数据流的效率,并瞄准了指挥控制节点。俄罗斯的适应措施相当显著,包括部署“山雀”和“极点-21”电子战系统、“海鹰-30”等人工智能辅助的情报监视侦察无人机,以及广泛使用“柳叶刀”巡飞弹。每一轮压缩都会引发一轮干扰的对抗循环,导致速度带来的回报递减。

近期研究表明,战争的未来不仅取决于压缩,还取决于多样化——即生成并保护多种模块化杀伤链的能力,这些杀伤链能够动态重构以应对攻击。美国和盟国防务界内的“马赛克战争”框架提出了仿照生物韧性建立的“异构、分布式杀伤链”模型。乌克兰的去中心化指挥模式已经反映了这一原则:分层的情报监视侦察网络、冗余的指挥控制节点和多平台协调形成了一个杀伤路径的网状结构。

混合行动与民用杀伤链

对杀伤链优势的争夺延伸至战场之外。俄罗斯的混合战略旨在削弱维持军事节奏的民用基础设施。能源电网、海底电缆、物流走廊和卫星网络都已成为目标。这些构成了“民用杀伤链”,其完整性决定了一个国家维持战争的能力。

在2023年至2025年间,欧洲记录了超过40起与俄罗斯代理势力有关的物理或网络破坏行为。诸如2025年挪威布雷芒厄尔大坝的网络入侵、与电缆干扰相关的瑞典哥得兰岛临时停电,以及对波罗的海海底基础设施的破坏等事件,都展示了一种连贯的破坏模式。此外,在伪造的自动识别系统信号下运作的俄罗斯油轮“影子船队”,模糊了商业与军事领域的界限,造成了持续的海上不稳定。这些行动反映出莫斯科长期以来的信念,即非军事措施可以达成战略效果。

这种方法反映了俄罗斯“主动防御”的条令概念,该概念认为早期破坏对手(军事和民用)系统具有决定性意义。针对欧洲关键基础设施的混合行动,旨在提高支持乌克兰的成本、削弱其凝聚力并侵蚀其韧性。由此产生的环境表明,威慑现在不仅需要保护提供火力的杀伤链,同样需要保护支撑能源、物流和信息生态系统的杀伤链。

人工智能、自主性与节奏合法性困境

人工智能已成为乌克兰指挥和目标锁定系统不可或缺的一部分。“德尔塔”平台利用机器学习整合传感器数据以确定目标优先级。“克罗帕瓦”系统实现火力协调自动化,减少决策延迟。人工智能驱动的图像识别协助处理无人机画面和卫星影像,从而实现更快、更明智的交战决策。

然而,人工智能的整合仍然是部分的。乌克兰的系统保留了人为监督,以确保遵守国际人道法。俄罗斯的方法则更为宽松,在其“柳叶刀”无人机中尝试自主目标锁定,并将人工智能辅助制导集成到其情报、监视与侦察网络中。这种差异反映了一个核心的战略分歧:威权国家倾向于将节奏置于合法性之上,而民主国家则必须在速度与合法性之间取得平衡。

对比经验强化了这种困境。在叙利亚,俄罗斯部队利用人工智能支持的情报、监视与侦察和巡飞弹对非正规部队实施精确打击,展现了高节奏但有限的识别区分能力。在以色列,“火力工厂”人工智能系统在加沙行动中实现了前所未有的打击协同,将杀伤链压缩至十分钟以内。相比之下,伊朗在乌克兰使用“沙希德-136”无人机则显示出相反的情况:低成本、低速、可消耗的系统,为持久力而非节奏进行了优化。这些案例共同表明,人工智能赋能的速度加速提供了战术优势,但不必然带来战略成功。

自主性也引入了人力因素。随着乌克兰面临日益严峻的人口结构限制,无人和半自主系统的扩展代表着一种战略适应,旨在保持战斗力,尽管人员可用性在下降。然而,这种替代只是局部的。可损耗自主系统的使用抵消了人力限制并延长了持久力,但并未消除对人员占领和防御地盘的需求。

战略限制与局限:消耗、升级与持久力

来自乌克兰及可比冲突的经验证据表明,战术速度不能保证战略成功。快速压缩能带来局部优势,但无法决定消耗战争的结果。兰德公司的分析指出,俄罗斯的后勤能力和生产深度使其能够承受损失,而乌克兰在节奏上取得的优势仅带来了微小的领土收益。正如约瑟夫·奈所指出的,网络和人工智能效应已被证明是传统持久力的“放大器,而非替代品”。

此外,升级风险限制了节奏优势可利用的程度。如果俄罗斯面临战场崩溃,使用战术核武器的可能性依然存在。北约缺乏对等的非战略性核选项,这使威慑复杂化并增加了升级风险。杀伤链加速通过缩短决策时间线,可能无意中压缩了升级阶梯,迫使战略困境在数分钟而非数小时内得到解决。

乌克兰战争也表明,高科技冲突可能比预期持续更久。通过精确打击和自动化取得决定性结果的预期被证明是错误的。相反,工业产能、适应性和社会韧性决定了持久力。对北约的启示在于,杀伤链优势必须与长期维持能力和政治凝聚力相结合。

比较可复制性与经验教训

虽然乌克兰提供了无与伦比的经验洞察,但其经验并非普遍适用。本土国防工业和安全边界使以色列得以整合人工智能与自动化;而乌克兰则缺乏这些条件。相比之下,叙利亚的环境使俄罗斯能够在低风险条件下进行试验,而无需面对对等级别的干扰。伊朗的无人机生产模式展示了可扩展性,但在面对先进电子战时则不具备生存能力。

乌克兰的独特优势在于其开源创新生态系统。民用技术专家、志愿者开发人员和公开来源情报社群实时协作以调整系统。“DeepStateMap”和“Molfar Intelligence”等平台模糊了情报与行动主义的界限,创造了一种社会性杀伤链整合形式。该模式反映了一种持续适应的国家能力——这是未来威慑战略的一个关键变量。

战略影响与对北约的政策建议

乌克兰战争揭示了西方防务态势中的结构性脆弱。现代冲突的决定性优势不在于平台数量,而在于杀伤链架构的完整性与适应性。对北约而言,适应这种环境需要围绕四个相互关联的重点重新调整其力量设计:速度、韧性、多样化和持续保障。

- 在监督下制度化人工智能赋能的速度优势

乌克兰的经验证实,人工智能可以加速指挥与控制流程。然而,缺乏监督的自动化会带来升级和错误风险。北约应建立一个操作性框架,使人工智能能够管理目标发现、数据融合和优先级排序,同时保留人类操作员的交战决策权。这种“人在回路之上”的结构既能保持速度,又不会削弱法律和政治问责制。为将此能力制度化,盟军转型司令部应领导一项关于人工智能赋能目标锁定的常设计划。联合演习应测试各国系统间的算法协调、互操作性和决策延迟。在此规模的整合需要共享数据标准、共同的测试制度以及从战术到战略层级的明确问责链。

- 加固与分布式指挥控制网络

乌克兰冲突的每个阶段都表明,电子战和网络干扰能够分割指挥网络。北约不能假设其系统在持续攻击下仍能保持协调一致。因此,盟国应寻求冗余、去中心化的指挥控制结构,使其在脱离上级梯队时仍能自主运行。这包括使用商业卫星、跨域路由协议和适用于降级环境的低带宽战场通信,构成预先配置的后备网络。作战条令应向任务式指挥原则演变,赋予下属单位在通信中断期间的决策权。分布式而非集中化,是对抗频谱拒止和精确打击的唯一可持续防御方式。

- 恢复工业产能与持续保障能力

消耗战的结果取决于工业速度。北约现有的国防工业基础缺乏灵活应变的能力。盟国应建立一个“集体生产框架”,明确关键制造依赖性,并在成员国间分配产能激增的责任。库存管理必须从库存盘点转向产能评估——即评估弹药、无人机和传感器在火力下的替换速度。这将需要一个由预先商定的生产共享协议和融资机制构成的和平时期网络。这不是回归冷战时期的动员,而是对威慑的重校准,以反映工业而非数量的竞争。

- 防御民用杀伤链

俄罗斯针对欧洲能源、物流和信息基础设施的混合战役表明,民用系统已成为战场的延伸。因此,北约的威慑框架必须将这些“民用杀伤链”视为战略资产。盟国应为成员国设定可执行的韧性基准(例如,电网冗余、海底电缆保护、以及针对网络物理攻击的预先安排恢复机制)。这些标准应通过北约-欧盟合作框架下的集体韧性审计进行监督。此领域的威慑将更少来自拒止,而更多来自展现出的快速重建能力。

- 在加速决策环境中管控升级

更快的决策周期伴随着相应的升级风险。如果俄罗斯面临战场崩溃,有限使用核武器仍是一个可能的选择。因此,北约的威慑规划必须纳入时间性升级控制,即确保压缩的杀伤链不会挤占政治决策窗口。这需要现代化核协商机制,使其能在高节奏下运作。决策模拟应测试升级阈值在信息降级和时间约束下如何保持。整合常规速度管理与核信号传递,对于防止无意的危机升级至关重要。

- 重建人力与政治韧性

技术并未取代人类意志的核心地位。乌克兰经验表明,战术系统的重要性低于组织的适应能力和领导层的持久耐力。相应地,北约应投资于人力资本,优先发展认知准备、分布式领导和政治凝聚力。公共传播策略应强调威慑依赖于集体韧性,而非瞬时精确。随时间推移维持民主意志,依然是北约相对于专制对手的比较优势。

结论

乌克兰战争生动展示了现代军队如何在压力下适应。它表明,杀伤链优势是必要的,但不足以确保胜利。技术加速提供了暂时优势;而战略成功取决于持久耐力与恢复能力。

乌克兰的战地创新展示了当商业、军事和民用系统整合时,适应性强的民主国家所能取得的成就。然而,它也暴露了持久的制约:压缩的杀伤链放大了遭受干扰的成本,而韧性成为现代战争的限速因素。俄罗斯尽管遭遇战术挫折却仍能坚持,表明工业和社会耐力能够抵消技术不对称。

本文的核心论点是,杀伤链优势衡量的不是速度,而是系统韧性——即在遭受干扰后维持决策和打击能力的能力。未来的冲突将青睐那些能够维持多重、相互重叠的杀伤链(军事、工业、信息和社会)的行为体,使其能够利用敌方弱点并达成战略目标。胜利将不属于最快的网络,而属于最持久的系统。

对北约而言,这些观察构成了明确的战略要务:盟国必须设计能够承受持续压力的分布式、冗余、持久的杀伤链架构。人工智能将推动这场变革,但其成功同样取决于后勤、人力和政治凝聚力。北约的威慑可信度将不取决于其打击速度,而取决于其在遭受干扰后维持作战的能力。因此,乌克兰的核心教训是结构性的:二十一世纪的威慑将取决于整个杀伤链生态系统的韧性。北约的任务是在下一次冲突检验其韧性之前,将这种韧性制度化。

NeurIPS 是关于机器学习和计算神经科学的国际会议,宗旨是促进人工智能和机器学习研究进展的交流。NeurIPS 2025 会议将于12月2日至12月7日在圣地亚哥会议中心召开。

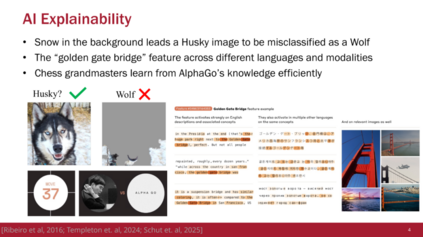

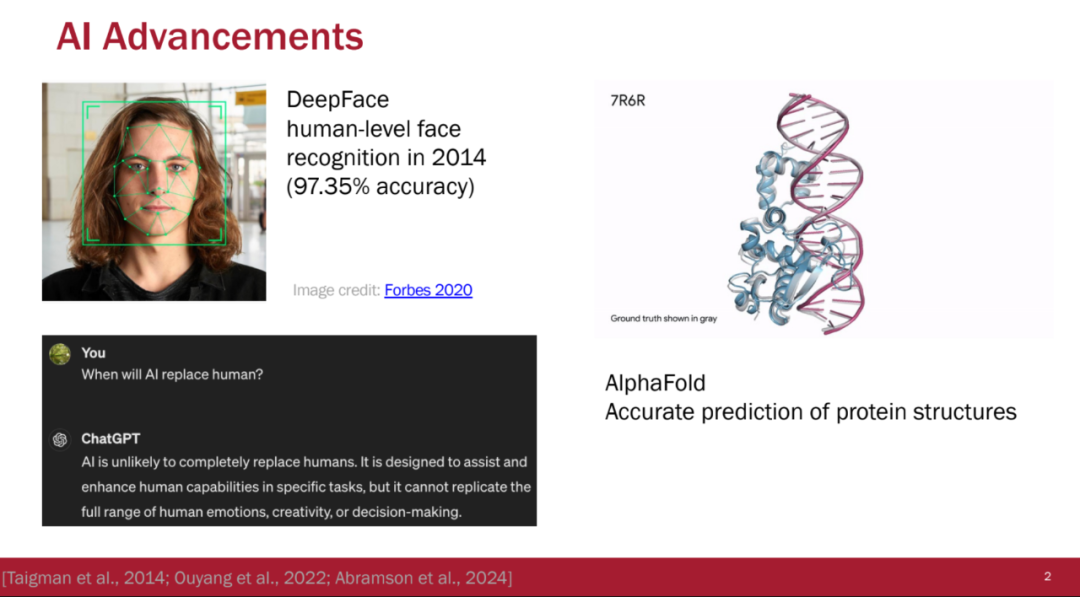

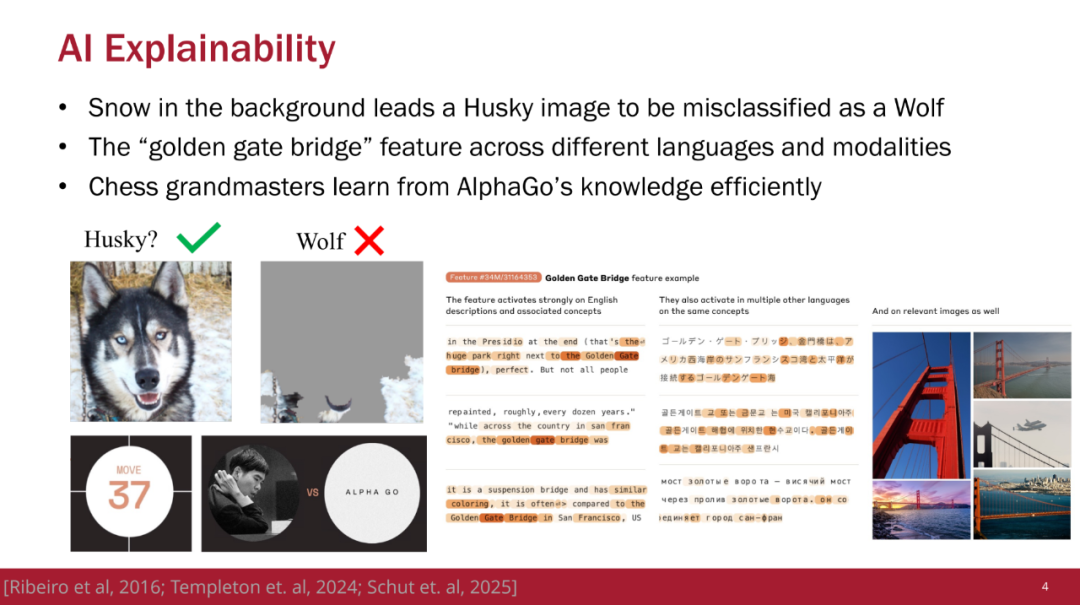

理解 AI 系统行为已成为确保安全性、可信性以及在各类应用中有效部署的关键。 为应对这一挑战,三个主要研究社区提出了不同的可解释性方法: * 可解释人工智能(Explainable AI) 聚焦于特征归因,旨在理解哪些输入特征驱动了模型决策; * 数据中心人工智能(Data-Centric AI) 强调数据归因,用于分析训练样本如何塑造模型行为; * 机制可解释性(Mechanistic Interpretability) 研究组件归因,旨在解释模型内部组件如何对输出作出贡献。

这三大方向的共同目标都是从不同维度更好地理解 AI 系统,它们之间的主要区别在于研究视角而非方法本身。 本教程首先介绍基本概念与历史背景,阐述可解释性为何重要,以及自早期以来该领域是如何演进的。第一部分技术深度解析将涵盖事后解释方法、数据中心解释技术、机制可解释性方法,并通过一个统一框架展示这些方法共享的基本技术,如扰动、梯度与局部线性近似等。 第二部分技术深度解析则聚焦于内生可解释模型(inherently interpretable models),并在可解释性的语境下澄清推理型(chain-of-thought)大语言模型与自解释型 LLM 的概念,同时介绍构建内生可解释 LLM 的相关技术。我们还将展示可使这些方法易于实践者使用的开源工具。 此外,我们强调了解释性研究中前景广阔的未来研究方向,以及其在更广泛的 AI 领域中所引发的趋势,包括模型编辑、模型操控(steering)与监管方面的应用。通过对算法、真实案例与实践指南的全面覆盖,参与者将不仅获得对最先进方法的深刻技术理解,还将掌握在实际 AI 应用中有效使用可解释性技术的实践技能。

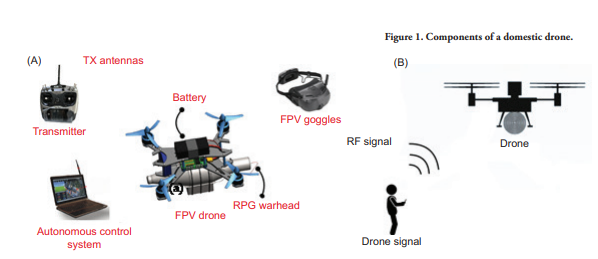

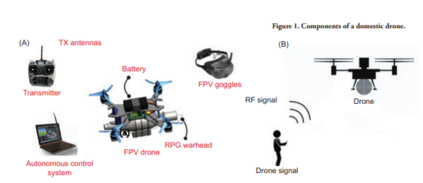

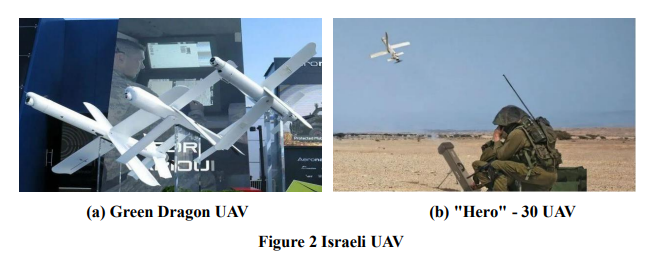

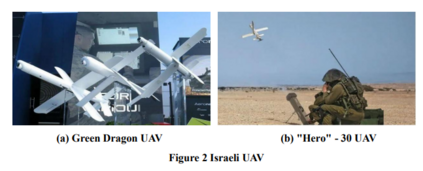

低成本、精密型无人机近期的快速增长,连同相关的技术挑战,是作战战术中的一个显著特征。随着俄罗斯和乌克兰双方愈发依赖这些成本效益高但工艺粗糙的无人机,他们塑造了一个以高效率和低成本为特色的新战场。俄乌双方均发射无人机以攻击对方。无人机或许能以较低效率定位目标,但仍可造成重大破坏。无人机能够摧毁并碳化单价约400万美元的坦克。然而,无人机的单位成本不超过1000美元。目前市场上大多数反无人机设备(包括激光器、高功率微波武器和射频干扰器)的局限性和低效性,在无人机日益普及的背景下已显而易见。本案例研究表明,现有的反无人机技术无法有效压制双方使用的神风敢死队无人机和武器化无人机。由于这些失败,俄乌两军采用了新技术,例如金属网格和尼龙网屏障,这些措施在一定程度上能有效摧毁和拦截无人机。本文通过案例研究,呈现了自2022年2月以来交战双方对无人机的依赖以及所采用的反无人机战术。本研究调查了当前双方可用于减轻武器化无人机战场影响的解决方案,对其进行了评估,并论证了这些方案固有的缺陷如何推动针对武装无人机的新策略和对抗措施的研发。

本文结构如下:第1部分为引言。第2部分论述无人机在俄罗斯-乌克兰冲突中的重要性。第3部分阐述现有反制措施在摧毁无人机方面的局限性。第4部分介绍在此冲突中出现的新型反无人机解决方案。第5部分重点呈现研究结果,阐明新反制措施及创新方法的优势与不足。最后,第6部分为结论。

无人机蜂群正逐渐成为集电子对抗、信息攻防与火力打击于一体的综合性新型武器平台,已成为未来战争的重要形态,也催生了反无人机蜂群系统的快速发展。针对无人机技术发展迅速并成为战场重要威胁的问题,本文分析了典型无人机蜂群系统级目标的特点,研究了防空导弹、高炮/弹炮结合、高能激光与高功率微波等反制无人机蜂群的主要手段,并对反制性能进行了对比分析。提出了一种基于无人机搭载微后坐力自动枪的新型空中反无人机拦截系统,研究了其体系架构、作战概念与流程、涉及的关键技术与创新点,为加强反无人机系统能力提供了技术参考。

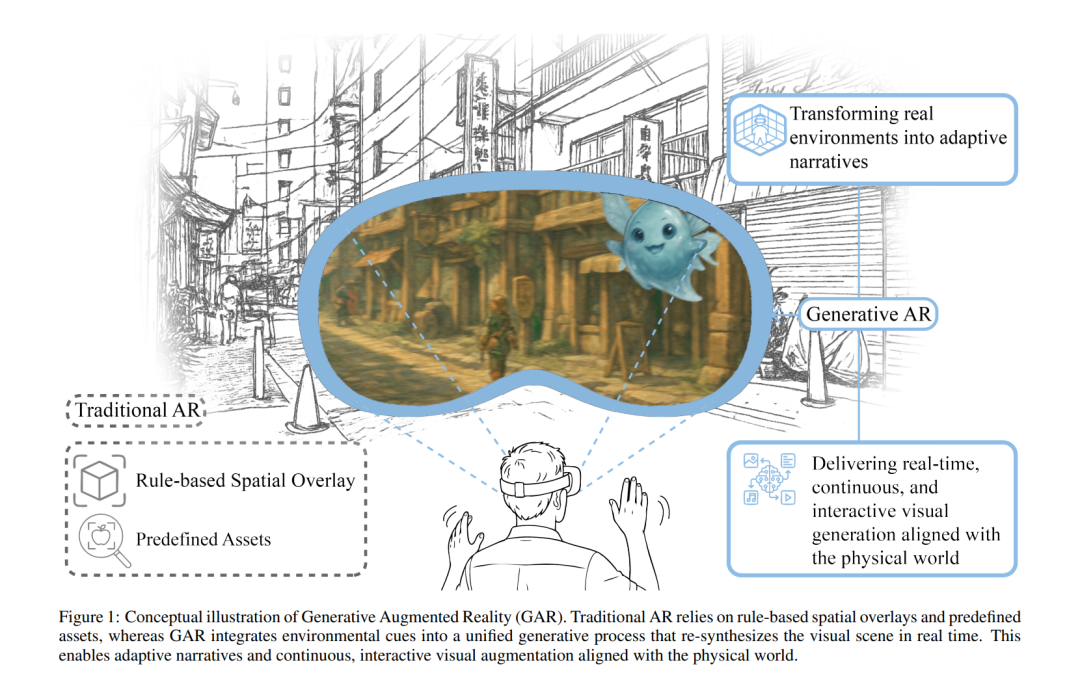

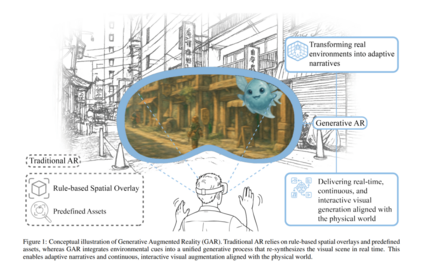

本文提出了**生成式增强现实(Generative Augmented Reality, GAR)这一下一代范式,将“增强”从传统 AR 引擎的世界组合(world composition)重新定位为一种世界再合成(world resynthesis)过程。GAR 以一个统一的生成式主干(unified generative backbone)取代传统 AR 引擎的多阶段模块,使环境感知、虚拟内容与交互信号能够作为条件输入,被联合编码(jointly encoded)**到连续视频生成过程中。 我们形式化地讨论了 AR 与 GAR 之间的计算对应关系,回顾了使实时生成式增强成为可能的技术基础,并概述了利用其统一推理模型的潜在应用前景。我们将 GAR 视为未来的 AR 范式,能够在真实感、交互性与沉浸感方面提供高保真体验,同时也带来了关于技术、内容生态系统,以及伦理与社会影响等方面的新研究挑战。 增强现实(Augmented Reality, AR)的出现源于长期以来人们希望将数字内容与基于用户真实世界感知与行动的物理环境相融合的目标。早期的相关形式包括 Thomas 和 David(1992)在飞机装配任务中叠加数字指令的研究,以及 Milgram 和 Kishino(1994)提出的“现实—虚拟(Reality–Virtuality)连续统”概念,这些工作将 AR 置于虚拟现实与物理现实之间的一种中间融合形态。随着感知、空间追踪以及实时渲染技术的进步 [Azuma, 1997a],能够使数字内容与真实物理场景对齐成为可能,AR 逐渐演化为一种技术框架,使用户能够将虚拟元素作为其周围环境的一部分进行感知和交互,并广泛应用于工业指导、教育、导航与交互媒体等领域。 然而,随着技术进步不断提升 AR 对内容保真度、交互精确性及自然响应性的要求,传统 AR 架构背后的组合式范式暴露出固有局限。现有系统通常依赖显式建模的资产(assets)、预定义的交互规则以及确定性的图形管线。这种结构使得合成高保真交互变得困难,例如流体材料行为、复杂机械动力学,甚至生物体的响应性。此外,扩展到更广阔、更具表现力的内容空间往往会增加内容创作负担并降低系统稳定性:生成高保真 3D 资产需要大量人工投入,但即便是精心制作的资产,其行为表现力仍然有限,使得真正响应式或逼真的交互难以实现。 与此同时,生成式模型的快速发展,尤其是基于扩散模型的视频生成模型 [Ho et al., 2022; Kong et al., 2024],引入了一种构建视觉体验的全新方式。这类模型能够在高层条件(如文本意图 [Luo et al., 2023]、运动提示 [Bai et al., 2025]、参考帧 [Hu, 2024] 或行为信号 [Guo et al., 2025])的驱动下,生成时间连贯、语义扎实的视频内容,覆盖并超越物理世界与想象世界的场景。与其将场景视为增强的固定背景,生成式视频模型将“现实”表示为一种可学习、可扩展的过程,其中物理一致性与时间演化在统一的潜空间中表达。随着此类模型逐步迈向实时推理 [Yin et al., 2025] 与可控流式生成 [Lin et al., 2025b],它们将计算重点从“叠加内容”转移至“在交互驱动下生成世界的演化”。 本文从概念与技术两方面,对生成式增强现实(Generative Augmented Reality, GAR)作为下一代空间计算(spatial computing)的计算框架,进行前瞻性综述。我们的主要贡献包括: • 形式化传统 AR 组合式管线向生成式世界再合成的计算转变,并从感知基础、控制流、资产管理与渲染机制等方面给出对比性表述。 • 综述支撑 GAR 的关键技术,包括流式视频生成模型、计算效率与质量优化、多模态控制机制以及资产管理方法。 • 分析 GAR 的未来应用图景,以及其在空间体验、具身创造、动态故事生成、协作式世界构建与混合现实生态系统方面的潜在变革能力。

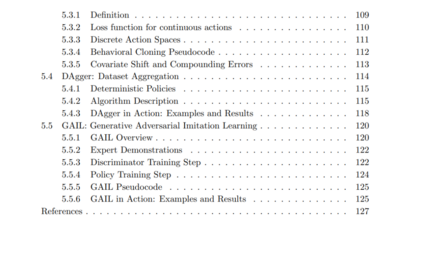

具身智能体(如机器人和虚拟角色)必须持续选择动作以有效完成任务,从而求解复杂的序列决策问题。由于手动设计此类控制器十分困难,基于学习的方法逐渐成为有前景的替代方案,其中最具代表性的是深度强化学习(Deep Reinforcement Learning, DRL)和深度模仿学习(Deep Imitation Learning, DIL)。DRL 依靠奖励信号来优化行为,而 DIL 则利用专家示范来指导学习。 本文档在具身智能体的背景下介绍 DRL 与 DIL,并采用简明而深入的文献讲解方式。文档是自包含的,会在需要时引入所有必要的数学与机器学习概念。它并非旨在成为该领域的综述文章,而是聚焦于一小部分基础算法与技术,强调深度理解而非广泛覆盖。内容范围从马尔可夫决策过程(MDP)到 DRL 中的 REINFORCE 与近端策略优化(Proximal Policy Optimization, PPO),以及从行为克隆(Behavioral Cloning)到数据集聚合(Dataset Aggregation, DAgger)和生成对抗模仿学习(Generative Adversarial Imitation Learning, GAIL)等 DIL 方法。 关键词: 深度强化学习,深度模仿学习,马尔可夫决策过程,REINFORCE,近端策略优化(PPO),行为克隆(BC),数据集聚合(DAgger),生成对抗模仿学习(GAIL)。 具身智能体(例如机器人与虚拟角色)必须持续决定应采取何种动作,以有效执行任务。这一过程本质上是一个序列决策问题:智能体需要在时间维度上不断选择动作来控制自身的执行器,使其能够移动、感知并操控环境,最终完成所分配的任务。 手动设计此类序列决策机制众所周知地困难重重。其挑战包括:构建能够解释智能体高维、多模态感知数据的特征提取器,以及设计从这些特征到执行器指令的最优非线性映射。在许多情况下,控制器还必须具备记忆能力,并主动管理感知过程本身,从而进一步增加了手工工程的复杂性。 一种强有力的替代方案是依赖机器学习。基于学习的方法能够端到端构建控制策略,同时学习感知特征提取器以及从这些特征到执行器命令的映射。为此,这些方法需要一种反馈信号,用以指示智能体在执行任务(无论是行走、物体操作、导航,或其他技能)时表现的优劣。此类反馈通常由人类专家提供。 在某些情境中,人类专家能够直接评估智能体的行为,为期望的动作给予正奖励、为不期望的动作给予负奖励。深度强化学习(Deep Reinforcement Learning)算法利用这一奖励信号来训练端到端控制策略。在其他情境中,专家通过示范来展示如何完成任务。智能体行为与专家示范之间的差异构成了强有力的学习信号,深度模仿学习(Deep Imitation Learning)算法便利用该信号训练端到端控制器。

1.1 概述

本文件旨在向读者介绍应用于具身智能体的深度强化学习(DRL)与深度模仿学习(DIL)。文档首先介绍马尔可夫决策过程(MDP)的形式化框架,并描述精确与近似的求解方法。在近似方法中,本文件将介绍经典算法 REINFORCE [21],并重点讨论近端策略优化(Proximal Policy Optimization, PPO)[19],这是当前最广泛使用且在控制具身智能体方面非常有效的强化学习算法之一。 随后,文档将过渡到深度模仿学习,介绍三类基础方法:(a)行为克隆(Behavioral Cloning)[14, 1];(b)其交互式扩展数据集聚合(Dataset Aggregation, DAgger)[15];(c)生成对抗模仿学习(Generative Adversarial Imitation Learning, GAIL)[6]。

1.2 目标读者

本文件面向具有大学程度数学与计算机科学背景、希望学习应用于具身智能体的深度强化学习与深度模仿学习的学生与研究人员。尽管假设读者具备基本数学基础,本文件仍包含一章用于回顾理解后续内容所必需的核心数学原理。 不要求机器学习的先验知识,因为所有必要概念将在其出现时引入。本文件旨在自包含:从数学到机器学习相关概念,均以循序渐进、教学友好的方式在需要时呈现。

1.3 内容范围

本文件源于作者编写的课程讲义,并采用简洁而深入(depth-first)的文献处理方式。其目的并非构成该领域的综述,而是刻意聚焦在一小部分基础算法与技术上,优先强调深入理解,而非广泛覆盖。 这一选择基于如下理念:对核心方法的深刻理解,比起对大量现有与未来变体的表层性浏览,更能为独立学习更广泛内容打下坚实基础。

1.4 阅读指南

如果读者需要回顾概率论、信息论与微积分的基本原理,应从第 2 章(数学基础)开始。之后,可根据兴趣沿两条主要路径阅读文档,如下所述。 * 若读者对深度强化学习感兴趣,建议继续阅读第 3 章(马尔可夫决策过程)和第 4 章(深度强化学习)。若同时希望了解深度模仿学习,可继续阅读第 5 章(深度模仿学习)。这类读者也可以跳过第 5.1 节,该节提供了对第 3、4 章关键思想的简要回顾。 * 若读者主要对深度模仿学习感兴趣,而不关心深度强化学习,则可以直接阅读第 5 章(深度模仿学习)。如前所述,该章包含理解模仿学习算法所需的关键概念回顾。

1.5 延伸阅读

文档所述内容可由相关资源进一步补充,以帮助读者获得更深入的理论与实践理解。 对于深度强化学习,Stable Baselines3(SB3)[17] 提供了广泛使用的强化学习算法的可靠 PyTorch 实现,是优秀的实验平台。此外,OpenAI 的教育资源 [13] 结合理论与实践,具有很高的学习价值。Sutton 与 Barto 的教材 [20] 依旧是该领域奠基且高度推荐的参考文献,系统介绍了强化学习原理与方法。 对于深度模仿学习,多篇综述可用于扩展视野与理解历史背景 [7, 23, 22],同时也有聚焦虚拟角色领域的特定综述 [12, 9]。实践资源方面,imitation 库 [2] 与 ML-Agents [8] 提供了基于 PyTorch 的多种现代模仿学习算法的实现。

**

**

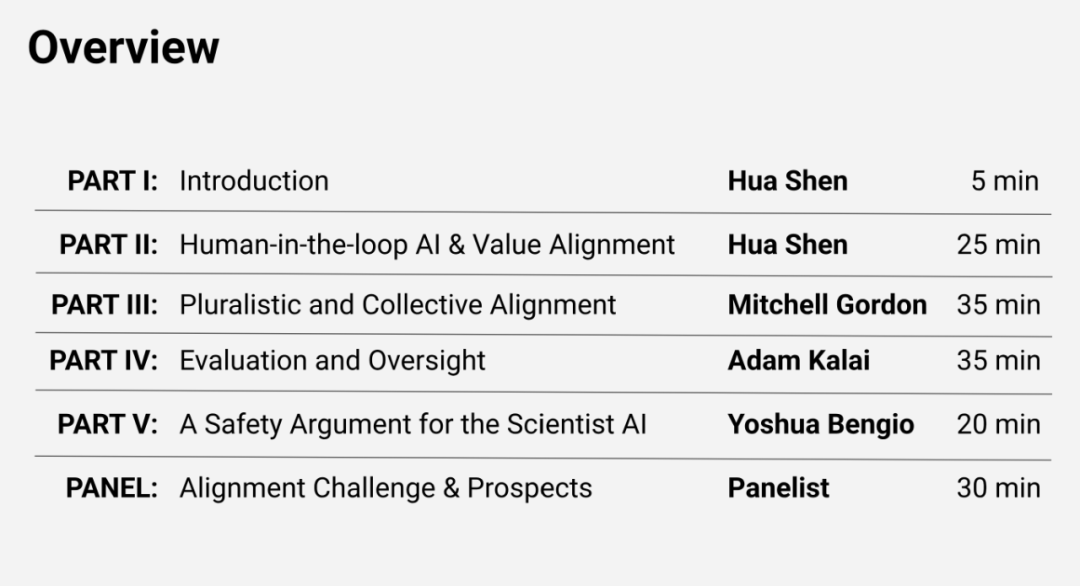

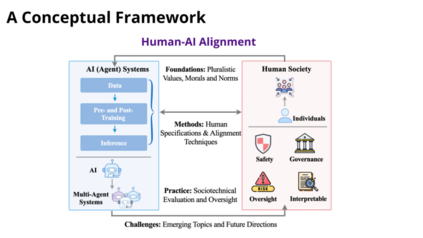

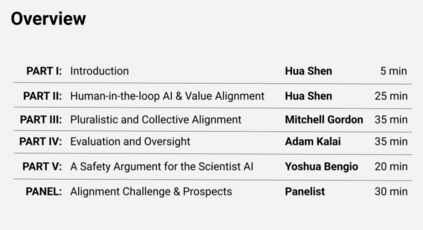

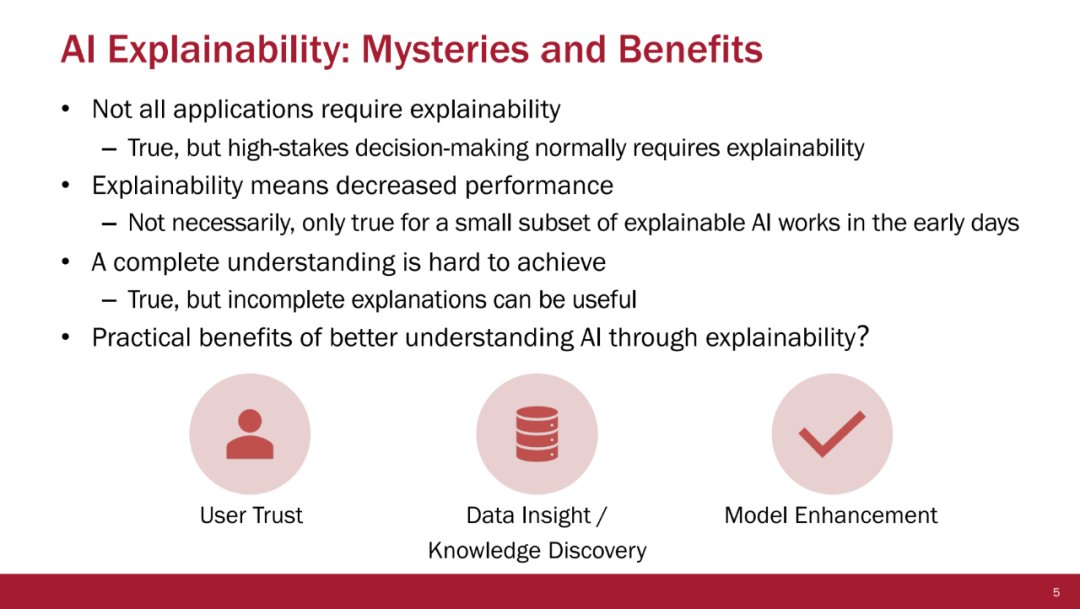

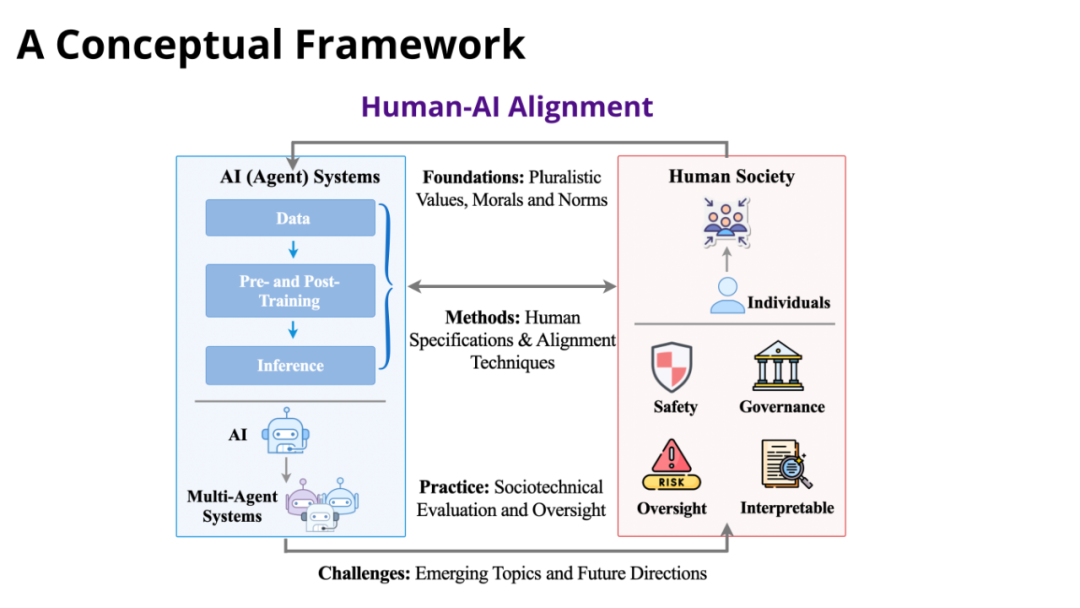

随着通用人工智能系统的迅速发展,使这些技术与人类价值、伦理与社会目标保持对齐已成为一项紧迫任务。传统方法通常将对齐视为一种静态的、单向的过程,而本教程将其重新定位为一种动态的、双向的关系:人在其中与 AI 系统不断相互适应。我们提出了一个结构化的人类–AI 对齐框架,并系统性地探讨如何在整个对齐流程中增强人类能动性。 本教程围绕三个核心领域展开:基础(AI 应与哪些价值对齐?)、方法(如何在系统各阶段赋予人类更大的对齐主导权?)、以及实践(AI 部署会带来哪些社会技术影响?)。课程最终将以一个跨学科专家小组讨论作为总结,四位领先学者将围绕新兴的挑战与未来研究方向展开对话。 本教程旨在为参与者提供关键的概念基础、实用的方法论,以及对不断演进的对齐生态的批判性视角。包括幻灯片、代码资源与录制内容在内的全部材料都将在我们的教程网站上公开获取。

https://hai-alignment-course.github.io/tutorial/

1 描述(Description)

通用人工智能的快速发展带来了一个迫切需求:使这些系统与人类价值、伦理原则以及社会目标保持对齐。该挑战被称为 AI 对齐(AI alignment)[1],它对于确保 AI 系统既能有效运作,又能在最小化风险的同时最大化社会收益具有关键意义。传统上,AI 对齐常被视为一种静态的、单向的过程,旨在引导 AI 系统实现期望结果并避免不良后果[2]。然而,这种单向视角已难以满足需求,因为 AI 系统正以动态且难以预测的方式与人类交互,形成反馈循环,影响着 AI 的行为与人类的反应[3]。这种不断演化的互动关系要求我们从根本上转向一种认识——即人类与 AI 之间关系的双向性与适应性[4]。 尽管以往的对齐教程主要将 AI 对齐视为一种满足人类与机构预期的静态拟合过程,本教程则将对齐重新定义为人类与 AI 之间持续演化的互动过程。为阐明人类与 AI 在对齐中的动态角色,我们提出了一个人类–AI 对齐(Human-AI Alignment)概念框架(见图 1),并系统性地解释人类如何能够在对齐流程的各个阶段获得更强的作用能力。具体而言,本教程围绕三个核心问题展开探讨: 1. 基础(Foundations)——人类期望 AI 与哪些价值与规范对齐? 1. 方法(Methods)——如何在构建对齐 AI 的过程中赋能人类? 1. 实践(Practice)——AI 对人类与社会的社会技术影响是什么?

同时,为激发讨论并推动未来研究方向,本教程也将通过综合讨论的形式系统探讨第四部分: 4. 挑战(Challenges)——由三位主讲人与四位跨领域讨论嘉宾,从新兴议题与开放问题出发,对人类–AI 对齐的未来展开深入讨论。

目标(Goals)

本教程旨在通过以下四大目标为受众带来价值: 1. 全面概览(Comprehensive Overview):提供一个系统化的人类–AI 对齐整体视角,突出人类在对齐流程中的持续参与。 1. 知识与理解(Knowledge and Understanding):提供与人类价值、对齐技术以及 AI 社会影响相关的系统知识。 1. 实践技能(Practical Skills):通过交互式代码笔记本与动手练习,使参与者能够掌握可操作的工具,并在多类 AI 系统中实现基本的对齐策略。 1. 促进讨论(Facilitate Discussion):推动对未来挑战、开放问题与新兴机会的批判性讨论,为参与者未来的研究工作提供灵感。

重要性与影响(Importance and Impacts)

由于当前对齐框架难以充分应对现存的对齐挑战,对掌握人类–AI 对齐全景(包括技术基础与社会技术影响)的专业人才需求正不断上升。本教程旨在弥补这一缺口,使参与者能够在对齐研究、政策制定以及实际部署中发挥有意义的作用。 通过兼顾概念框架、技术方法与批判性讨论,本教程确保参与者能够全面理解当前对齐研究的真实状态,而不会将对齐视为一个已经解决的问题。互动式专家讨论环节进一步培养了受众分析快速演进领域所需的批判性视角与判断能力,使其能够在未来推动人类–AI 对齐方向的深化与创新。

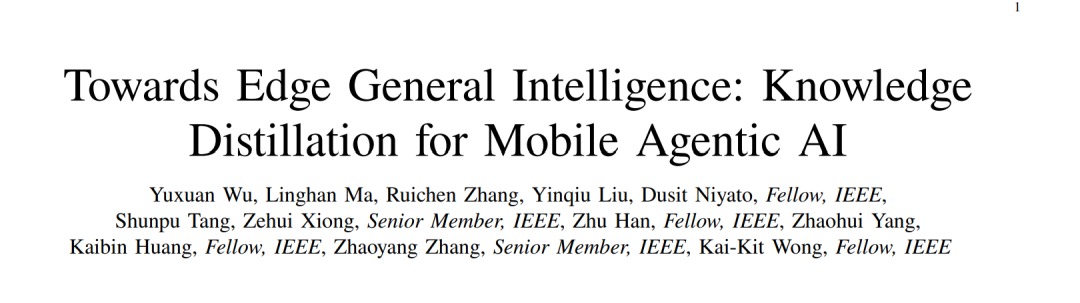

摘要——边缘通用智能(Edge General Intelligence, EGI)代表了移动边缘计算的一种范式转变,即智能体能够在动态且资源受限的环境中自主运行。然而,将先进的智能体 AI 模型部署在移动端和边缘设备上仍面临显著挑战,主要源于有限的计算能力、能耗预算与存储资源。为应对这些限制,本文综述探讨了将知识蒸馏(Knowledge Distillation, KD)融入 EGI 的方法,并将 KD 定位为实现无线边缘高效、通信感知与可扩展智能的关键推动力。特别地,我们强调了为无线通信和移动网络专门设计的 KD 技术,如信道感知的自蒸馏、跨模型信道状态信息(Channel State Information, CSI)反馈蒸馏,以及鲁棒的调制/分类蒸馏。此外,我们回顾了天然适用于 KD 与边缘部署的新型架构,如 Mamba、RWKV(Receptance、Weight、Key、Value)以及跨架构蒸馏,它们能够增强模型的泛化能力。随后,我们讨论了多类应用,其中基于 KD 的架构在视觉、语音与多模态任务中实现了 EGI。最后,我们指出了 KD 在 EGI 中的关键挑战与未来方向。本文旨在为研究者在 EGI 时代探索面向移动智能体 AI 的 KD 驱动框架提供全面参考。

关键词——边缘通用智能(EGI)、移动智能体 AI、知识蒸馏(KD)、无线边缘智能

I. 引言

A. 背景

移动边缘计算市场正在经历显著增长,预测显示其市场规模将从 2024 年约 16.5 亿美元增长至 2032 年超过 135 亿美元¹。推动这一增长的动力主要来自对低时延计算以及更高质量体验(QoE)的需求,而移动与物联网设备的数量正快速攀升。这样的发展格局催生了一场重要的技术变革,即所谓的“智能体化(agentification)”,通过集成大型语言模型(LLMs)和其他先进 AI 模块,使边缘设备具备自主能力。这一转变将传统被动的边缘节点转化为主动的移动智能体 AI 系统,使其能够感知环境、进行推理,并在无须人工干预的情况下执行复杂的多步骤任务。 这一智能体中心的范式具有多重优势。移动智能体 AI [1] 能够实现更高程度的自动化与个性化,因为智能体可以学习用户偏好并在设备本地实时适应动态场景。本地计算显著降低了时延,并通过减少对集中式云服务器的依赖提升了数据隐私。此外,这些智能体可以主动预判问题、优化流程,并在不同系统之间协同操作,从而提升运行效率、加速问题解决、增强服务灵活性。通过在网络边缘嵌入复杂的认知能力,移动智能体 AI 为更加智能、响应迅速且安全的应用铺平了道路,这是迈向 EGI 的关键步骤。EGI 被定义为:边缘设备在严格的资源与时延约束条件下,能够执行类似云端 AI 的通用推理与问题求解能力 [2]。 EGI 的实现依赖于移动智能体 AI 的部署。LLMs 为这些智能体提供了认知引擎,在规划、推理和工具使用方面展现出卓越能力。然而,LLMs 巨大的计算、存储与能耗需求与移动端和边缘设备的资源受限特性根本不兼容 [3]。这种“部署鸿沟”是实现 EGI 愿景的首要障碍。 为弥合这一差距,知识蒸馏(Knowledge Distillation, KD)作为一种压缩大型模型的关键方法应运而生。KD 指的是训练一个较小的“学生”模型去模仿一个更大、更强的“教师”模型的行为。这使得学生模型能够在紧凑的模型规模中保留教师的高级能力,从而适用于资源受限的边缘硬件部署 [4]。

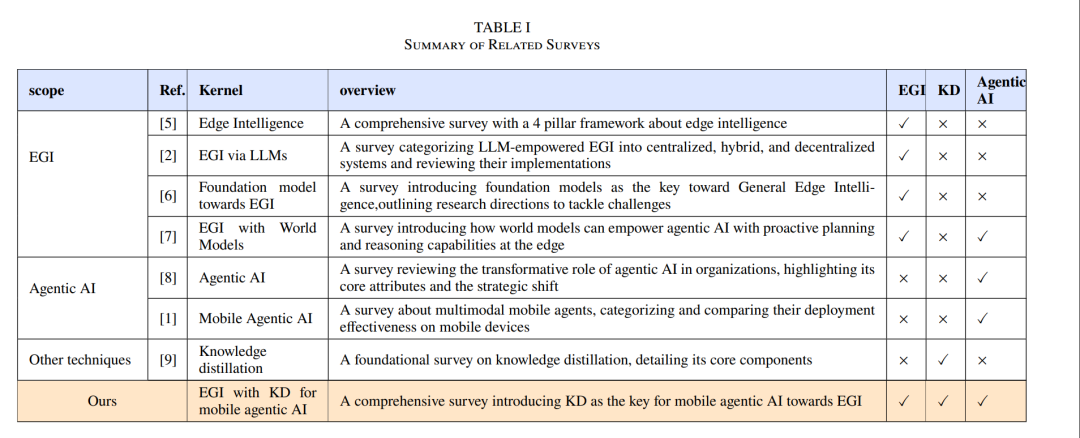

B. 与相关综述的比较及本文贡献

利用 KD 推进智能体 AI 并实现其在 EGI 中的部署,已展现出巨大潜力,可显著降低计算开销并增强其在动态无线环境中的适应性。本文旨在提供关于 KD 基础、其在智能体 AI 中的应用,以及支撑 EGI 部署的新型技术的全面综述。表 I 展示了相关综述的深入比较,重点强调 KD、智能体 AI,以及它们在 EGI 中的实现方式。

现有综述主要关注 KD 的发展与优化。例如,Gou 等人 [9] 提供了 KD 的基础性综述,详细介绍了知识类型、训练策略和师生架构等核心组成部分。 此外,也有若干综述研究智能体式 AI 的应用。Hosseini 等人 [1] 回顾了智能体式 AI 在组织中的变革性作用,强调其核心特性以及从“人类辅助 CoPilot”向“自主 Autopilot”模型的战略性迁移。Wu 等人 [8] 则综述了多模态移动智能体的领域,将其方法分为基于提示与基于训练两类,并比较了它们在移动设备上的部署效果。 与此同时,越来越多的研究展示了智能体式 AI 在推动 EGI 发展中的重要性。Xu 等人 [5] 提供了关于边缘智能的全面综述。随着强大 LLMs 的发展,EGI 的研究在最近几年迅速增长。Chen 等人 [2] 提供了基于 LLM 的 EGI 分类框架,将其分为集中式、混合式和去中心化系统,并回顾了其实现方式。He 等人 [6] 提出,将基础模型集成至边缘系统是迈向 EGI 的关键方向,并给出了未来研究挑战。围绕智能体认知核心,Zhao 等人 [7] 则分析了世界模型如何为智能体 AI 提供主动规划与推理能力,使其能够在边缘侧运行。 然而,现有研究缺乏对适用于 EGI 约束条件的系统化 KD 方法的深入探讨,同时也缺乏对智能体 AI 在边缘环境中所面临的资源限制与模型适应性挑战的全面分析。本文填补这一空白,对先进的模型自适应技术(如无线蒸馏 [10]–[13]、以及为边缘而设计的新型架构 [14]–[17])进行系统性讨论。此外,我们展示通过 KD 如何有效适配多种 EGI 部署场景,如自动驾驶车辆 [18], [19]、无人机(UAV)[20], [21]、机器人 [22], [23],以及其他物联网应用 [24]–[26]。 本文的主要贡献总结如下: * 我们针对无线通信场景提供了 KD 技术的专门回顾,强调 KD 如何提升信道估计、反馈压缩以及无线边缘的资源高效模型部署能力。

我们系统综述现有 KD 技术及其优势,强调其与新型架构集成的潜力,并进一步全面讨论在 EGI 中结合 KD 与这些架构所带来的机遇。

我们分析现有模型的局限性,并基于这些不足,介绍超越 Transformer 的新型架构,以及与知识蒸馏结合的调优技术,以适配边缘设备部署需求。

我们进一步从技术与伦理两个维度分析当前 EGI 面临的挑战,并提出潜在的未来发展趋势与解决方案。

C. 本文结构

**

**

本文结构如图 1 所示。 第 II 节介绍 EGI、移动智能体 AI 与知识蒸馏的基本概念,并进一步阐述三者之间的相互关系。随后,第 III 节系统阐述了将 KD 融入 EGI 的新型架构与无线蒸馏方法,并展示现有模型取得的研究进展。第 IV 节描述 KD 在 EGI 中的作用及其在多个特定领域的应用。最后,第 V 节总结本综述的经验与目前领域面临的挑战,并从技术与宏观层面提出未来研究方向。

Agentic AI 提供了一个自主的“感知–规划–行动–记忆”循环,而 EGI 则将这一范式扩展到网络边缘,使其能够在严格资源约束下获得广义认知能力。二者共同勾勒出智能化、可适应与自主的边缘系统统一愿景。

A. 智能体式 AI 的兴起与边缘通用智能的愿景

1) 移动智能体式 AI:

智能体式 AI 指能够在最少人工监督下,通过感知、推理、规划和行动来实现目标的自主系统 [8]。这些系统在一个连续循环中运行,通常称为 智能体循环(agentic loop),由四个核心模块组成:感知(Perception)、规划(Planning)、行动(Action)和记忆(Memory) [8]。

• 感知(Perception):

感知模块整合多模态信息,以形成对环境的连贯理解。如图 2 所示,该过程从物理传感器或数字通道采集数据开始。随后对原始数据进行处理,以提取关键特征并识别相关实体及其属性。

• 规划(Planning):

规划模块负责制定一系列行动以实现高层目标,通常利用 LLM 作为认知核心。如图 2 所示,智能体规划由静态、预定义的动作序列向动态规划演进——智能体会根据环境反馈持续更新计划,从而确保强健的适应性。

• 行动(Action):

智能体执行所选动作,与环境交互并对其产生影响。动作种类包括模仿人类使用图形用户界面(GUI)进行点击或输入 [27](见图 2),或通过 API 调用进行更深层的系统集成,如修改设备设置或自动化应用导航。在多智能体系统中,通信本身也是关键行动,使智能体能够协调并分配任务 [27]。

• 记忆(Memory):

记忆模块使智能体能够跨时间保留信息,提供连贯交互所需的上下文,并支持持续学习。如图 2 所示,记忆通常分为两层: * 短期记忆: 维护当前会话的上下文,通常由 LLM 的上下文窗口管理; * 长期记忆: 跨会话存储知识,如已学习的事实和经验,通常由外部向量数据库实现。智能体通过相似度检索访问该知识库,这是检索增强生成(RAG)的核心机制。

此外,**协作(Coordination)与适应(Adaptation)**是智能体式 AI 处理动态环境中长时任务的关键能力 [28], [29]。 * 协作通过动态多智能体协同实现,使多个智能体交互以达成共享目标 [29]; * 适应则是智能体从环境中学习并实时调整行为以追求高层目标的能力 [28],其根本依赖于集成记忆系统与强化学习 [30]、终身学习 [31] 等机制。

强健的协作协议与记忆驱动的适应性之间的协同,使现代智能体式 AI 与众不同,为更鲁棒、更自主的系统铺平道路。

当其部署在无线与边缘计算环境中时,智能体循环的每一部分都必须在有限计算、存储与通信带宽的约束下运行。这一挑战推动了通信协议设计的重大转变:从追求原始吞吐量转向 语义效率(semantic efficiency) 的范式,即优先传输简洁且高价值的信息,而非冗长原始数据 [32]。 面向 6G 的新兴框架提出“内容感知”网络,可智能地优先处理语义关键数据,模糊应用层与网络层的界限,使网络从被动管道转变为信息交换的主动参与者 [32]。

2) 边缘通用智能(EGI):

EGI [2] 是一种变革性范式,旨在使边缘设备具备通用的认知能力,使其能够在动态环境中自主地感知、推理与行动。EGI 的终极愿景是在网络边缘实现人工通用智能(AGI):系统不仅能够理解、推理、规划和从经验中学习,且具备接近甚至超过人类水平的认知能力。 与传统边缘智能主要依赖静态、任务特定模型不同,EGI 强调: * 通用性(versatility)

可适应性(adaptability)

自主认知推理(autonomous cognitive reasoning) [5]

为此,EGI 依赖基础模型,使设备能够在无需频繁再训练的情况下执行多类任务,并在实时中动态适应上下文与环境变化。

这种智能根植于“知识”这一更深层概念,它不仅包括事实信息,还包括复杂推理模式、上下文理解与在大规模数据中学习到的决策能力。EGI 的架构在“知识如何分布”上形成一个连续谱:

• 集中式架构:

由云端强大的 LLM 提供全部知识,负责复杂推理与规划;边缘设备仅承担数据采集与命令执行。

• 去中心化架构:

通过在每个边缘设备上部署中等规模或小型语言模型(SLM),使其拥有自身知识库,实现本地推理与点对点协作 [33]。

• 混合式架构:

在二者之间取得平衡: * 本地 SLM 提供关键的、常用的低时延知识; * 云端 LLM 处理大范围、更深层的认知任务。

EGI 的潜在应用场景广泛且具有颠覆性,将重塑多个领域的人机交互方式,包括自动驾驶、工业自动化、智慧城市与个性化医疗等。 **

**

**

**

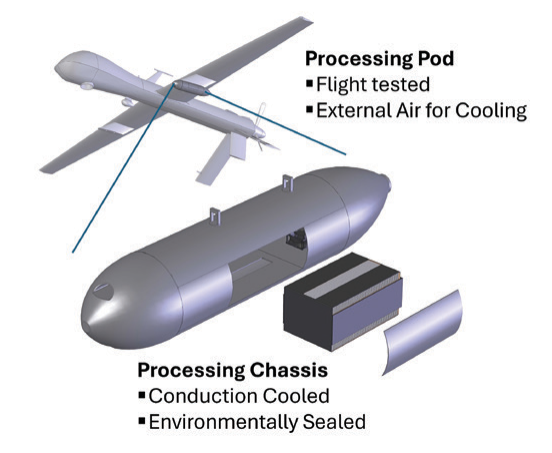

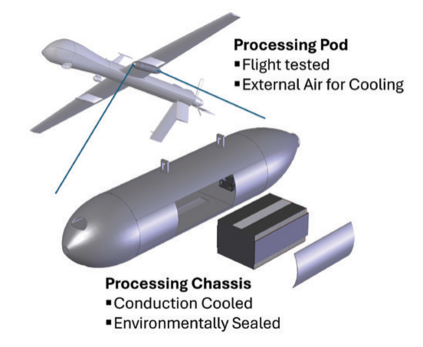

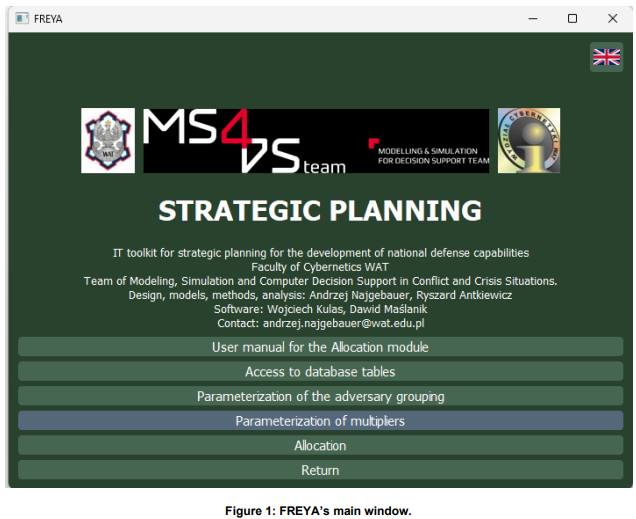

大规模冲突威胁日益增长,强调利用新能力(包括新兴与颠覆性技术(EDT))规划多域作战(MDO)需要通过实验和战争游戏来测试其有效性,以提升部队战备状态。2024年10月23日至25日,在拉斯佩齐亚北约海事研究与实验中心(CMRE)组织并实施了一场关于“EDT支持下的多域作战集体防御规划流程”的兵棋推演。本文总结了该推演结果,并指出了利用建模与仿真(M&S)、推演及人工智能来完善集体防御作战规划流程的一些新实验方向。此次推演旨在全面测试EDT在需要触发《华盛顿条约》第五条的大规模冲突中对实施多域作战的影响。推演具有实验性质,设有3个参演团队(一方红色,两方蓝色——创新与传统型),独立于演习控制组(EXCON)之外,针对同一红色方行动计划制定蓝色方响应部队的战略决策。参演人员为来自波兰及北约总部的经验丰富的规划人员。推演内容为对比两支蓝色部队的行动计划效果差异:一支是配备最新军事技术作战手段并在全作战域行动的创新型部队,另一支是在基础作战域行动且以动能行动为主的传统型部队。推演准备了第二阶段,假定红蓝双方在5-7年后使用未来部队,各有EDT和常规两种选项。红色方攻击想定与第一阶段类似但其力量升级,蓝色方则以其未来部队进行响应。参演人员行动(即行动计划)的效果通过我们自主研发的软件应用进行模拟和计算,以冲突参数形式呈现——包括双方伤亡(按不同兵种细分)、部队推进速度、突入防御纵深、行动持续时间以及行动结束的不同效果(达成目标、部队停滞、超过可承受损失等)。所获结果证实了该方法在研究EDT对多域环境下作战进程影响的有效性,并使专业军事人员(战略与作战规划人员)能够参与一项新颖的“盟军司令部转型”(ACT)实验,了解现代作战规划方法及研究这些作战效果。本文阐述了一些理论要点、一套仿真与分析工具集,并对实验结果进行了概要描述。

美国总统特朗普于周四晚间公布了其国家安全战略,重点在于加强美国在西半球的军事存在、平衡全球贸易、加强边境安全以及赢得对欧洲的文化战争。这份全面的战略文件通常在新政府上任第一年内发布,旨在阐明总统的外交政策重点,并为可能的资金投向提供指引。这份33页的文件建立在特朗普“美国优先”意识形态的基础之上,同时也首次明确提及总统意图效仿门罗主义,呼吁美国在西半球占据主导地位。“在经历多年忽视后,美国将重新主张并执行门罗主义,以恢复美国在西半球的优势地位,并保护国土及在整个地区关键地域的通行权,”文件中写道。该文件并未明确表明美国将从全球撤退,但它确实呼吁盟友间增加责任分担,提升美国的经济利益和关键供应链的准入,并“释放”美国能源生产能力。

引言——何为美国战略?

为确保美国在今后数十年内始终保持世界最强、最富、最有力和最成功的国家地位,需要一个关于如何与世界互动的连贯、聚焦的战略。而要确立正确的战略,全体美国人民都需要确切了解试图达成什么目标以及为何如此。所谓“战略”,是一个具体、现实的计划,旨在阐明目标与手段之间的本质联系:它始于对期望达成的目标以及现有(或能够切实创造的)可用工具的准确评估。

一项战略必须进行评估、筛选和优先排序。并非每个国家、地区、议题或事业——无论其价值如何——都能成为美国战略的焦点。外交政策的目的是保护核心国家利益;这也是本战略的唯一焦点。冷战结束以来的美国战略皆有所不足——它们要么是愿望或理想最终状态的清单罗列;要么未能清晰界定究竟想要什么,而是陈述些模糊的陈词滥调;还常常误判了真正应该追求的目标。

2. 美国面前的问题

美国面前的问题是:

-

美国应该追求什么?

-

可用的手段是什么?

-

如何将目标与手段结合成一个可行的国家安全战略?

特朗普在加勒比地区的军事行动可能升级

特朗普在加勒比海针对涉嫌毒品走私船只的、为期两个多月的军事行动,可能会获得更多支持,因为该国家安全战略呼吁美国将全球军事存在重新调整至美洲,“并远离那些对美国国家安全相对重要性在近几十年或近些年已下降的地区”。特朗普将美国在加勒比地区的军事行动定性为与毒品卡特尔的“武装冲突”,并特别指出被美国以贩毒罪名起诉的委内瑞拉强人尼古拉斯·马杜罗为主要威胁,称美国可能很快会发动“地面行动”。

尽管未明确提及委内瑞拉,但该国家安全战略呼吁“进行有针对性的部署”以确保美国边境安全并“击败贩毒集团”,“在具有重要战略意义的地点建立或扩大准入”。该战略还聚焦特朗普利用关税主导该地区的做法。但他是否拥有此项权力,正是最高法院一桩待决案件的核心。“美国将优先考虑商业外交,以加强自身的经济和产业,将关税和对等贸易协定作为有力工具,”文件中写道。

尽管文件未明确点名大国在拉丁美洲的渗透,但该国家安全战略表示,美国应利用其在金融和技术领域的杠杆作用,使地区国家远离对手,并强调依赖这些国家在“间谍活动、网络安全、债务陷阱等方面”的威胁。

特朗普批评欧洲未能妥善处理对俄关系

该国家安全战略批评欧洲在与俄罗斯关系恶化问题上“缺乏自信”——但并未提及俄罗斯总统弗拉基米尔·普京在2014年和2022年决定对乌克兰行动,或其在该大陆的破坏活动、干预选举和煽动不稳定行为。该国家安全战略称,美国是唯一能够在欧洲和俄罗斯之间进行调解的力量,以“在欧亚大陆重建战略稳定条件,并降低俄罗斯与欧洲国家之间发生冲突的风险”。

该文件进一步宣称,美国需要“促进欧洲的伟大”,呼应了副总统万斯今年二月在德国发表的演讲。“华盛顿不再假装不会干预欧洲内部事务了,”欧洲对外关系委员会高级政策研究员帕维尔·泽卡在一篇分析文章中写道。“它现在将这种干预定性为一种善举(‘我们希望欧洲保持欧洲特性’)和美国战略必需的事项。重点是什么?‘在欧洲国家内部培育对欧洲当前发展轨迹的抵制’。”

此项工作的触发点源于开源信息中关于陆军于2025年10月9日就战术战场空间(TBS)内的反无人机系统(C-UAS)网格发布信息请求(RFI)的消息。本文试图解释与上述C-UAS网格相关的各种细节。

新兴的战术战场空间(TBS)概念

工作首先阐述了新兴的TBS概念。为此,参考了陆军领域一个非常常见的术语——战术作战区域(TBA)。该术语指的是战斗中发生敌对双方战术级交战的纵深区域。TBA是机械化部队之间机动与反机动、坦克对战以及地面部队为塑造前线战局所采取的战术行动的见证。

除了地面部队,TBA内还充斥著坦克、机械化步兵、炮兵系统、防空炮与导弹系统、战场监视与目标捕获网格、电子战(EW)与网络战资源、工兵、通信资源、网络管理单元等等。传统上且多年来,对TBA的认知一直是二维的,即存在于已接战的纵深地带的长宽范围内的区域。这一认知正逐渐显得不够充分和完整,因为它关乎陆军。更相关的概念是TBS,它包含了TBA及其正上方的空域。

战术作战区域(TBA)中的无人机作战

以往对TBA的二维认知之所以不完整,其原因在于TBA可视域内无人机与反无人机作战的出现,以及攻击直升机作为陆军在第三维度的延伸组成部分的融合。这一点将进一步阐述。有记录的首例蜂群无人机攻击发生于2018年1月5日,当时13架DIY无人机袭击了俄罗斯在叙利亚西部的两个资产,即赫迈米姆空军基地和塔尔图斯海军基地。自此以后,小型无人机作为在TBA可视域内执行空中威胁的有效空中威胁平台的出现便一发不可收拾。小型无人机改变了TBA内可视域空战的性质,这主要是因为这些空中威胁平台能够很大程度上避开传统防空雷达传感器的探测。这一点将进一步解释:大多数小型无人机的雷达截面积(RCS)很小。从根本上说,RCS(以平方米表示)是目标对于典型雷达系统可见度的度量。RCS越小,雷达探测到无人机的难度就越大。当前的小型无人机机群的RCS大约在0.01-0.4平方米之间(单旋翼 – 0.01-0.03平方米,四旋翼无人机 0.01-0.10平方米,六旋翼无人机 -0.04-0.32平方米)。将这些数值与典型攻击机的RCS值进行比较:F-16 – 5平方米,F-18 – 1平方米,SU-35 – 1-3平方米,F-35隐形战机 – .0015平方米)。影响是什么?与主战防空武器系统相关的传统传感器无法探测到小型无人机,因此无法引导雷达控制的枪炮和导弹对它们进行射击。需要什么来探测这些目标呢?需要大量全新的基于光电(EO)/射频(RF)/红外(IR)/声学技术的传感器,或能够探测无人机的特定雷达,即无人机探测雷达(DDR)。

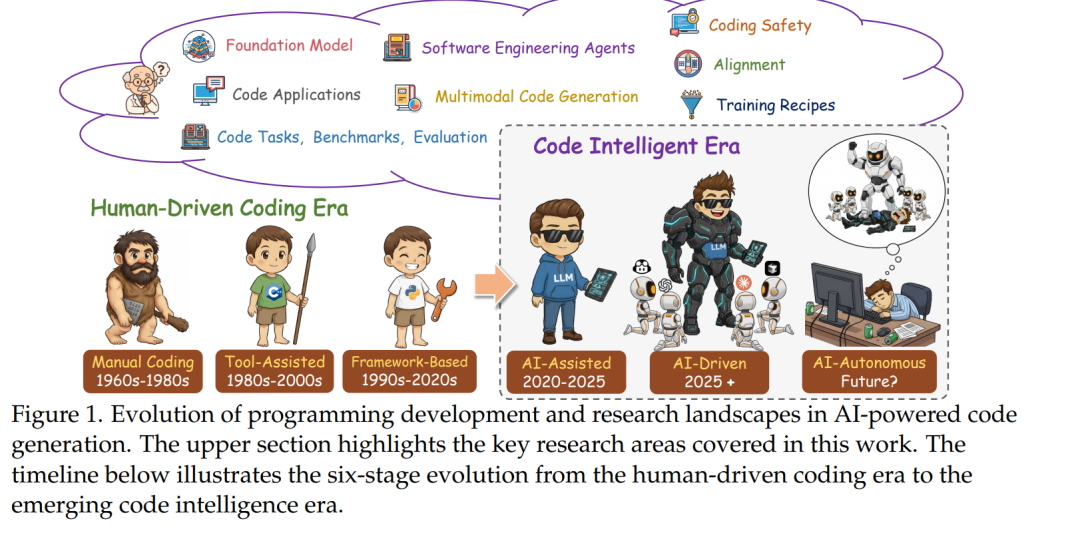

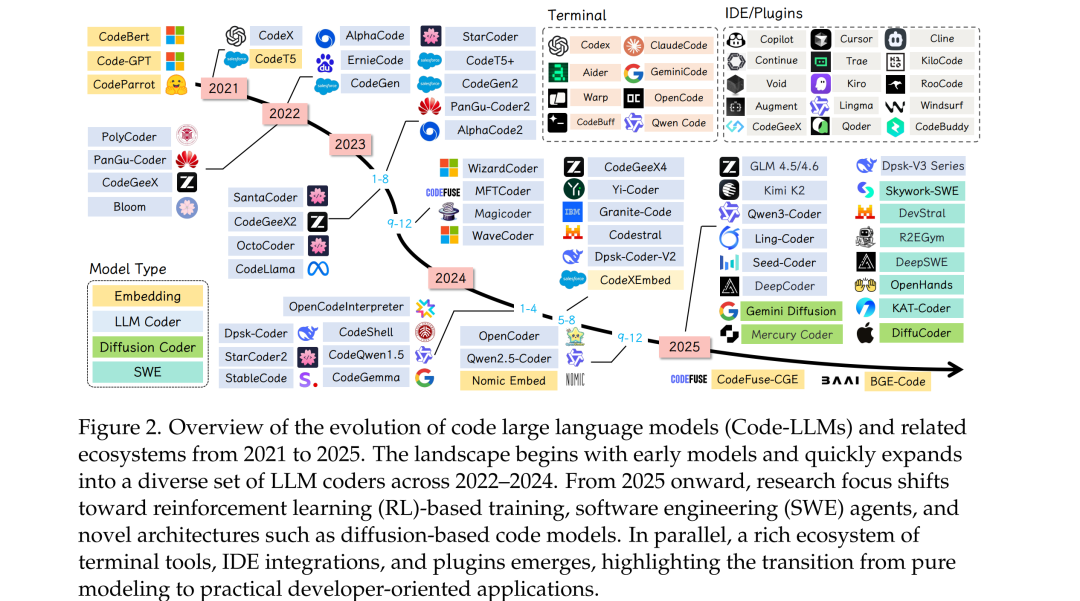

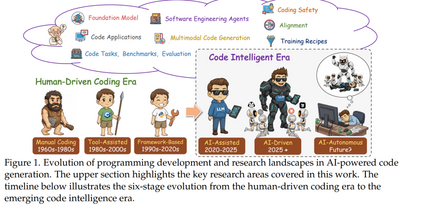

大型语言模型(Large Language Models,LLMs)通过实现从自然语言描述到可执行代码的直接转换,从根本上重塑了自动化软件开发范式,并推动了包括 GitHub Copilot(Microsoft)、Cursor(Anysphere)、Trae(字节跳动)以及 Claude Code(Anthropic)等工具的商业化落地。尽管该领域已从早期的基于规则的方法演进至以 Transformer 为核心的架构,但其性能提升依然十分显著:在 HumanEval 等基准测试上的成功率已从个位数提升至 95% 以上。 在本文中,我们围绕代码大模型(code LLMs)提供了一份全面的综合综述与实践指南,并通过一系列分析性与探测性实验,系统性地考察了模型从数据构建到后训练阶段的完整生命周期。具体而言,我们涵盖了数据整理、高级提示范式、代码预训练、监督微调、强化学习,以及自主编码智能体等关键环节。 我们系统分析了通用大语言模型(如 GPT-4、Claude、LLaMA)与代码专用大语言模型(如 StarCoder、Code LLaMA、DeepSeek-Coder 和 QwenCoder)的代码能力,并对其所采用的技术手段、设计决策及相应权衡进行了深入评估。 进一步地,本文明确指出了学术研究与实际部署之间的研究—实践鸿沟:一方面,学术研究通常聚焦于基准测试与标准化任务;另一方面,真实世界的软件开发场景则更加关注代码正确性、安全性、大规模代码库的上下文感知能力,以及与现有开发流程的深度集成。基于此,我们系统梳理了具有应用潜力的研究方向,并将其映射到实际工程需求之中。 最后,我们通过一系列实验,对代码预训练、监督微调与强化学习进行了全面分析,涵盖尺度定律(scaling law)、训练框架选择、超参数敏感性、模型结构设计以及数据集对比等多个关键维度。

1 引言

大型语言模型(Large Language Models,LLMs)[66, 67, 192, 424, 435, 750, 753, 755, 756] 的出现引发了自动化软件开发领域的范式转变,从根本上重塑了人类意图与可执行代码之间的关系 [1306]。现代 LLM 在多种代码相关任务中展现出卓越能力,包括代码补全 [98]、代码翻译 [1158]、代码修复 [619, 970] 以及代码生成 [139, 161]。这些模型有效地将多年积累的编程经验提炼为可遵循指令的通用工具,使不同技能水平的开发者都能够基于 GitHub、Stack Overflow 及其他代码相关网站中的数据进行使用和部署。 在众多 LLM 相关任务中,代码生成无疑是最具变革性的方向之一。它使自然语言描述能够直接转化为可运行的源代码,从而消解了领域知识与技术实现之间的传统壁垒。这一能力已不再局限于学术研究,而是通过一系列商业化与开源工具成为现实,包括:(1) GitHub Copilot(Microsoft)[321],在集成开发环境中提供智能代码补全;(2) Cursor(Anysphere)[68],一款支持对话式编程的 AI 原生代码编辑器;(3) CodeGeeX(智谱 AI)[24],支持多语言代码生成;(4) CodeWhisperer(Amazon)[50],与 AWS 服务深度集成;以及 (5) Claude Code(Anthropic)[194] / Gemini CLI(Google)[335],二者均为命令行工具,允许开发者直接在终端中将编码任务委托给 Claude 或 Gemini [67, 955],以支持智能体化(agentic)的编码工作流。这些应用正在重塑软件开发流程,挑战关于编程生产力的传统认知,并重新界定人类创造力与机器辅助之间的边界。 如图 1 所示,代码生成技术的演进轨迹揭示了一条清晰的技术成熟与范式变迁路径。早期方法受限于启发式规则和基于概率文法的框架 [42, 203, 451],在本质上较为脆弱,仅适用于狭窄领域,且难以泛化到多样化的编程场景。Transformer 架构的出现 [291, 361] 并非简单的性能提升,而是对问题空间的根本性重构:通过注意力机制 [997] 与大规模训练,这类模型能够捕获自然语言意图与代码结构之间的复杂关联。更为引人注目的是,这些模型展现出了涌现式的指令遵循能力,这一能力并非显式编程或直接优化的目标,而更像是规模化学习丰富表征的自然结果。这种通过自然语言使非专业用户也能生成复杂程序的“编程民主化”趋势 [138, 864],对 21 世纪的劳动力结构、创新速度以及计算素养的本质产生了深远影响 [223, 904]。 当前代码 LLM 的研究与应用格局呈现出一种通用模型与专用模型并行发展的双轨结构,二者各具优势与权衡。通用大模型,如 GPT 系列 [747, 750, 753]、Claude 系列 [66, 67, 192] 以及 LLaMA 系列 [690, 691, 979, 980],依托包含自然语言与代码在内的超大规模语料,具备对上下文、意图及领域知识的深度理解能力。相较之下,代码专用大模型(如 StarCoder [563]、Code LLaMA [859]、DeepSeek-Coder [232]、CodeGemma [1295] 和 QwenCoder [435, 825])则通过在编程导向数据上的聚焦式预训练与任务特定的架构优化,在代码基准测试中取得了更优性能。诸如 HumanEval [161] 等标准化基准上从个位数到 95% 以上成功率的跃迁,既体现了算法层面的创新,也反映了对代码与自然语言在组合语义与上下文依赖方面共性的更深刻理解。 尽管相关研究与商业应用发展迅速,现有文献中仍然存在创新广度与系统性分析深度之间的显著鸿沟。现有综述多采取全景式视角,对代码相关任务进行宽泛分类,或聚焦于早期模型阶段,未能对最新进展形成系统整合。尤其值得注意的是,最先进系统所采用的数据构建与筛选策略仍缺乏深入探讨——这些策略在数据规模与数据质量之间进行权衡,并通过指令微调方法使模型行为与开发者意图对齐。相关对齐技术包括引入人类反馈以优化输出、高级提示范式(如思维链推理与小样本学习)、具备多步问题分解能力的自主编码智能体、基于检索增强生成(Retrieval-Augmented Generation, RAG)的事实约束方法,以及超越简单二值正确性、从代码质量、效率与可维护性角度进行评估的新型评价框架。 如图 2 所示,Kimi-K2 [957]、GLM-4.5/4.6 [25, 1248]、Qwen3Coder [825]、Kimi-Dev [1204]、Claude [67]、DeepSeek-V3.2-Exp [234] 以及 GPT-5 [753] 等最新模型集中体现了上述创新方向,但其研究成果分散于不同文献之中,尚缺乏统一、系统的整合。表 1 对多篇与代码智能或 LLM 相关的综述工作进行了比较,从八个维度进行评估:研究领域、是否聚焦代码、是否使用 LLM、是否涉及预训练、监督微调(SFT)、强化学习(RL)、代码 LLM 的训练配方,以及应用场景。这些综述覆盖了通用代码生成、生成式 AI 驱动的软件工程、代码摘要以及基于 LLM 的智能体等多个方向。尽管多数工作关注代码与应用层面,但在技术细节覆盖上差异显著,尤其是强化学习方法在现有综述中鲜有系统讨论。 为此,本文对面向代码智能的大语言模型研究进行了全面而前沿的系统综述,对模型的完整生命周期展开分析,涵盖从初始数据构建、指令调优到高级代码应用与自主编码智能体开发等关键阶段。 为了从代码基础模型出发,系统性地覆盖智能体与应用层面,本文进一步提供了一份贯通理论基础与工程实现的详细实践指南(见表 1)。本文的主要贡献包括: 1. 提出统一的代码 LLM 分类体系,系统梳理其从早期 Transformer 模型到具备涌现式推理能力的指令调优模型的发展脉络; 1. 系统分析从数据构建与预处理、预训练目标与架构创新,到监督指令微调与强化学习等高级微调方法的完整技术流水线; 1. 深入探讨定义当前最优性能的前沿范式,包括提示工程技术(如思维链推理 [1174])、检索增强生成方法,以及能够执行复杂多步问题求解的自主编码智能体; 1. 批判性评估现有基准与评测方法,讨论其优势与局限,并重点分析从功能正确性扩展到代码质量、可维护性与效率评估所面临的挑战; 1. 综合分析最新突破性模型(如 GPT-5、Claude 4.5 等),提炼新兴趋势与开放问题,为下一代代码生成系统的发展提供方向; 1. 通过大规模实验,从尺度定律、训练框架、超参数、模型架构与数据集等多个维度,对代码预训练、监督微调与强化学习进行系统性分析。

强化学习(Reinforcement Learning,RL)是一种通过与环境交互来学习最优序列决策的机器学习(Machine Learning,ML)技术。近年来,RL 在众多人工智能任务中取得了巨大成功,并被广泛认为是迈向通用人工智能(Artificial General Intelligence,AGI)的关键技术之一。在这一背景下,受约束强化学习(constrained RL)逐渐发展成为一个重要研究方向,旨在解决在多样化应用场景中、在约束条件下优化决策行为的挑战。本论文提出了一系列新的理论基础与实用算法,以推动受约束强化学习领域的发展。 论文首先研究了平均回报准则下的受约束马尔可夫决策过程(Constrained Markov Decision Processes,CMDPs),并提出了平均受约束策略优化(Average-Constrained Policy Optimization,ACPO)算法。ACPO 结合灵敏度理论(sensitivity theory)与信赖域优化(trust-region optimization)技术,不仅在复杂环境中相较于现有最先进方法展现出更优的经验性能,还提供了坚实的理论保证。随后,论文将受约束强化学习扩展至情节式(episodic)设置,提出了 e-COP 算法,这是首个专门针对有限时域 CMDP 的策略优化框架。e-COP 基于一种新的情节式策略差分引理(policy difference lemma),在保持算法简洁性与可扩展性的同时,具备稳健的理论保证。其在安全约束基准任务中的成功表现,凸显了其在更广泛应用中的潜力,例如基于人类反馈的强化学习(Reinforcement Learning from Human Feedback,RLHF)。 针对 AGI 背景下 RLHF 重要性的不断提升,亟需能够将人类偏好反馈有效融入 RL 算法的方法。鉴于人类反馈往往具有噪声性,论文提出了 warmPref-PS,一种后验采样(posterior sampling)算法,旨在在线性多臂老虎机环境中,将来自不同能力水平评审者的离线偏好数据整合进在线学习过程。该算法显著降低了遗憾(regret),并验证了通过建模评审者能力来实现自适应数据采集与模型微调在 RLHF 场景中的有效性。进一步地,论文深入探讨了基于偏好的强化学习(Preference-based RL,PbRL),该范式以二元轨迹比较而非显式奖励作为学习信号。通过利用离线数据集并建模评审者能力,论文提出了 PSPL 算法,该算法同时对奖励模型与转移动态进行后验采样。论文给出了 PSPL 的贝叶斯简单遗憾(simple regret)理论界,并通过实验结果验证了其在识别最优策略方面的正确性与鲁棒性。 最后,论文将上述优化方法与 RLHF 的理论成果落地到实际应用中,从受约束优化的视角研究大语言模型(Large Language Models,LLMs)的多目标对齐问题:在最大化主目标的同时,约束次级目标不低于可调阈值。由此提出了一种迭代算法 MOPO,该算法具有闭式更新形式,能够扩展至数十亿参数规模的 LLM,并且对超参数选择具有较强鲁棒性。 通过上述一系列贡献,本论文在多种范式下统一了受约束强化学习的研究框架,从策略优化到偏好对齐,不仅深化了对受约束决策问题的理论理解,也显著提升了其实践有效性。

普通容器用内核提供的namespace和cgroup做资源限制和隔离(从机器上圈了一部分资源给容器用),在安全性上存在不足: 容器内的进程在宿主机上可以看到 容器和宿主机共用内核,可以对宿主机进行破坏 FC安全容器安全加固策略(核心是限制代码破坏范围): 安全容器提供基于虚拟机级别的隔离 函数调度尽可能调度到同一台神龙服务器 加固安全策略:端口封禁、命令行封禁等 组件裁减:精简不必要驱动和内核接口,启动速度更快、资源占用更少 实例回收:销毁重建,避免残留/tmp目录、日志、环境变量、进程等

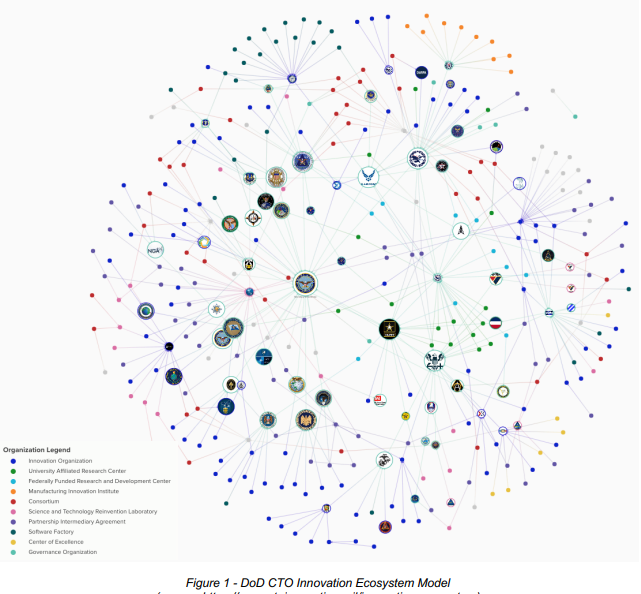

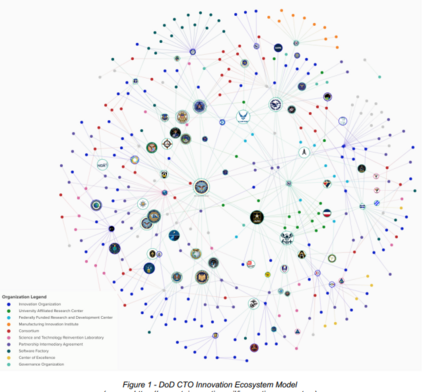

2022年《美国国防战略》强调,当今安全环境最大的战略挑战与快速变化的军事能力和新兴技术相关。正是通过创新,军队的技术优势才得以维持。国防创新指的是旨在开发和实施变革性技术、战略及组织实践的一系列广泛实验活动,以提升军队能力或降低军事行动成本。

美国防部(DoD)依赖一个由政府部门、私营企业、学术界和研究机构组成的庞大互联网络来完成这些活动。这一国防创新生态系统在过去十年中迅速发展,但如今构成该生态系统的许多组织是为满足特定需求而彼此独立建立的。这种增长导致了一个庞大生态系统的形成,但其组织方式未能以维持军事技术优势所需的速度最优地支持创新,尤其是在新技术快速商业化的背景下。

本研究通过对公开数据源进行全面审查,构建了国防创新生态系统的组织网络模型。随后,利用该模型,基于五种中心性指标(包括度中心性、加权度中心性、特征向量中心性、中介中心性和接近中心性)进行了组织网络分析。此分析随后被用于更新模型可视化。最后,对网络模型进行了模块化评估,以考察一种可能跨越现有组织界限的层级重组方案。本研究旨在更好地理解国防创新生态系统的现状,进而就国防部如何演进该生态系统以满足未来需求提供一个视角。

第1章:本章介绍论文主题,包括背景信息、研究范围描述、研究方法以及本文探讨的研究问题。本章阐述了本研究的重要性、及时性和相关性。

第2章:本章包括对相关文献的回顾,涵盖对国防战略中创新的历史分析、对国防部内创新活动演变的探讨,以及对构成国防创新生态系统的各种国防创新组织(DIO)的探究。

第3章:本章概述国防部创新组织层级结构,包括政府所有和政府资助的研发中心、创新组织、软件工厂、其他交易授权(OT)联盟模式以及支持性的治理与监督组织。此外,本章还描述了数据来源、组织模型开发过程、范围界定决策以及数据组织概述。本章使读者能透彻理解支持模型开发的数据,并开始初步的数据可视化探索。

第4章:本章概述组织网络分析方法并呈现其结果。该分析作为回答研究问题的基础,包括以下中心性指标:度中心性、加权度中心性、特征向量中心性、中介中心性和接近中心性。本章概述各项中心性指标,继而讨论各项分析的结果及汇总结果,以确定模型内的重要节点。最后,本章详述模块化评估的发现。

第5章:本章应用组织网络分析的结果来优化模型可视化。这些可视化包括调整模型显示,根据选定的中心性指标改变节点大小。此外,本章对模块化评估进行了可视化,以说明底层节点连接结构与现有组织层级之间的重叠情况。

第6章:本章总结为解答研究问题所进行的研究,讨论研究局限性,并对未来研究提出建议。

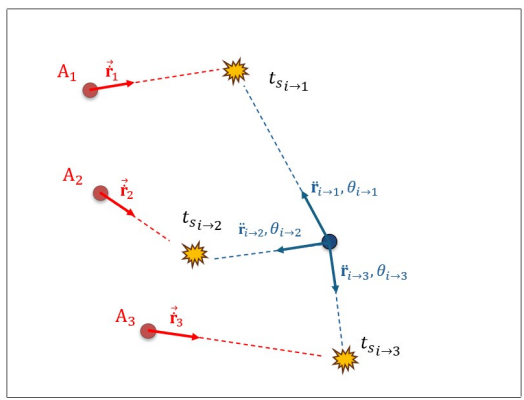

无人技术的采用推动了机器人集群系统的多学科研究,尤其在军事领域。受生物集群解决问题能力的启发,这些系统具有通过局部交互产生全局行为的优势,减少了对集中控制的依赖。在机器人集群中创造涌现行为的传统方法需要可预测和可控的集群,这些集群需具备明确的局部规则并对所有智能体有完全的了解。在反集群作战中,集群系统需要一个稳健且能适应动态环境的全局策略,并尽可能少地依赖完全信息。本研究探讨了一个逆问题:设计局部规则来近似通常基于每架无人机完全信息和通信的涌现行为。目标是创建去中心化的区域,其中防御方无人机利用一个经过大量仿真数据训练的神经网络模型。这些数据提取自涉及三名攻击者和一名防御者的交战场景,被组织成代表不同特征的各种输入集。训练后的回归分析确定了能产生相较于先知算法最优防御方航向角的特征集。结果表明,神经网络模型在优化较短交战时间方面比先知算法更有效,验证了用训练好的网络替代传统算法的可行性。

图. 潜在拦截特征示意图。呈现3名攻击方与1名防御方的交战场景。基于既定防御策略,防御方可从3种潜在拦截轨迹中择一执行。

前述先前工作的方法引出一个问题:能否通过纯粹去中心化且不要求集群内每个个体智能体相互知晓的局部规则,来实现日益复杂的全局行为?这个问题呈现了一个逆问题,即交战中期望的集群全局行为是预先已知的。在输入特定的敌方全局结构(例如,一群按照特定战术负责摧毁或压制防御方高价值单元(HVU)的攻击无人机集群)的情况下,需要确定一组局部规则来近似期望的防御方全局策略以对抗敌方影响。使用上述方法解决逆问题可能很困难,并暗示需要某种形式的集中任务分配。

机器学习方法,如深度学习和强化学习,可能更适合此类任务。强化学习涉及使用人工神经网络,其中一个智能体基于如何最大化长期奖励来采取行动以寻求最优解。Huttenrauch等人提出了强化学习方法,其中智能体的决策仅基于局部信息,而Q函数则拥有全局环境信息。该方法的另一个例子提出使用集中式规则来训练将管理集群中智能体行为的分布式策略。李等人通过将预定的集中式规则建模为可微分深度神经网络来实现这一点。集群中的每个智能体都有一套相同但分布式的规则集,类似于文献中提出的隐式协调方法。

这些方法确实解决了集群中的去中心化行为问题,但它们没有解决上述的逆问题。

本论文试图通过使用监督式深度神经网络来近似最优解,以解决这个逆问题。目标是创建局部的去中心化集群,其中一架防御方无人机使用一个神经网络模型,该模型经过针对n架攻击无人机的基于智能体的仿真数据的大量训练。将评估防御方在使用神经网络算法(与初始瞄准算法相对,本论文中称为先知算法)时的有效性。该神经网络模型将使用现有文献中开发的先知算法从仿真中提取的数据进行训练。通过使用一个见识过各种全局攻击方策略的神经网络模型,本论文旨在创建一个不严重依赖与其他智能体通信、并依靠神经网络模型来确定最优策略的去中心化瞄准模型。