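[Tracking] 15 Papers + Code | Happy Mid-Autumn Festival~

[Overview] Skipping an update for the Mid-Autumn Festival? Not a chance. We have compiled 15 tracking papers whose code implementations each have more than 100 stars on GitHub, together with links to the papers themselves. We hope you find them useful. Happy Mid-Autumn Festival~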

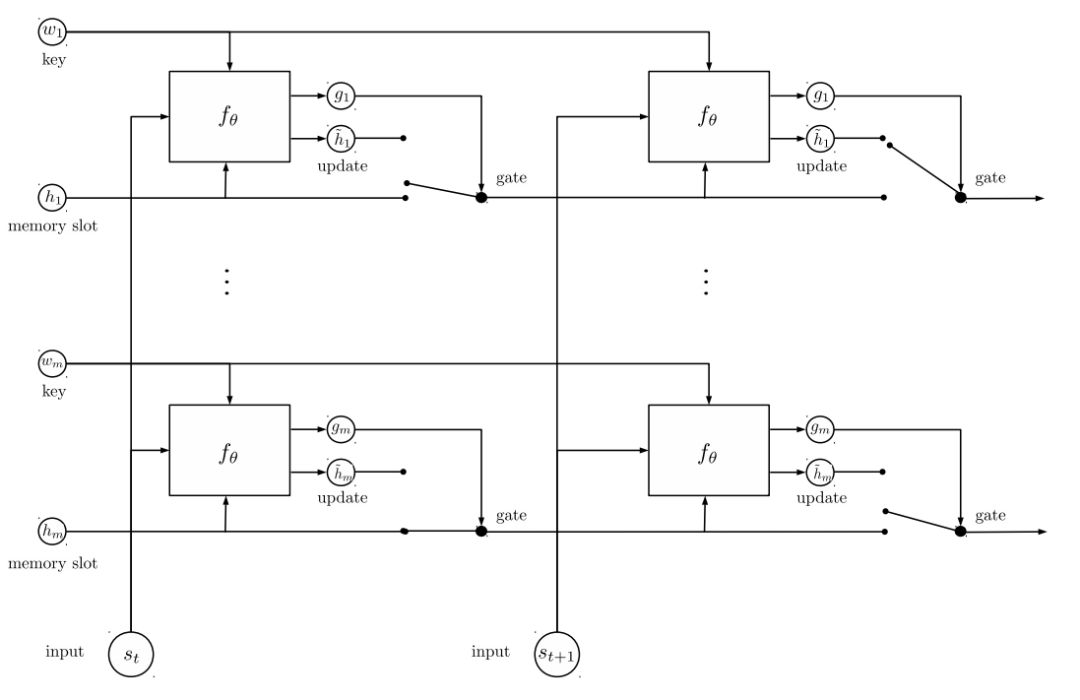

Tracking the World State with Recurrent Entity Networks

The Recurrent Entity Network (EntNet) is equipped with a dynamic long-term memory which allows it to maintain and update a representation of the state of the world as it receives new data. The EntNet sets a new state-of-the-art on the bAbI tasks, and is the first method to solve all the tasks in the 10k training examples setting.

Code: https://github.com/facebook/MemNN

Paper: https://arxiv.org/abs/1612.03969v3

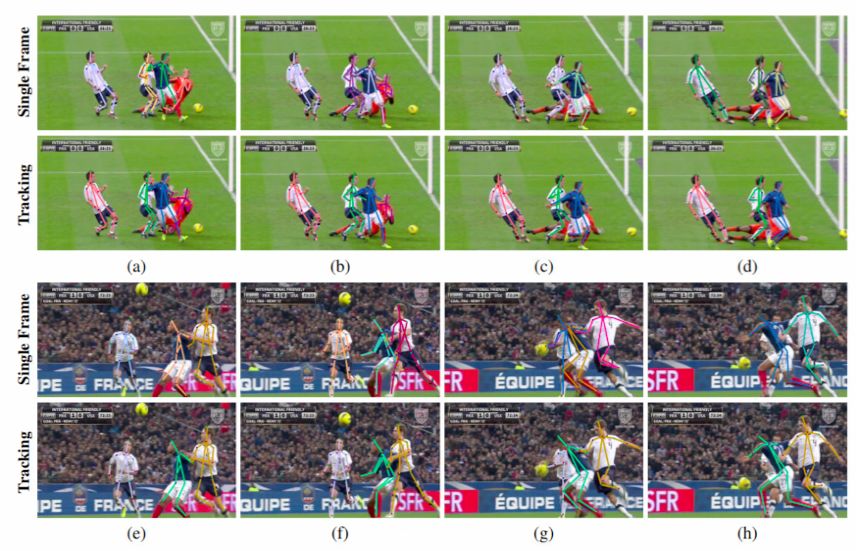

ArtTrack: Articulated Multi-person Tracking in the Wild

In this paper we propose an approach for articulated tracking of multiple people in unconstrained videos. Our starting point is a model that resembles existing architectures for single-frame pose estimation but is substantially faster.

Code: https://github.com/eldar/pose-tensorflow

Paper: https://arxiv.org/abs/1612.01465v3

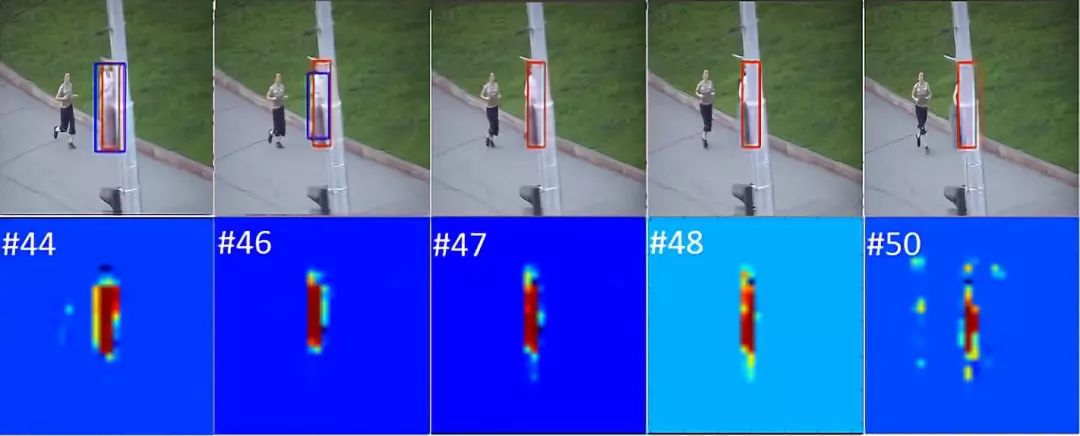

Spatially Supervised Recurrent Convolutional Neural Networks for Visual Object Tracking

In this paper, we develop a new approach of spatially supervised recurrent convolutional neural networks for visual object tracking. Our recurrent convolutional network exploits the history of locations as well as the distinctive visual features learned by the deep neural networks.

Code: https://github.com/Guanghan/ROLO

Paper: https://arxiv.org/abs/1607.05781v1

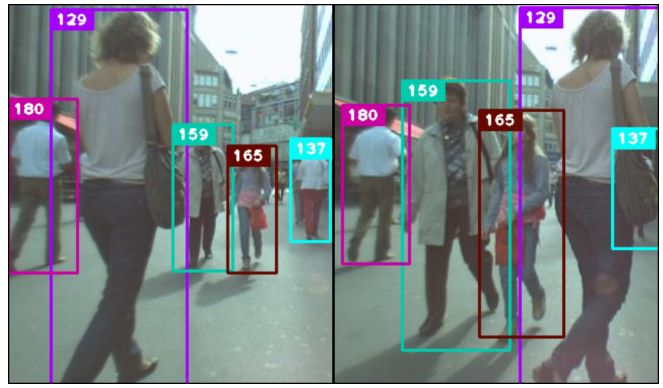

Simple Online and Realtime Tracking with a Deep Association Metric

Simple Online and Realtime Tracking (SORT) is a pragmatic approach to multiple object tracking with a focus on simple, effective algorithms. In this paper, we integrate appearance information to improve the performance of SORT.

Code: https://github.com/nwojke/deep_sort

Paper: https://arxiv.org/abs/1703.07402v1
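One common way to turn appearance information into an association cost is the cosine distance between feature vectors. The sketch below assumes each track and each detection already has an appearance embedding (the vectors here are made up), and the helper names `cosine_distance` and `appearance_cost_matrix` are hypothetical; it is not taken from the deep_sort repository.

```python
import math


def cosine_distance(a, b):
    """Return 1 - cosine similarity of two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        # Assumption: a zero vector carries no appearance information,
        # so treat it as dissimilar to everything.
        return 1.0
    return 1.0 - dot / (norm_a * norm_b)


def appearance_cost_matrix(track_features, detection_features):
    """Build a cost matrix: rows are tracks, columns are detections."""
    return [
        [cosine_distance(t, d) for d in detection_features]
        for t in track_features
    ]


if __name__ == "__main__":
    tracks = [[1.0, 0.0], [0.0, 1.0]]          # made-up embeddings
    detections = [[0.0, 2.0], [3.0, 0.0]]      # made-up embeddings
    for row in appearance_cost_matrix(tracks, detections):
        print(row)
```

A low cost means the detection looks like the track; such a matrix could then be combined with a motion-based cost before assignment.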

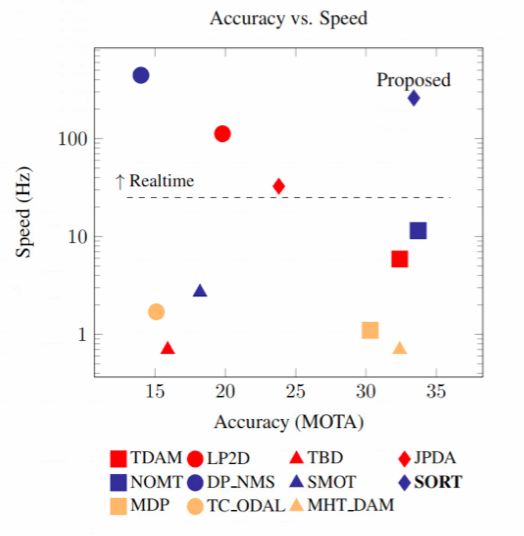

Simple Online and Realtime Tracking

This paper explores a pragmatic approach to multiple object tracking where the main focus is to associate objects efficiently for online and realtime applications. To this end, detection quality is identified as a key factor influencing tracking performance, where changing the detector can improve tracking by up to 18.9%.

Code: https://github.com/abewley/sort

Paper: https://arxiv.org/abs/1602.00763v2

Provable Dynamic Robust PCA or Robust Subspace Tracking

Dynamic robust PCA refers to the dynamic (time-varying) extension of robust PCA (RPCA). It assumes that the true (uncorrupted) data lies in a low-dimensional subspace that can change with time, albeit slowly.

Code: https://github.com/andrewssobral/lrslibrary

Paper: https://arxiv.org/abs/1705.08948v4

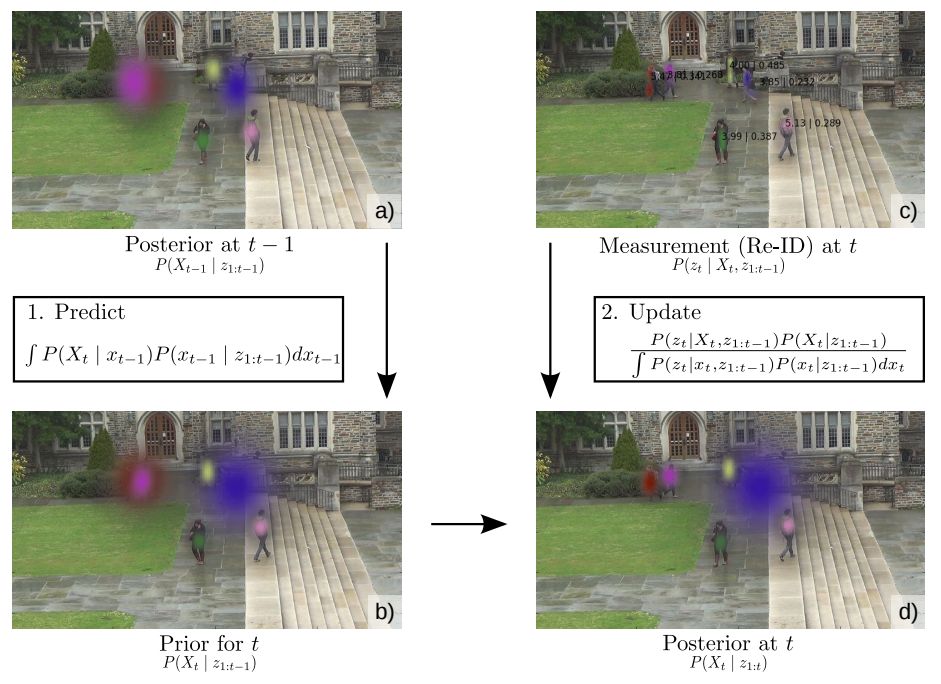

Towards a Principled Integration of Multi-Camera Re-Identification and Tracking through Optimal Bayes Filters

With the rise of end-to-end learning through deep learning, person detectors and re-identification (ReID) models have recently become very strong. Multi-camera multi-target (MCMT) tracking has not fully gone through this transformation yet.

Code: https://github.com/VisualComputingInstitute/triplet-reid

Paper: https://arxiv.org/abs/1705.04608v2

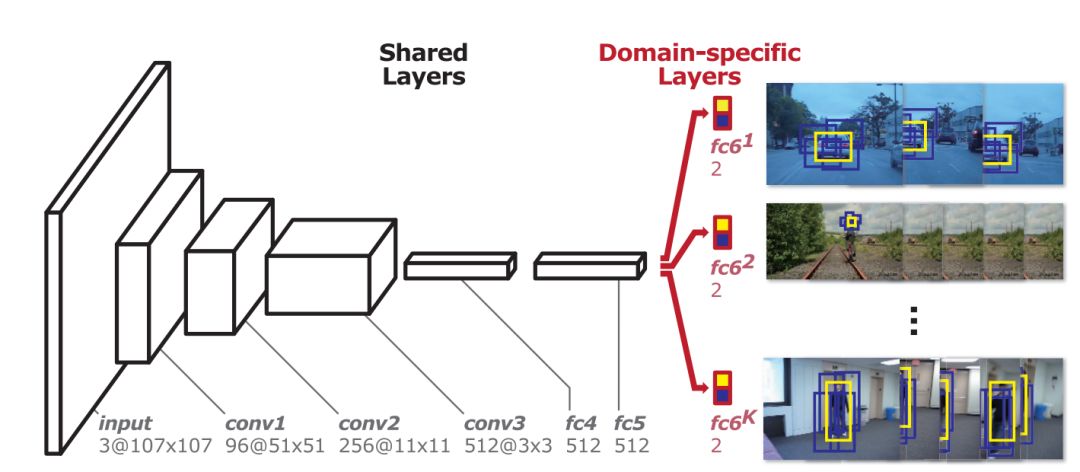

Learning Multi-Domain Convolutional Neural Networks for Visual Tracking

Our algorithm pretrains a CNN using a large set of videos with tracking ground-truths to obtain a generic target representation. Online tracking is performed by evaluating the candidate windows randomly sampled around the previous target state.

Code: https://github.com/HyeonseobNam/MDNet

Paper: https://arxiv.org/abs/1510.07945v2
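The online step described in the abstract, scoring candidate windows sampled around the previous target state, can be sketched roughly as follows. This is only an illustration: the Gaussian sampling parameters, the `(cx, cy, w, h)` box format, and the function names are assumptions, and `score_fn` stands in for the CNN classifier rather than reproducing it.

```python
import math
import random


def sample_candidates(prev_box, n, pos_sigma=0.1, scale_sigma=0.05, rng=None):
    """Draw n candidate boxes (cx, cy, w, h) around the previous box.

    Position noise is proportional to the box size; scale noise is
    log-normal so widths and heights stay positive. Both choices are
    assumptions made for this sketch.
    """
    if rng is None:
        rng = random.Random()
    cx, cy, w, h = prev_box
    candidates = []
    for _ in range(n):
        new_cx = cx + rng.gauss(0.0, pos_sigma * w)
        new_cy = cy + rng.gauss(0.0, pos_sigma * h)
        scale = math.exp(rng.gauss(0.0, scale_sigma))
        candidates.append((new_cx, new_cy, w * scale, h * scale))
    return candidates


def track_step(prev_box, score_fn, n=256, rng=None):
    """Return the sampled candidate with the highest score."""
    candidates = sample_candidates(prev_box, n, rng=rng)
    return max(candidates, key=score_fn)


if __name__ == "__main__":
    # Toy scorer: prefers boxes whose centre is near (55, 50).
    def toy_score(box):
        return -((box[0] - 55.0) ** 2 + (box[1] - 50.0) ** 2)

    print(track_step((50.0, 50.0, 20.0, 10.0), toy_score, rng=random.Random(0)))
```

In a real tracker the scorer would be the learned network, and the selected box would become the previous state for the next frame.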

ECO: Efficient Convolution Operators for Tracking

In recent years, Discriminative Correlation Filter (DCF) based methods have significantly advanced the state-of-the-art in tracking. The fast variant of ECO, using hand-crafted features, operates at 60 Hz on a single CPU, while obtaining 65.0% AUC on OTB-2015.

Code: https://github.com/martin-danelljan/ECO

Paper: https://arxiv.org/abs/1611.09224v2

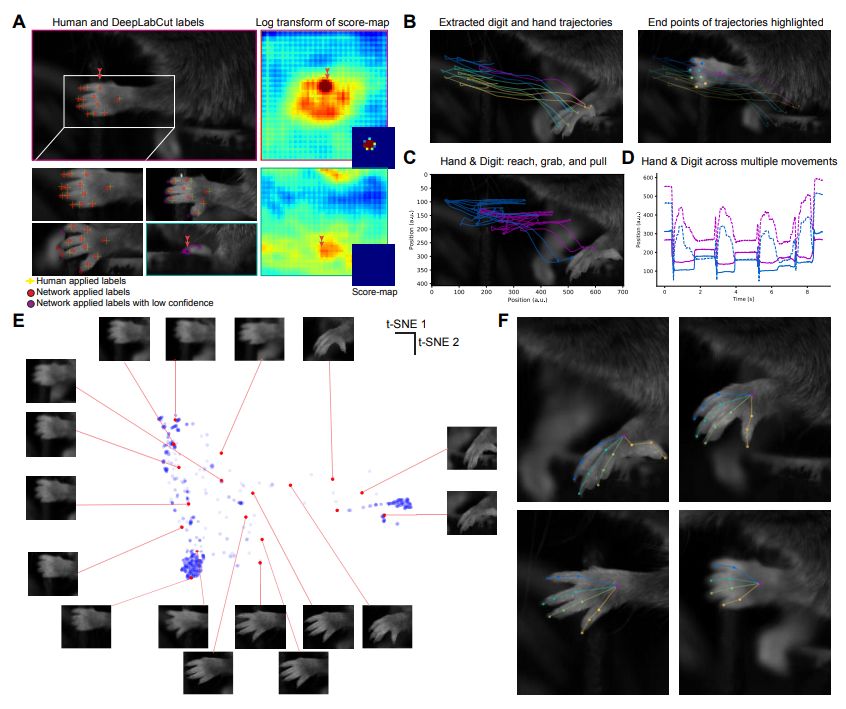

Markerless tracking of user-defined features with deep learning

Quantifying behavior is crucial for many applications in neuroscience. Videography provides easy methods for the observation and recording of animal behavior in diverse settings, yet extracting particular aspects of a behavior for further analysis can be highly time consuming.

Code: https://github.com/AlexEMG/DeepLabCut

Paper: https://arxiv.org/abs/1804.03142v1

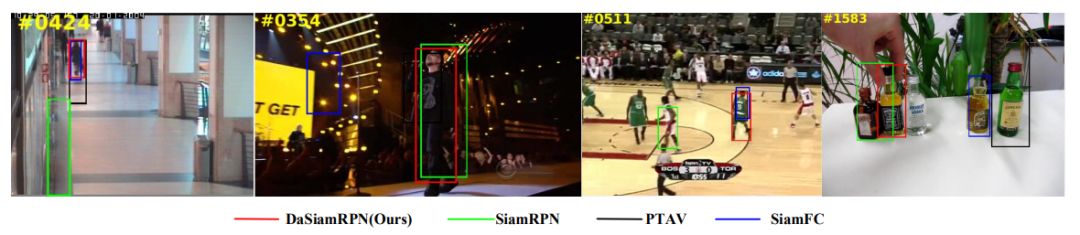

Distractor-aware Siamese Networks for Visual Object Tracking

In this paper, we focus on learning distractor-aware Siamese networks for accurate and long-term tracking. During the off-line training phase, an effective sampling strategy is introduced to control the distribution of the training data and make the model focus on the semantic distractors.

Code: https://github.com/foolwood/DaSiamRPN

Paper: https://arxiv.org/abs/1808.06048v1

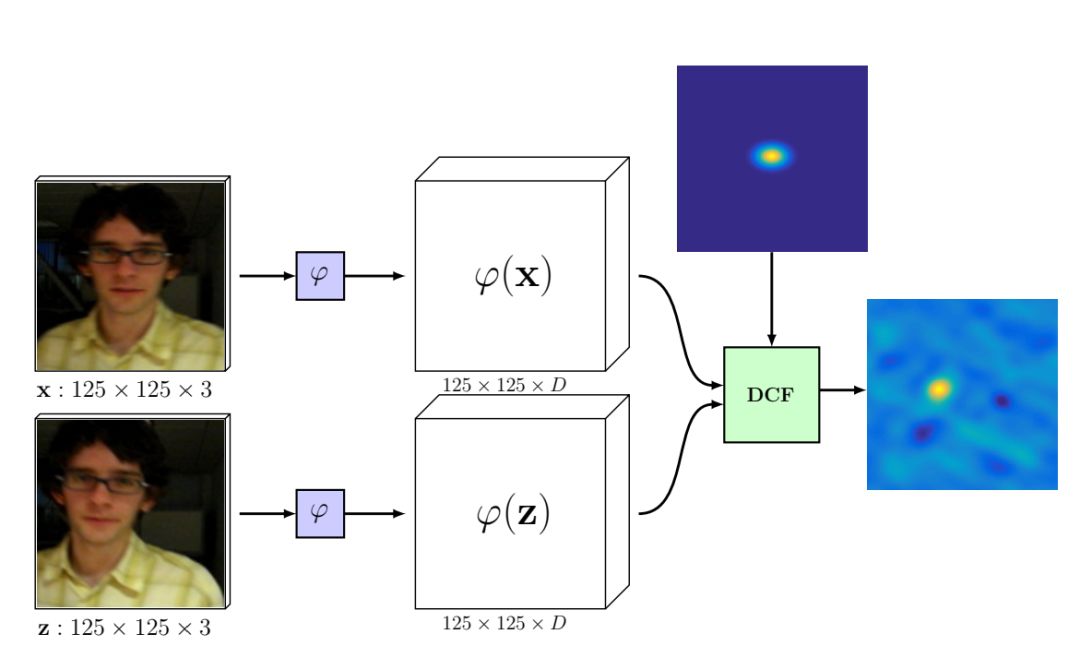

DCFNet: Discriminant Correlation Filters Network for Visual Tracking

Discriminant Correlation Filters (DCF) based methods have become a dominant approach to online object tracking. In this work, we present an end-to-end lightweight network architecture, namely DCFNet, to learn the convolutional features and perform the correlation tracking process simultaneously.

Code: https://github.com/foolwood/DCFNet

Paper: https://arxiv.org/abs/1704.04057v1

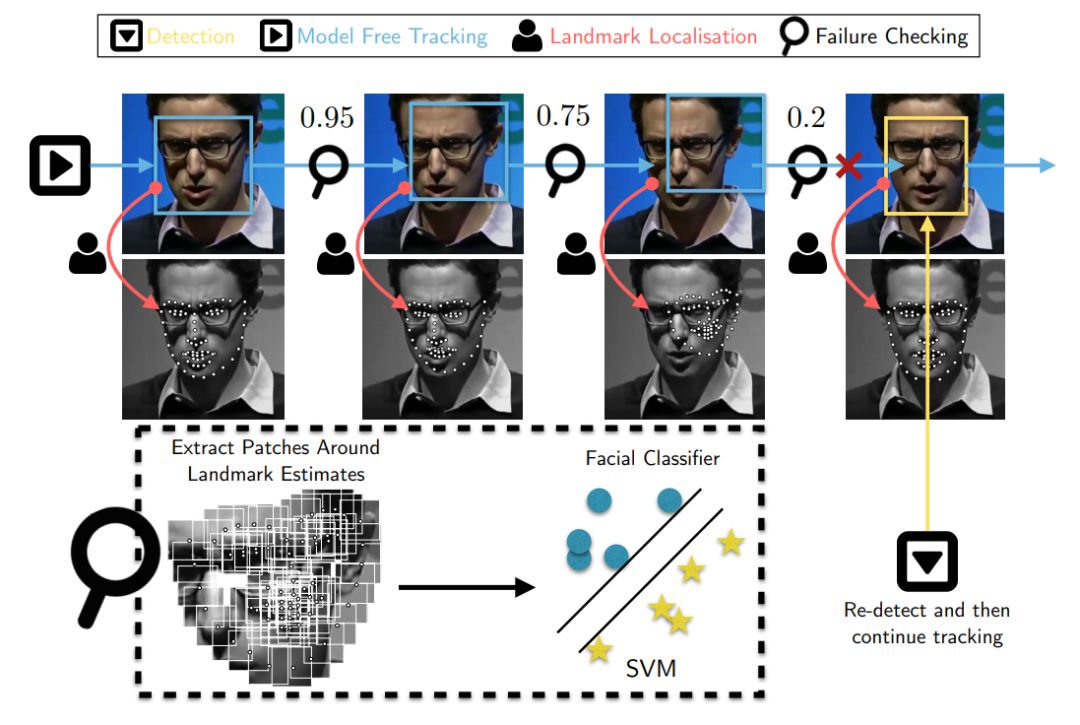

A Comprehensive Performance Evaluation of Deformable Face Tracking "In-the-Wild"

Recently, technologies such as face detection, facial landmark localisation and face recognition and verification have matured enough to provide effective and efficient solutions for imagery captured under arbitrary conditions (referred to as "in-the-wild"). Until now, the performance has mainly been assessed qualitatively by visually assessing the result of a deformable face tracking technology on short videos.

Code: https://github.com/zhusz/CVPR15-CFSS

Paper: https://arxiv.org/abs/1603.06015v2

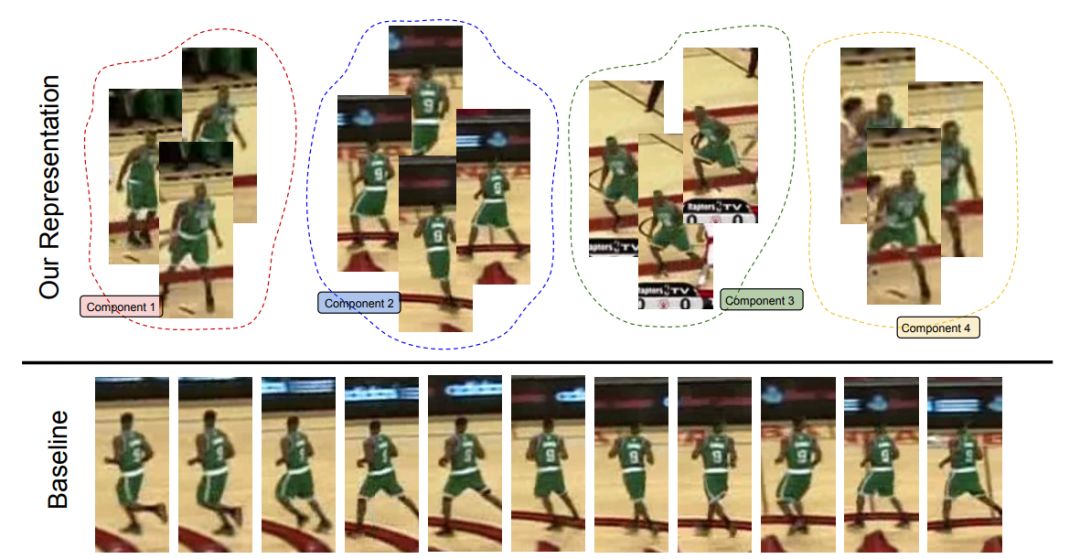

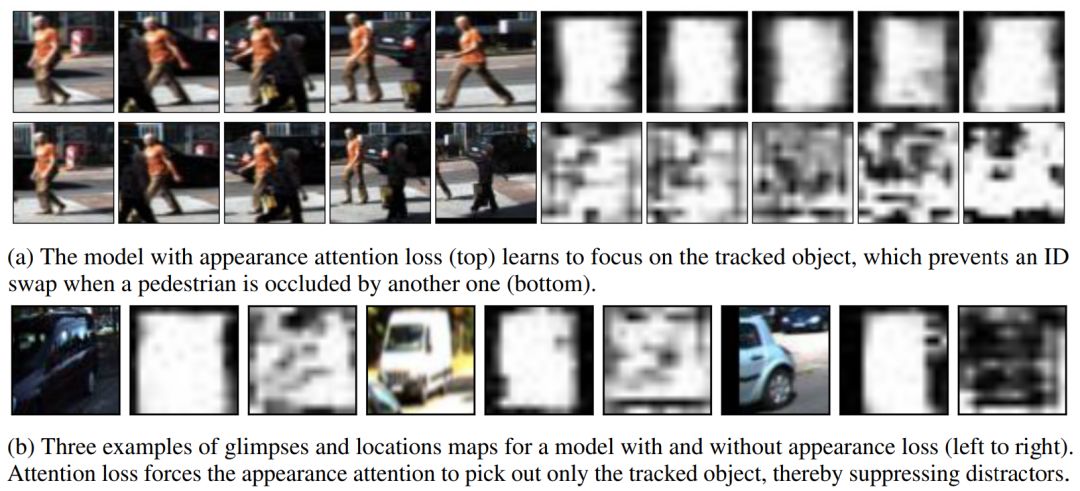

Hierarchical Attentive Recurrent Tracking

Class-agnostic object tracking is particularly difficult in cluttered environments as target specific discriminative models cannot be learned a priori. Inspired by how the human visual cortex employs spatial attention and separate "where" and "what" processing pathways to actively suppress irrelevant visual features, this work develops a hierarchical attentive recurrent model for single object tracking in videos.

Code: https://github.com/akosiorek/hart

Paper: https://arxiv.org/abs/1706.09262v2

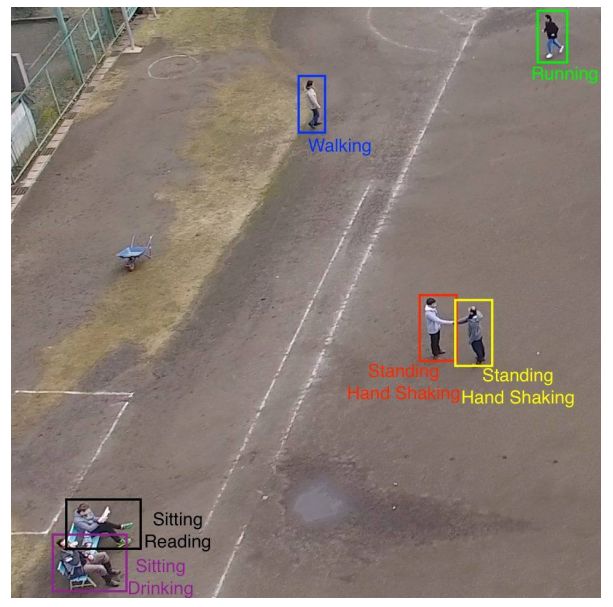

Real-Time Multiple Object Tracking - A Study on the Importance of Speed

In this project, we implement a multiple object tracker, following the tracking-by-detection paradigm, as an extension of an existing method. It works by modelling the movement of objects by solving the filtering problem, and associating detections with predicted new locations in new frames using the Hungarian algorithm.

Code: https://github.com/samuelmurray/tracking-by-detection

Paper: https://arxiv.org/abs/1709.03572v2
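The association step can be illustrated with a small sketch that matches predicted track boxes to new detections by maximising total IoU. Two caveats: the predicted boxes are assumed to come from a motion filter that is not shown here, and the assignment is found by brute-force search over permutations as a simple stand-in for the Hungarian algorithm (it gives the same optimum but is only practical for a handful of boxes). The box format `(x1, y1, x2, y2)`, the `min_iou` threshold, and the function names are assumptions for illustration.

```python
from itertools import permutations


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def associate(predicted, detections, min_iou=0.3):
    """Return (track_idx, det_idx) pairs that maximise total IoU.

    Brute-force search over one-to-one assignments; pairs whose IoU is
    below min_iou are dropped afterwards.
    """
    n_t, n_d = len(predicted), len(detections)
    if n_t == 0 or n_d == 0:
        return []
    scores = [[iou(p, d) for d in detections] for p in predicted]
    best_pairs, best_total = [], -1.0
    if n_t <= n_d:
        options = (list(zip(range(n_t), perm))
                   for perm in permutations(range(n_d), n_t))
    else:
        options = (list(zip(perm, range(n_d)))
                   for perm in permutations(range(n_t), n_d))
    for pairs in options:
        total = sum(scores[t][d] for t, d in pairs)
        if total > best_total:
            best_pairs, best_total = pairs, total
    return [(t, d) for t, d in best_pairs if scores[t][d] >= min_iou]


if __name__ == "__main__":
    predicted = [(0, 0, 10, 10), (20, 20, 30, 30)]
    detections = [(21, 21, 31, 31), (1, 1, 11, 11), (100, 100, 110, 110)]
    print(associate(predicted, detections))  # [(0, 1), (1, 0)]
```

Detections left unmatched (the third one above) would typically start new tracks, and tracks left unmatched would be candidates for deletion.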

-END-
