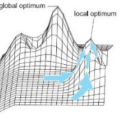

We introduce a new algorithm to learn on the fly the parameter value $\theta_\star:=\mathrm{argmax}_{\theta\in\Theta}\mathbb{E}[\log f_\theta(Y_0)]$ from a sequence $(Y_t)_{t\geq 1}$ of independent copies of $Y_0$, where $\{f_\theta,\,\theta\in\Theta\subseteq\mathbb{R}^d\}$ is a parametric model. The main idea of the proposed approach is to define a sequence $(\tilde{\pi}_t)_{t\geq 1}$ of probability distributions on $\Theta$ which (i) is shown to concentrate on $\theta_\star$ as $t\rightarrow\infty$ and (ii) can be estimated in an online fashion by means of a standard particle filter (PF) algorithm. The sequence $(\tilde{\pi}_t)_{t\geq 1}$ depends on a learning rate $h_t\rightarrow 0$: the more slowly $h_t$ converges to zero, the greater the ability of the PF approximation $\tilde{\pi}_t^N$ of $\tilde{\pi}_t$ to escape from a local optimum of the objective function, but the slower the rate at which $\tilde{\pi}_t$ concentrates on $\theta_\star$. To reconcile the ability to escape from local optima with fast convergence towards $\theta_\star$, we exploit the acceleration property of averaging, well known in the stochastic gradient descent literature, by letting $\bar{\theta}_t^N:=t^{-1}\sum_{s=1}^t \int_{\Theta}\theta\,\tilde{\pi}_s^N(\mathrm{d} \theta)$ be the proposed estimator of $\theta_\star$. Our numerical experiments suggest that $\bar{\theta}_t^N$ converges to $\theta_\star$ at the optimal $t^{-1/2}$ rate in challenging models and in situations where $\tilde{\pi}_t^N$ concentrates on this parameter value at a slower rate. We illustrate the practical usefulness of the proposed optimization algorithm for online parameter learning and for computing the maximum likelihood estimator.
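A minimal sketch of the idea, under assumptions that are ours and not the paper's: a one-dimensional Gaussian location model $f_\theta=\mathcal{N}(\theta,1)$ (so that $\theta_\star=\mathbb{E}[Y_0]$), Gaussian random-walk moves with scale $h_t=t^{-\alpha}$, multinomial resampling at every step, and a $\mathcal{N}(0,5^2)$ initial particle cloud. The hypothetical function `pf_averaged_estimate` moves $N$ particles, reweights them by $f_\theta(Y_t)$, records the mean of the weighted particle measure $\tilde{\pi}_t^N$, and returns the running average $\bar{\theta}_t^N$. It is a plain bootstrap-style illustration, not the authors' implementation.

```python
import math
import random
from typing import Sequence


def log_density(theta: float, y: float) -> float:
    """Log-density of the hypothetical model f_theta = N(theta, 1) at y."""
    return -0.5 * (y - theta) ** 2 - 0.5 * math.log(2.0 * math.pi)


def pf_averaged_estimate(ys: Sequence[float], n_particles: int = 200,
                         alpha: float = 0.5, init_scale: float = 5.0,
                         seed: int = 0) -> float:
    """Return bar_theta_t = t^{-1} * sum_s (mean of tilde_pi_s^N) after all ys.

    Assumptions (not from the source): random-walk moves with scale
    h_t = t**(-alpha), multinomial resampling at every step, and an
    N(0, init_scale**2) initial particle cloud.
    """
    if not ys:
        raise ValueError("need at least one observation")
    rng = random.Random(seed)
    particles = [rng.gauss(0.0, init_scale) for _ in range(n_particles)]
    running_sum = 0.0
    for t, y in enumerate(ys, start=1):
        h_t = t ** (-alpha)  # learning rate, decreasing to zero
        # Mutation: jitter each particle with Gaussian noise of scale h_t.
        particles = [p + h_t * rng.gauss(0.0, 1.0) for p in particles]
        # Reweighting by the likelihood of the new observation y_t
        # (log-weights shifted by their max for numerical stability).
        log_w = [log_density(p, y) for p in particles]
        m = max(log_w)
        w = [math.exp(lw - m) for lw in log_w]
        total = sum(w)
        w = [wi / total for wi in w]
        # Mean of the weighted particle approximation tilde_pi_t^N.
        running_sum += sum(wi * p for wi, p in zip(w, particles))
        # Multinomial resampling to obtain an equally weighted cloud.
        particles = rng.choices(particles, weights=w, k=n_particles)
    return running_sum / len(ys)


if __name__ == "__main__":
    data_rng = random.Random(1)
    theta_star = 2.0  # true parameter of the simulated data
    ys = [data_rng.gauss(theta_star, 1.0) for _ in range(1000)]
    print(pf_averaged_estimate(ys))
```

In this sketch, a larger `alpha` shrinks the moves faster, so the particle cloud sharpens sooner but, in a multimodal model, would have a harder time leaving a local optimum; averaging the per-step means is what is meant to recover a fast rate for the final estimate.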
