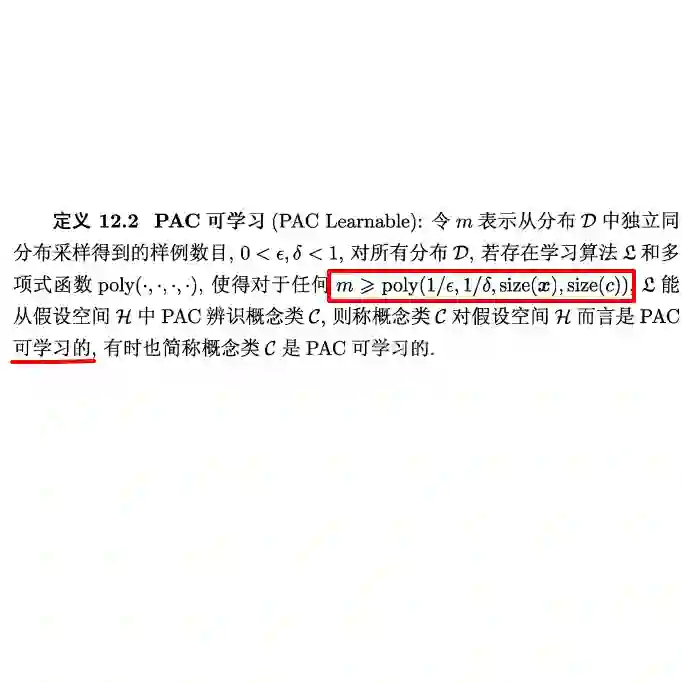

We study a fundamental question of domain generalization: given a family of domains (i.e., data distributions), how many randomly sampled domains do we need to collect data from in order to learn a model that performs reasonably well on every seen and unseen domain in the family? We model this problem in the PAC framework and introduce a new combinatorial measure, which we call the domain shattering dimension. We show that this dimension characterizes the domain sample complexity. Furthermore, we establish a tight quantitative relationship between the domain shattering dimension and the classic VC dimension, demonstrating that every hypothesis class that is learnable in the standard PAC setting is also learnable in our setting.

翻译:我们研究域泛化的一个基本问题:给定一个领域族(即数据分布族),我们需要从多少个随机采样的领域中收集数据,才能学得一个在该族内所有已见及未见领域上均表现良好的模型?我们在PAC框架下对此问题进行建模,并引入一种新的组合度量——域粉碎维度。我们证明该维度刻画了领域样本复杂度。此外,我们建立了域粉碎维度与经典VC维度之间的紧致定量关系,表明所有在标准PAC设定下可学习的假设类,在我们的设定下同样可学习。