本教程将概述最近机器学习对组合优化的影响,特别是在混合整数规划(MIP)框架下。涵盖的主题将包括用于预测可行解决方案的ML和强化学习,使用ML改进精确求解器,在精确MIP求解器中学习的软件框架,以及新兴的以决策为中心的学习范式。

https://sites.google.com/view/ml-co-aaai-21/

组合优化(CO)是计算机科学、人工智能(AI)和运筹学的基石。它在从机组人员规划到运动日程安排和的工业应用中取得了广泛的成功。虽然CO过去是大多数人工智能研究的基础,通过可满足性问题(SAT),现代人工智能研究已经转向更多的概率方法,并且这两个领域之间的联系已经减弱。然而,在过去的五到十年里,人们对使用机器学习方法改进组合优化的兴趣又强烈起来。

本教程旨在向观众介绍这一令人兴奋的不断发展的领域。我们相信,听众将从提出的教程中获益良多,因为它将布局这个研究空间的视角,不同的ML技术在CO设置中的优点,以及各种受益于ML使用的CO任务。我们还将引入一个新的开源库,Ecole,旨在方便该领域的新人访问。虽然本教程将主要关注作为CO的具体数学框架的混合整数规划,我们也将接触到MIP和其他约束推理框架之间的关系,如可满足性(SAT)和约束满足性(CSP),因为将提出的大多数思想都将适用于这些框架。

内容目录:

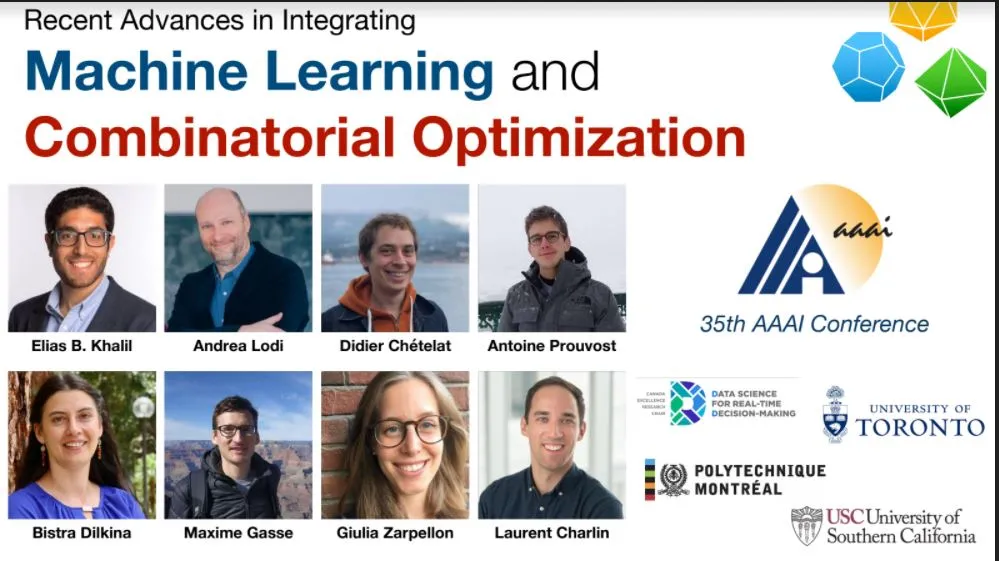

Part I by Elias B. Khalil:

- 组合优化导论 Introduction to combinatorial optimization & Tutorial overview.

- Modeling decision-making problems with Mixed Integer Programming (MIP);

- Complexity and solution approaches (exact and heuristic);

- Real-world applications;

- Data-driven algorithm design.

Part 2 by Elias B. Khalil

- 机器学习方法 The pure ML approach: predicting feasible solutions.

- Reinforcement learning for combinatorial optimization;

- Neural network architectures for representing graph problems;

- Limitations: lack of guarantees, scalability challenges.

Part 3 by Didier Chételat & Maxime Gasse: [slides]

- 混合方法 The hybrid approach: improving exact solvers with ML.

- The branch-and-bound framework for mixed-integer linear programs (MIP);

- Standard approaches to solver engineering;

- Learning solver search policies: a Markov decision process (MDP) perspective;

- Overview of tasks of interest;

- Open challenges for ML/RL.

Part 4 by Giulia Zarpellon & Laurent Charlin

- 机器学习MIP解决 Machine learning for MIP solving: challenges & literature.

- Hands-on ML-for-MIP with a focus on the Branching problem;

- Representations & Features;

- Generalization notions;

- Data & Metrics.

Part 5 by Antoine Prouvost

- Ecole: A python framework for learning in exact MIP solvers.

- A streamlined interface for doing ML in the open-source MIP solver SCIP, based on OpenAI Gym;

- Example: "learning to branch'' using Ecole;

- Easily extending predefined environments for your own research; Performance evaluation and analysis.

Part 6 by Bistra Dilkina 决策 Decision-focused Learning. Integrating LP/MIP combinatorial downstream tasks end-to-end in learning; Integrating graph optimization tasks end-to-end in learning.

Part 7 by Andrea Lodi: [slides]

- Concluding remarks and new frontiers.

- Business applications;

- Recap of various contributions in this area;

- Evaluation and Challenges going forward.