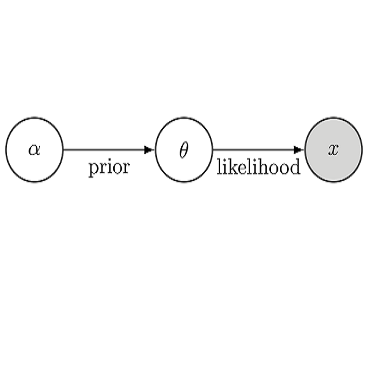

In Bayesian inference, prior hyperparameters are chosen subjectively or estimated using empirical Bayes methods. Generalised Bayesian Inference (GBI) adds a learning-rate hyperparameter. The problem is compounded in Semi-Modular Inference (SMI), a GBI framework for multiple datasets (multi-modular problems). Any GBI workflow must check sensitivity to the choice of hyperparameters, but running MCMC or fitting a variational approximation at each hyperparameter value of interest is impractical. Previous authors have used Simulation-based Inference to amortise over data and hyperparameters, fitting a posterior approximation that targets the forward-KL divergence. However, for GBI and SMI posteriors it is not possible to amortise over data, as there is no generative model. Working with a variational family parameterised by a conditional normalising flow, we give a direct variational approximation for GBI and SMI posteriors that targets the reverse-KL divergence and is amortised over prior and loss hyperparameters at fixed data. The approximation can be sampled efficiently at different hyperparameter values without refitting, and so supports efficient robustness checks and hyperparameter selection. We show that there exist amortised conditional normalising-flow architectures which are universal approximators. We illustrate our methods with an epidemiological example well known in SMI work and then give the motivating application, a spatial location-prediction task for linguistic-profile data. SMI gives improved prediction with hyperparameters chosen using our amortised framework. The code is available online.
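The idea of amortising a reverse-KL variational fit over a learning rate at fixed data can be sketched on a toy problem. The example below is not the authors' code. It assumes a conjugate Gaussian model with made-up data, and it replaces the conditional normalising flow with a single affine map whose shift and log-scale are linear in the learning rate `eta`. All names (`fit_amortised`, `q_params`, `exact_power_posterior`) are hypothetical. The closed-form power posterior is used only to check the fit.

```python
import numpy as np

# Toy data (made up): y_i ~ N(theta, 1) with prior theta ~ N(0, 1).
y = np.array([0.8, 1.2, 1.0, 0.9, 1.1])
n, S = len(y), y.sum()


def exact_power_posterior(eta):
    """Closed-form power posterior N(mu, 1/lam) at learning rate eta (check only)."""
    lam = 1.0 + eta * n
    return eta * S / lam, lam


def features(eta):
    """Conditioner input [1, eta - 1]; centring keeps plain SGD well conditioned."""
    return np.stack([np.ones_like(eta), eta - 1.0], axis=-1)


def q_params(phi, eta):
    """Mean and log-sd of q(theta | eta) for scalar or array eta."""
    f = features(np.atleast_1d(np.asarray(eta, dtype=float)))
    return f @ phi["a"], f @ phi["b"]


def fit_amortised(steps=3000, batch=256, lr=0.05, eta_range=(0.5, 1.5), seed=0):
    """Minimise E_eta[ KL(q(. | eta) || p_eta) ] by reparameterised SGD."""
    rng = np.random.default_rng(seed)
    phi = {"a": np.zeros(2), "b": np.zeros(2)}
    for _ in range(steps):
        eta = rng.uniform(*eta_range, size=batch)  # hyperparameter draws
        eps = rng.standard_normal(batch)           # base-distribution draws
        f = features(eta)
        m, ls = f @ phi["a"], f @ phi["b"]
        s = np.exp(ls)
        theta = m + s * eps                        # theta ~ q(. | eta)
        # Gradient in theta of the unnormalised negative log target
        #   0.5 * theta^2 + eta * 0.5 * sum_i (y_i - theta)^2
        g_theta = theta - eta * (S - n * theta)
        # Chain rule through theta = m + exp(ls) * eps; the "- 1" is the
        # gradient of log q = -ls + const along the reparameterised path.
        g_m = g_theta
        g_ls = g_theta * s * eps - 1.0
        phi["a"] -= lr * (f * g_m[:, None]).mean(axis=0)
        phi["b"] -= lr * (f * g_ls[:, None]).mean(axis=0)
    return phi


if __name__ == "__main__":
    phi = fit_amortised()
    rng = np.random.default_rng(1)
    for eta in (0.6, 1.0, 1.4):
        m, ls = q_params(phi, eta)
        draws = m[0] + np.exp(ls[0]) * rng.standard_normal(5)  # no refitting
        mu, lam = exact_power_posterior(eta)
        print(f"eta={eta}: fitted ({m[0]:.3f}, {np.exp(ls[0]):.3f}), "
              f"exact ({mu:.3f}, {lam ** -0.5:.3f}), draws {np.round(draws, 2)}")
```

After a single fit, changing `eta` costs only one evaluation of `q_params`, which is what makes sensitivity sweeps cheap. The linear conditioner is deliberately crude, so the fitted mean and standard deviation only approximate the exact values across the range of `eta`. A conditional normalising flow plays the same role with a far more flexible map.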
