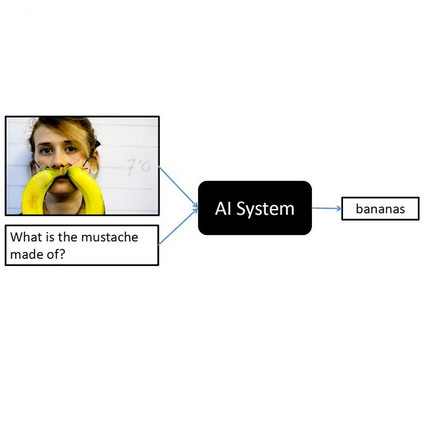

In visual question answering (VQA), a machine must answer a question given an associated image. Recently, accessibility researchers have explored whether VQA can be deployed in a real-world setting where users with visual impairments learn about their environment by capturing their visual surroundings and asking questions. However, most existing VQA benchmark datasets focus on machine "understanding", and it remains unclear how progress on those datasets corresponds to improvements in this real-world use case. We aim to answer this question by evaluating a variety of VQA models and examining the discrepancies between machine "understanding" datasets (VQA-v2) and accessibility datasets (VizWiz). Based on our findings, we discuss opportunities and challenges in VQA for accessibility and suggest directions for future work.
