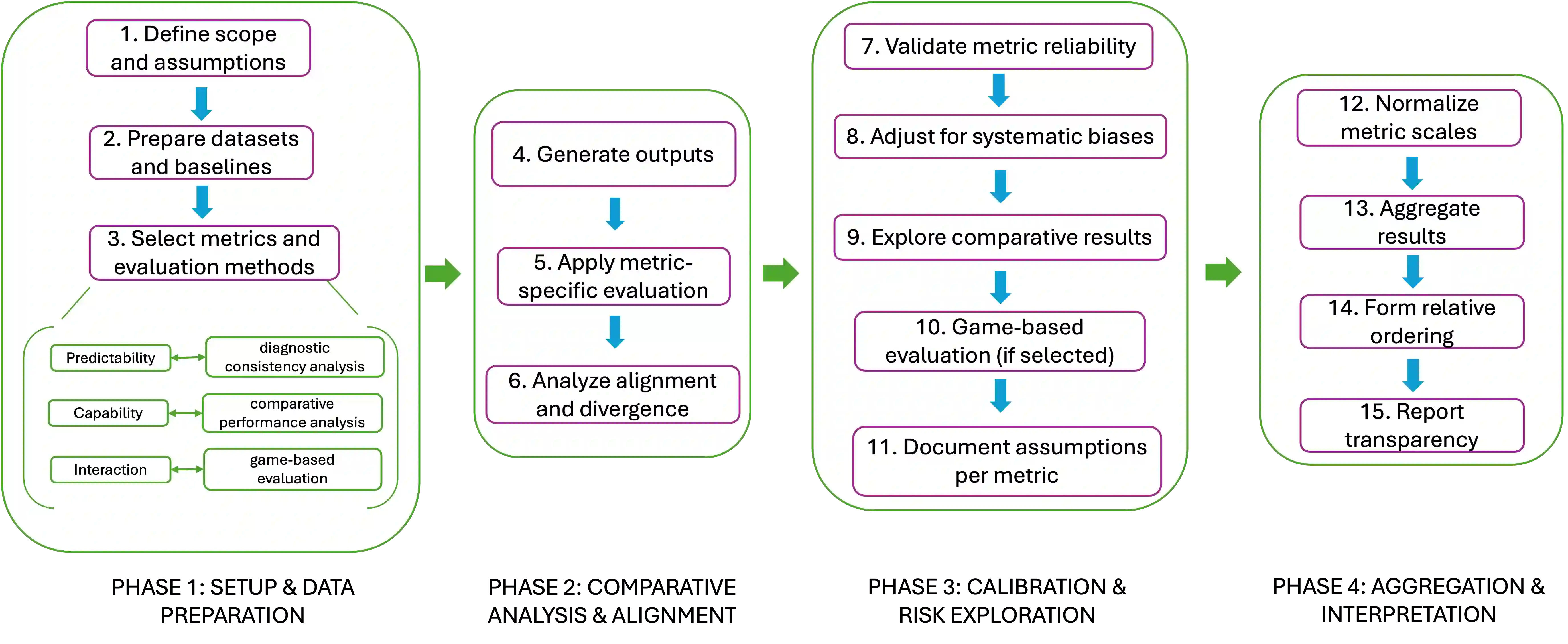

Before an AI system is deployed to replace an existing process, it must be compared with the incumbent to confirm that it improves outcomes without adding risk. Traditional evaluation relies on ground truth for both systems, but ground truth is often unavailable because outcomes are delayed or unknowable, obtaining it is costly, or data are incomplete, especially for long-standing systems that are deemed safe by convention. A more practical approach is to estimate the difference in risk between the two systems rather than the absolute risk of each. We therefore propose a marginal risk assessment framework that avoids dependence on ground truth or absolute risk. It emphasizes three relative evaluation methodologies: predictability, capability, and interaction dominance. By shifting the focus from absolute to relative evaluation, our approach equips software teams with actionable guidance: identifying where AI enhances outcomes, where it introduces new risks, and how to adopt such systems responsibly.
