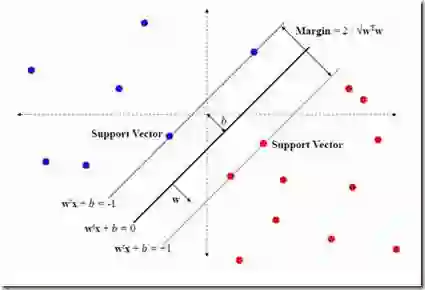

This paper investigates the efficacy of a regularized multi-task learning (MTL) framework based on SVM (M-SVM) to answer two questions: whether MTL always provides reliable results, and how MTL outperforms independent learning. We first find that M-SVM is Bayes risk consistent in the limit of large sample size. This implies that, despite dissimilarities between tasks, M-SVM always produces a reliable decision rule for each task in terms of misclassification error once the data size is large enough. Furthermore, we find that the interaction between tasks vanishes as the data size goes to infinity, and that the convergence rates of M-SVM and its single-task counterpart share the same upper bound. The former suggests that M-SVM cannot improve the performance of the limit classifier; based on the latter, we conjecture that the optimal convergence rate is not improved when the number of tasks is fixed. As a novel insight into MTL, our theoretical and experimental results agree closely that the benefit of MTL methods lies in improving the pre-convergence-rate factor (PCR, defined in Section III) rather than the convergence rate. Moreover, this improvement of the PCR factor is more significant when the data size is small.
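To make the distinction between the PCR factor and the convergence rate concrete, the sketch below assumes (for illustration only) an excess-risk upper bound of the form C · n^(-r), where r is the convergence rate and C plays the role of the PCR factor. The values of `RATE`, `PCR_SINGLE`, and `PCR_MULTI`, and the helpers `risk_bound` and `compare`, are hypothetical and not taken from the paper. With a shared rate and a smaller PCR factor for M-SVM, the relative improvement stays constant while the absolute gap shrinks as n grows, which is one way to read the claim that the benefit is most visible at small data sizes.

```python
# Illustrative sketch only: all constants below are hypothetical, not values
# from the paper. We assume an excess-risk upper bound of the form C * n**(-r),
# where r is the convergence rate and C is the pre-convergence-rate (PCR) factor.


def risk_bound(pcr: float, rate: float, n: int) -> float:
    """Hypothetical upper bound C * n^(-r) on the excess misclassification risk."""
    return pcr * n ** (-rate)


RATE = 0.5        # hypothetical rate shared by M-SVM and the single-task SVM
PCR_SINGLE = 4.0  # hypothetical PCR factor for independent (single-task) learning
PCR_MULTI = 2.0   # hypothetical, smaller PCR factor for M-SVM


def compare(n: int) -> tuple[float, float, float, float]:
    """Return (single-task bound, multi-task bound, absolute gap, ratio) at size n."""
    single = risk_bound(PCR_SINGLE, RATE, n)
    multi = risk_bound(PCR_MULTI, RATE, n)
    return single, multi, single - multi, multi / single


if __name__ == "__main__":
    for n in (100, 10_000, 1_000_000):
        single, multi, gap, ratio = compare(n)
        # The ratio stays fixed (same rate); the absolute gap decays like n^(-r).
        print(f"n={n:>9}: single={single:.4f} multi={multi:.4f} "
              f"gap={gap:.4f} ratio={ratio:.2f}")
```

Because both bounds share the exponent r, no choice of PCR factors changes how fast either bound decays; only the constant in front differs.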
