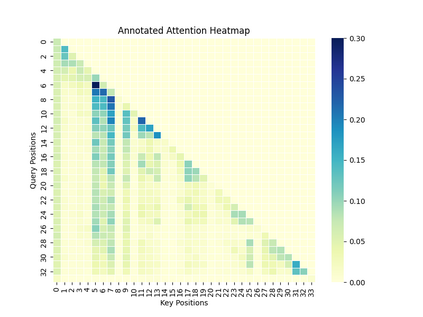

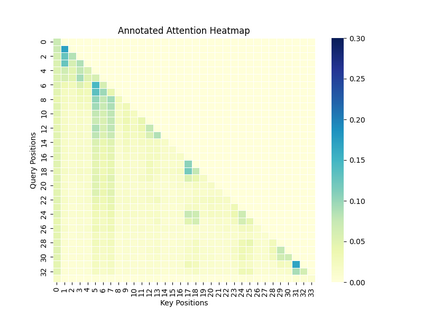

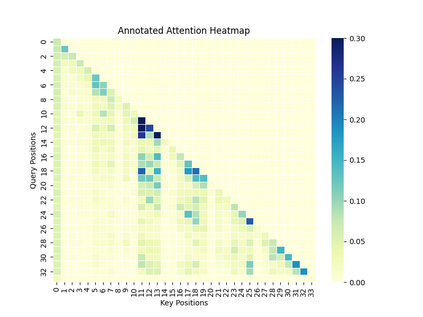

Rotary Position Embedding (RoPE) has shown strong performance in text-based Large Language Models (LLMs), but extending it to video remains challenging because of the intricate spatiotemporal structure of video frames. Existing adaptations, such as RoPE-3D, attempt to encode the spatial and temporal dimensions separately but suffer from two major limitations: positional bias in the attention distribution and discontinuities at video-text transitions. To overcome these issues, we propose Video Rotary Position Embedding (VRoPE), a novel positional encoding method tailored for Video-LLMs. Specifically, we introduce a more balanced encoding strategy that mitigates attention bias and spreads attention more uniformly across spatial positions. Additionally, our approach restructures positional indices to ensure a smooth transition between video and text tokens. Extensive experiments on different models show that VRoPE consistently outperforms previous RoPE variants, achieving significant improvements in video understanding, temporal reasoning, and retrieval tasks. Code is available at https://github.com/johncaged/VRoPE.
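
To make the two limitations above more concrete, the sketch below shows standard 1D RoPE and a RoPE-3D-style index layout for a video followed by text. It is an illustration under stated assumptions, not VRoPE and not the authors' code: the abstract does not specify RoPE-3D's exact scheme, so the layout here is assumed and modeled loosely on multimodal designs such as Qwen2-VL's M-RoPE. Video token `(t, h, w)` gets one index per axis, each embedding is split into three chunks rotated by their own axis index, and text tokens share a single index on all three axes, starting one past the largest video index. The names `rope_rotate`, `rope3d_rotate`, and `rope3d_indices` are hypothetical helpers introduced for illustration.

```python
import math
from typing import List, Sequence, Tuple


def rope_rotate(x: Sequence[float], pos: float, base: float = 10000.0) -> List[float]:
    """Standard 1D RoPE: rotate each pair (x[i], x[i+1]) by angle pos * base**(-i/d)."""
    d = len(x)
    if d % 2:
        raise ValueError("embedding dimension must be even")
    out = [0.0] * d
    for i in range(0, d, 2):
        angle = pos * base ** (-i / d)
        c, s = math.cos(angle), math.sin(angle)
        out[i] = x[i] * c - x[i + 1] * s
        out[i + 1] = x[i] * s + x[i + 1] * c
    return out


def rope3d_rotate(x: Sequence[float], pos3: Tuple[int, int, int],
                  base: float = 10000.0) -> List[float]:
    """Simplified 3D RoPE (assumption): split x into three equal chunks and
    rotate chunk j with the j-th axis index (temporal, height, width)."""
    d = len(x)
    if d % 6:
        raise ValueError("dimension must be divisible by 6")
    c = d // 3
    out: List[float] = []
    for j in range(3):
        out += rope_rotate(x[j * c:(j + 1) * c], pos3[j], base)
    return out


def rope3d_indices(T: int, H: int, W: int, n_text: int,
                   offset: int = 0) -> List[Tuple[int, int, int]]:
    """Assumed RoPE-3D-style position ids for a T x H x W video followed by n_text text tokens."""
    ids: List[Tuple[int, int, int]] = []
    for t in range(T):
        for h in range(H):
            for w in range(W):
                ids.append((offset + t, offset + h, offset + w))
    # Text resumes one past the largest index used on ANY axis of the video.
    start = max(max(p) for p in ids) + 1
    ids.extend((start + i,) * 3 for i in range(n_text))
    return ids


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


if __name__ == "__main__":
    q = [0.3, -1.2, 0.5, 0.8]
    k = [1.0, 0.4, -0.7, 0.2]
    # 1D RoPE: the query-key score depends only on the offset m - n.
    s1 = dot(rope_rotate(q, 5), rope_rotate(k, 2))
    s2 = dot(rope_rotate(q, 105), rope_rotate(k, 102))
    print(abs(s1 - s2) < 1e-9)

    # Boundary between the last video token and the first text token.
    ids = rope3d_indices(T=4, H=6, W=8, n_text=2)
    last_video, first_text = ids[4 * 6 * 8 - 1], ids[4 * 6 * 8]
    print(last_video, first_text)
    print([f - l for f, l in zip(first_text, last_video)])
```

Running it prints `True`, then `(3, 5, 7) (8, 8, 8)`, then `[5, 3, 1]`. Under this assumed layout, the jump from the last video token to the first text token differs per axis (5 temporal, 3 height, 1 width), so the video-text boundary is not a uniform one-step transition. This is the kind of discontinuity that restructured indices are meant to smooth. How VRoPE actually reassigns indices is described in the paper and repository, not here.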
