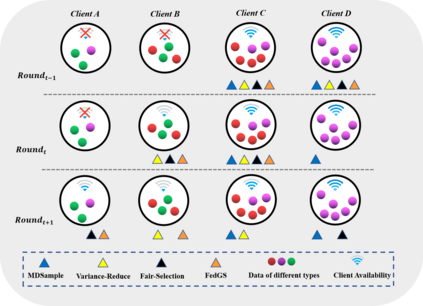

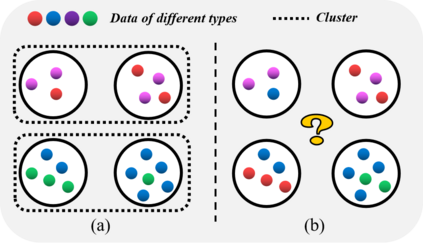

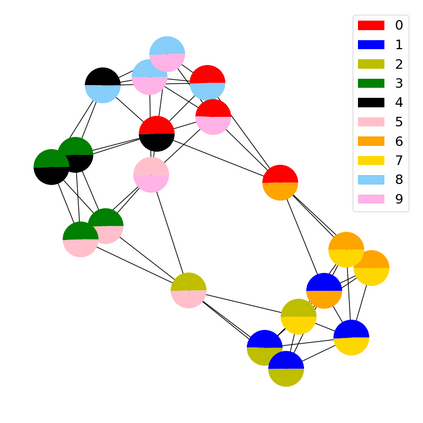

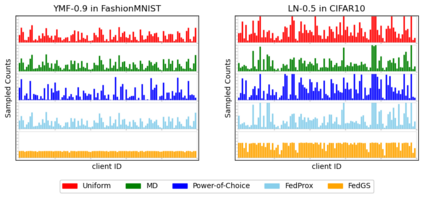

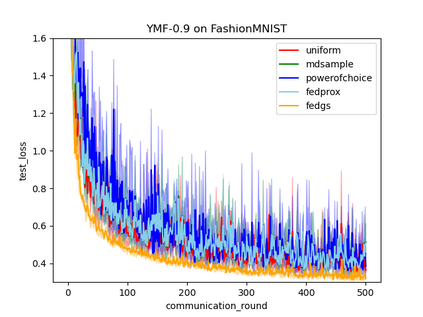

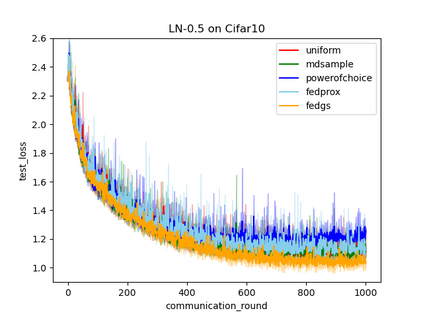

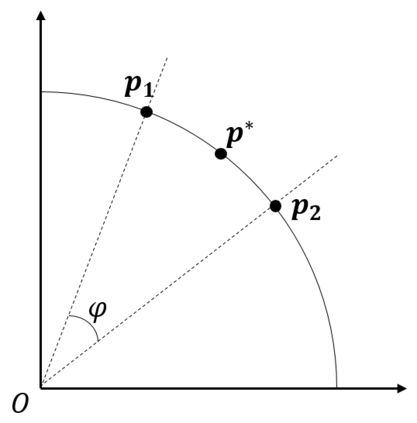

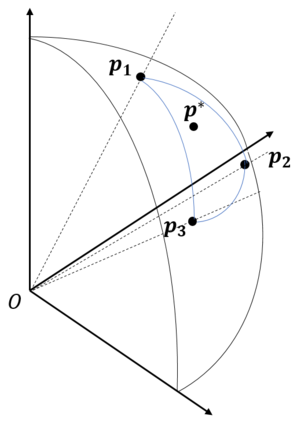

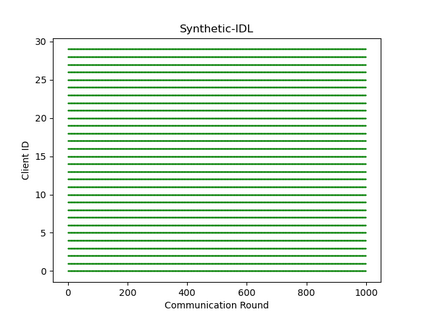

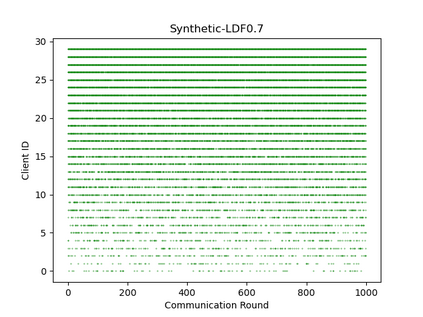

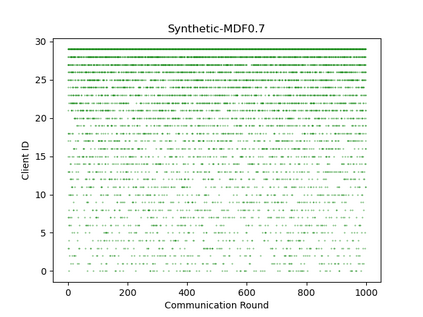

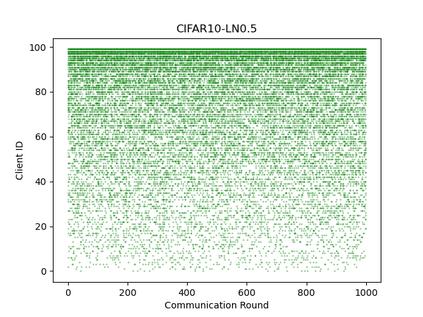

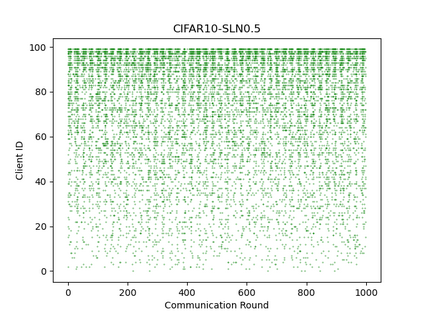

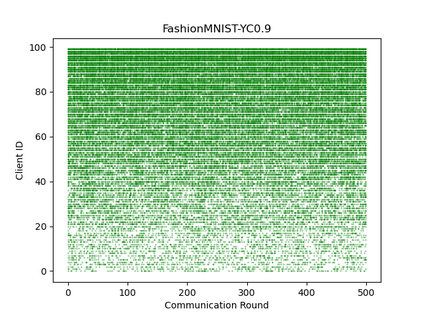

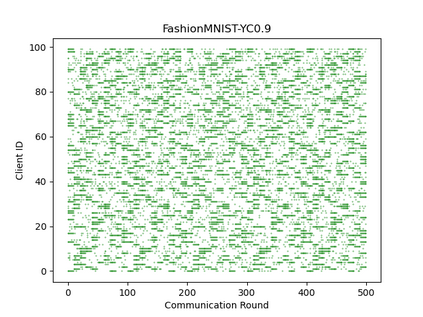

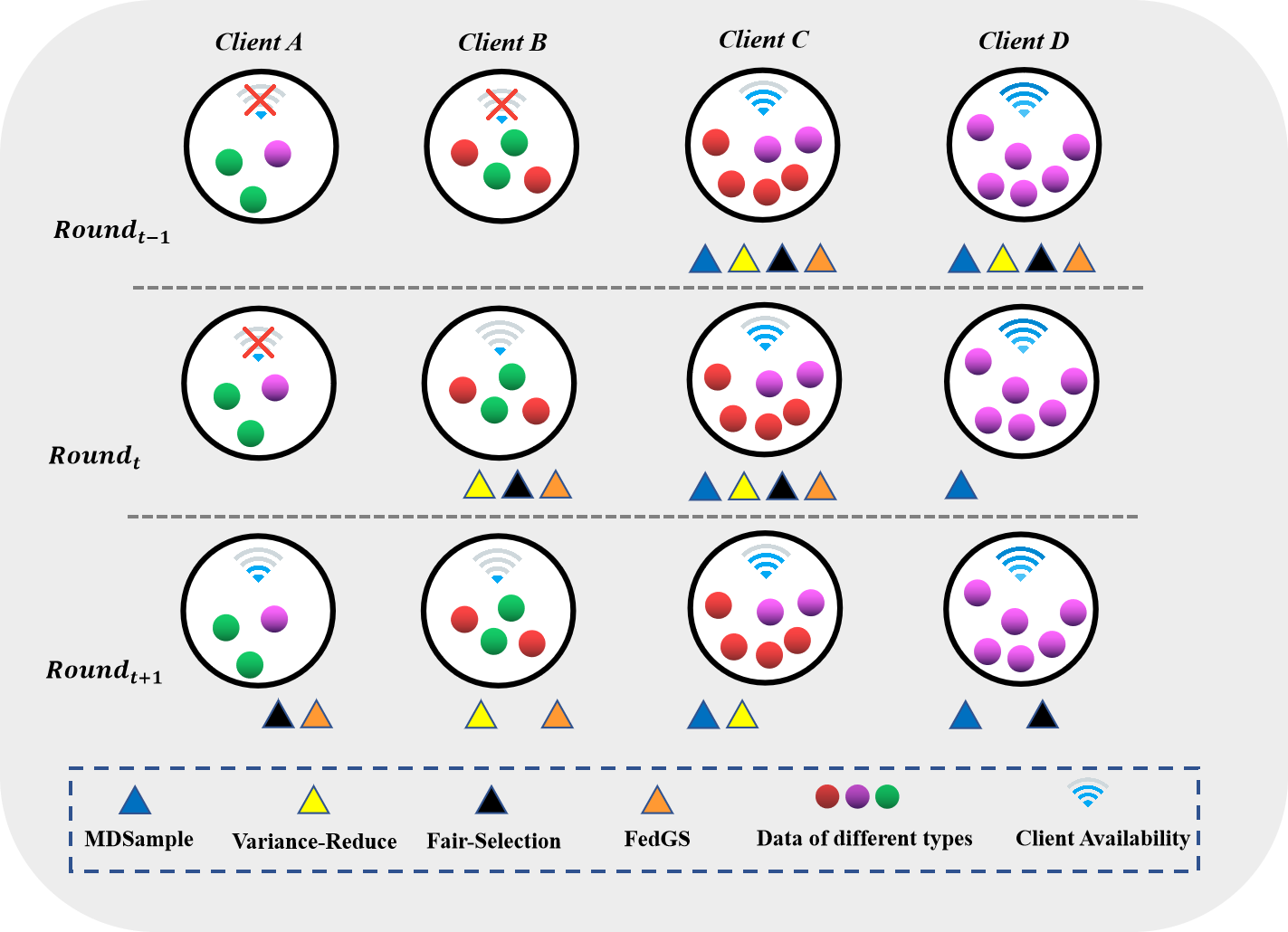

While federated learning has shown strong results in optimizing a machine learning model without direct access to the original data, its performance can be hindered by intermittent client availability, which slows convergence and biases the final learned model. Achieving both stable and bias-free training under arbitrary client availability poses significant challenges. To address these challenges, we propose a framework named Federated Graph-based Sampling (FedGS), which simultaneously stabilizes the global model update and mitigates long-term bias under arbitrary client availability. First, we model the data correlations of clients with a Data-Distribution-Dependency Graph (3DG) that helps keep the data of the sampled clients far apart from each other, which is theoretically shown to improve the approximation to the optimal model update. Second, subject to the constraint that the sampled clients are far apart in data distribution, we further minimize the variance of the number of times each client is sampled, to mitigate long-term bias. To validate the effectiveness of FedGS, we conduct experiments on three datasets under a comprehensive set of seven client availability modes. Our experimental results confirm FedGS's advantage in both enabling a fair client-sampling scheme and improving model performance under arbitrary client availability. Our code is available at https://github.com/WwZzz/FedGS.
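To make the two sampling criteria concrete, the following is a minimal illustrative sketch and not the authors' algorithm (their implementation is in the repository linked above). It assumes a precomputed pairwise distance matrix `dist` as a stand-in for distances derived from the 3DG, and it combines the two goals with a simple greedy rule and a trade-off weight `alpha`. The function name, parameters, and scoring rule are all assumptions made for illustration.

```python
import numpy as np

def greedy_sample(dist, counts, available, m, alpha=1.0):
    """Greedily pick up to m available clients (illustrative sketch only).

    dist      : (n, n) symmetric array of pairwise data-distribution
                distances (assumed given; stands in for 3DG distances).
    counts    : length-n array, how often each client was sampled so far.
    available : indices of clients that are reachable this round.
    alpha     : hypothetical weight on the sampling-count variance penalty.
    """
    candidates = list(available)
    trial = np.asarray(counts, dtype=float).copy()
    chosen = []
    while len(chosen) < min(m, len(candidates)):
        best, best_score = None, -np.inf
        for c in candidates:
            if c in chosen:
                continue
            # Reward: total distance from c to the clients already chosen.
            spread = sum(dist[c, s] for s in chosen)
            # Penalty: variance of sampling counts if c were picked now.
            trial[c] += 1
            score = spread - alpha * np.var(trial)
            trial[c] -= 1
            if score > best_score:
                best, best_score = c, score
        chosen.append(best)
        trial[best] += 1
    return chosen

# Clients 0 and 1 hold similar data; clients 2 and 3 are far from all others.
dist = np.array([
    [0.0, 0.1, 1.0, 1.0],
    [0.1, 0.0, 1.0, 1.0],
    [1.0, 1.0, 0.0, 1.0],
    [1.0, 1.0, 1.0, 0.0],
])
picked = greedy_sample(dist, counts=[0, 0, 0, 0], available=[0, 1, 2], m=2)
```

In this toy setup the sampler avoids selecting the two similar clients 0 and 1 together, and a client that has already been sampled many times is penalized through the variance term. Only currently available clients are ever considered, which reflects the arbitrary-availability setting described in the abstract.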
