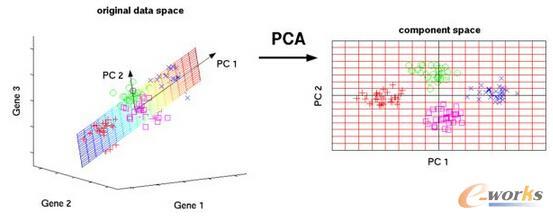

While syntactic tools such as part-of-speech (POS) tagging have helped us understand sentence structures and their distribution across diverse corpora, they are complex and pose challenges in natural language processing (NLP). This study focuses on understanding sentence structure balance, that is, the harmonious use of nouns, verbs, determiners, and other lexical categories, without relying on such tools. It proposes a novel statistical method that represents the text of 11 corpora from various sources as American Standard Code for Information Interchange (ASCII) codes, compresses these representations with principal component analysis (PCA) to examine their lexical category alignment, and analyzes the results with histograms and normality tests, namely the Shapiro-Wilk and Anderson-Darling tests. Relying on ASCII codes simplifies text processing; the approach does not replace syntactic tools but complements them as a resource-efficient way to assess text balance. The story generated by Grok shows near-normality, indicating balanced sentence structures in large language model (LLM) outputs, whereas 4 of the remaining 10 corpora pass the normality tests. Further research could explore applications in text quality evaluation and style analysis, integrating syntactic tools for broader tasks.
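The pipeline described above (ASCII encoding, PCA compression, normality testing) can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: splitting text into sentences on `.`, `!`, `?`, dropping non-ASCII characters, zero-padding each sentence to a hypothetical `max_len`, and keeping only the first principal component are all choices made here for illustration. The helper names `ascii_matrix`, `first_pc_scores`, and `normality_report` are hypothetical. The lexical category alignment step is not modelled. PCA is computed directly with NumPy's SVD, and the tests use SciPy's `stats.shapiro` and `stats.anderson`.

```python
import re

import numpy as np
from scipy import stats

ALPHA = 0.05  # assumed significance level for both tests


def ascii_matrix(text: str, max_len: int = 64) -> np.ndarray:
    """Encode each sentence as a fixed-length vector of ASCII codes.

    Assumptions (hypothetical, for illustration only): sentences are split
    naively on '.', '!' and '?'; non-ASCII characters are dropped; each
    vector is truncated or zero-padded to max_len.
    """
    sentences = [s.strip() for s in re.split(r"[.!?]+", text) if s.strip()]
    rows = []
    for s in sentences:
        codes = [ord(c) for c in s if ord(c) < 128][:max_len]
        codes += [0] * (max_len - len(codes))  # zero-pad short sentences
        rows.append(codes)
    return np.asarray(rows, dtype=float)


def first_pc_scores(X: np.ndarray) -> np.ndarray:
    """Project each row onto the first principal component (PCA via SVD)."""
    Xc = X - X.mean(axis=0)                      # center each column
    _, _, vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ vt[0]                            # 1-D compressed scores


def normality_report(scores: np.ndarray) -> dict:
    """Run Shapiro-Wilk and Anderson-Darling (normal) tests on the scores."""
    sw = stats.shapiro(scores)
    ad = stats.anderson(scores, dist="norm")
    # Pick the tabulated critical value closest to the 5% level.
    idx = int(np.argmin(np.abs(np.asarray(ad.significance_level) - 5.0)))
    return {
        "shapiro_p": float(sw.pvalue),
        "shapiro_pass": bool(sw.pvalue > ALPHA),
        "anderson_stat": float(ad.statistic),
        "anderson_pass": bool(ad.statistic < ad.critical_values[idx]),
    }


if __name__ == "__main__":
    sample = ("The cat sat on the mat. A dog ran fast! Why is the sky blue? "
              "Birds sing at dawn. Rain fell all night.")
    scores = first_pc_scores(ascii_matrix(sample, max_len=16))
    print(normality_report(scores))
    # A histogram of `scores` (e.g. via np.histogram) gives the visual check.
```

A real corpus would supply far more than five sentences; with so few samples both tests have little power, so the toy input only demonstrates the mechanics. Because the sign of a principal component is arbitrary, the test statistics are unaffected by which direction SVD returns.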
