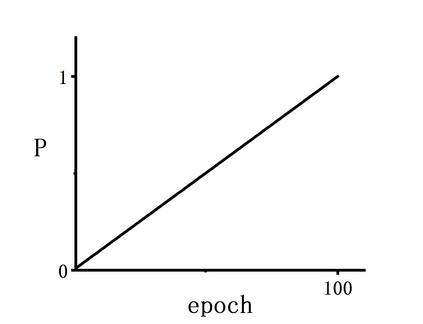

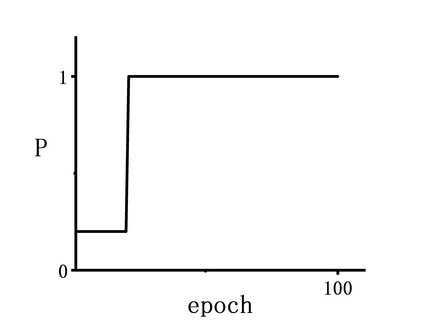

Semi-supervised fine-grained recognition is a challenging task because of data imbalance, high inter-class similarity, and domain mismatch. In recent years, the field has seen substantial progress, and many methods have achieved strong performance. However, these methods generalize poorly to large-scale datasets such as Semi-iNat, because they are susceptible to noise in the unlabeled data and struggle to learn features from imbalanced fine-grained data. In this work, we propose the Bilateral-Branch Self-Training Framework (BiSTF), a simple yet effective framework for improving existing semi-supervised learning (SSL) methods on class-imbalanced and domain-shifted fine-grained data. By adjusting the update frequency through stochastic epoch update, BiSTF iteratively retrains a baseline SSL model with a labeled set expanded by selectively adding pseudo-labeled samples from an unlabeled set, where the distribution of the pseudo-labeled samples is the same as that of the labeled data. We show that BiSTF outperforms the existing state-of-the-art SSL algorithm on the Semi-iNat dataset.
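The selection step described in the abstract, adding pseudo-labeled samples so that their class distribution matches that of the labeled data, can be illustrated with a minimal sketch. The abstract does not give the exact selection rule, so everything here is an assumption made for illustration: the function name `select_pseudo_labels`, the `budget` parameter, the per-class quota proportional to labeled class frequency, and ranking candidates by the model's top predicted probability. The stochastic epoch update and the retraining loop are not shown.

```python
from collections import Counter


def select_pseudo_labels(labeled_targets, unlabeled_probs, budget):
    """Pick pseudo-labeled samples whose class mix follows the labeled set.

    Hypothetical helper, not the authors' implementation.
    labeled_targets: list of class ids for the labeled set.
    unlabeled_probs: one list of class probabilities per unlabeled sample.
    budget: approximate number of pseudo-labeled samples to add.
    Returns a sorted list of (unlabeled_index, pseudo_label) pairs.
    """
    class_counts = Counter(labeled_targets)
    total = sum(class_counts.values())

    # Group unlabeled samples by predicted class, keeping the confidence.
    by_class = {}
    for idx, probs in enumerate(unlabeled_probs):
        pred = max(range(len(probs)), key=probs.__getitem__)
        by_class.setdefault(pred, []).append((probs[pred], idx))

    selected = []
    for cls, count in class_counts.items():
        # Assumed rule: quota proportional to the labeled class frequency.
        quota = round(budget * count / total)
        # Most confident predictions first.
        candidates = sorted(by_class.get(cls, []), reverse=True)
        selected.extend((idx, cls) for _, idx in candidates[:quota])
    return sorted(selected)


if __name__ == "__main__":
    labeled_targets = [0, 0, 0, 1]  # class 0 is three times as frequent
    unlabeled_probs = [
        [0.90, 0.10],
        [0.20, 0.80],
        [0.60, 0.40],
        [0.30, 0.70],
        [0.80, 0.20],
        [0.55, 0.45],
    ]
    print(select_pseudo_labels(labeled_targets, unlabeled_probs, budget=4))
```

In a self-training loop of the kind the abstract describes, the returned pairs would be appended to the labeled set before the baseline SSL model is retrained. A class with fewer confident candidates than its quota simply contributes fewer samples in this sketch.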
