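【PaoPao One Minute】Shadow Detection with Conditional Generative Adversarial Networks

One minute a day to take you through papers from top robotics conferences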

Title: Shadow Detection with Conditional Generative Adversarial Networks

Authors: Vu Nguyen, Tomas F. Yago Vicente, Maozheng Zhao, Minh Hoai, Dimitris Samaras

Source: International Conference on Computer Vision (ICCV 2017)

Compiled by: 黄思宇

Reviewed by: 颜青松, 陈世浪

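Individuals are welcome to share this post on WeChat Moments. Other organizations or self-media accounts that wish to repost it should leave a message on the account to request authorization.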

Summary

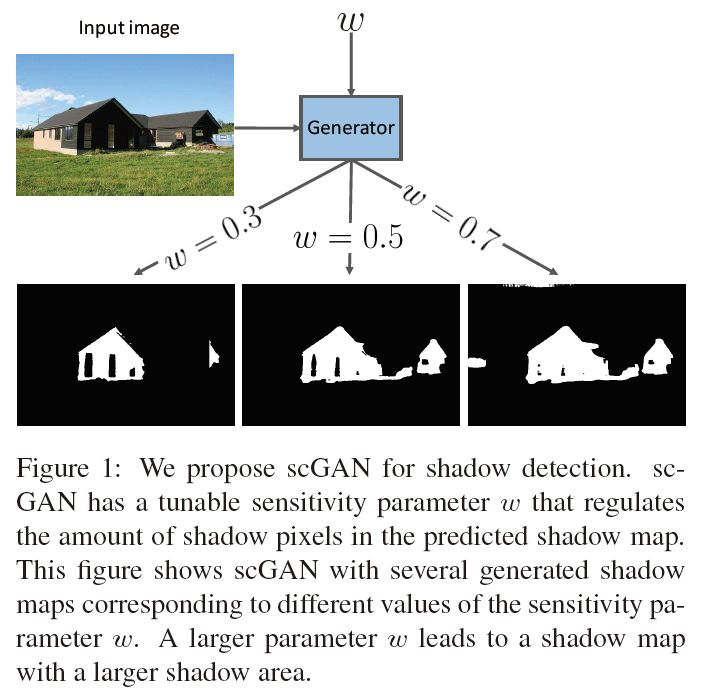

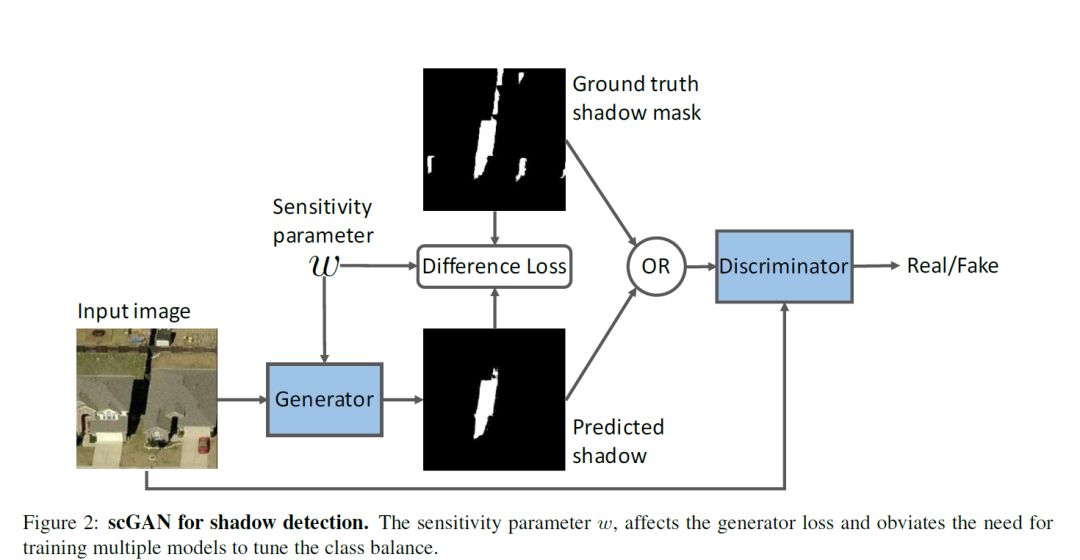

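This paper introduces scGAN, an extension of conditional Generative Adversarial Networks (GAN) tailored to the problem of shadow detection in images. Previous shadow detection methods focus on learning the local appearance of shadow regions, and they reason about local context only in a limited way, through pairwise potentials in a Conditional Random Field. In contrast, the proposed adversarial approach can model higher-level relationships and global scene characteristics. The shadow detector is trained as the generator of a conditional GAN, and its shadow accuracy is improved by combining the typical GAN loss with a data loss term. Because shadow labels are unevenly distributed, the data term is a weighted cross entropy. With the standard GAN architecture, setting the cross-entropy weight properly would require training multiple GANs, a computationally expensive grid search. scGAN instead introduces an additional sensitivity parameter w to the generator, which effectively parameterizes the loss of the trained detector.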
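The generator objective described above (a GAN loss plus a weighted cross-entropy data term) can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' code: the summary only says the cross entropy is weighted and that w is a sensitivity parameter, so the weighting form used here (w on shadow pixels, 1 - w on non-shadow pixels), the non-saturating adversarial term, the balancing factor `lam`, and the function names are all assumptions.

```python
import numpy as np


def weighted_bce(pred, label, w, eps=1e-7):
    # Assumed weighting: shadow pixels (label == 1) get weight w,
    # non-shadow pixels (label == 0) get weight 1 - w.
    pred = np.clip(pred, eps, 1.0 - eps)  # keep log() finite
    per_pixel = -(w * label * np.log(pred)
                  + (1.0 - w) * (1.0 - label) * np.log(1.0 - pred))
    return float(per_pixel.mean())


def generator_loss(d_fake, pred, label, w, lam=1.0, eps=1e-7):
    # d_fake: discriminator's probability that each generated map is real.
    # Non-saturating adversarial term: -log D(x, G(x, w)).
    adv = -np.mean(np.log(np.clip(d_fake, eps, 1.0)))
    # lam is a hypothetical weight balancing the adversarial and data terms.
    return float(adv + lam * weighted_bce(pred, label, w))


# Toy example: one shadow pixel out of four, uninformative prediction.
label = np.array([[1.0, 0.0], [0.0, 0.0]])
pred = np.full((2, 2), 0.5)
losses = {w: weighted_bce(pred, label, w) for w in (0.2, 0.5, 0.8)}
```

Under this assumed form, raising w penalizes missed shadow pixels more and false alarms less, which is why a single scalar can act as a sensitivity knob.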

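The resulting shadow detector is a single network that can generate shadow maps corresponding to different sensitivity levels, avoiding the need for multiple models and a costly training procedure.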
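Because one trained network covers many sensitivity levels, producing maps at different sensitivities amounts to re-running the generator with a different w. The summary does not say how w enters the generator; the sketch below assumes it is appended to the image as a constant extra input channel, and `toy_generator` is a hypothetical thresholding stand-in for the real deep network, used only so the example runs.

```python
import numpy as np


def add_sensitivity_channel(image, w):
    # image: H x W x C array. Returns H x W x (C + 1), where the extra
    # channel is a constant plane holding the sensitivity value w.
    h, wd, _ = image.shape
    plane = np.full((h, wd, 1), w, dtype=image.dtype)
    return np.concatenate([image, plane], axis=-1)


def toy_generator(x):
    # Hypothetical stand-in for a trained G(x, w): a pixel is marked as
    # shadow when its mean color is darker than w, so a larger w yields
    # more shadow pixels. The real generator is a deep network.
    intensity = x[..., :-1].mean(axis=-1)
    w = x[..., -1]
    return (intensity < w).astype(np.float32)


# One network, several sensitivity levels: only the w channel changes.
rng = np.random.default_rng(0)
image = rng.random((4, 4, 3), dtype=np.float32)
shadow_maps = {w: toy_generator(add_sensitivity_channel(image, w))
               for w in (0.2, 0.5, 0.8)}
```

Only the last input channel changes between calls, so moving along the sensitivity range needs neither retraining nor a second model.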

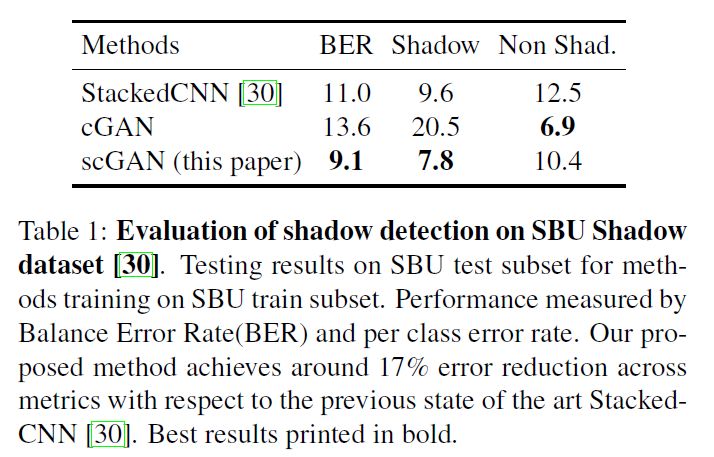

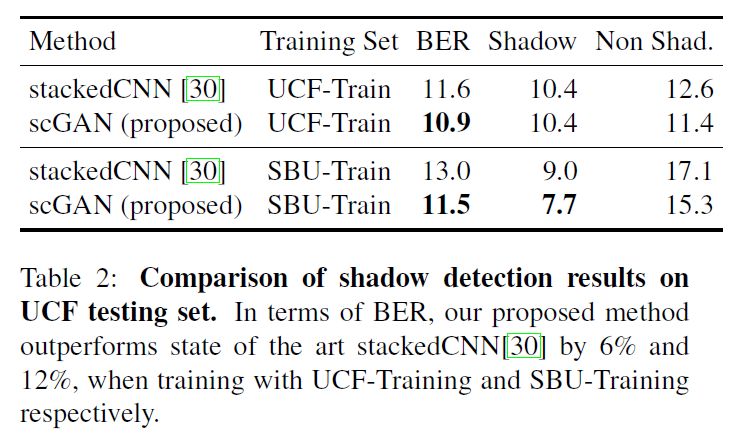

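The method was evaluated on the large-scale SBU and UCF shadow datasets, where it reduced error by up to 17% relative to the previous state-of-the-art method.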

Abstract

We introduce scGAN, a novel extension of conditional Generative Adversarial Networks (GAN) tailored for the challenging problem of shadow detection in images. Previous methods for shadow detection focus on learning the local appearance of shadow regions, while using limited local context reasoning in the form of pairwise potentials in a Conditional Random Field. In contrast, the proposed adversarial approach is able to model higher level relationships and global scene characteristics. We train a shadow detector that corresponds to the generator of a conditional GAN, and augment its shadow accuracy by combining the typical GAN loss with a data loss term. Due to the unbalanced distribution of the shadow labels, we use weighted cross entropy. With the standard GAN architecture, properly setting the weight for the cross entropy would require training multiple GANs, a computationally expensive grid procedure. In scGAN, we introduce an additional sensitivity parameter w to the generator. The proposed approach effectively parameterizes the loss of the trained detector. The resulting shadow detector is a single network that can generate shadow maps corresponding to different sensitivity levels, obviating the need for multiple models and a costly training procedure. We evaluate our method on the large-scale SBU and UCF shadow datasets, and observe up to 17% error reduction with respect to the previous state-of-the-art method.
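Since the detector is the generator of a conditional GAN, training also involves a discriminator that judges (image, shadow map) pairs. As a generic reference point, the sketch below shows the standard conditional-GAN discriminator objective in NumPy. The abstract does not describe scGAN's discriminator, so pairing the inputs by channel concatenation, leaving w out of the discriminator's input, and the helper names `make_pair` and `discriminator_loss` are all assumptions.

```python
import numpy as np


def make_pair(image, shadow_map):
    # Conditional input for D: stack the image (H x W x C) and a shadow
    # map (H x W) along the channel axis -> H x W x (C + 1).
    return np.concatenate([image, shadow_map[..., None]], axis=-1)


def discriminator_loss(d_real, d_fake, eps=1e-7):
    # d_real: D's probabilities on (image, ground-truth map) pairs.
    # d_fake: D's probabilities on (image, generated map) pairs.
    # D is pushed toward 1 on real pairs and toward 0 on generated ones.
    d_real = np.clip(d_real, eps, 1.0 - eps)
    d_fake = np.clip(d_fake, eps, 1.0 - eps)
    return float(-(np.mean(np.log(d_real)) + np.mean(np.log(1.0 - d_fake))))
```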

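If you are interested in this paper and want to download the full text, follow the 【泡泡机器人SLAM】 (PaoPao Robot SLAM) WeChat official account (paopaorobot_slam).

Welcome to the PaoPao Forum, where experts answer any questions you have about SLAM.

Whether you have a question to ask or want to answer other people's questions, the PaoPao Forum welcomes you!

PaoPao website: www.paopaorobot.org

PaoPao forum: http://paopaorobot.org/forums/

All original content from 泡泡机器人SLAM is produced with great effort by members of PaoPao Robot, so please respect our work. When reposting, you must credit the 【泡泡机器人SLAM】 WeChat official account; infringements will be pursued. We also welcome you to share our posts on your own WeChat Moments so that more people can enter the field of SLAM. Let us work together to advance SLAM in China!

For business cooperation and reposting, contact liufuqiang_robot@hotmail.com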