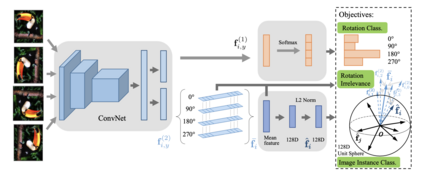

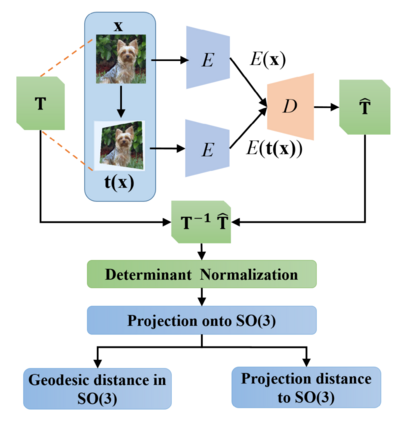

Deep neural networks need huge amounts of training data, while in the real world data available for training is scarce. To address this issue, self-supervised learning (SSL) methods are used. SSL using geometric transformations (GT) is a simple yet powerful technique used in unsupervised representation learning. Although multiple survey papers have reviewed SSL techniques, none focuses exclusively on those that use geometric transformations. Furthermore, the papers that do review such methods have not covered them in depth. Our motivation for presenting this work is that geometric transformations have been shown to be powerful supervisory signals in unsupervised representation learning. Moreover, many such works have achieved tremendous success but have not gained much attention. We present a concise survey of SSL approaches that use geometric transformations. We shortlist six representative models that use image transformations, including those based on predicting and autoencoding transformations. We review their architectures as well as their learning methodologies. We also compare the performance of these models on the object recognition task on the CIFAR-10 and ImageNet datasets. Our analysis indicates that AETv2 performs best in most settings. Rotation with feature decoupling also performs well in some settings. We then derive insights from the observed results. Finally, we conclude with a summary of the results and insights, highlight open problems to be addressed, and indicate various future directions.
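To make the idea of a geometric transformation acting as a supervisory signal concrete, the following is a minimal sketch of how a rotation-prediction pretext task might build its training batch: each unlabeled image is rotated by 0, 90, 180, and 270 degrees, and the rotation index serves as a free label. The function name `make_rotation_batch` and the assumption of square images in `(N, H, W, C)` layout are illustrative choices, not details taken from the surveyed models.

```python
import numpy as np


def make_rotation_batch(images):
    """Build a rotation-prediction batch from unlabeled images.

    Assumes `images` has shape (N, H, W, C) with H == W (hypothetical layout).
    Returns the rotated images, shape (4N, H, W, C), and the rotation labels,
    shape (4N,), where label k means a rotation of k * 90 degrees.
    """
    rotated, labels = [], []
    for k in range(4):
        # Rotate every image in the batch by k * 90 degrees in the H-W plane.
        rotated.append(np.rot90(images, k=k, axes=(1, 2)))
        # The label is the rotation index; no human annotation is needed.
        labels.append(np.full(len(images), k, dtype=np.int64))
    return np.concatenate(rotated), np.concatenate(labels)


# Usage: two dummy 4x4 RGB "images" stand in for an unlabeled dataset.
images = np.arange(2 * 4 * 4 * 3, dtype=np.float32).reshape(2, 4, 4, 3)
x, y = make_rotation_batch(images)
```

A classifier trained to predict `y` from `x` would then have to pick up on object orientation and shape, which is the intuition behind using such transformations for representation learning.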
