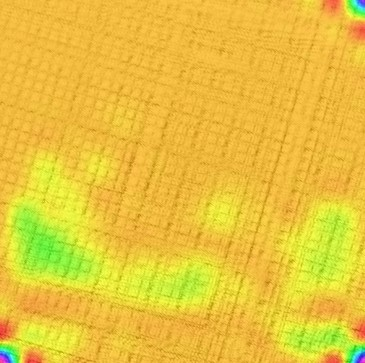

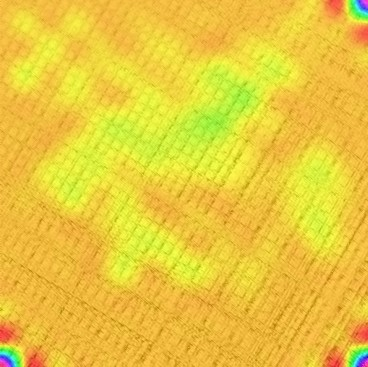

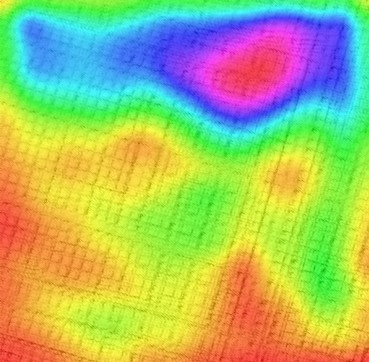

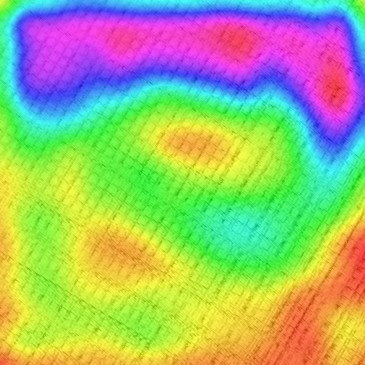

In Digital Holography (DH), extracting the object distance from a hologram is crucial for reconstructing the object's amplitude and phase. This step is called auto-focusing. It is conventionally solved by first reconstructing a stack of images at different distances and then scoring the sharpness of each reconstruction with a focus metric such as entropy or variance. The distance that gives the sharpest image is taken as the focal position. This approach is effective but computationally demanding and time-consuming. In this paper, the distance is determined by Deep Learning (DL). Two DL architectures are compared: a Convolutional Neural Network (CNN) and a Vision Transformer (ViT). Both treat auto-focusing as a classification problem. A first attempt [11] used a spacing of 100 μm between consecutive classes, and our proposal drastically reduces this spacing to 1 μm. Moreover, ViT reaches accuracy similar to CNN and is more robust.
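The conventional stack-based search described above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' code. It assumes the complex field at the sensor plane is already available (for example after off-axis filtering). It uses angular-spectrum propagation as the reconstruction step. All function names (`angular_spectrum`, `amplitude_variance`, `intensity_entropy`, `autofocus`, `distance_to_class`) are hypothetical. Whether a metric should be maximised or minimised depends on the object. The sketch maximises amplitude variance, a common choice for amplitude objects. The last helper shows how a continuous distance could be turned into a class label with 1 μm spacing, the kind of discretisation a classifier needs.

```python
import numpy as np


def angular_spectrum(field, wavelength, dx, z):
    """Propagate a sampled complex field over distance z (angular spectrum method).

    Assumes square pixels of pitch dx; all lengths in metres.
    Evanescent spatial frequencies are discarded.
    """
    ny, nx = field.shape
    fx = np.fft.fftfreq(nx, d=dx)
    fy = np.fft.fftfreq(ny, d=dx)
    FX, FY = np.meshgrid(fx, fy)  # shape (ny, nx), matching the field
    arg = 1.0 - (wavelength * FX) ** 2 - (wavelength * FY) ** 2
    propagating = arg > 0
    kz = (2 * np.pi / wavelength) * np.sqrt(np.where(propagating, arg, 0.0))
    transfer = np.where(propagating, np.exp(1j * kz * z), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)


def amplitude_variance(u):
    """Variance focus metric: variance of the reconstructed amplitude |u|."""
    return float(np.var(np.abs(u)))


def intensity_entropy(u):
    """Entropy focus metric: Shannon entropy of the normalised intensity."""
    p = np.abs(u) ** 2
    p = p / p.sum()
    p = p[p > 0]  # 0 * log(0) is taken as 0
    return float(-(p * np.log(p)).sum())


def autofocus(field, wavelength, dx, distances, metric=amplitude_variance, maximize=True):
    """Reconstruct one image per candidate distance and return the best-scoring distance."""
    scores = np.array([metric(angular_spectrum(field, wavelength, dx, z)) for z in distances])
    best = int(np.argmax(scores) if maximize else np.argmin(scores))
    return distances[best], scores


def distance_to_class(z, z_min, step=1e-6):
    """Hypothetical label mapping: class index of distance z for classes `step` apart."""
    return int(round((z - z_min) / step))
```

Consider a binary amplitude object that is propagated back by 1 mm (wavelength 633 nm, 5 μm pixels). Here, `autofocus(field, 633e-9, 5e-6, np.linspace(0.5e-3, 1.5e-3, 21))` is expected to return a distance of about 1 mm. Each candidate distance costs two 2-D FFTs, so the cost grows linearly with the size of the stack. This is the burden that a single forward pass of a classifier avoids.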

翻译:在数字全息学(DH)中,从全息图中提取物体距离至关重要,以便重建其振幅和阶段。这个步骤被称为自动聚焦,通常通过首先重建一堆图像,然后使用一个聚焦度度(如英特罗比或变异)使每个重建的图像更加精细来解决这个问题。与最锋利图像相对应的距离被认为是焦距。这个方法虽然有效,但具有计算上的要求和耗时性。在本文中,距离的确定由深度学习(DL)进行。两个深度学习(DL)结构是比较的:进化神经网络(CNN)和视觉变异器(ViT)。 ViT和CNN用来处理作为分类问题的自动定向问题。与第一个尝试(11)相比,两个连续班之间的距离是100 mmu}m,我们的建议使我们能够将距离大幅缩短到1 um 。此外,ViT的精确度比CNN更强。