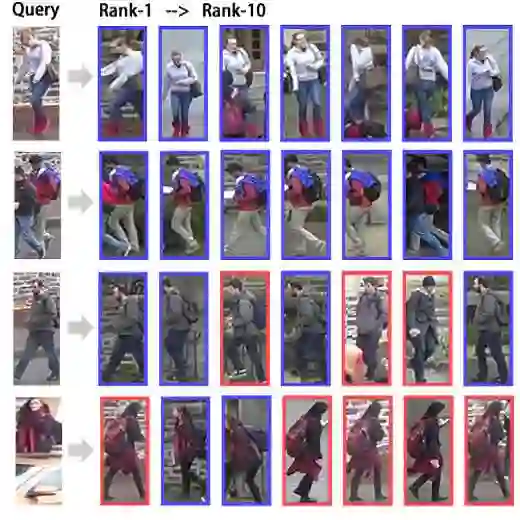

Text-to-Image Person Retrieval (TIPR) is a cross-modal matching task that aims to retrieve the most relevant person images for a given text query. The key challenge in TIPR is aligning the textual and visual modalities effectively within a common latent space. To address this challenge, prior approaches incorporate attention mechanisms for implicit cross-modal local alignment. However, they cannot verify whether all local features are correctly aligned. Moreover, existing methods primarily focus on hard negative samples during model updates in order to refine the distinction between positive and negative pairs, and they often neglect incorrectly matched positive pairs. To alleviate these issues, we propose FMFA, a cross-modal Full-Mode Fine-grained Alignment framework, which enhances global matching by combining explicit fine-grained alignment with existing implicit relational reasoning (hence the term "full-mode"), without requiring additional supervision. Specifically, we design an Adaptive Similarity Distribution Matching (A-SDM) module to rectify unmatched positive sample pairs. A-SDM adaptively pulls unmatched positive pairs closer in the joint embedding space, thereby achieving more precise global alignment. Additionally, we introduce an Explicit Fine-grained Alignment (EFA) module, which compensates for the inability of implicit relational reasoning to verify its alignments. EFA strengthens explicit cross-modal fine-grained interactions by sparsifying the similarity matrix, and it employs a hard coding method for local alignment. Our proposed method is evaluated on three public datasets and achieves state-of-the-art performance among all global matching methods. Our code is available at https://github.com/yinhao1102/FMFA.
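
To make the idea of sparsifying a similarity matrix and then hard-assigning local features more concrete, the following is a minimal NumPy sketch. It is not the authors' EFA implementation: the function name `sparse_hard_alignment`, the `keep_ratio` parameter, the per-token top-k sparsification rule, and the argmax assignment are all assumptions chosen for illustration. The abstract does not specify these details; the released repository is the authoritative source.

```python
import numpy as np


def l2_normalize(x, axis=-1, eps=1e-8):
    """Scale vectors to unit length so dot products become cosine similarities."""
    return x / (np.linalg.norm(x, axis=axis, keepdims=True) + eps)


def sparse_hard_alignment(text_tokens, image_patches, keep_ratio=0.5):
    """Illustrative token-to-patch alignment (hypothetical, not the paper's code).

    text_tokens:   array of shape (T, D), one row per text token feature
    image_patches: array of shape (P, D), one row per image patch feature
    keep_ratio:    assumed fraction of patches kept per token when sparsifying
    """
    sim = l2_normalize(text_tokens) @ l2_normalize(image_patches).T  # (T, P)

    # Sparsify: for each token keep only its k most similar patches.
    k = max(1, int(round(keep_ratio * sim.shape[1])))
    kth_largest = np.sort(sim, axis=1)[:, -k][:, None]
    keep = sim >= kth_largest
    sparse_sim = np.where(keep, sim, 0.0)

    # Hard assignment: each token is tied to its single best surviving patch.
    # Dropped entries are masked with -inf so they can never win the argmax.
    assignment = np.where(keep, sim, -np.inf).argmax(axis=1)  # (T,)

    # One-hot selection of the assigned patch feature for every token.
    one_hot = np.zeros_like(sim)
    one_hot[np.arange(sim.shape[0]), assignment] = 1.0
    aligned_patches = one_hot @ image_patches  # (T, D)

    return sparse_sim, assignment, aligned_patches
```

In a training setup, the aligned patch features could then be compared against the token features with a local alignment loss. That step is omitted here because the abstract does not describe it.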
