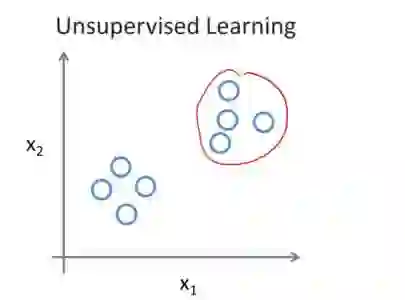

Unsupervised learning makes it possible to build algorithms that adapt to a variety of data sets using the same underlying rules, because discriminative features are discovered autonomously during training. Recently, a new class of local, Hebbian-like unsupervised learning rules has been developed for neural networks; these rules minimise a similarity-matching cost function and have been shown to perform sparse representation learning. This study tests how effectively one such rule learns features from images. The rule implemented is derived from a nonnegative classical multidimensional scaling cost function and is applied to both single-layer and multi-layer architectures. The learned features are then used as input to a support vector machine (SVM) to evaluate their effectiveness for classification on the established CIFAR-10 image dataset. The algorithm performs well in comparison with other unsupervised learning algorithms and multi-layer networks, suggesting that it is a viable basis for a new class of compact, online learning networks.
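To make the idea of a local, Hebbian-like similarity-matching rule more concrete, here is a minimal NumPy sketch. It assumes the standard nonnegative similarity-matching objective, which matches the input Gram matrix to the output Gram matrix ($\min_{Y \ge 0} \lVert X^\top X - Y^\top Y \rVert_F^2$), and the online Hebbian (feedforward `W`) and anti-Hebbian (lateral `M`) updates of its min-max formulation. The function names `nsm_outputs` and `train_nsm`, the learning rate, and the initialisation are hypothetical choices for illustration; the abstract does not specify the exact rule, hyperparameters, or preprocessing used in the study.

```python
import numpy as np

def nsm_outputs(x, W, M, n_iter=100, tol=1e-6):
    """Hypothetical helper: solve y = argmin_{y >= 0} -2 y^T W x + y^T M y.

    Projected coordinate descent stands in for the recurrent neural
    dynamics; each output neuron receives feedforward drive W x and
    lateral inhibition from the other neurons through M.
    """
    k = W.shape[0]
    y = np.zeros(k)
    drive = W @ x  # feedforward input to each output neuron
    for _ in range(n_iter):
        y_old = y.copy()
        for i in range(k):
            # Lateral input from all other neurons (self-connection excluded)
            lateral = M[i] @ y - M[i, i] * y[i]
            # Rectification enforces the nonnegativity constraint
            y[i] = max(0.0, (drive[i] - lateral) / M[i, i])
        if np.max(np.abs(y - y_old)) < tol:
            break
    return y

def train_nsm(X, k, eta=0.01, epochs=1, seed=0):
    """Hypothetical online training loop.

    X has shape (n_samples, d), e.g. flattened image patches (assumed).
    """
    rng = np.random.default_rng(seed)
    d = X.shape[1]
    W = rng.normal(scale=1.0 / np.sqrt(d), size=(k, d))  # feedforward weights
    M = np.eye(k)  # lateral weights; starts positive definite
    for _ in range(epochs):
        for t in rng.permutation(len(X)):
            x = X[t]
            y = nsm_outputs(x, W, M)
            W += eta * (np.outer(y, x) - W)  # Hebbian: local pre/post activity
            M += eta * (np.outer(y, y) - M)  # anti-Hebbian: output correlations
    return W, M
```

Both updates are local: each weight changes using only the activities of the two neurons it connects, plus a decay term. Because `M` starts as the identity and is updated as a convex combination with the positive semidefinite matrix `y yᵀ`, it stays positive definite, so the division by `M[i, i]` is safe. In a pipeline like the one described, the outputs `y` computed with the learned `W` and `M` would serve as the feature vectors passed to an SVM classifier; the image preprocessing and the multi-layer stacking are not shown here.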
