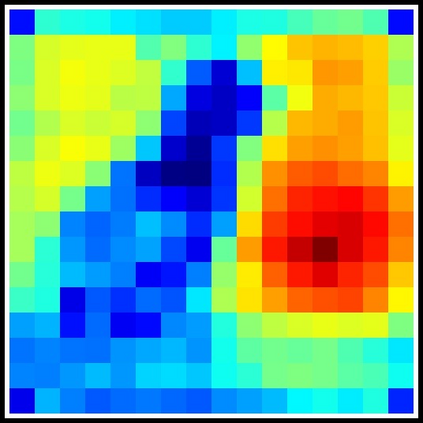

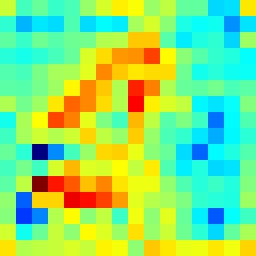

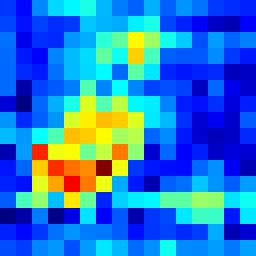

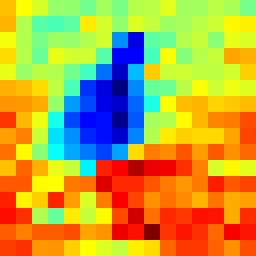

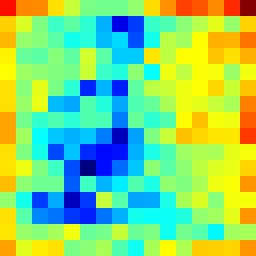

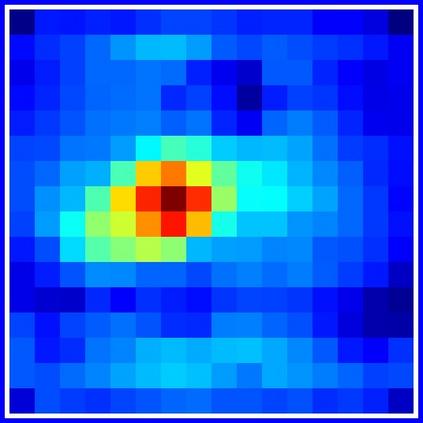

Learning representations with self-supervision for convolutional networks (CNN) has proven effective for vision tasks. As an alternative for CNN, vision transformers (ViTs) emerge strong representation ability with the pixel-level self-attention and channel-level feed-forward networks. Recent works reveal that self-supervised learning helps unleash the great potential of ViTs. Still, most works follow self-supervised strategy designed for CNNs, e.g., instance-level discrimination of samples, but they ignore the unique properties of ViTs. We observe that modeling relations among pixels and channels distinguishes ViTs from other networks. To enforce this property, we explore the feature self-relations for training self-supervised ViTs. Specifically, instead of conducting self-supervised learning solely on feature embeddings from multiple views, we utilize the feature self-relations, i.e., pixel/channel-level self-relations, for self-supervised learning. Self-relation based learning further enhance the relation modeling ability of ViTs, resulting in strong representations that stably improve performance on multiple downstream tasks. Our source code will be made publicly available.

翻译:革命网络(CNN)自我监督的学习表现证明对愿景任务有效。作为CNN的替代方案,视觉变压器(VYTs)在像素级自我关注和频道级向向导网络中表现出强大的代表能力。最近的工作显示,自我监督的学习有助于释放VTs的巨大潜力。不过,大多数工作都遵循为CNN设计的自我监督战略,例如,样本的例级差别,但忽视VTs的独特性。我们观察到,像素和频道之间的建模关系使VITs与其他网络不同。为了执行这一特性,我们探索了培训自我监督的VITs的特征自我关系。具体地说,我们不是仅仅对嵌入多种观点的特征进行自我监督的学习,而是利用为CNNs设计的特征自我监督的自我关系,例如,像素级/网络级自我关系,而忽视VTs的独特性。我们发现,基于建模的自我关系和频道之间的建模关系将进一步加强VTs的关系建模能力,从而在下游进行强有力的表现。