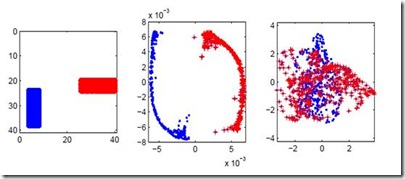

Learning a new task from a handful of examples remains an open challenge in machine learning. Despite recent progress in few-shot learning, most methods rely on supervised pretraining or meta-learning on labeled meta-training data and cannot be applied when the pretraining data is unlabeled. In this study, we present an unsupervised few-shot learning method via deep Laplacian eigenmaps. Our method learns representations from unlabeled data by grouping similar samples together and can be intuitively interpreted in terms of random walks on augmented training data. We analytically show how deep Laplacian eigenmaps avoid collapsed representations in unsupervised learning without explicit comparison between positive and negative samples. The proposed method significantly narrows the performance gap between supervised and unsupervised few-shot learning. It also achieves performance comparable to current state-of-the-art self-supervised learning methods under the linear evaluation protocol.
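For readers unfamiliar with the underlying idea, the following is a minimal sketch of classical (non-deep) Laplacian eigenmaps, not the method proposed in this work. It assumes a dense Gaussian affinity graph over the samples; the function name `laplacian_eigenmaps`, the bandwidth `sigma`, and the toy two-cluster data are illustrative choices made here rather than anything specified in the abstract. The embedding consists of the leading non-trivial eigenvectors of the random-walk transition matrix $D^{-1}W$, which is one way to see the connection between grouping similar samples and random walks on a graph.

```python
import numpy as np


def laplacian_eigenmaps(X, n_components=2, sigma=1.0):
    """Classical Laplacian eigenmaps on a dense Gaussian affinity graph."""
    # Pairwise squared Euclidean distances (clipped to avoid tiny negatives).
    sq = np.sum(X ** 2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)

    # Gaussian affinities between samples, without self-loops.
    W = np.exp(-d2 / (2.0 * sigma ** 2))
    np.fill_diagonal(W, 0.0)

    # Symmetric normalized Laplacian: L = I - D^{-1/2} W D^{-1/2}.
    deg = W.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    L = np.eye(len(X)) - d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]

    # eigh returns eigenvalues in ascending order for symmetric matrices.
    eigvals, eigvecs = np.linalg.eigh(L)

    # Skip the trivial eigenvector (eigenvalue close to 0) and rescale by
    # D^{-1/2} to obtain eigenvectors of the random-walk matrix D^{-1} W.
    emb = d_inv_sqrt[:, None] * eigvecs[:, 1:n_components + 1]
    return emb, eigvals


# Toy data (assumed for illustration): two well-separated 2-D clusters.
rng = np.random.default_rng(0)
cluster_a = rng.normal(loc=[0.0, 0.0], scale=0.2, size=(20, 2))
cluster_b = rng.normal(loc=[4.0, 0.0], scale=0.2, size=(20, 2))
X = np.vstack([cluster_a, cluster_b])

emb, eigvals = laplacian_eigenmaps(X, n_components=1)
# Samples from the same cluster receive embedding values of the same sign.
print(np.sign(emb[:, 0]))
```

In this classical form the embedding is obtained from an explicit eigendecomposition, and the orthogonality of the eigenvectors is what prevents all samples from being mapped to a single point. A deep variant would presumably replace the eigendecomposition with a trained network, but the abstract does not give those details, so they are not sketched here.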
