TensorFlow 2.0 Official Transformer Tutorial (Attention Is All You Need)

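[Overview] The Transformer is one of the classic machine translation models. Beyond its contribution to machine translation, it provides a high-performance, parallelizable, attention-based sequence model that can replace LSTMs in many settings. This article walks through the official TensorFlow 2.0 Transformer tutorial, with figures.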

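The Transformer is the translation model introduced in the paper "Attention Is All You Need". This article presents a TensorFlow 2.0 implementation that uses TensorFlow 2.0 features such as TFDS (TensorFlow Datasets). From preprocessing through model construction, these features substantially reduce the implementation effort, and the resulting code is readable and easy to debug.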

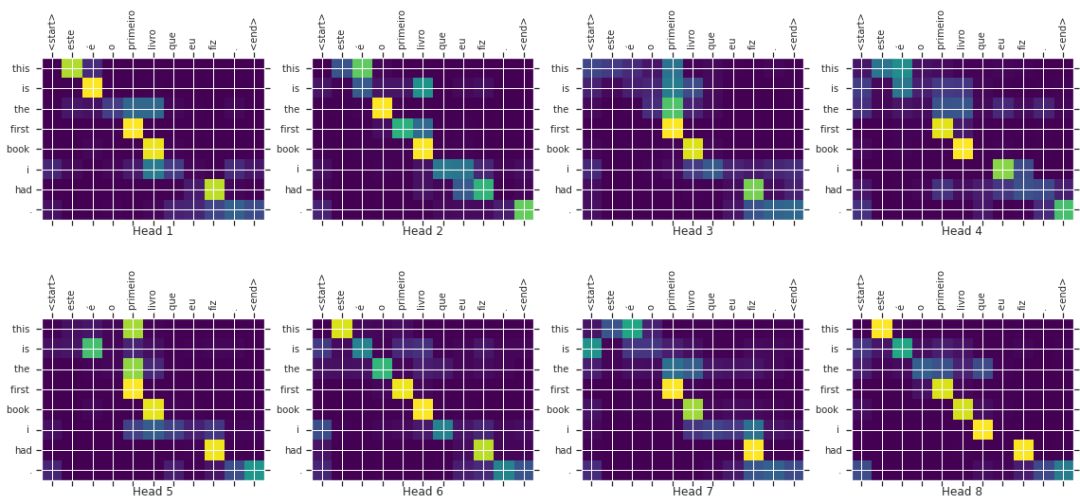

The Transformer described here translates Portuguese input into English and captures the attention relationships between the source and target languages:

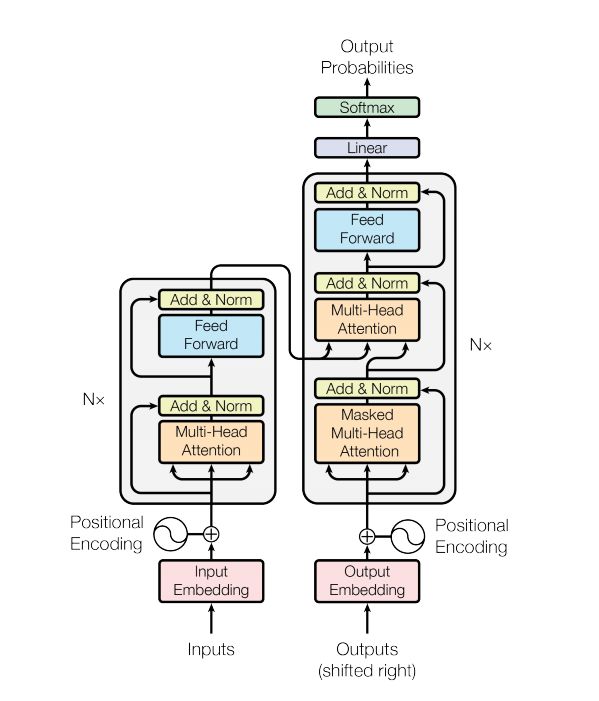

The code follows the model architecture from "Attention Is All You Need", including its classic building blocks: positional encoding, scaled dot-product attention, and multi-head attention.

If you have a Google account, you can try it directly in Colab:

https://colab.research.google.com/github/tensorflow/docs/blob/master/site/en/r2/tutorials/sequences/transformer.ipynb

The tutorial code is as follows:

# -*- coding: utf-8 -*-
from __future__ import absolute_import, division, print_function, unicode_literals

import tensorflow_datasets as tfds
import tensorflow as tf

import time
import numpy as np
import matplotlib.pyplot as plt

# Load the Portuguese-English translation dataset with
# TFDS (https://www.tensorflow.org/datasets).
# The dataset contains roughly 50,000 training examples, 1,100 validation
# examples, and 2,000 test examples.
examples, metadata = tfds.load('ted_hrlr_translate/pt_to_en', with_info=True,
                               as_supervised=True)
train_examples, val_examples = examples['train'], examples['validation']

# Build a custom subword tokenizer for each language from the training set.
tokenizer_en = tfds.features.text.SubwordTextEncoder.build_from_corpus(
    (en.numpy() for pt, en in train_examples), target_vocab_size=2**13)

tokenizer_pt = tfds.features.text.SubwordTextEncoder.build_from_corpus(
    (pt.numpy() for pt, en in train_examples), target_vocab_size=2**13)

sample_string = 'Transformer is awesome.'

tokenized_string = tokenizer_en.encode(sample_string)
print('Tokenized string is {}'.format(tokenized_string))

original_string = tokenizer_en.decode(tokenized_string)
print('The original string: {}'.format(original_string))

assert original_string == sample_string

# Show the subword that each token id decodes to.
for ts in tokenized_string:
    print('{} ----> {}'.format(ts, tokenizer_en.decode([ts])))

BUFFER_SIZE = 20000
BATCH_SIZE = 64

# Add start and end tokens to the input and the target.
def encode(lang1, lang2):
    lang1 = [tokenizer_pt.vocab_size] + tokenizer_pt.encode(
        lang1.numpy()) + [tokenizer_pt.vocab_size+1]

    lang2 = [tokenizer_en.vocab_size] + tokenizer_en.encode(
        lang2.numpy()) + [tokenizer_en.vocab_size+1]

    return lang1, lang2

MAX_LENGTH = 40

# Keep only pairs whose source and target both have at most max_length tokens.
def filter_max_length(x, y, max_length=MAX_LENGTH):
    return tf.logical_and(tf.size(x) <= max_length,
                          tf.size(y) <= max_length)

# Wrap the Python-side encode() so that it can be used in Dataset.map.
def tf_encode(pt, en):
    return tf.py_function(encode, [pt, en], [tf.int64, tf.int64])
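The start/end-token convention used by `encode` can be illustrated without TensorFlow. The following is a minimal sketch in plain Python; the vocabulary size of 8000 and the token ids are made-up values for illustration (the real values come from the subword tokenizers), and `add_start_end` and `within_max_length` are hypothetical helper names.

```python
def add_start_end(token_ids, vocab_size):
    """Wrap token ids with a start id (vocab_size) and an end id (vocab_size + 1)."""
    return [vocab_size] + list(token_ids) + [vocab_size + 1]


def within_max_length(src, tgt, max_length=40):
    """Mirror of the length filter: both sequences must fit in max_length."""
    return len(src) <= max_length and len(tgt) <= max_length


vocab_size = 8000  # hypothetical; the listing reads this from the tokenizer
encoded = add_start_end([17, 42, 5], vocab_size)
print(encoded)  # [8000, 17, 42, 5, 8001]
print(within_max_length(encoded, encoded))  # True
```

Because the two special ids sit just past the tokenizer's own id range, the model's embedding tables need `vocab_size + 2` entries, which matches how the listing later sets `input_vocab_size` and `target_vocab_size`.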

train_dataset = train_examples.map(tf_encode)
train_dataset = train_dataset.filter(filter_max_length)
# Cache the dataset in memory to speed up reads.
train_dataset = train_dataset.cache()
train_dataset = train_dataset.shuffle(BUFFER_SIZE).padded_batch(
    BATCH_SIZE, padded_shapes=([-1], [-1]))
train_dataset = train_dataset.prefetch(tf.data.experimental.AUTOTUNE)

val_dataset = val_examples.map(tf_encode)
val_dataset = val_dataset.filter(filter_max_length).padded_batch(
    BATCH_SIZE, padded_shapes=([-1], [-1]))

# Inspect one validation batch: Portuguese inputs and English targets.
pt_batch, en_batch = next(iter(val_dataset))
pt_batch, en_batch

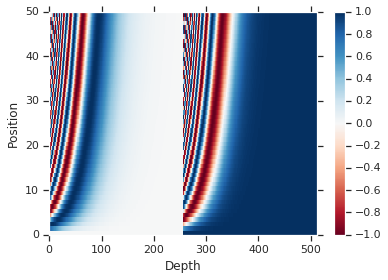
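Padded batching pads every sequence in a batch with zeros up to the length of the longest one, which is why id 0 is later treated as padding by the masks and the loss. A rough NumPy sketch of that idea follows; `pad_batch` is a hypothetical helper, not part of `tf.data`, and the token ids are invented.

```python
import numpy as np


def pad_batch(sequences, pad_id=0):
    """Right-pad variable-length id lists with pad_id into one 2-D array."""
    max_len = max(len(s) for s in sequences)
    batch = np.full((len(sequences), max_len), pad_id, dtype=np.int64)
    for row, seq in enumerate(sequences):
        batch[row, :len(seq)] = seq
    return batch


batch = pad_batch([[8000, 3, 8001], [8000, 9, 4, 7, 8001]])
print(batch.shape)  # (2, 5)
print(batch)
```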

# Positional encoding

def get_angles(pos, i, d_model):
    angle_rates = 1 / np.power(10000, (2 * (i//2)) / np.float32(d_model))
    return pos * angle_rates

def positional_encoding(position, d_model):
    angle_rads = get_angles(np.arange(position)[:, np.newaxis],
                            np.arange(d_model)[np.newaxis, :],
                            d_model)

    # apply sin to even indices in the array; 2i
    sines = np.sin(angle_rads[:, 0::2])

    # apply cos to odd indices in the array; 2i+1
    cosines = np.cos(angle_rads[:, 1::2])

    # The sine and cosine halves are concatenated along the depth axis.
    pos_encoding = np.concatenate([sines, cosines], axis=-1)

    pos_encoding = pos_encoding[np.newaxis, ...]

    return tf.cast(pos_encoding, dtype=tf.float32)

pos_encoding = positional_encoding(50, 512)
print(pos_encoding.shape)

plt.pcolormesh(pos_encoding[0], cmap='RdBu')
plt.xlabel('Depth')
plt.xlim((0, 512))
plt.ylabel('Position')
plt.colorbar()
plt.show()
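The listing above concatenates the sine and cosine halves, whereas the paper's formulation places sines on even depth indices and cosines on odd ones. As a hedged illustration of that interleaved layout, here is a NumPy-only sketch; `sinusoidal_encoding` is a hypothetical name and this is not the tutorial's function.

```python
import numpy as np


def sinusoidal_encoding(num_positions, d_model):
    """Interleaved variant: sin on even depth indices, cos on odd ones."""
    pos = np.arange(num_positions)[:, np.newaxis]  # (num_positions, 1)
    i = np.arange(d_model)[np.newaxis, :]          # (1, d_model)
    angles = pos / np.power(10000.0, (2 * (i // 2)) / d_model)
    pe = np.zeros((num_positions, d_model))
    pe[:, 0::2] = np.sin(angles[:, 0::2])
    pe[:, 1::2] = np.cos(angles[:, 1::2])
    return pe


pe = sinusoidal_encoding(50, 512)
print(pe.shape)  # (50, 512)
# At position 0 every angle is 0, so even dims are sin(0) = 0 and odd dims are cos(0) = 1.
print(pe[0, :4])
```

Both layouts contain the same values; they differ only in how the columns are ordered along the depth axis.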

def create_padding_mask(seq):
    # 1.0 marks padding positions (token id 0); 0.0 marks real tokens.
    seq = tf.cast(tf.math.equal(seq, 0), tf.float32)

    # add extra dimensions so that we can add the padding
    # to the attention logits.
    return seq[:, tf.newaxis, tf.newaxis, :]  # (batch_size, 1, 1, seq_len)

x = tf.constant([[7, 6, 0, 0, 1], [1, 2, 3, 0, 0], [0, 0, 0, 4, 5]])
create_padding_mask(x)

def create_look_ahead_mask(size):
    # Ones strictly above the diagonal mark future positions to be masked.
    mask = 1 - tf.linalg.band_part(tf.ones((size, size)), -1, 0)
    return mask  # (seq_len, seq_len)

x = tf.random.uniform((1, 3))
temp = create_look_ahead_mask(x.shape[1])
temp
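To see how the two masks interact, the sketch below rebuilds them in NumPy and combines them with an element-wise maximum, which is how the decoder's self-attention mask is formed later in the listing. The function names here are illustrative stand-ins, not the tutorial's TensorFlow functions.

```python
import numpy as np


def padding_mask(seq):
    """1.0 where the token id is 0 (padding); shape (batch, 1, 1, seq_len)."""
    return (seq == 0).astype(np.float32)[:, np.newaxis, np.newaxis, :]


def look_ahead_mask(size):
    """1.0 strictly above the diagonal: position i may not attend to j > i."""
    return np.triu(np.ones((size, size), dtype=np.float32), k=1)


seq = np.array([[7, 6, 0, 0]])
# Broadcasting (1, 1, 1, 4) against (4, 4) gives (1, 1, 4, 4).
combined = np.maximum(padding_mask(seq), look_ahead_mask(seq.shape[1]))
print(combined.shape)  # (1, 1, 4, 4)
print(combined[0, 0])
```

A position is masked if either mask marks it, so the first row can only see token 0, and no row can see the two padded positions.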

# Scaled dot-product attention

def scaled_dot_product_attention(q, k, v, mask):
    matmul_qk = tf.matmul(q, k, transpose_b=True)  # (..., seq_len_q, seq_len_k)

    # scale matmul_qk
    dk = tf.cast(tf.shape(k)[-1], tf.float32)
    scaled_attention_logits = matmul_qk / tf.math.sqrt(dk)

    # add the mask to the scaled tensor.
    if mask is not None:
        scaled_attention_logits += (mask * -1e9)

    # softmax is normalized on the last axis (seq_len_k) so that the scores
    # add up to 1.
    attention_weights = tf.nn.softmax(scaled_attention_logits, axis=-1)  # (..., seq_len_q, seq_len_k)

    output = tf.matmul(attention_weights, v)  # (..., seq_len_q, depth_v)

    return output, attention_weights

def print_out(q, k, v):
    temp_out, temp_attn = scaled_dot_product_attention(
        q, k, v, None)
    print('Attention weights are:')
    print(temp_attn)
    print('Output is:')
    print(temp_out)

np.set_printoptions(suppress=True)

temp_k = tf.constant([[10,0,0],
                      [0,10,0],
                      [0,0,10],
                      [0,0,10]], dtype=tf.float32)  # (4, 3)

temp_v = tf.constant([[   1,0],
                      [  10,0],
                      [ 100,5],
                      [1000,6]], dtype=tf.float32)  # (4, 2)

# This `query` aligns with the second `key`,
# so the second `value` is returned.
temp_q = tf.constant([[0, 10, 0]], dtype=tf.float32)  # (1, 3)
print_out(temp_q, temp_k, temp_v)

# This query aligns with a repeated key (third and fourth),
# so all associated values get averaged.
temp_q = tf.constant([[0, 0, 10]], dtype=tf.float32)  # (1, 3)
print_out(temp_q, temp_k, temp_v)

# This query aligns equally with the first and second key,
# so their values get averaged.
temp_q = tf.constant([[10, 10, 0]], dtype=tf.float32)  # (1, 3)
print_out(temp_q, temp_k, temp_v)

# Pass all three queries together.
temp_q = tf.constant([[0, 0, 10], [0, 10, 0], [10, 10, 0]], dtype=tf.float32)  # (3, 3)
print_out(temp_q, temp_k, temp_v)
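The same computation, softmax(QK^T / sqrt(d_k)) V, can be checked by hand in NumPy. The sketch below is a simplified re-implementation for illustration only; it reuses the key and value matrices from the example above and adds a mask case that the listing does not show.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - np.max(x, axis=axis, keepdims=True)  # subtract the max for stability
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)


def attention(q, k, v, mask=None):
    d_k = k.shape[-1]
    logits = q @ np.swapaxes(k, -1, -2) / np.sqrt(d_k)
    if mask is not None:
        logits = logits + mask * -1e9  # masked keys get a very negative logit
    weights = softmax(logits, axis=-1)
    return weights @ v, weights


k = np.array([[10, 0, 0], [0, 10, 0], [0, 0, 10], [0, 0, 10]], dtype=np.float64)
v = np.array([[1, 0], [10, 0], [100, 5], [1000, 6]], dtype=np.float64)
q = np.array([[0, 0, 10]], dtype=np.float64)

# The query matches the third and fourth keys equally, so their values are averaged.
out, w = attention(q, k, v)
print(out)  # approximately [[550.   5.5]]

# Masking the fourth key leaves only the third value.
masked_out, masked_w = attention(q, k, v, mask=np.array([[0.0, 0.0, 0.0, 1.0]]))
print(masked_out)  # approximately [[100.   5.]]
```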

# Multi-head attention layer

class MultiHeadAttention(tf.keras.layers.Layer):
    def __init__(self, d_model, num_heads):
        super(MultiHeadAttention, self).__init__()
        self.num_heads = num_heads
        self.d_model = d_model

        assert d_model % self.num_heads == 0

        self.depth = d_model // self.num_heads

        self.wq = tf.keras.layers.Dense(d_model)
        self.wk = tf.keras.layers.Dense(d_model)
        self.wv = tf.keras.layers.Dense(d_model)

        self.dense = tf.keras.layers.Dense(d_model)

    def split_heads(self, x, batch_size):
        """Split the last dimension into (num_heads, depth).
        Transpose the result such that the shape is (batch_size, num_heads, seq_len, depth)
        """
        x = tf.reshape(x, (batch_size, -1, self.num_heads, self.depth))
        return tf.transpose(x, perm=[0, 2, 1, 3])

    def call(self, v, k, q, mask):
        batch_size = tf.shape(q)[0]

        q = self.wq(q)  # (batch_size, seq_len, d_model)
        k = self.wk(k)  # (batch_size, seq_len, d_model)
        v = self.wv(v)  # (batch_size, seq_len, d_model)

        q = self.split_heads(q, batch_size)  # (batch_size, num_heads, seq_len_q, depth)
        k = self.split_heads(k, batch_size)  # (batch_size, num_heads, seq_len_k, depth)
        v = self.split_heads(v, batch_size)  # (batch_size, num_heads, seq_len_v, depth)

        # scaled_attention.shape == (batch_size, num_heads, seq_len_q, depth)
        # attention_weights.shape == (batch_size, num_heads, seq_len_q, seq_len_k)
        scaled_attention, attention_weights = scaled_dot_product_attention(
            q, k, v, mask)

        scaled_attention = tf.transpose(scaled_attention, perm=[0, 2, 1, 3])  # (batch_size, seq_len_q, num_heads, depth)

        concat_attention = tf.reshape(scaled_attention,
                                      (batch_size, -1, self.d_model))  # (batch_size, seq_len_q, d_model)

        output = self.dense(concat_attention)  # (batch_size, seq_len_q, d_model)

        return output, attention_weights

temp_mha = MultiHeadAttention(d_model=512, num_heads=8)
y = tf.random.uniform((1, 60, 512))  # (batch_size, encoder_sequence, d_model)
out, attn = temp_mha(y, k=y, q=y, mask=None)
out.shape, attn.shape
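The reshape-and-transpose in `split_heads`, and its inverse before the final dense layer, are easy to get wrong. The NumPy sketch below reproduces just those two steps on a tiny tensor; `merge_heads` is a hypothetical name for the inverse step that the listing performs inline.

```python
import numpy as np


def split_heads(x, num_heads):
    """(batch, seq_len, d_model) -> (batch, num_heads, seq_len, depth)."""
    batch, seq_len, d_model = x.shape
    depth = d_model // num_heads
    x = x.reshape(batch, seq_len, num_heads, depth)
    return x.transpose(0, 2, 1, 3)


def merge_heads(x):
    """(batch, num_heads, seq_len, depth) -> (batch, seq_len, num_heads * depth)."""
    batch, num_heads, seq_len, depth = x.shape
    return x.transpose(0, 2, 1, 3).reshape(batch, seq_len, num_heads * depth)


x = np.arange(2 * 5 * 8, dtype=np.float32).reshape(2, 5, 8)
heads = split_heads(x, num_heads=4)
print(heads.shape)               # (2, 4, 5, 2)
print(merge_heads(heads).shape)  # (2, 5, 8)
```

Each head sees a contiguous slice of the model dimension: head 1 at position 0 holds `x[0, 0, 2:4]`.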

# Point-wise feed-forward network: two dense layers with a ReLU in between.
def point_wise_feed_forward_network(d_model, dff):
    return tf.keras.Sequential([
        tf.keras.layers.Dense(dff, activation='relu'),  # (batch_size, seq_len, dff)
        tf.keras.layers.Dense(d_model)  # (batch_size, seq_len, d_model)
    ])

sample_ffn = point_wise_feed_forward_network(512, 2048)
sample_ffn(tf.random.uniform((64, 50, 512))).shape

# Encoder layer

class EncoderLayer(tf.keras.layers.Layer):
    def __init__(self, d_model, num_heads, dff, rate=0.1):
        super(EncoderLayer, self).__init__()

        self.mha = MultiHeadAttention(d_model, num_heads)
        self.ffn = point_wise_feed_forward_network(d_model, dff)

        self.layernorm1 = tf.keras.layers.LayerNormalization(epsilon=1e-6)
        self.layernorm2 = tf.keras.layers.LayerNormalization(epsilon=1e-6)

        self.dropout1 = tf.keras.layers.Dropout(rate)
        self.dropout2 = tf.keras.layers.Dropout(rate)

    def call(self, x, training, mask):
        attn_output, _ = self.mha(x, x, x, mask)  # (batch_size, input_seq_len, d_model)
        attn_output = self.dropout1(attn_output, training=training)
        out1 = self.layernorm1(x + attn_output)  # (batch_size, input_seq_len, d_model)

        ffn_output = self.ffn(out1)  # (batch_size, input_seq_len, d_model)
        ffn_output = self.dropout2(ffn_output, training=training)
        out2 = self.layernorm2(out1 + ffn_output)  # (batch_size, input_seq_len, d_model)

        return out2

sample_encoder_layer = EncoderLayer(512, 8, 2048)

sample_encoder_layer_output = sample_encoder_layer(
    tf.random.uniform((64, 43, 512)), False, None)

sample_encoder_layer_output.shape  # (batch_size, input_seq_len, d_model)
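Each sublayer in the encoder layer follows the same pattern: add the sublayer output to its input (a residual connection), then apply layer normalization. A simplified NumPy sketch of that pattern follows. It omits dropout and the learnable scale and offset that the Keras `LayerNormalization` layer has, and the sublayer here is a made-up stand-in.

```python
import numpy as np


def layer_norm(x, epsilon=1e-6):
    """Normalize over the last axis (no learnable scale or offset in this sketch)."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + epsilon)


def residual_block(x, sublayer):
    """LayerNorm(x + Sublayer(x)), the wrapper around attention and the FFN."""
    return layer_norm(x + sublayer(x))


x = np.random.RandomState(0).randn(2, 3, 8)  # (batch, seq_len, d_model)
out = residual_block(x, lambda t: 0.5 * t)   # toy stand-in for a sublayer
print(out.shape)  # (2, 3, 8)
```

After normalization, each position's feature vector has mean close to 0 and variance close to 1, whatever the scale of the sublayer output.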

# Decoder layer

class DecoderLayer(tf.keras.layers.Layer):
    def __init__(self, d_model, num_heads, dff, rate=0.1):
        super(DecoderLayer, self).__init__()

        self.mha1 = MultiHeadAttention(d_model, num_heads)
        self.mha2 = MultiHeadAttention(d_model, num_heads)

        self.ffn = point_wise_feed_forward_network(d_model, dff)

        self.layernorm1 = tf.keras.layers.LayerNormalization(epsilon=1e-6)
        self.layernorm2 = tf.keras.layers.LayerNormalization(epsilon=1e-6)
        self.layernorm3 = tf.keras.layers.LayerNormalization(epsilon=1e-6)

        self.dropout1 = tf.keras.layers.Dropout(rate)
        self.dropout2 = tf.keras.layers.Dropout(rate)
        self.dropout3 = tf.keras.layers.Dropout(rate)

    def call(self, x, enc_output, training,
             look_ahead_mask, padding_mask):
        # enc_output.shape == (batch_size, input_seq_len, d_model)

        # Block 1: masked self-attention over the target sequence.
        attn1, attn_weights_block1 = self.mha1(x, x, x, look_ahead_mask)  # (batch_size, target_seq_len, d_model)
        attn1 = self.dropout1(attn1, training=training)
        out1 = self.layernorm1(attn1 + x)

        # Block 2: attention over the encoder output (values and keys),
        # queried by the output of block 1.
        attn2, attn_weights_block2 = self.mha2(
            enc_output, enc_output, out1, padding_mask)  # (batch_size, target_seq_len, d_model)
        attn2 = self.dropout2(attn2, training=training)
        out2 = self.layernorm2(attn2 + out1)  # (batch_size, target_seq_len, d_model)

        ffn_output = self.ffn(out2)  # (batch_size, target_seq_len, d_model)
        ffn_output = self.dropout3(ffn_output, training=training)
        out3 = self.layernorm3(ffn_output + out2)  # (batch_size, target_seq_len, d_model)

        return out3, attn_weights_block1, attn_weights_block2

sample_decoder_layer = DecoderLayer(512, 8, 2048)

sample_decoder_layer_output, _, _ = sample_decoder_layer(
    tf.random.uniform((64, 50, 512)), sample_encoder_layer_output,
    False, None, None)

sample_decoder_layer_output.shape  # (batch_size, target_seq_len, d_model)

# Encoder

class Encoder(tf.keras.layers.Layer):
    def __init__(self, num_layers, d_model, num_heads, dff, input_vocab_size,
                 rate=0.1):
        super(Encoder, self).__init__()

        self.d_model = d_model
        self.num_layers = num_layers

        self.embedding = tf.keras.layers.Embedding(input_vocab_size, d_model)
        self.pos_encoding = positional_encoding(input_vocab_size, self.d_model)

        self.enc_layers = [EncoderLayer(d_model, num_heads, dff, rate)
                           for _ in range(num_layers)]

        self.dropout = tf.keras.layers.Dropout(rate)

    def call(self, x, training, mask):
        seq_len = tf.shape(x)[1]

        # adding embedding and position encoding.
        x = self.embedding(x)  # (batch_size, input_seq_len, d_model)
        x *= tf.math.sqrt(tf.cast(self.d_model, tf.float32))
        x += self.pos_encoding[:, :seq_len, :]

        x = self.dropout(x, training=training)

        for i in range(self.num_layers):
            x = self.enc_layers[i](x, training, mask)

        return x  # (batch_size, input_seq_len, d_model)

sample_encoder = Encoder(num_layers=2, d_model=512, num_heads=8,
                         dff=2048, input_vocab_size=8500)

sample_encoder_output = sample_encoder(tf.random.uniform((64, 62)),
                                       training=False, mask=None)

print(sample_encoder_output.shape)  # (batch_size, input_seq_len, d_model)

# Decoder

class Decoder(tf.keras.layers.Layer):
    def __init__(self, num_layers, d_model, num_heads, dff, target_vocab_size,
                 rate=0.1):
        super(Decoder, self).__init__()

        self.d_model = d_model
        self.num_layers = num_layers

        self.embedding = tf.keras.layers.Embedding(target_vocab_size, d_model)
        self.pos_encoding = positional_encoding(target_vocab_size, self.d_model)

        self.dec_layers = [DecoderLayer(d_model, num_heads, dff, rate)
                           for _ in range(num_layers)]
        self.dropout = tf.keras.layers.Dropout(rate)

    def call(self, x, enc_output, training,
             look_ahead_mask, padding_mask):
        seq_len = tf.shape(x)[1]
        attention_weights = {}

        x = self.embedding(x)  # (batch_size, target_seq_len, d_model)
        x *= tf.math.sqrt(tf.cast(self.d_model, tf.float32))
        x += self.pos_encoding[:, :seq_len, :]

        x = self.dropout(x, training=training)

        for i in range(self.num_layers):
            x, block1, block2 = self.dec_layers[i](x, enc_output, training,
                                                   look_ahead_mask, padding_mask)

            # Keep the attention weights of every layer for later visualization.
            attention_weights['decoder_layer{}_block1'.format(i+1)] = block1
            attention_weights['decoder_layer{}_block2'.format(i+1)] = block2

        # x.shape == (batch_size, target_seq_len, d_model)
        return x, attention_weights

sample_decoder = Decoder(num_layers=2, d_model=512, num_heads=8,
                         dff=2048, target_vocab_size=8000)

output, attn = sample_decoder(tf.random.uniform((64, 26)),
                              enc_output=sample_encoder_output,
                              training=False, look_ahead_mask=None,
                              padding_mask=None)

output.shape, attn['decoder_layer2_block2'].shape

# The Transformer encoder-decoder model

class Transformer(tf.keras.Model):
    def __init__(self, num_layers, d_model, num_heads, dff, input_vocab_size,
                 target_vocab_size, rate=0.1):
        super(Transformer, self).__init__()

        self.encoder = Encoder(num_layers, d_model, num_heads, dff,
                               input_vocab_size, rate)

        self.decoder = Decoder(num_layers, d_model, num_heads, dff,
                               target_vocab_size, rate)

        self.final_layer = tf.keras.layers.Dense(target_vocab_size)

    def call(self, inp, tar, training, enc_padding_mask,
             look_ahead_mask, dec_padding_mask):
        enc_output = self.encoder(inp, training, enc_padding_mask)  # (batch_size, inp_seq_len, d_model)

        # dec_output.shape == (batch_size, tar_seq_len, d_model)
        dec_output, attention_weights = self.decoder(
            tar, enc_output, training, look_ahead_mask, dec_padding_mask)

        final_output = self.final_layer(dec_output)  # (batch_size, tar_seq_len, target_vocab_size)

        return final_output, attention_weights

sample_transformer = Transformer(
    num_layers=2, d_model=512, num_heads=8, dff=2048,
    input_vocab_size=8500, target_vocab_size=8000)

temp_input = tf.random.uniform((64, 62))
temp_target = tf.random.uniform((64, 26))

fn_out, _ = sample_transformer(temp_input, temp_target, training=False,
                               enc_padding_mask=None,
                               look_ahead_mask=None,
                               dec_padding_mask=None)

fn_out.shape  # (batch_size, tar_seq_len, target_vocab_size)

# Hyperparameters

num_layers = 4
d_model = 128
dff = 512
num_heads = 8

# The extra 2 entries account for the start and end tokens.
input_vocab_size = tokenizer_pt.vocab_size + 2
target_vocab_size = tokenizer_en.vocab_size + 2
dropout_rate = 0.1

# Optimizer

class CustomSchedule(tf.keras.optimizers.schedules.LearningRateSchedule):
    def __init__(self, d_model, warmup_steps=4000):
        super(CustomSchedule, self).__init__()

        self.d_model = d_model
        self.d_model = tf.cast(self.d_model, tf.float32)

        self.warmup_steps = warmup_steps

    def __call__(self, step):
        # Linear warmup for warmup_steps, then inverse square-root decay.
        arg1 = tf.math.rsqrt(step)
        arg2 = step * (self.warmup_steps ** -1.5)

        return tf.math.rsqrt(self.d_model) * tf.math.minimum(arg1, arg2)

learning_rate = CustomSchedule(d_model)

optimizer = tf.keras.optimizers.Adam(learning_rate, beta_1=0.9, beta_2=0.98,
                                     epsilon=1e-9)

temp_learning_rate_schedule = CustomSchedule(d_model)

plt.plot(temp_learning_rate_schedule(tf.range(40000, dtype=tf.float32)))
plt.ylabel("Learning Rate")
plt.xlabel("Train Step")
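The schedule computes d_model^-0.5 * min(step^-0.5, step * warmup_steps^-1.5), so the rate rises linearly during warmup and then decays with the inverse square root of the step. As a quick sanity check in plain Python, the sketch below evaluates the formula at a few steps; `transformer_lr` is a hypothetical helper name, and the defaults mirror the listing's `d_model = 128` and `warmup_steps = 4000`.

```python
def transformer_lr(step, d_model=128, warmup_steps=4000):
    """Warmup-then-decay learning rate; step must be >= 1."""
    return d_model ** -0.5 * min(step ** -0.5, step * warmup_steps ** -1.5)


for step in (1, 1000, 4000, 40000):
    print(step, transformer_lr(step))
```

The two arguments of `min` are equal exactly at `step == warmup_steps`, which is where the learning rate peaks.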

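# Loss

# reduction='none' keeps one loss value per token so that padding
# positions can be masked out below.
loss_object = tf.keras.losses.SparseCategoricalCrossentropy(
    from_logits=True, reduction='none')

def loss_function(real, pred):
    # Padding positions (token id 0) do not contribute to the loss.
    mask = tf.math.logical_not(tf.math.equal(real, 0))
    loss_ = loss_object(real, pred)

    mask = tf.cast(mask, dtype=loss_.dtype)
    loss_ *= mask

    return tf.reduce_mean(loss_)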
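The effect of masking padded positions can be seen on a small array. The NumPy sketch below uses made-up per-token loss values. It shows the mean taken over all positions, as `tf.reduce_mean` does in the listing, next to a mean over real tokens only, which is an alternative normalization and not something the listing does.

```python
import numpy as np


def masked_losses(per_token_loss, targets, pad_id=0):
    """Zero out padded positions, then average in two different ways."""
    mask = (targets != pad_id).astype(per_token_loss.dtype)
    masked = per_token_loss * mask
    mean_over_all = masked.mean()               # padded positions count as zeros
    mean_over_real = masked.sum() / mask.sum()  # alternative: real tokens only
    return mean_over_all, mean_over_real


per_token_loss = np.array([[2.0, 4.0, 9.0, 9.0]])  # invented values
targets = np.array([[5, 7, 0, 0]])                 # last two positions are padding
mean_over_all, mean_over_real = masked_losses(per_token_loss, targets)
print(mean_over_all)   # 1.5
print(mean_over_real)  # 3.0
```

In both cases the large loss values at the padded positions are ignored; the two averages differ only in the denominator.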

# Metrics

train_loss = tf.keras.metrics.Mean(name='train_loss')
train_accuracy = tf.keras.metrics.SparseCategoricalAccuracy(
    name='train_accuracy')

transformer = Transformer(num_layers, d_model, num_heads, dff,
                          input_vocab_size, target_vocab_size, dropout_rate)

# Define the masks. Masking is a key concept in the Transformer:
# it blocks dependencies between specific positions.

def create_masks(inp, tar):
    # Encoder padding mask
    enc_padding_mask = create_padding_mask(inp)

    # Used in the 2nd attention block in the decoder.
    # This padding mask is used to mask the encoder outputs.
    dec_padding_mask = create_padding_mask(inp)

    # Used in the 1st attention block in the decoder.
    # It is used to pad and mask future tokens in the input received by
    # the decoder.
    look_ahead_mask = create_look_ahead_mask(tf.shape(tar)[1])
    dec_target_padding_mask = create_padding_mask(tar)
    combined_mask = tf.maximum(dec_target_padding_mask, look_ahead_mask)

    return enc_padding_mask, combined_mask, dec_padding_mask

# Set up checkpointing

checkpoint_path = "./checkpoints/train"

ckpt = tf.train.Checkpoint(transformer=transformer,
                           optimizer=optimizer)

ckpt_manager = tf.train.CheckpointManager(ckpt, checkpoint_path, max_to_keep=5)

# if a checkpoint exists, restore the latest checkpoint.
if ckpt_manager.latest_checkpoint:
    ckpt.restore(ckpt_manager.latest_checkpoint)
    print('Latest checkpoint restored!!')

EPOCHS = 20

# Define a single training step

@tf.function
def train_step(inp, tar):
    # The decoder input drops the last token; the expected output drops the first.
    tar_inp = tar[:, :-1]
    tar_real = tar[:, 1:]

    enc_padding_mask, combined_mask, dec_padding_mask = create_masks(inp, tar_inp)

    with tf.GradientTape() as tape:
        predictions, _ = transformer(inp, tar_inp,
                                     True,
                                     enc_padding_mask,
                                     combined_mask,
                                     dec_padding_mask)
        loss = loss_function(tar_real, predictions)

    gradients = tape.gradient(loss, transformer.trainable_variables)
    optimizer.apply_gradients(zip(gradients, transformer.trainable_variables))

    train_loss(loss)
    train_accuracy(tar_real, predictions)
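The `tar[:, :-1]` and `tar[:, 1:]` slices in the training step implement teacher forcing: at each position the decoder receives the true previous token and is trained to predict the next one. A tiny illustration in plain Python follows; the token ids are invented for the example.

```python
START, END = 8087, 8088         # hypothetical start/end ids
tar = [START, 12, 7, 431, END]  # one target sentence with both special tokens

tar_inp = tar[:-1]   # what the decoder is fed
tar_real = tar[1:]   # what the decoder should predict

for fed, expected in zip(tar_inp, tar_real):
    print('decoder sees {:>5} -> should predict {:>5}'.format(fed, expected))
```

The two sequences have the same length, so each decoder position has exactly one prediction target, and the final target is the end token.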

# Training: Portuguese in, English out

for epoch in range(EPOCHS):
    start = time.time()

    train_loss.reset_states()
    train_accuracy.reset_states()

    # inp -> portuguese, tar -> english
    for (batch, (inp, tar)) in enumerate(train_dataset):
        train_step(inp, tar)

        if batch % 500 == 0:
            print('Epoch {} Batch {} Loss {:.4f} Accuracy {:.4f}'.format(
                epoch + 1, batch, train_loss.result(), train_accuracy.result()))

    # Save a checkpoint every 5 epochs.
    if (epoch + 1) % 5 == 0:
        ckpt_save_path = ckpt_manager.save()
        print('Saving checkpoint for epoch {} at {}'.format(epoch+1,
                                                            ckpt_save_path))

    print('Epoch {} Loss {:.4f} Accuracy {:.4f}'.format(epoch + 1,
                                                        train_loss.result(),
                                                        train_accuracy.result()))

    print('Time taken for 1 epoch: {} secs\n'.format(time.time() - start))

# Evaluation

def evaluate(inp_sentence):
    start_token = [tokenizer_pt.vocab_size]
    end_token = [tokenizer_pt.vocab_size + 1]

    # inp sentence is portuguese, hence adding the start and end token
    inp_sentence = start_token + tokenizer_pt.encode(inp_sentence) + end_token
    encoder_input = tf.expand_dims(inp_sentence, 0)

    # as the target is english, the first word to the transformer should be the
    # english start token.
    decoder_input = [tokenizer_en.vocab_size]
    output = tf.expand_dims(decoder_input, 0)

    for i in range(MAX_LENGTH):
        enc_padding_mask, combined_mask, dec_padding_mask = create_masks(
            encoder_input, output)

        # predictions.shape == (batch_size, seq_len, vocab_size)
        predictions, attention_weights = transformer(encoder_input,
                                                     output,
                                                     False,
                                                     enc_padding_mask,
                                                     combined_mask,
                                                     dec_padding_mask)

        # select the last word from the seq_len dimension
        predictions = predictions[:, -1:, :]  # (batch_size, 1, vocab_size)

        predicted_id = tf.cast(tf.argmax(predictions, axis=-1), tf.int32)

        # return the result if the predicted_id is equal to the end token
        if tf.equal(predicted_id, tokenizer_en.vocab_size+1):
            return tf.squeeze(output, axis=0), attention_weights

        # concatenate the predicted_id to the output which is given to the decoder
        # as its input.
        output = tf.concat([output, predicted_id], axis=-1)

    return tf.squeeze(output, axis=0), attention_weights
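`evaluate` performs greedy decoding: it repeatedly feeds the tokens generated so far back into the model, takes the highest-scoring next token, and stops at the end token or after `MAX_LENGTH` steps. The control flow can be sketched without a trained model. In the example below, `toy_scores` is a made-up stand-in for the Transformer's output at the last position, and all ids are arbitrary.

```python
def greedy_decode(next_token_scores, start_id, end_id, max_length):
    """Extend the output one argmax token at a time until end_id or max_length."""
    output = [start_id]
    for _ in range(max_length):
        scores = next_token_scores(output)
        predicted_id = max(range(len(scores)), key=scores.__getitem__)
        if predicted_id == end_id:
            break
        output.append(predicted_id)
    return output


def toy_scores(prefix, vocab_size=6):
    """Hypothetical model: always prefers the id after the last token."""
    target = min(prefix[-1] + 1, vocab_size - 1)
    return [1.0 if i == target else 0.0 for i in range(vocab_size)]


print(greedy_decode(toy_scores, start_id=0, end_id=5, max_length=40))  # [0, 1, 2, 3, 4]
print(greedy_decode(toy_scores, start_id=0, end_id=5, max_length=2))   # [0, 1, 2]
```

As in the listing, the end token itself is not appended to the output, and the length cap guarantees termination even if the model never predicts it.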

# Visualize the attention weights

def plot_attention_weights(attention, sentence, result, layer):
    fig = plt.figure(figsize=(16, 8))

    sentence = tokenizer_pt.encode(sentence)

    attention = tf.squeeze(attention[layer], axis=0)

    # One subplot per attention head.
    for head in range(attention.shape[0]):
        ax = fig.add_subplot(2, 4, head+1)

        # plot the attention weights
        ax.matshow(attention[head][:-1, :], cmap='viridis')

        fontdict = {'fontsize': 10}

        ax.set_xticks(range(len(sentence)+2))
        ax.set_yticks(range(len(result)))

        ax.set_ylim(len(result)-1.5, -0.5)

        ax.set_xticklabels(
            ['<start>']+[tokenizer_pt.decode([i]) for i in sentence]+['<end>'],
            fontdict=fontdict, rotation=90)

        ax.set_yticklabels([tokenizer_en.decode([i]) for i in result
                            if i < tokenizer_en.vocab_size],
                           fontdict=fontdict)

        ax.set_xlabel('Head {}'.format(head+1))

    plt.tight_layout()
    plt.show()

# Run translation

def translate(sentence, plot=''):
    result, attention_weights = evaluate(sentence)

    # Drop the start token (and any other id outside the tokenizer's range).
    predicted_sentence = tokenizer_en.decode([i for i in result
                                              if i < tokenizer_en.vocab_size])

    print('Input: {}'.format(sentence))
    print('Predicted translation: {}'.format(predicted_sentence))

    if plot:
        plot_attention_weights(attention_weights, sentence, result, plot)

translate("este é um problema que temos que resolver.")
print("Real translation: this is a problem we have to solve .")

translate("os meus vizinhos ouviram sobre esta ideia.")
print("Real translation: and my neighboring homes heard about this idea .")

translate("vou então muito rapidamente partilhar convosco algumas histórias de algumas coisas mágicas que aconteceram.")
print("Real translation: so i 'll just share with you some stories very quickly of some magical things that have happened .")

translate("este é o primeiro livro que eu fiz.", plot='decoder_layer4_block2')
print("Real translation: this is the first book i've ever done.")

Visualization of the positional encoding:

Final output:

References:

https://www.tensorflow.org/alpha/tutorials/sequences/transformer

http://papers.nips.cc/paper/7181-attention-is-all-you-need
