强化学习三篇论文 避免遗忘等

Learning to Learn without Forgetting by Maximizing Transfer and Minimizing Interference

Matthew Riemer, Ignacio Cases, Robert Ajemian, Miao Liu, Irina Rish, Yuhai Tu, Gerald Tesauro

(Submitted on 29 Oct 2018 (v1), last revised 3 May 2019 (this version, v3))

Lack of performance when it comes to continual learning over non-stationary distributions of data remains a major challenge in scaling neural network learning to more human realistic settings. In this work we propose a new conceptualization of the continual learning problem in terms of a temporally symmetric trade-off between transfer and interference that can be optimized by enforcing gradient alignment across examples. We then propose a new algorithm, Meta-Experience Replay (MER), that directly exploits this view by combining experience replay with optimization based meta-learning. This method learns parameters that make interference based on future gradients less likely and transfer based on future gradients more likely. We conduct experiments across continual lifelong supervised learning benchmarks and non-stationary reinforcement learning environments demonstrating that our approach consistently outperforms recently proposed baselines for continual learning. Our experiments show that the gap between the performance of MER and baseline algorithms grows both as the environment gets more non-stationary and as the fraction of the total experiences stored gets smaller.

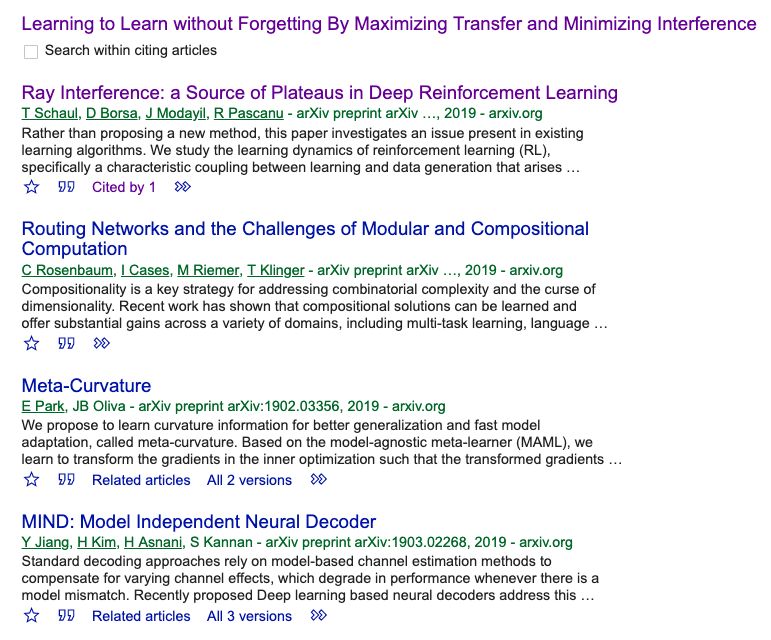

Ray Interference: a Source of Plateaus in Deep Reinforcement Learning

Tom Schaul, Diana Borsa, Joseph Modayil, Razvan Pascanu

(Submitted on 25 Apr 2019)

Rather than proposing a new method, this paper investigates an issue present in existing learning algorithms. We study the learning dynamics of reinforcement learning (RL), specifically a characteristic coupling between learning and data generation that arises because RL agents control their future data distribution. In the presence of function approximation, this coupling can lead to a problematic type of 'ray interference', characterized by learning dynamics that sequentially traverse a number of performance plateaus, effectively constraining the agent to learn one thing at a time even when learning in parallel is better. We establish the conditions under which ray interference occurs, show its relation to saddle points and obtain the exact learning dynamics in a restricted setting. We characterize a number of its properties and discuss possible remedies.

Meta-learners' learning dynamics are unlike learners'

Neil C. Rabinowitz

(Submitted on 3 May 2019)

Meta-learning is a tool that allows us to build sample-efficient learning systems. Here we show that, once meta-trained, LSTM Meta-Learners aren't just faster learners than their sample-inefficient deep learning (DL) and reinforcement learning (RL) brethren, but that they actually pursue fundamentally different learning trajectories. We study their learning dynamics on three sets of structured tasks for which the corresponding learning dynamics of DL and RL systems have been previously described: linear regression (Saxe et al., 2013), nonlinear regression (Rahaman et al., 2018; Xu et al., 2018), and contextual bandits (Schaul et al., 2019). In each case, while sample-inefficient DL and RL Learners uncover the task structure in a staggered manner, meta-trained LSTM Meta-Learners uncover almost all task structure concurrently, congruent with the patterns expected from Bayes-optimal inference algorithms. This has implications for research areas wherever the learning behaviour itself is of interest, such as safety, curriculum design, and human-in-the-loop machine learning.

登录查看更多

相关内容

强化学习(RL)是机器学习的一个领域,与软件代理应如何在环境中采取行动以最大化累积奖励的概念有关。除了监督学习和非监督学习外,强化学习是三种基本的机器学习范式之一。

强化学习与监督学习的不同之处在于,不需要呈现带标签的输入/输出对,也不需要显式纠正次优动作。相反,重点是在探索(未知领域)和利用(当前知识)之间找到平衡。

该环境通常以马尔可夫决策过程(MDP)的形式陈述,因为针对这种情况的许多强化学习算法都使用动态编程技术。经典动态规划方法和强化学习算法之间的主要区别在于,后者不假设MDP的确切数学模型,并且针对无法采用精确方法的大型MDP。

Arxiv

5+阅读 · 2020年4月2日

Arxiv

34+阅读 · 2019年10月24日