逆强化学习-学习人先验的动机

LEARNING A PRIOR OVER INTENT VIA META-INVERSE REINFORCEMENT LEARNING

https://arxiv.org/abs/1805.12573v3

ABSTRACT

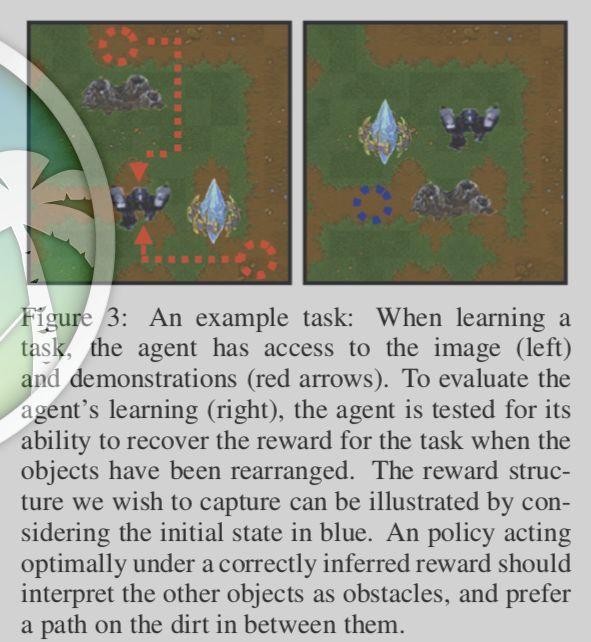

A significant challenge for the practical application of reinforcement learning to real world problems is the need to specify an oracle reward function that correctly defines a task. Inverse reinforcement learning (IRL) seeks to avoid this challenge by instead inferring a reward function from expert behavior. While appealing, it can be impractically expensive to collect datasets of demonstrations that cover the variation common in the real world (e.g. opening any type of door). Thus in practice, IRL must commonly be performed with only a limited set of demon- strations where it can be exceedingly difficult to unambiguously recover a reward function. In this work, we exploit the insight that demonstrations from other tasks can be used to constrain the set of possible reward functions by learning a “prior” that is specifically optimized for the ability to infer expressive reward functions from limited numbers of demonstrations. We demonstrate that our method can efficiently recover rewards from images for novel tasks and provide intuition as to how our approach is analogous to learning a prior.

1

Our approach relies on the key observation that related tasks share common structure that we can leverage when learning new tasks. To illustrate, considering a robot navigating through a home.

While the exact reward function we provide to the robot may differ depending on the task, there is a structure amid the space of useful behaviours, such as navigating to a series of landmarks, and there are certain behaviors we always want to encourage or discourage, such as avoiding obstacles or staying a reasonable distance from humans. This notion agrees with our understanding of why humans can easily infer the intents and goals (i.e., reward functions) of even abstract agents from just one or a few demonstrations Baker et al. (2007), as humans have access to strong priors about how other humans accomplish similar tasks accrued over many years. Similarly, our objective is to discover the common structure among different tasks, and encode the structure in a way that can be used to infer reward functions from a few demonstrations.

3.1

Learning in general energy-based models of this form is common in many applications such as structured prediction. However, in contrast to applications where learning can be supervised by millions of labels (e.g. semantic segmentation), the learning problem in Eq. 3 must typically be performed with a relatively small number of example demonstrations. In this work, we seek to address this issue in IRL by providing a way to integrate information from prior tasks to constrain the optimization in Eq. 3 in the regime of limited demonstrations.

4 LEARNING TO LEARN REWARDS

Our goal in meta-IRL is to learn how to learn reward functions across many tasks such that the model can infer the reward function for a new task using only one or a few expert demonstrations. Intuitively, we can view this problem as aiming to learn a prior over the intentions of human demon- strators, such that when given just one or a few demonstrations of a new task, we can combine the learned prior with the new data to effectively determine the human’s reward function. Such a prior is helpful in inverse reinforcement learning settings, since the space of relevant reward functions is much smaller than the space of all possible rewards definable on the raw observations.

Erin Grant, Chelsea Finn, Sergey Levine, Trevor Darrell, and Thomas Griffiths. Recasting gradient- based meta-learning as hierarchical bayes. International Conference on Learning Representations (ICLR), 2018.

4.2 INTERPRETATION AS LEARNING A PRIOR OVER INTENT

The objective in Eq. 6 optimizes for parameters that enable that reward function to adapt and gener- alize efficiently on a wide range of tasks. Intuitively, constraining the space of reward functions to lie within a few steps of gradient descent can be interpreted as expressing a “locality” prior over reward function parameters. This intuition can be made more concrete with the following analysis.

By viewing IRL as maximum likelihood estimation, we

can take the perspective of Grant et al. (2018) who

showed that for a linear model, fast adaptation via a few

steps of gradient descent in MAML is performing MAP

inference over φ, under a Gaussian prior with the mean

θ and a covariance that depends on the step size, num-

ber of steps and curvature of the loss. This is based on

the connection between early stopping and regularization

previously discussed in Santos (1996), which we refer

the readers to for a more detailed discussion. The in-

terpretation of MAML as imposing a Gaussian prior on

the parameters is exact in the case of a likelihood that is

quadratic in the parameters (such as the log-likelihood of

a Gaussian in terms of its mean). For any non-quadratic

likelihood, this is an approximation in a local neighbor-

hood around θ (i.e. up to convex quadratic approxima-

tion). In the case of very complex parameterizations, such

as deep function approximators, this is a coarse approximation and unlikely to be the mode of a pos- terior. However, we can still frame the effect of early stopping and initialization as serving as a prior in a similar way as prior work (Sjo ̈berg & Ljung, 1995; Duvenaud et al., 2016; Grant et al., 2018).

More importantly, this interpretation hints at future extensions to our approach that could benefit from employing more fully Bayesian approaches to reward and goal inference.

then ref by Few-Shot Goal Inference for Visuomotor Learning and Planning

then ref by visual foresight