无人机视觉挑战赛 | ICCV 2019 Workshop—VisDrone2019

14 different cities spanning thousands of kilometers

采集遍布中国14个城市

272117 video frames/images

272117张视频帧/图像

2.6 million bounding boxes

260万个标注框

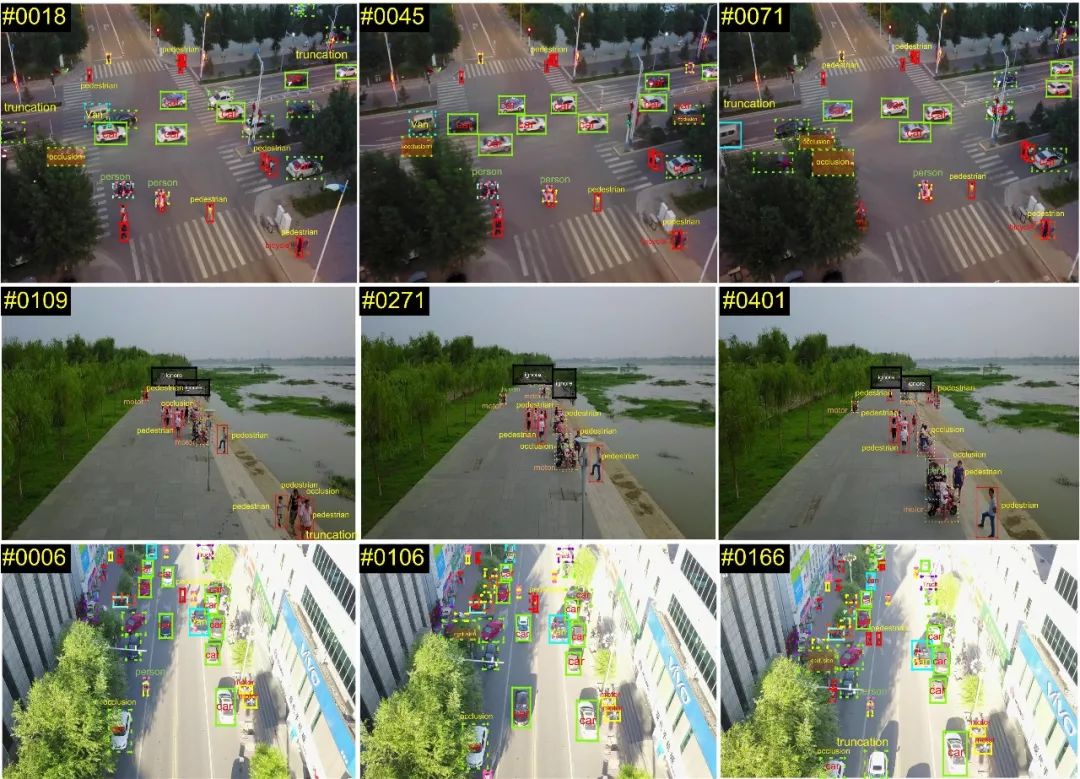

Task 1: object detection in images

The task aims to detect objects of predefined categories (e.g., cars and pedestrians) from individual images taken from drones.

Task 2: object detection in videos

The task is similar to Task 1, except that objects are required to be detected from videos.

Task 3: single-object tracking

The task aims to estimate the state of a target, indicated in the first frame, in the subsequent video frames.

Task 4: multi-object tracking

The task aims to recover the trajectories of objects in each video frame.

Task1: object detection in images

Task2: object detection in videos

Task3: single-object tracking

Task4: multi-object tracking

Website open: April 25, 2019

Data available: April 25, 2019

Submission deadline: TBD

Author notification: TBD

Workshop date: TBD

Camera-ready due: TBD

Pengfei Zhu

Tianjin University

Longyin Wen

JD Digits

Dawei Du

University AT Albany, SUNY

Xiao Bian

GE Global Research

Qinghua Hu

Tianjin University

Haibin Ling

Temple University

Liefeng Bo (JD Digits, USA)

Hamilton Scott Clouse (US Airforce Research)

Liyi Dai (US Army Research Office)

Riad I. Hammound (BAE Systems, USA)

David Jacobs (Univ. Maryland College Park, USA)

SiweiLyu (Univ. At Albany, SUNY, USA)

Stan Z. Li (Institute of Automation, Chinese Academy of Sciences, China)

Fuxin Li (Oregon State Univ.,USA)

Anton Milan (Amazon Research and Development Center, Germany)

Hailin Shi (JD AI Research)

Siyu Tang (Max Planck Institute forIntelligent Systems, Germany)

Technical Committee

Hailin Shi

JD AI Research

Tao Peng

Tianjin University

Jiayu Zheng

Tianjin University

Yue Si

JD AI Research

Xiaolu Li

Tianjin University

Wenya Ma

Tianjin University

Sponsor

Website:http://www.aiskyeye.com

Email:tju.drone.vision@gmail.com

WeChat Official Accounts

点击“阅读原文”进入VisDrone2019官网