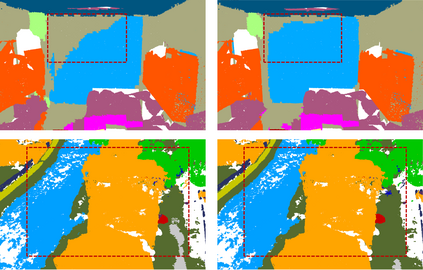

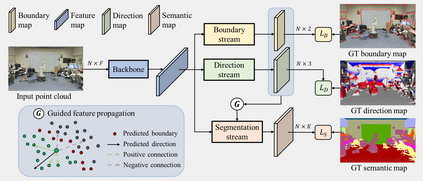

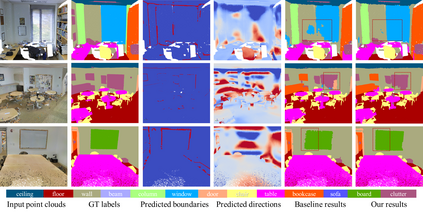

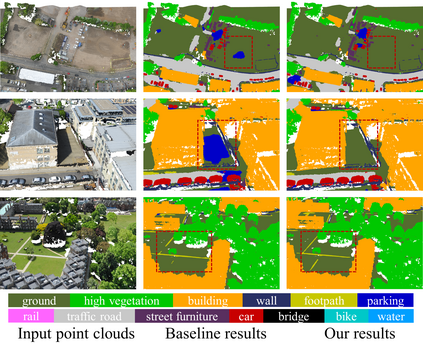

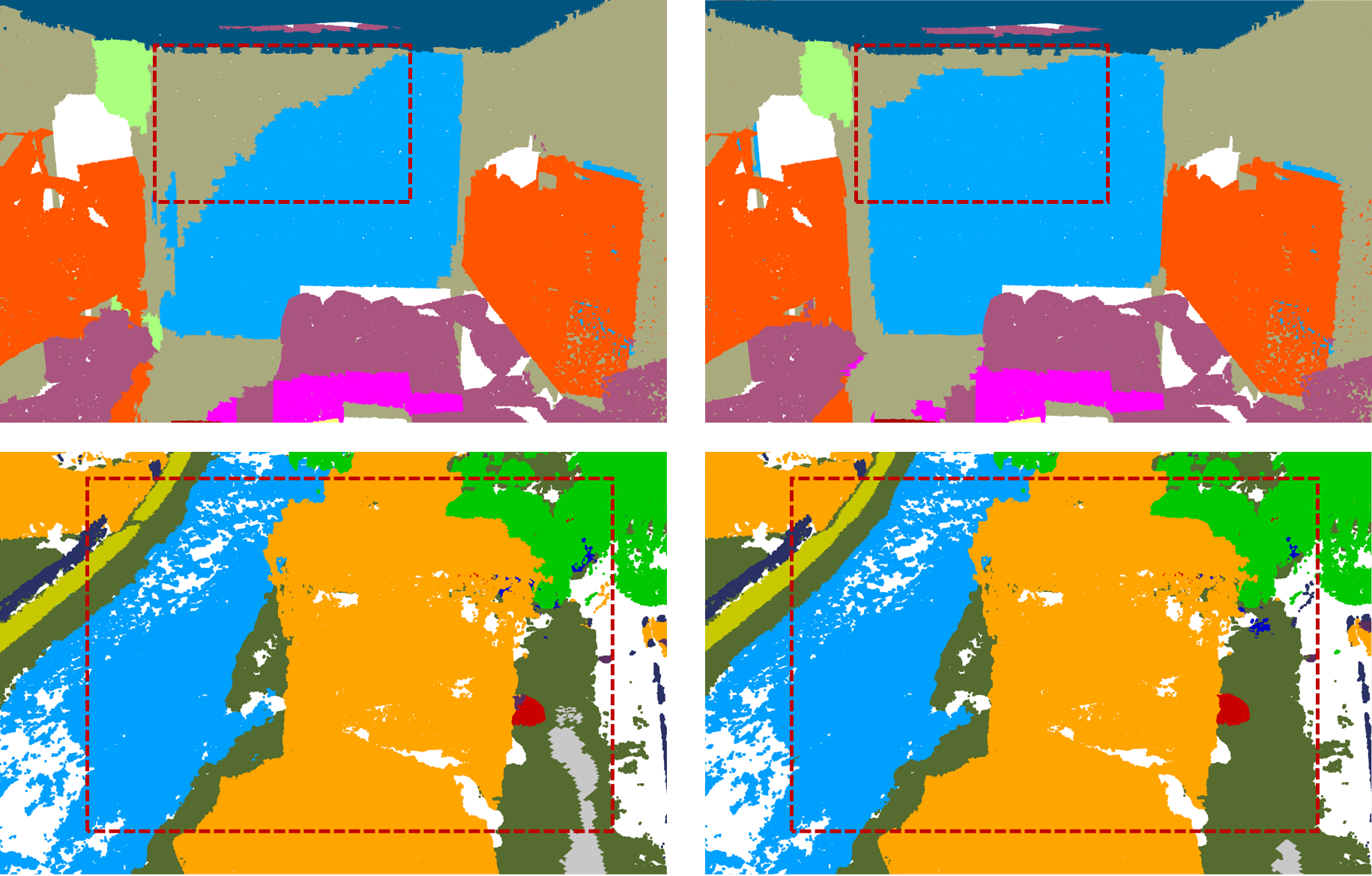

Feedforward fully convolutional neural networks currently dominate semantic segmentation of 3D point clouds. Despite their great success, they suffer from the loss of local information at low-level layers, which makes accurate scene segmentation and precise delineation of object boundaries difficult. Prior works address this issue either by post-processing or by jointly learning object boundaries to implicitly improve the networks' feature encoding. These approaches often require additional modules that are difficult to integrate into the original architecture. To improve segmentation near object boundaries, we propose a boundary-aware feature propagation mechanism. This mechanism is built on a multi-task learning framework that explicitly guides the boundaries to their original locations. With one shared encoder, our network outputs, in three parallel streams, (i) boundary localization, (ii) prediction of directions pointing to the object's interior, and (iii) semantic segmentation. The predicted boundaries and directions are fused to propagate the learned features and refine the segmentation. Extensive experiments on the S3DIS and SensatUrban datasets against various baseline methods show that our approach yields consistent improvements by reducing boundary errors. Our code is available at https://github.com/shenglandu/PushBoundary.
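The abstract states that predicted boundaries and interior-pointing directions are fused to propagate features, but it does not give the exact fusion rule. The following is a minimal sketch under a stated assumption, not the authors' implementation: for every point whose boundary probability exceeds a threshold, it looks at the point's k nearest neighbors, picks the neighbor lying most nearly along the predicted interior direction (highest cosine similarity), and copies that neighbor's feature. The function name `propagate_boundary_features` and the parameters `k`, `threshold`, and `steps` are hypothetical.

```python
import numpy as np


def propagate_boundary_features(points, feats, boundary_prob, directions,
                                k=8, threshold=0.5, steps=1):
    """Hypothetical sketch of boundary-aware feature propagation.

    points:        (N, 3) xyz coordinates
    feats:         (N, C) per-point features from the network
    boundary_prob: (N,)   predicted probability that a point lies on a boundary
    directions:    (N, 3) predicted vectors pointing toward the object's interior
    """
    feats = np.array(feats, dtype=float, copy=True)
    n = points.shape[0]
    k = min(k, n - 1)  # cannot have more neighbors than other points

    # Brute-force pairwise offsets: offsets[i, j] = p_j - p_i (O(N^2), illustration only).
    offsets = points[None, :, :] - points[:, None, :]
    dists = np.linalg.norm(offsets, axis=-1)
    np.fill_diagonal(dists, np.inf)          # a point is not its own neighbor
    knn = np.argsort(dists, axis=1)[:, :k]   # (N, k) nearest-neighbor indices

    # Normalize the predicted directions; zero vectors stay zero.
    norms = np.linalg.norm(directions, axis=1, keepdims=True)
    unit_dirs = directions / np.maximum(norms, 1e-8)
    is_boundary = boundary_prob > threshold

    for _ in range(steps):
        new_feats = feats.copy()
        for i in np.flatnonzero(is_boundary):
            nbr = knn[i]
            off = offsets[i, nbr]                                    # (k, 3)
            off_unit = off / np.maximum(
                np.linalg.norm(off, axis=1, keepdims=True), 1e-8)
            cos = off_unit @ unit_dirs[i]                            # (k,)
            j = nbr[np.argmax(cos)]  # neighbor best aligned with the interior direction
            new_feats[i] = feats[j]  # pull the (assumed purer) interior feature outward
        feats = new_feats
    return feats
```

For example, with points `(0,0,0)`, `(1,0,0)`, `(0,1,0)`, features `[[0], [10], [20]]`, and only the first point marked as a boundary with interior direction `(0,1,0)`, the first point takes the feature `[20]` from the neighbor in that direction. Copying rather than averaging is a deliberate simplification here; a learned or weighted blend would be an equally plausible reading of "fused".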
