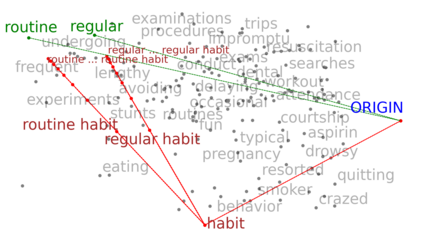

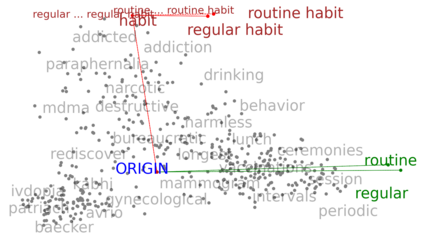

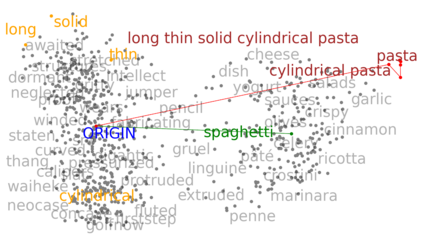

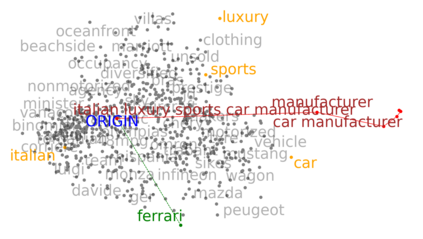

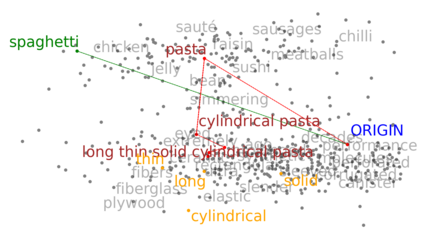

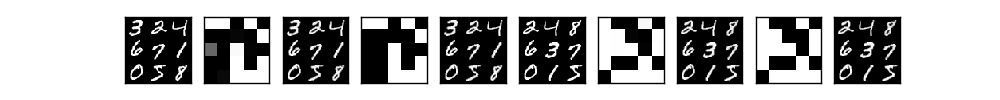

We propose an unsupervised neural model for learning a discrete embedding of words. Unlike existing discrete embeddings, our binary embedding supports vector arithmetic operations similar to those of continuous embeddings. Our embedding represents each word as a set of propositional statements describing a transition rule in the classical/STRIPS planning formalism. This makes the embedding directly compatible with symbolic, state-of-the-art classical planning solvers.
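To make the idea of a word acting as a STRIPS transition rule concrete, here is a minimal sketch of the textbook STRIPS update on a binary state vector: propositions in the delete list are switched off, then propositions in the add list are switched on. The names `WordEffect` and `apply_effect`, and the four-bit vectors, are hypothetical and chosen only for illustration. This shows standard STRIPS semantics, not the learned model described in the abstract.

```python
from typing import NamedTuple, Tuple

Bits = Tuple[int, ...]  # one 0/1 entry per proposition


class WordEffect(NamedTuple):
    """Hypothetical container: a word viewed as a STRIPS-style effect."""
    add: Bits     # propositions the word turns on
    delete: Bits  # propositions the word turns off


def apply_effect(state: Bits, effect: WordEffect) -> Bits:
    """Standard STRIPS progression: s' = (s AND NOT delete) OR add."""
    return tuple(
        (s & (1 - d)) | a
        for s, a, d in zip(state, effect.add, effect.delete)
    )


# Illustrative values only; they do not come from the paper.
state = (1, 0, 0, 1)
effect = WordEffect(add=(0, 1, 0, 0), delete=(0, 0, 0, 1))
next_state = apply_effect(state, effect)
print(next_state)  # (1, 1, 0, 0)
```

Because the update uses only bitwise operations on propositions, a rule of this shape can in principle be written out as a planning action and handed to a symbolic solver, which is the compatibility the abstract refers to.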

