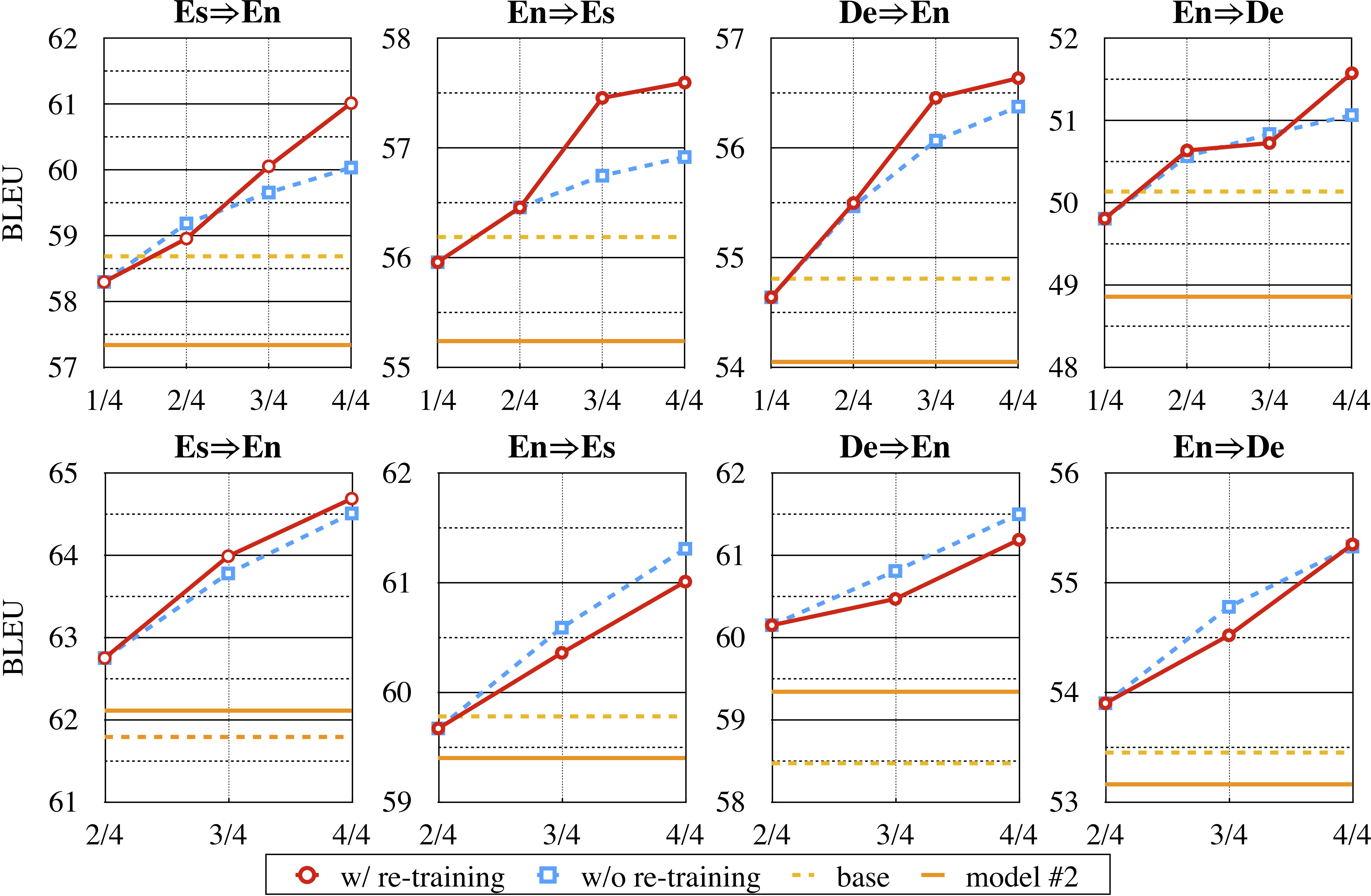

Prior work has shown that translation memory (TM) can boost the performance of neural machine translation (NMT). In contrast to existing work, which uses a bilingual corpus as TM and retrieves memory by source-side similarity search, we propose a new framework that uses monolingual memory and performs learnable memory retrieval in a cross-lingual manner. Our framework has two distinct advantages. First, the cross-lingual memory retriever allows abundant monolingual data to serve as TM. Second, the memory retriever and the NMT model can be jointly optimized for the ultimate translation goal. Experiments show that the proposed method obtains substantial improvements. Remarkably, it even outperforms strong TM-augmented NMT baselines that use bilingual TM. Owing to its ability to leverage monolingual data, our model is also effective in low-resource and domain adaptation scenarios.
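
To make the idea of cross-lingual memory retrieval more concrete, the following is a minimal sketch, not the authors' implementation. The abstract does not specify the scoring function, so the sketch assumes a dual-encoder setup in which a source sentence and each target-language memory sentence are mapped to vectors in a shared space and ranked by inner product. The toy vectors stand in for the outputs of hypothetical source and target encoders, and the names `retrieve` and `softmax` are illustrative only.

```python
import math


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def retrieve(query_vec, memory_vecs, k=2):
    """Return (index, score) pairs for the k memory vectors with the
    highest inner product with the query (assumed relevance function)."""
    scored = sorted(
        ((dot(query_vec, m), i) for i, m in enumerate(memory_vecs)),
        key=lambda t: (-t[0], t[1]),  # highest score first, ties by index
    )
    return [(i, s) for s, i in scored[:k]]


def softmax(scores):
    """Normalize raw relevance scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]


# Toy embeddings (assumption): one source-language query and three
# target-language memory sentences, already encoded into a shared space.
source_vec = [1.0, 0.0, 1.0]
memory_vecs = [
    [0.9, 0.1, 0.8],
    [0.0, 1.0, 0.0],
    [0.5, 0.0, 0.5],
]

top = retrieve(source_vec, memory_vecs, k=2)
weights = softmax([score for _, score in top])
```

Because the memory side is encoded independently of any source sentence, monolingual target-language text can be indexed directly. If the relevance weights are fed into the translation model, the translation loss can in principle update both encoders, which is one plausible reading of "jointly optimized"; how the paper actually wires this is not stated in the abstract.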
