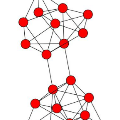

Network embedding aims to learn latent, low-dimensional vector representations of network nodes that are effective in supporting various network analytic tasks. While prior work on network embedding focuses primarily on preserving network topological structure to learn node representations, recently proposed attributed network embedding algorithms attempt to integrate rich node content information with network topological structure to enhance the quality of network embedding. In reality, networks often have sparse content, incomplete node attributes, and a discrepancy between the node attribute feature space and the network structure space, all of which severely degrade the performance of existing methods. In this paper, we propose a unified framework for attributed network embedding, attri2vec, that learns node embeddings by discovering a latent node attribute subspace via a network-structure-guided transformation performed on the original attribute space. The resulting latent subspace can respect the network structure more consistently, which supports learning high-quality node representations. We formulate an optimization problem that is solved by an efficient stochastic gradient descent algorithm whose time complexity is linear in the number of nodes. We investigate a series of linear and non-linear transformations performed on node attributes and empirically validate their effectiveness on various types of networks. Another advantage of attri2vec is its ability to solve out-of-sample problems: embeddings of newly arriving nodes can be inferred from their node attributes through the learned mapping function. Experiments on various types of networks confirm that attri2vec is superior to state-of-the-art baselines for node classification, node clustering, and out-of-sample link prediction. The source code of this paper is available at https://github.com/daokunzhang/attri2vec.
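The core idea (embed a node by transforming its attribute vector, train that transformation on network structure, then reuse it for unseen nodes) can be illustrated with a small, dependency-free sketch. The abstract does not state the exact objective, so this sketch *assumes* a DeepWalk-style skip-gram objective with negative sampling over random-walk contexts, and uses the mapping y = f(xW) with f either the identity (linear) or an element-wise sigmoid (non-linear). The function names `embed`, `walk_pairs`, and `train`, all hyperparameters, and the toy graph are hypothetical; this is not the authors' implementation, which is in the linked repository.

```python
import math
import random

# Hypothetical sketch of an attri2vec-style model (not the authors' code):
# node embedding y_i = f(x_i W), trained so that y_i predicts random-walk
# context nodes (assumed: skip-gram objective with negative sampling).


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def embed(x, W, nonlinear=True):
    # Map an attribute vector x (length m) into the latent subspace: h = x W,
    # then apply an element-wise sigmoid if nonlinear, else keep h (linear).
    h = [sum(x[k] * W[k][j] for k in range(len(x))) for j in range(len(W[0]))]
    return [sigmoid(v) for v in h] if nonlinear else h


def walk_pairs(adj, walk_len, window, rng):
    # One truncated random walk per node; collect (center, context) pairs
    # of nodes that co-occur within `window` steps on a walk.
    pairs = []
    for start in adj:
        walk = [start]
        while len(walk) < walk_len and adj[walk[-1]]:
            walk.append(rng.choice(adj[walk[-1]]))
        for i, u in enumerate(walk):
            for j in range(max(0, i - window), min(len(walk), i + window + 1)):
                if j != i:
                    pairs.append((u, walk[j]))
    return pairs


def train(X, adj, d=4, epochs=20, lr=0.05, neg=2, nonlinear=True, seed=0):
    # Plain SGD; work per epoch grows linearly with the number of nodes
    # for fixed walk length, window, negatives, d and attribute count m.
    rng = random.Random(seed)
    n, m = len(X), len(X[0])
    W = [[rng.uniform(-0.1, 0.1) for _ in range(d)] for _ in range(m)]
    C = [[0.0] * d for _ in range(n)]  # context vectors, one per node
    nodes = list(adj)
    for _ in range(epochs):
        for u, v in walk_pairs(adj, walk_len=10, window=2, rng=rng):
            y = embed(X[u], W, nonlinear)
            grad_y = [0.0] * d
            targets = [(v, 1.0)] + [(rng.choice(nodes), 0.0) for _ in range(neg)]
            for c, label in targets:
                # Derivative of log sigmoid(+/- y.c) with respect to the score y.c.
                g = label - sigmoid(sum(y[j] * C[c][j] for j in range(d)))
                for j in range(d):
                    grad_y[j] += g * C[c][j]  # uses C before its update
                    C[c][j] += lr * g * y[j]
            for j in range(d):
                # Chain rule through f: dy/dh = y(1 - y) for sigmoid, 1 if linear.
                gh = grad_y[j] * (y[j] * (1.0 - y[j]) if nonlinear else 1.0)
                for k in range(m):
                    W[k][j] += lr * gh * X[u][k]  # gradient ascent on W
    return W, C


# Toy graph: two triangles joined by the edge 2-3; 4 binary attributes per node.
adj = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3], 3: [2, 4, 5], 4: [3, 5], 5: [3, 4]}
X = [[1, 1, 0, 0], [1, 0, 0, 0], [0, 1, 0, 0],
     [0, 0, 1, 0], [0, 0, 1, 1], [0, 0, 0, 1]]
W, C = train(X, adj)

# Out-of-sample: a new node is embedded from its attributes alone, no retraining.
z_new = embed([1, 1, 0, 0], W)
print([round(v, 3) for v in z_new])
```

Because the embedding depends only on the learned W and a node's attributes, a newly arriving node needs no graph edges or retraining to be embedded. Passing `nonlinear=False` gives the linear variant; a deeper non-linear mapping would replace `embed` and the matching chain-rule step in `train`.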
