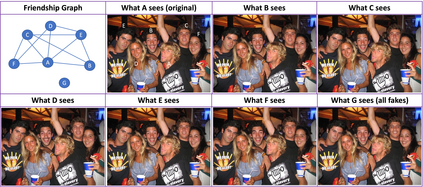

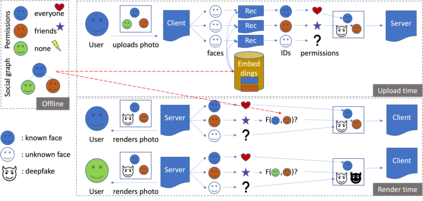

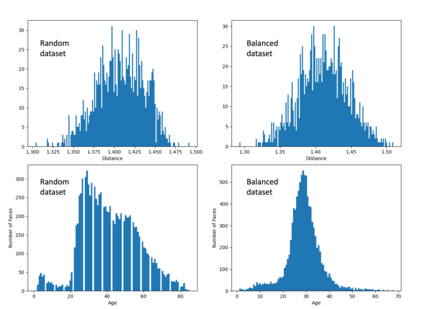

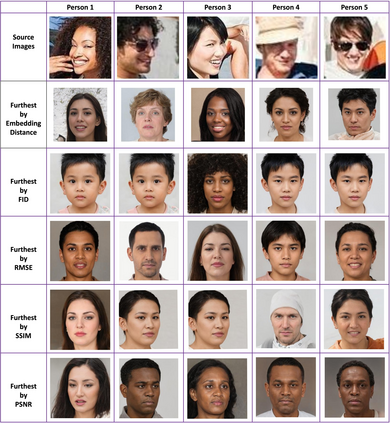

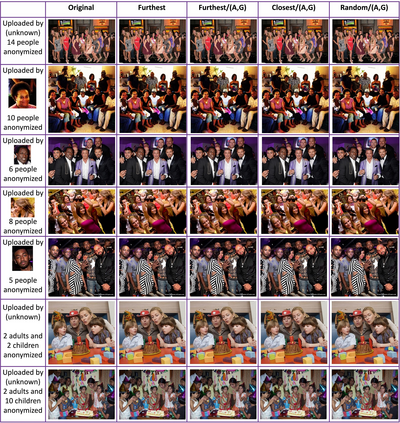

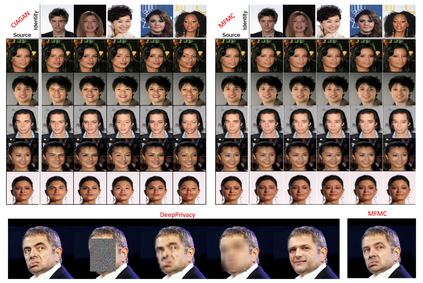

Recently, the productization of face recognition and identification algorithms has become the most controversial topic in ethical AI. As new policies around digital identities take shape, we introduce three face access models for a hypothetical social network in which users have the power to appear only in photos they approve. Our approach surpasses current tagging systems and replaces unapproved faces with quantitatively dissimilar deepfakes. In addition, we propose new metrics specific to this task, in which the deepfake is generated at random with a guaranteed dissimilarity. We explain the access models in terms of the strictness of their data flow, and discuss the impact of each model on privacy, usability, and performance. We evaluate our system on the Facial Descriptor Dataset as the real dataset, and on two synthetic datasets with random and equal class distributions. When seven SOTA face recognizers are run on our results, MFMC reduces their average accuracy by 61%. Lastly, we extensively analyze similarity metrics, deepfake generators, and datasets in structural, visual, and generative spaces, supporting the design choices and verifying the quality.
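To make the idea of a randomly chosen replacement with a guaranteed dissimilarity more concrete, the following is a minimal sketch and not the method used in this work. It assumes that faces are represented as plain embedding vectors, that dissimilarity is measured by cosine distance, and that a pool of synthetic candidate faces is available. The names `cosine_distance`, `pick_dissimilar_face`, `faces_to_show`, and the threshold `min_distance` are all hypothetical.

```python
import math
import random


def cosine_distance(a, b):
    """Cosine distance between two non-zero vectors (0 = same direction, 2 = opposite)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def pick_dissimilar_face(original, candidates, min_distance, rng=random):
    """Pick a random candidate whose distance to `original` is at least `min_distance`.

    Assumption: `candidates` is a pool of synthetic face embeddings.
    """
    eligible = [c for c in candidates if cosine_distance(original, c) >= min_distance]
    if not eligible:
        raise ValueError("no candidate meets the dissimilarity threshold")
    return rng.choice(eligible)


def faces_to_show(faces_in_photo, approved_ids, candidates, min_distance, rng=random):
    """Keep approved faces as they are and swap every other face for a dissimilar candidate.

    `faces_in_photo` maps a (hypothetical) person id to that person's embedding.
    """
    shown = {}
    for person_id, embedding in faces_in_photo.items():
        if person_id in approved_ids:
            shown[person_id] = embedding
        else:
            shown[person_id] = pick_dissimilar_face(embedding, candidates, min_distance, rng)
    return shown
```

In this sketch the randomness comes from `rng.choice`, while the guarantee comes from filtering the pool before sampling, so a replacement can never fall below the chosen threshold. A real system would operate on images and a face generator rather than on raw vectors.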
