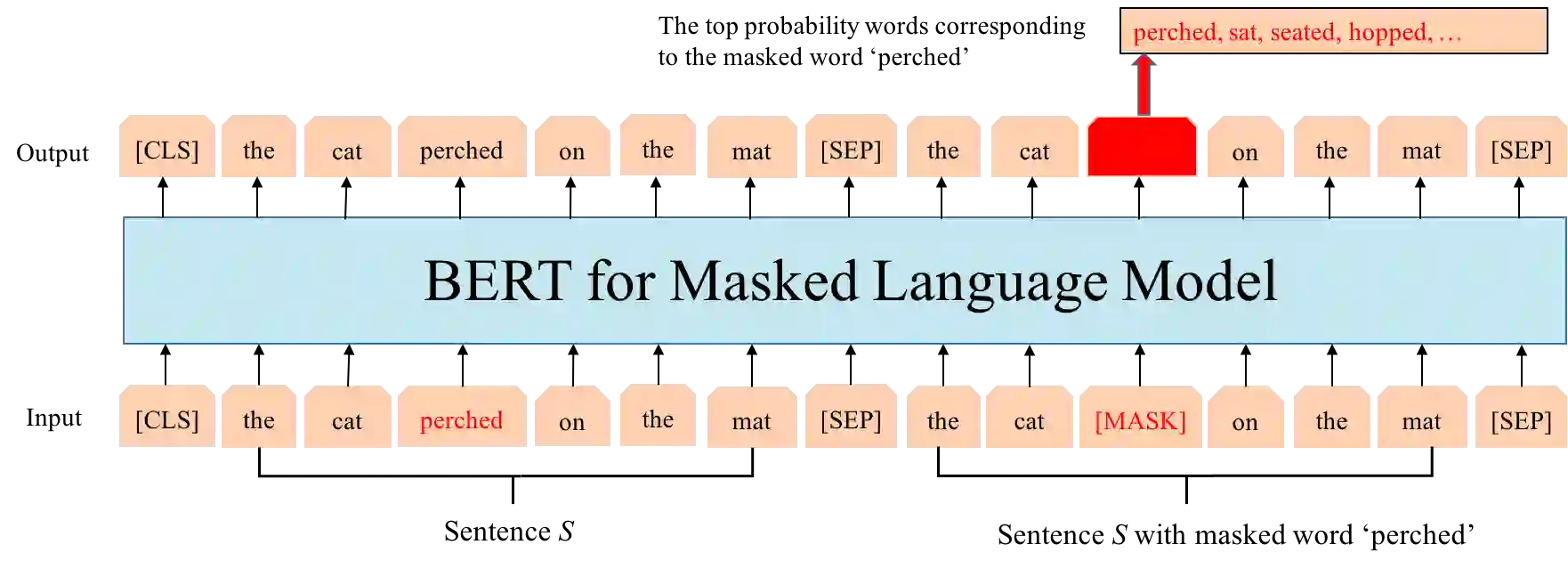

Lexical simplification (LS) aims to replace complex words in a given sentence with their simpler alternatives of equivalent meaning. Recently unsupervised lexical simplification approaches only rely on the complex word itself regardless of the given sentence to generate candidate substitutions, which will inevitably produce a large number of spurious candidates. We present a simple BERT-based LS approach that makes use of the pre-trained unsupervised deep bidirectional representations BERT. We feed the given sentence masked the complex word into the masking language model of BERT to generate candidate substitutions. By considering the whole sentence, the generated simpler alternatives are easier to hold cohesion and coherence of a sentence. Experimental results show that our approach obtains obvious improvement on standard LS benchmark.

翻译:术语简化(LS)的目的是用其含义相当的简单替代词取代某一句中的复杂词句。最近,未经监督的词汇简化方法仅仅依赖复杂的词句本身,而不管给定的句子如何产生替代物,这不可避免地会产生大量虚假的候选人。我们提出了一个简单的基于BERT的LS方法,利用预先训练的、未经监督的深度双向代表物BERT。我们将给定的句子掩盖了复杂的词句子,将其注入BERT的隐蔽语言模式,以产生候选人替代物。考虑到整个句子,生成的更简单的替代物更容易保持句子的凝聚力和一致性。实验结果表明,我们的方法在标准的LS基准上取得了明显的改进。